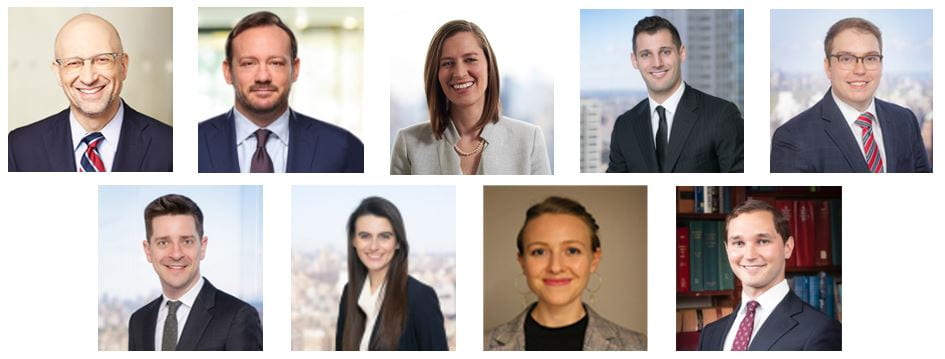

by Eric Dinallo, Avi Gesser, Erez Liebermann, Marshal Bozzo, Matt Kelly, Johanna Skrzypczyk, Corey Goldstein, Samuel J. Allaman, Michelle Huang, and Sharon Shaji

Top (from left to right): Eric Dinallo, Avi Gesser, Erez Liebermann, Marshal Bozzo, and Matt Kelly

Bottom (from left to right): Johanna Skrzypczyk, Corey Goldstein, Samuel J. Allaman, Michelle Huang, and Sharon Shaji (Photos courtesy of Debevoise & Plimpton LLP)

On January 17, 2024, the New York State Department of Financial Services (the “NYDFS”) issued a Proposed Insurance Circular Letter regarding the Use of Artificial Intelligence Systems and External Consumer Data and Information Sources in Insurance Underwriting and Pricing (the “Proposed Circular” or “PCL”). The Proposed Circular is the latest regulatory development in artificial intelligence (“AI”) for insurers, following the final adoption of Colorado’s AI Governance and Risk Management Framework Regulation (“CO Governance Regulation”) and the proposed Colorado AI Quantitative Testing Regulation (the “CO Proposed Testing Regulation”), discussed here, and the National Association of Insurance Commissioners’ (“NAIC”) model bulletin on the “Use of Artificial Intelligence Systems by Insurers” (the “NAIC Model Bulletin”), discussed here. In the same way that NYDFS’s Part 500 Cybersecurity Regulation influenced standards for cybersecurity beyond New York State and beyond the financial sector, it is possible that the Proposed Circular will have a significant impact on the AI regulatory landscape.

The PCL builds on the NYDFS’s 2019 Insurance Circular Letter No. 1 (the “2019 Letter”) and includes some clarifying points on the 2019 Letter’s disclosure and transparency obligations. The 2019 Letter was limited to the use of external consumer data and information sources (“ECDIS”) for underwriting life insurance and focused on the risks of unlawful discrimination that could result from the use of ECDIS and on the need for consumer transparency. The Proposed Circular incorporates the general obligations from the 2019 Letter with more detailed requirements, expands the scope beyond life insurance, and adds significant governance and documentation requirements.