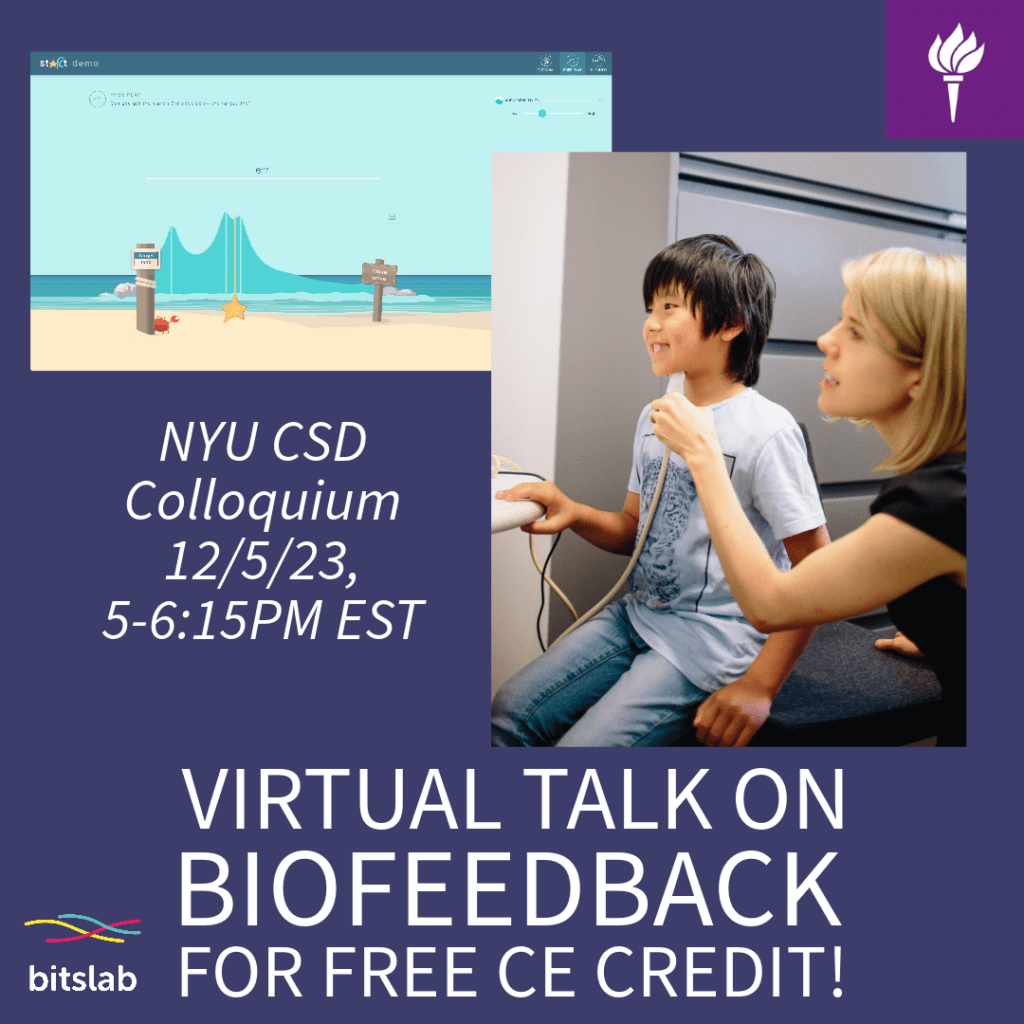

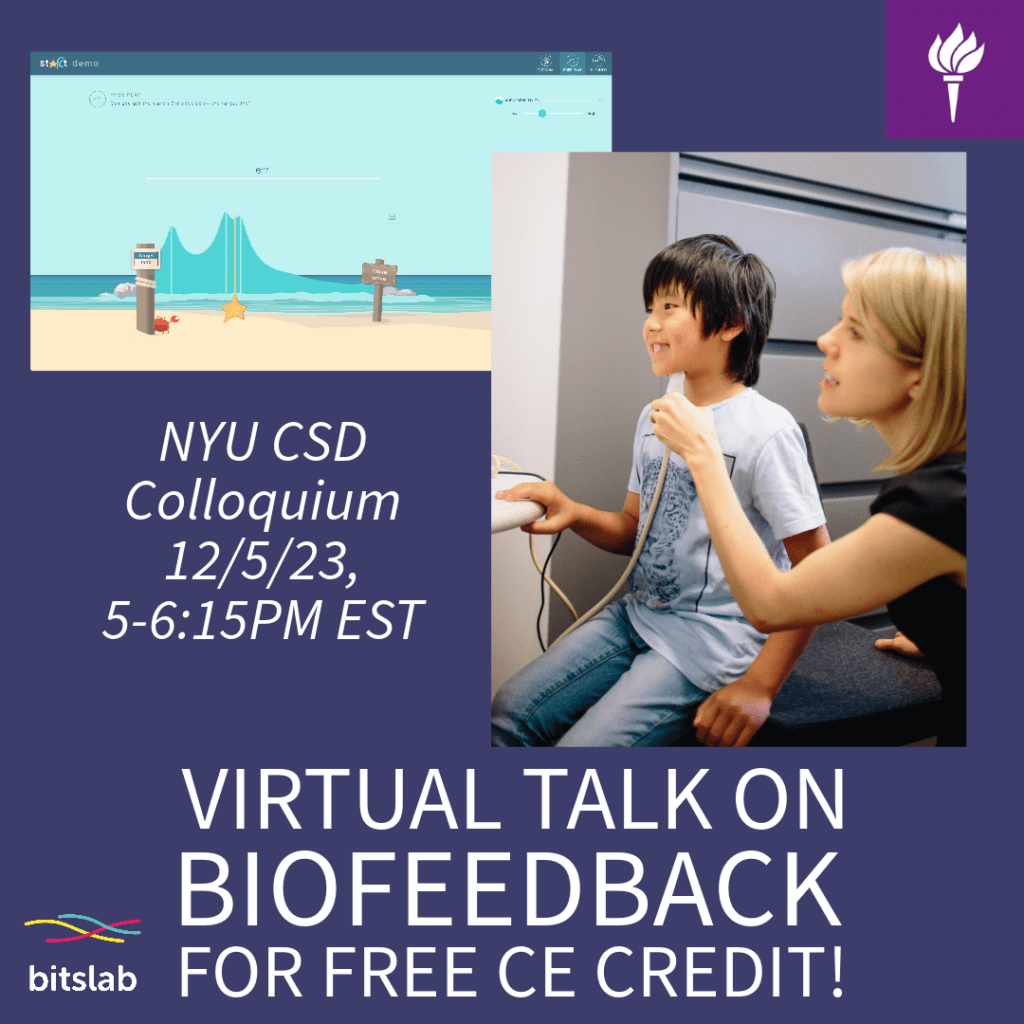

On 12/5/23 I will be presenting in NYU CSD’s colloquium series in a special session eligible for CE credit. Here are the details!

Technology-enhanced treatment for speech sound disorder: Who gets access, who responds, and why?

This course discusses the use of biofeedback training for speech in older children with residual speech sound disorder. It reviews evidence on the efficacy of ultrasound and visual-acoustic biofeedback and discusses how biofeedback may have its effect, such as by replacing faulty sensory input. The course also covers barriers to clinical uptake and steps to expand access to speech technologies.

Presented by Dr. Tara McAllister

December 5, 2023 | 239 Greene St, 8th Floor

5:00-6:15 PM | Presentation

6:15-6:45 PM | Reception

Please register here for Zoom attendance.

Please register here if you wish to earn ASHA CEU credit.

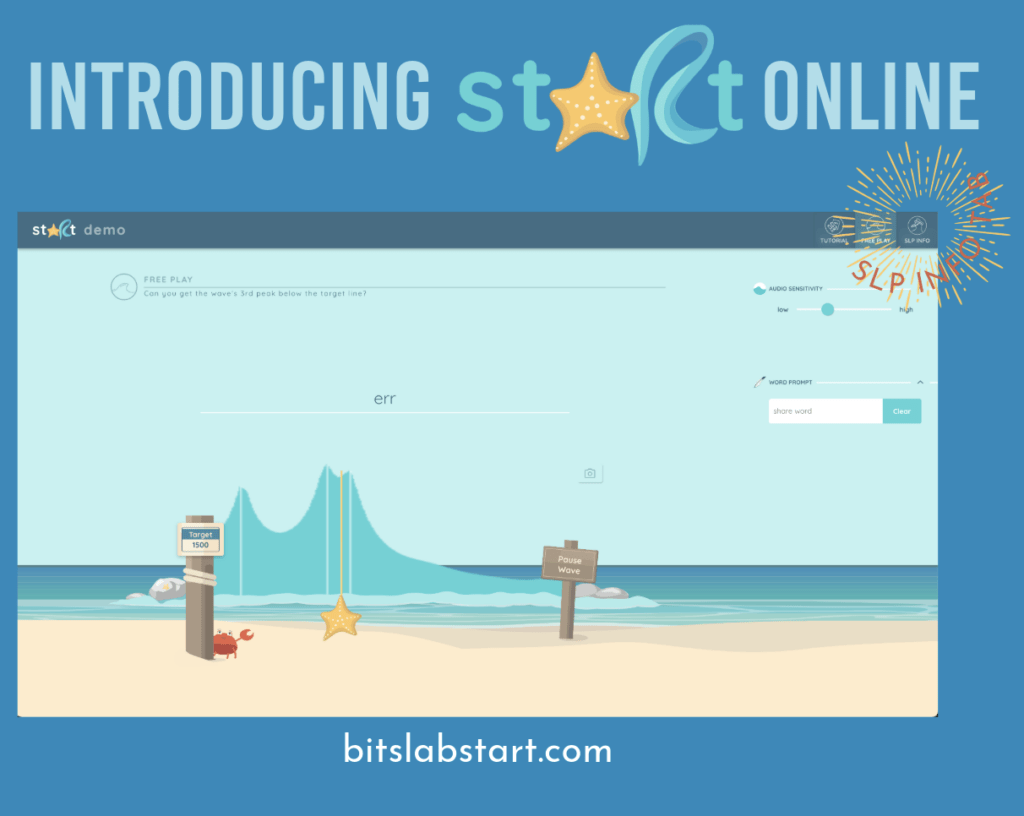

Financial Disclosure: This research was partly funded by the National Institutes of Health. This talk will discuss the staRt app for visual-acoustic biofeedback. Dr. McAllister is a member of the board of Sonority Labs, LLC, a for-profit entity that was created to explore the possibility of commercializing the staRt software. The staRt app is currently distributed as a free download, but in the future it may be sold or licensed in a for-profit capacity. Other products for biofeedback delivery will also be discussed in this course.

Nonfinancial Disclosure: No relevant nonfinancial relationship exists.

Time-ordered Agenda:

Define biofeedback and its use for residual speech sound disorder (10 min)

Review evidence regarding the efficacy of biofeedback (10 min)

Discuss models of how biofeedback works in relation to models of sensory function (15 min)

Discuss the possibility of personalizing biofeedback for a learner’s sensory profile (10 min)

Discuss barriers to clinical uptake of biofeedback and steps to expand access (10 min)

Discuss other populations who might benefit from biofeedback (10 min)

Questions, discussion, and self-assessment (10 min)

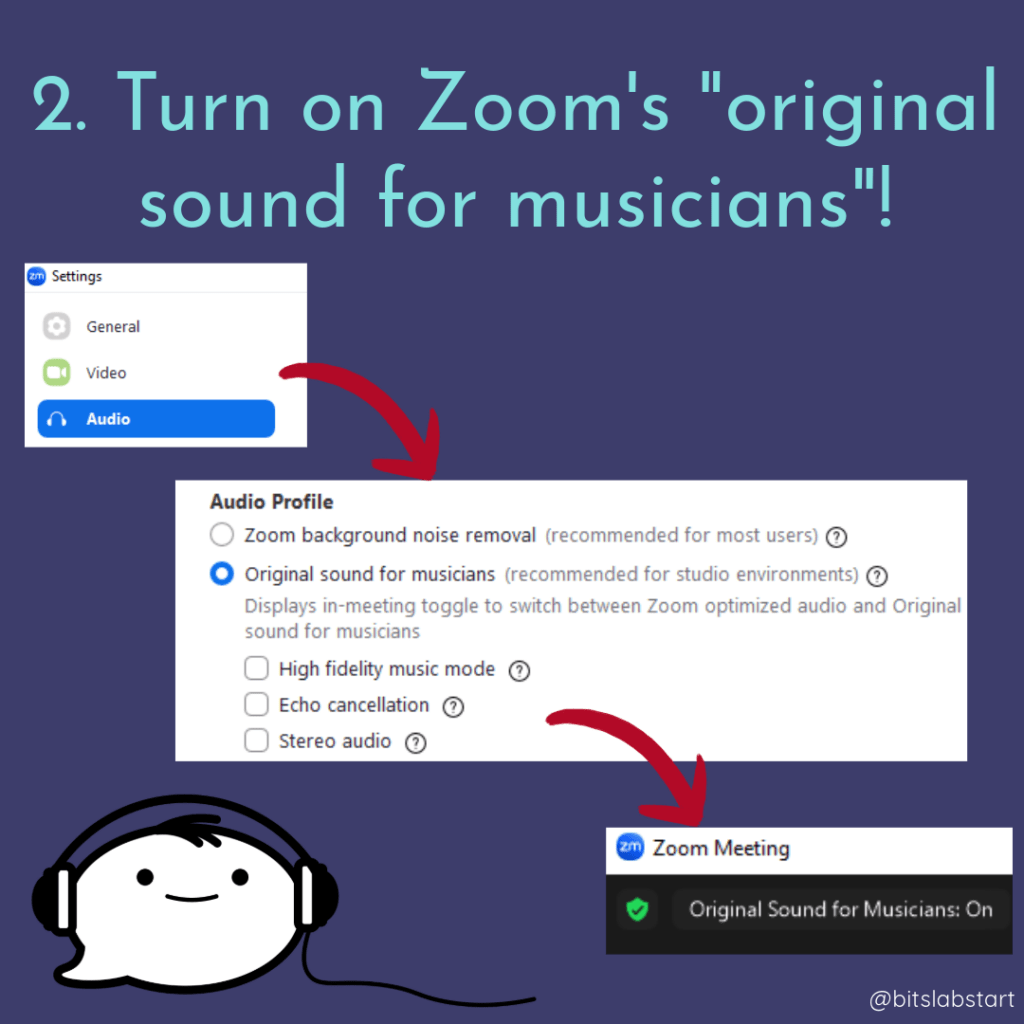
