A. Title

侬说啥 (What did you say?)

B. Project Statement of Purpose

In this project, my partner and I aim to educate young children about the dying languages and dialects in different provinces of China. Unlike most Western countries, China has many languages and language varieties spoken throughout the country. Even though Mandarin is the most common, some cities, such as Shanghai, have their own dialect, in this case Shanghainese (上海话) (Asia Society). To help preserve Shanghainese and other regional dialects, my partner and I have devised a plan to teach younger children about the many dialects spoken throughout China. Many articles report that languages other than Mandarin are being phased out in China because the government requires Mandarin in official settings, such as the gaokao exam and government forms (The Atlantic). It is important to maintain China's vast array of cultures through spoken language. We will appeal to the younger generation through a puzzle game that displays a map of China. When a child takes out one of the provinces (a single puzzle piece), the screen and audio will begin playing a clip of a native speaker from that region. Each province for which we can find a speaker will feature a native speaker from NYU Shanghai introducing themselves. This way, children can listen, practice, and, most importantly, become aware of the diversity of languages in China. Hopefully, through this project, my partner and I can inspire classmates, teachers, mentors, and children to keep dialects alive in China.

C. Project Plan

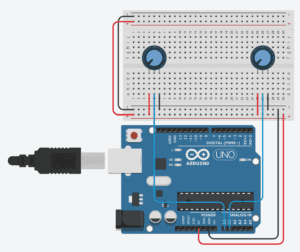

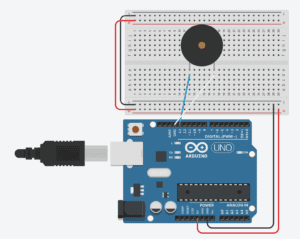

The main goal of this project is to preserve the cultural traditions of the different languages and dialects across the provinces of China. To appeal to younger kids through education, we've created a puzzle game that works together with a screen, a joystick, and audio speakers. The first component is a map of China, split into puzzle pieces along provincial boundaries. Each province piece will be wired to the screen so that when it is taken out of the puzzle, a video plays of a native speaker from that province introducing themselves. The joystick will also be linked to the screen and will control a cursor that moves over a virtual map of China. As the cursor passes over a province, the voice of that dialect begins to whisper, creating intrigue for the participant. My partner and I plan to film and record students from NYU Shanghai who come from different provinces, such as Guangdong, Shanghai, Beijing, Hebei, Sichuan, and more. We realize that not every province is represented at our school, so we will try to gather as many as possible. However, finding students who speak dialects other than Mandarin has been difficult because of the government-mandated policy of Mandarin-only education in recent years. We have designed the base of the puzzle and plan to laser cut it, and we will 3D print the model of China and its pieces to fit within the base. We also need to connect the sensor wires to the puzzle pieces once they are fully printed. After that, we will link the joystick to the on-screen graphics, using the Arduino and Processing skills we've learned to create the virtual map.
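The trigger logic described above could be sketched roughly as follows. This is only an illustration in plain Python, not our actual Arduino or Processing code. The class name `PuzzleState`, the event names `"whisper"` and `"play_video"`, and the way sensor readings are passed in as a dictionary are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional

# Example provinces taken from the plan; the real list depends on who we can record.
PROVINCES = ["Guangdong", "Shanghai", "Beijing", "Hebei", "Sichuan"]


@dataclass
class PuzzleState:
    """Hypothetical tracker for removed pieces and the hovered province."""
    removed: set = field(default_factory=set)
    hovered: Optional[str] = None

    def update(self, hovered, piece_present):
        """Return a list of (action, province) events for one reading.

        hovered: province under the joystick cursor, or None.
        piece_present: dict mapping province -> True if its piece is in the board.
        """
        events = []
        for province, present in piece_present.items():
            if not present and province not in self.removed:
                # Piece was just taken out: play the full introduction video.
                self.removed.add(province)
                events.append(("play_video", province))
            elif present and province in self.removed:
                # Piece was put back, so it can trigger again later.
                self.removed.discard(province)
        if hovered != self.hovered:
            # Cursor moved onto a different province (or off the map).
            self.hovered = hovered
            if hovered is not None and hovered not in self.removed:
                # Quiet teaser audio to invite the child to remove the piece.
                events.append(("whisper", hovered))
        return events
```

Each call to `update` takes one snapshot of the joystick position and the piece sensors and returns only the new events, so a whisper or video starts once rather than restarting on every reading.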

D. Context and Significance

In my preparatory research for this project, I came across a similar project named Phonemica, which also aims to preserve Chinese dialects by physically traveling to different places and recording the people there. I found it very inspiring that there are other people who are also passionate about preserving the local culture of China. Our project differs from Phonemica, though, in that we are in only one location and are recording people at our school. We are also trying to appeal to a younger generation by making it a game rather than a website. Another project that inspired me was the musical bikes, just a block away from our school. The premise of that project was interactivity through music and exercise: only when all four people were riding the bikes would the whole song play. I think that our puzzle and joystick feature creates a similar incentive, because at first the voice only whispers when the child moves the cursor over a province with the joystick, and the full phrase plays only once the child takes the puzzle piece out. The joystick creates an incentive to take out a puzzle piece, just as the songs created an incentive for all four people to keep pedaling. I think our project is unique in that we are enlisting the help of the whole school to guide our educational process. It's not only my partner and me working to preserve Chinese dialects, but also people from those regions. To me, the project aligns with my definition of interaction because it involves a child playing with a puzzle, which produces a result on the screen and helps that child learn. It's a conversation carried out through the physicality of the puzzle and the encouragement to repeat the phrase after the video is finished. As Chris Crawford explains in "What Exactly Is Interactivity?", interactivity has to do with conversations moving back and forth between the user and the machine. It happens not just once, but multiple times in a fluid back-and-forth process (Crawford).

I agree with Crawford and think that my project is a conversational learning process geared towards children. Hopefully, this project can inspire others in the future to build similar models, such as Phonemica's, to help maintain cultural identities in China. Educational tools such as speech repetition games, apps, and computer games could be used in schools around China, with versions specific to each province. Seeing as the future is digital, I hope Chinese dialects can survive the new wave of technology.

Works Cited

Asia Society. https://asiasociety.org/china-learning-initiatives/many-dialects-china

Phonemica. http://www.phonemica.net/

The Atlantic. https://www.theatlantic.com/china/archive/2013/06/on-saving-chinas-dying-languages/276971/

Crawford, Chris. "What Exactly Is Interactivity?" (in-class reading)