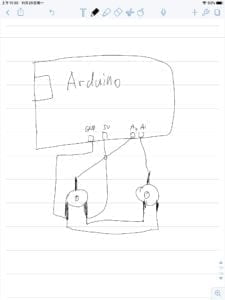

In this recitation, I planned to use two potentiometers to control an image in Processing. The first potentiometer controls the size of the image, and the second controls how much the image is blurred.

At first, after reading the PowerPoint in the folder, I used the `resize()` function to change the size of the image; its syntax is `photo.resize(w, h)`. However, something went wrong: after I resized the picture several times, it became slightly blurry. This is because `resize()` changes the image itself rather than just how it is displayed, so every call permanently alters the picture's pixels. Therefore, I switched to a function that does not change the image and only draws it at the requested size:

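```processing
image(photo, 0, 0, a, a);  // draw photo at (0, 0), scaled to a by a pixels; photo itself is unchanged
```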
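Why repeated resizing degrades the picture can be illustrated with a small Java sketch. It is only an illustration under stated assumptions: one row of pixel values stands in for the image, and the hypothetical `resample()` helper uses simple nearest-neighbour sampling, which is not necessarily what Processing's `resize()` does internally. Shrinking and then enlarging the stored data loses detail for good, while scaling from the untouched original every time (which is what drawing with `image(photo, x, y, w, h)` amounts to) keeps every value.

```java
// Illustration only: a 1D row of brightness values stands in for an image,
// and nearest-neighbour sampling is an assumed stand-in for Processing's resize().
class ResizeDemo {
    // Returns a NEW array of length newLen sampled from src (nearest neighbour).
    static int[] resample(int[] src, int newLen) {
        int[] dst = new int[newLen];
        for (int i = 0; i < newLen; i++) {
            dst[i] = src[i * src.length / newLen];
        }
        return dst;
    }

    public static void main(String[] args) {
        int[] original = {10, 20, 30, 40, 50, 60, 70, 80};

        // Destructive approach (like calling photo.resize() again and again):
        // the stored data is replaced each time, so lost detail never returns.
        int[] stored = original;
        stored = resample(stored, 4); // shrink
        stored = resample(stored, 8); // grow back to the original size

        // Non-destructive approach (like image(photo, x, y, w, h)):
        // always scale from the untouched original.
        int[] drawn = resample(original, 8);

        System.out.println(java.util.Arrays.toString(stored)); // prints [10, 10, 30, 30, 50, 50, 70, 70]
        System.out.println(java.util.Arrays.toString(drawn));  // prints [10, 20, 30, 40, 50, 60, 70, 80]
    }
}
```

Real resampling usually interpolates between neighbouring pixels, so the loss tends to look like blur rather than the repeated values seen here.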

Second, when I first used the code that transfers data from the Arduino to Processing, I got an error saying ArrayException. At first, I thought it was because the numbers from the Arduino exceeded the range that Processing could accept. However, when I asked Rudi, he said the number given to the blur filter couldn't be that big, while `analogRead()` returns values from 0 to 1023. So I used

```processing
float a = map(sensorValues[1], 0, 1024, 0, 40);
```

to scale the value from the Arduino down to the range 0 to 40.
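To see what the `map()` line does, here is a small Java sketch of the linear rescaling that Processing's `map()` performs; the formula is re-implemented so it can run outside Processing, and `MapDemo` is a hypothetical name. With an input range of 0 to 1024, a potentiometer reading of 512 becomes a blur level of 20, and the largest reading, 1023, stays just under 40.

```java
// Re-implementation of the linear formula behind Processing's map(),
// written as plain Java so it can be run and tested on its own.
class MapDemo {
    // Rescales value from the range [start1, stop1] to the range [start2, stop2].
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    public static void main(String[] args) {
        // analogRead() values run from 0 to 1023.
        System.out.println(map(0, 0, 1024, 0, 40));    // prints 0.0
        System.out.println(map(512, 0, 1024, 0, 40));  // prints 20.0
        System.out.println(map(1023, 0, 1024, 0, 40)); // just under 40
    }
}
```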

Here is my Arduino code:

```cpp
void setup() {
  Serial.begin(9600);
}

void loop() {
  int sensor1 = analogRead(A0);
  int sensor2 = analogRead(A1);

  // keep this format
  Serial.print(sensor1);
  Serial.print(",");  // put comma between sensor values
  Serial.print(sensor2);
  Serial.println();   // add linefeed after sending the last sensor value

  // too fast communication might cause some latency in Processing
  // this delay resolves the issue.
  delay(100);
}
```
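The comma-and-newline format in `loop()` can be checked outside the Arduino with a short Java sketch. The hypothetical `formatLine()` helper builds the same text that the `Serial.print()` calls followed by `Serial.println()` send; `Serial.println()` finishes the line with a carriage return and a linefeed. The linefeed (ASCII 10) is what Processing's `readStringUntil(10)` waits for, and `trim()` later removes the leftover carriage return.

```java
// Hypothetical helper mirroring the Arduino loop():
// Serial.print(sensor1); Serial.print(","); Serial.print(sensor2); Serial.println();
class SerialLineDemo {
    // Serial.println() ends the line with "\r\n" (carriage return + linefeed).
    static String formatLine(int sensor1, int sensor2) {
        return sensor1 + "," + sensor2 + "\r\n";
    }

    public static void main(String[] args) {
        String line = formatLine(512, 300);
        // trim() removes the trailing "\r\n", leaving just the two values.
        System.out.println(line.trim());   // prints 512,300
        System.out.println(line.length()); // prints 9
    }
}
```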

Here is my Processing code:

```processing
import processing.serial.*;

String myString = null;
Serial myPort;
PImage photo;

int NUM_OF_VALUES = 2;   /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int[] sensorValues;      /** this array stores values from Arduino **/

void setup() {
  size(500, 500);
  background(0);
  photo = loadImage("12deeaa706628e537518aa533d6c8658.jpeg");
  setupSerial();
}

void draw() {
  background(0);
  updateSerial();
  printArray(sensorValues);

  // first potentiometer: sensorValues[0] sets the width and height of the image
  image(photo, 0, 0, sensorValues[0], sensorValues[0]); //, width, height);

  // second potentiometer: scale the reading down to a blur amount between 0 and 40
  float a = map(sensorValues[1], 0, 1024, 0, 40);
  println("senmap!", a);
  filter(BLUR, a);

  //
  //filter(BLUR, sensorValues[1]);
  // use the values like this!
  // sensorValues[0]
  // add your code
  //
}

void setupSerial() {
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[ 4 ], 9600);
  // WARNING!
  // You will definitely get an error here.
  // Change the PORT_INDEX to 0 and try running it again.
  // And then, check the list of the ports,
  // find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----"
  // and replace PORT_INDEX above with the index number of the port.

  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil( 10 );  // 10 = '\n'  Linefeed in ASCII
  myString = null;

  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil( 10 ); // 10 = '\n'  Linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      // only accept lines that contain exactly NUM_OF_VALUES values
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
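The parsing step in `updateSerial()` can likewise be tried in plain Java. This is a sketch, not Processing's own code: `String.split()` and the hypothetical `toInt()` helper stand in for Processing's `split()` and `int()`, with `toInt()` returning 0 for text that is not a number, which is assumed to match how `int()` treats unparseable strings. Lines with the wrong number of fields, such as a half-received first line, are skipped, so the array is never written past `NUM_OF_VALUES`.

```java
// Plain-Java sketch of the parsing in updateSerial():
// split(trim(myString), ",") followed by int() on each piece.
class SerialParseDemo {
    static final int NUM_OF_VALUES = 2;

    // Assumed stand-in for Processing's int(String): non-numeric text becomes 0.
    static int toInt(String s) {
        try {
            return Integer.parseInt(s);
        } catch (NumberFormatException e) {
            return 0;
        }
    }

    // Updates sensorValues in place only when the line has exactly NUM_OF_VALUES
    // fields; returns true if the line was accepted.
    static boolean parseLine(String line, int[] sensorValues) {
        if (line == null) return false;
        String[] parts = line.trim().split(",", -1); // -1 keeps trailing empty fields
        if (parts.length != NUM_OF_VALUES) return false;
        for (int i = 0; i < parts.length; i++) {
            sensorValues[i] = toInt(parts[i]);
        }
        return true;
    }

    public static void main(String[] args) {
        int[] sensorValues = new int[NUM_OF_VALUES];
        parseLine("512,300\r\n", sensorValues); // accepted
        parseLine("77\r\n", sensorValues);      // only one field: ignored
        System.out.println(java.util.Arrays.toString(sensorValues)); // prints [512, 300]
    }
}
```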

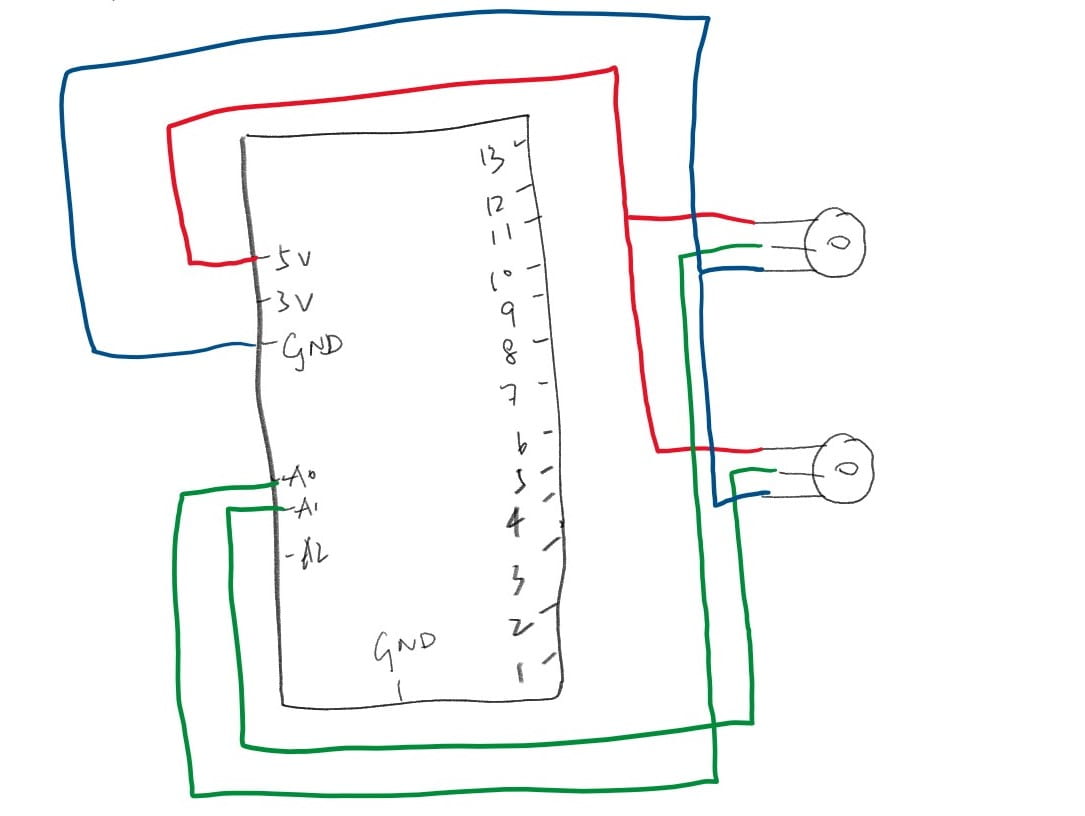

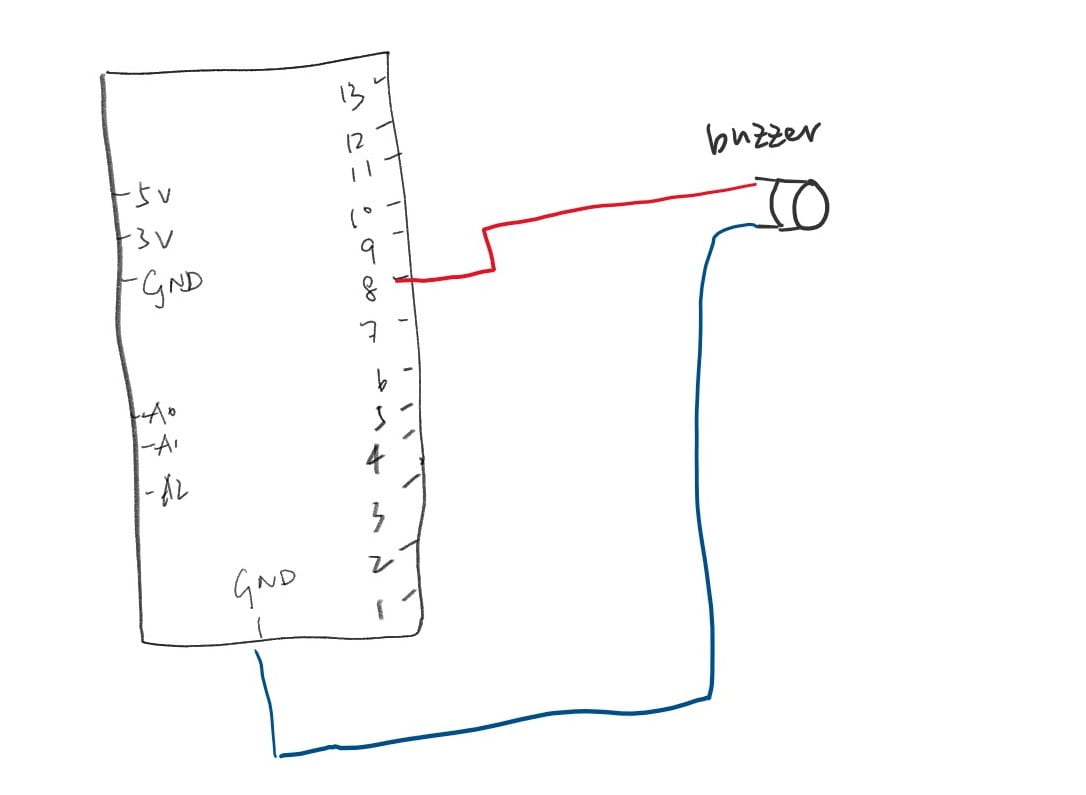

Here is my circuit:

Here is my video:

Reading

In this week’s reading, the author discussed the development of computer image analysis. It showed that computer vision is not only used in military and law-enforcement applications. One of the most impressive projects based on this technology is “Standards and Double Standards”, which recognizes the people in the room and makes belts rotate to face the audience. In this project, the belts stand in for people, turning toward the viewers with the help of the tracking technology.

This made me think that the game I want to make could use this technique to track the player’s movement, so that moving their body would feel like moving the stick on the screen. However, I’m not sure whether I can manage to understand it.
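One simple way to try the game idea above is frame differencing: compare two consecutive camera frames and take the average x position of the pixels that changed, which could then steer the stick on screen. The Java sketch below assumes grayscale frames stored row by row as `int` arrays of brightness values (0 to 255); capturing real frames (for example with Processing's Video library) is left out, and `MotionTrackDemo` and `motionCenterX()` are hypothetical names.

```java
// Minimal frame-differencing sketch (an assumed approach for tracking a player):
// frames are grayscale brightness values stored row by row.
class MotionTrackDemo {
    // Returns the average column of pixels whose brightness changed by more than
    // threshold between prev and curr, or -1 if nothing changed.
    static float motionCenterX(int[] prev, int[] curr, int width, int threshold) {
        long sumX = 0;
        int count = 0;
        for (int i = 0; i < curr.length; i++) {
            if (Math.abs(curr[i] - prev[i]) > threshold) {
                sumX += i % width; // column index of this pixel
                count++;
            }
        }
        return count == 0 ? -1f : (float) sumX / count;
    }

    public static void main(String[] args) {
        int width = 4;
        // Two 4x2 frames: something bright appears in columns 2 and 3.
        int[] prev = {0, 0, 0, 0,
                      0, 0, 0, 0};
        int[] curr = {0, 0, 200, 200,
                      0, 0, 200, 200};
        System.out.println(motionCenterX(prev, curr, width, 50)); // prints 2.5
    }
}
```

The returned x position could be mapped to the stick's position on screen in the same way `map()` scales the potentiometer readings.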