Tribute to my partner: Year Cao.

Project Description

This is a metaphorical illustration of the collision between human-constructed spatial structures and nature itself. The concept of collision permeated our design and realization of the project from beginning to end. While we used different techniques from the field of audiovisual performance, we deliberately conveyed this concept by choosing the most representative and sonorous literary and visual topoi: concrete as the process of human construction, and the flower as the visible beauty of nature.

The theme grew out of the experience of living in one of the busiest and most densely populated areas of Pudong, where massive construction sites are visible to the naked eye, the noise of steel and concrete is unbearable when passing by, and an invisible pressure on individuals drives the city toward material prosperity. However, rather than viewing industrialization and construction as the evil counterparts of nature, as people often do in a nostalgic metanarrative, we can perceive nature and non-natural human construction as an intertwined, morbidly beautiful union.

No fixed art form, such as fine art, sculpture, or even film, is suitable for expressing this kind of theme. They lack the instant, dynamic impulse needed to convey the duality of intertwined human construction and nature. The most suitable medium is therefore realtime audiovisual performance: it has to be not only audiovisual but also performable, so that the piece stays dynamically interesting while avoiding repetition.

In building the performable system, both audio and visual, we used a range of practices: sampling, synthesis, realtime 3D rendering, and routing everything together through interconnections. My partner Year and I operated the system we built through its interface, and after a series of rehearsals we performed along with others in the class at the club Elevator in Shanghai.

Perspective and Context

Our work is rooted in, and influenced by, many genres within audiovisual performance, from visual music to live cinema to VJing, and more broadly the general term “audiovisual performance”. Its influences and contextual position are therefore complex and distinct from any single historical context. To sort them out clearly, our work can be deconstructed into several components.

♦ First, conceptually, we believe in building an interpretive system: a human-friendly interface that translates instinctive human actions into machine language controlling the technological core of the performance. This idea is rooted in the earliest pioneers, including Thomas Wilfred with his Clavilux machines, and the many others dedicated to building performable instruments for audio, visual, or audiovisual performance.

♠ Second, methodologically, our approach involves techniques from live cinema, such as live video stream processing.

♣ Third, in terms of live performance, Year and I work as a duo, a setup common in contemporary DJ/VJ practice. While I manage a generative audio live set, what Year does fits several characteristics of a VJ performance, including links with the groove and the audio and performing in a club context.

♥ Yet overall, our performance does not belong solely to any one of these categories. Viewed as a duo performance, it is a complex form of realtime audiovisual performance. Without either the audio or the visual, it conveys no message; only when the audio and visual elements intertwine is the piece complete, achieving an effect of 1 + 1 > 2, in which the surplus meaning is the mental and rhetorical synesthetic connection between the audio and visual elements.

One advantage contemporary digital technology has brought us is the ability to stream and link high-quality sound and video in realtime. Advances in computer graphics, especially the OpenGL rendering we are using, also free us from the constraints of analog technology that earlier audiovisual artists faced. Historically, artists found it hard to perform both audio and visuals simultaneously while keeping a solid performative aspect (meaning that a self-running background layer does not meet this criterion).

Oskar Fischinger, one of the earliest pioneers of combining sound and image, was constrained by the technology of the 1930s and was still “using a modified camera that was capable of recording black geometric shapes painted on scrolls right onto the film soundtrack” (Brougher, 125). Fischinger’s works were often set to a recorded symphonic track and illustrated it with imaginative stop-frame animation, so the connection between sound and image was one-way. Only with the widespread use of computer technology did artists gain the ability to automate audiovisual linkage rather than doing it manually; the Whitney brothers, for example, used the earliest computing devices to generate synthetic sound and images. Yet it was not until the widespread adoption of the modular synthesizer that people could perform live with a set of instruments, in what could even be considered realtime.

Hence, the essence of our performance is the realtime process, as if we were a duo playing separate instruments in harmony. It relates to what some VJs call improvisation: they respond to the music in realtime, so the visual output varies across takes in time and space. However, VJs have their own constraints, namely that they rarely take the leading role in a performance and often serve as accompanying wallpaper for the DJs, who get all the spotlight. Our approach on stage is cooperative and communication goes both ways, so no single medium takes the lead; instead, our effort goes into the immersion and entanglement of the audio and visual media.

Development and Technical Implementation

Our workflow was direct and logical. We discussed conceptual and technical implementations before we started actually building the system. Most of the time, we met and worked on the system on two separate computers, reporting our current status to each other and making revisions after our discussions.

Our technical exploration started with shooting the video footage that later appeared in the final performance. Since our theme is the collision of human construction and nature, I needed footage to enrich our visual elements. I shot smooth 60 fps 1080p footage of the train station, skylines along the metro line and from the shuttle bus, construction sites, and architecture. This footage was later converted to the HAP format and used as video textures on 3D objects.

Some examples of the video:

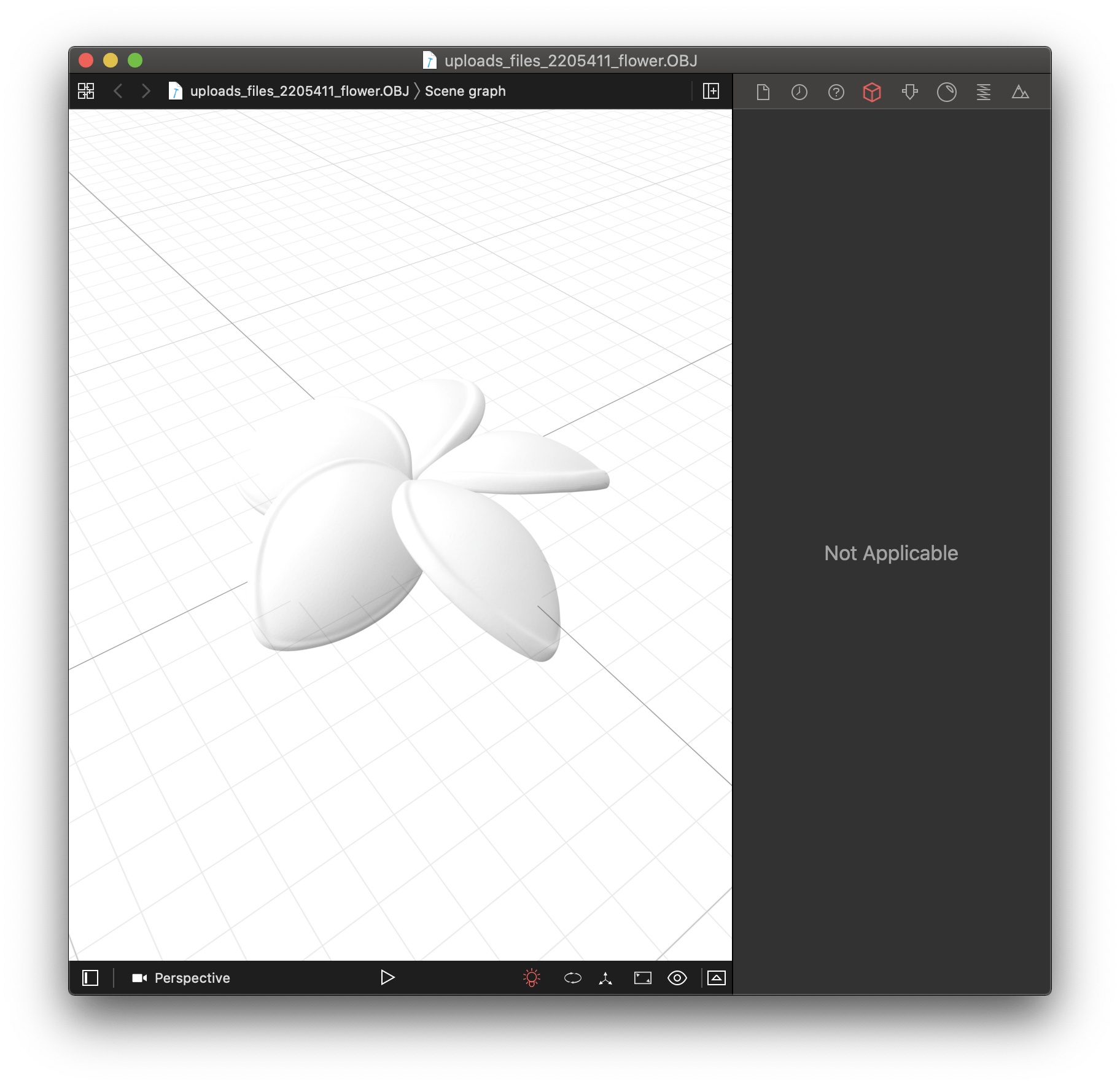

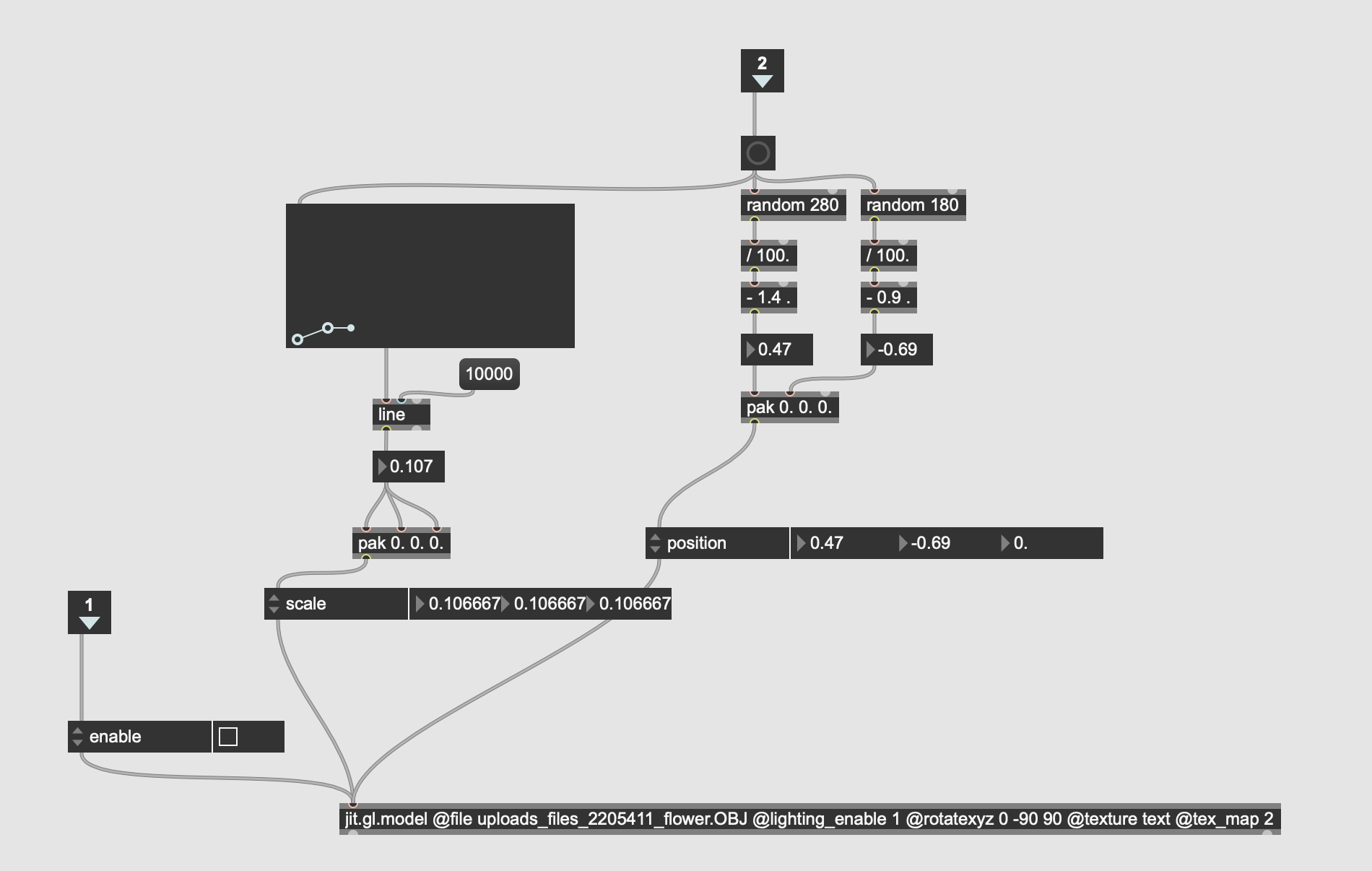

[Video: sample footage](https://drive.google.com/file/d/12LQkEgV1BnHo8ZelUUHYE2zQ6xqpouoN/preview)

Meanwhile, before our final project proposal, we had already started collecting potential 3D models. We found and purchased a stylized, not-too-realistic 3D flower model, which eventually appeared in the final visuals.

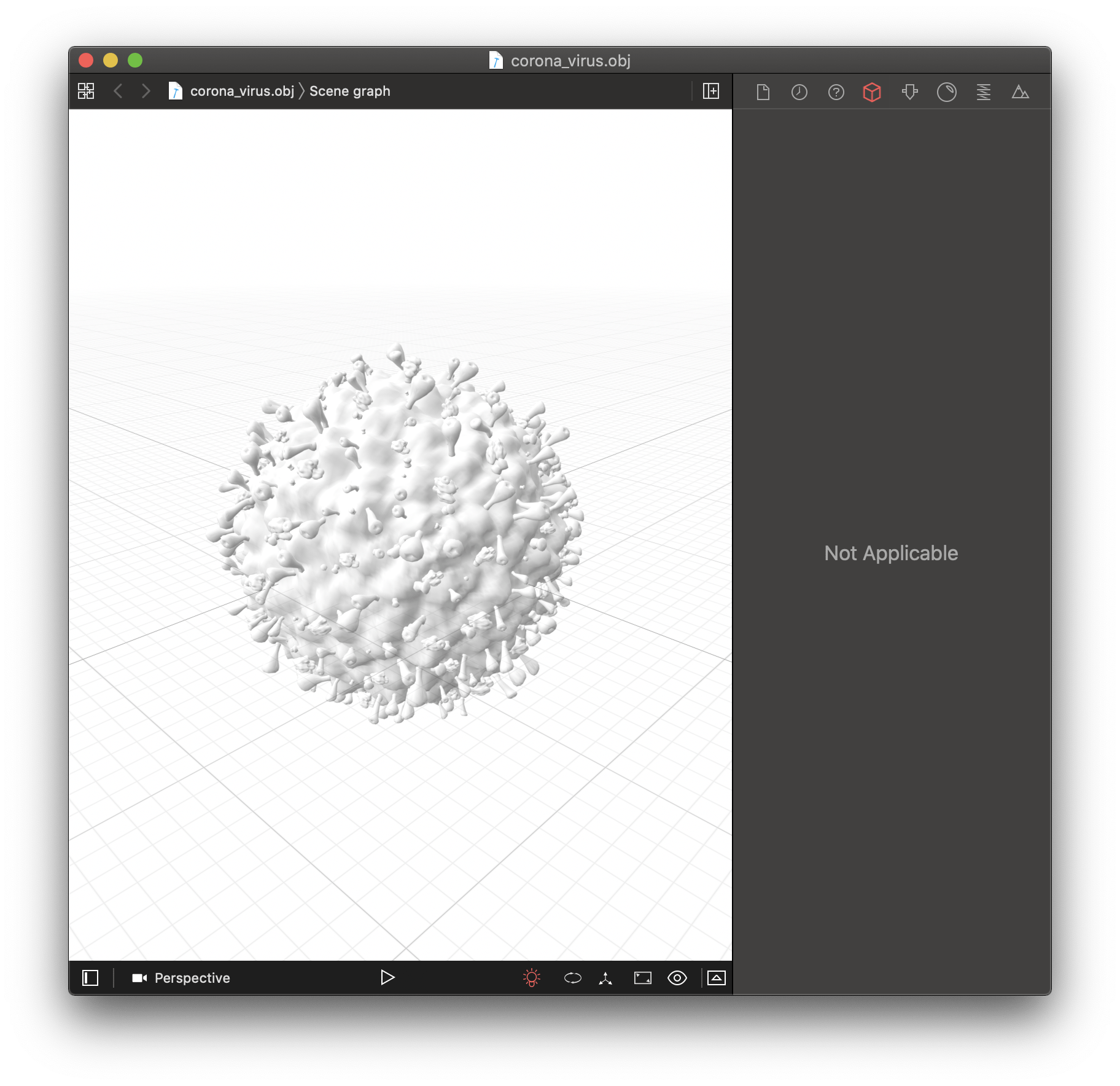

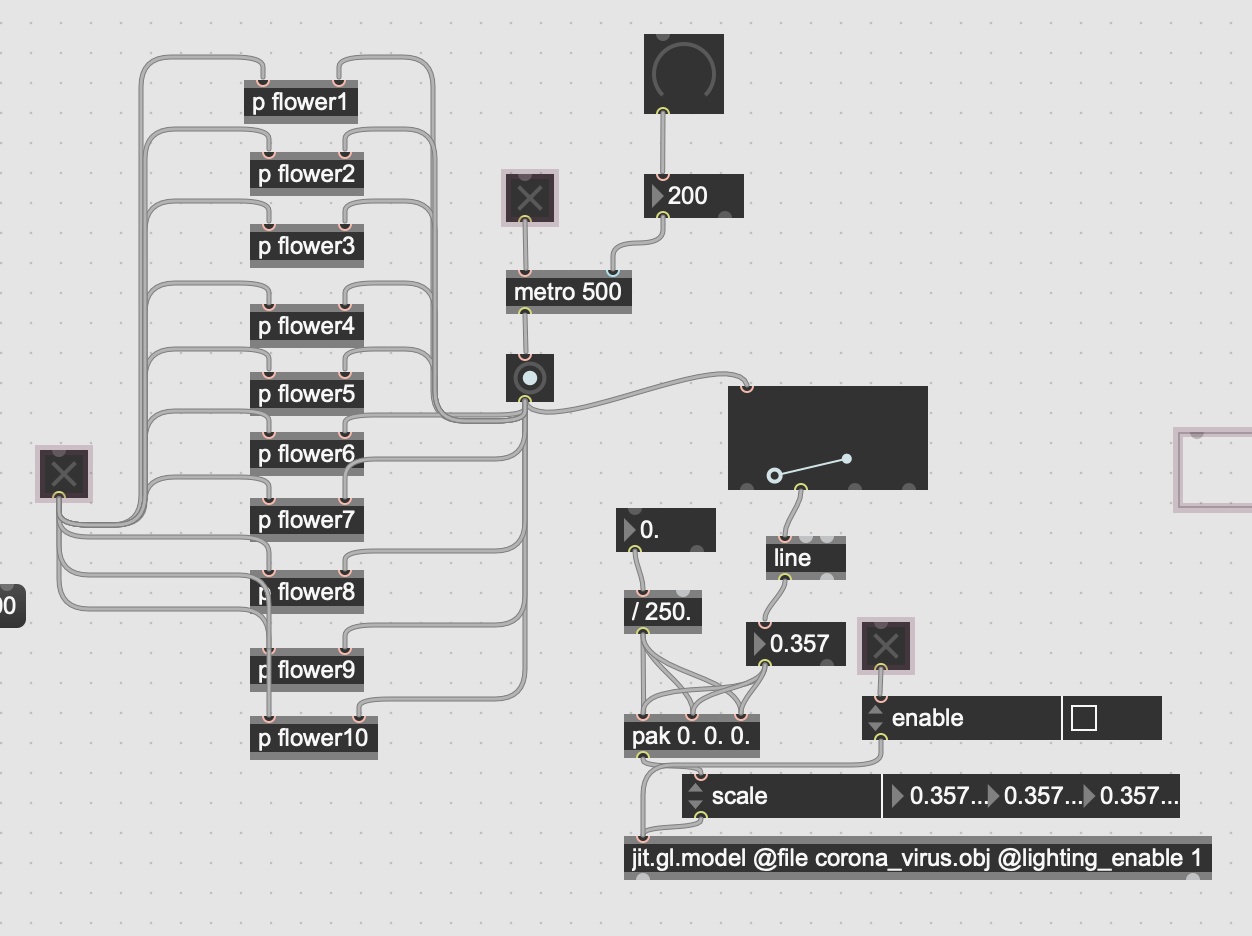

Another model we used was a model of the coronavirus, which fit our theme perfectly.

Once we had gathered all the video footage and enough resources, we began working on the visual side of the project in Max 8.

Our visual implementation is built on Max's jit.gl objects, which use OpenGL to render the 3D graphics and their movements.

Our patch can be divided into several elements.

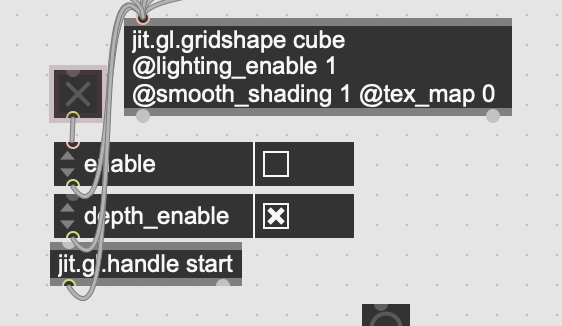

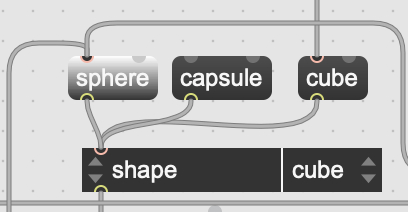

The first part is where we turned the footage I shot into a texture and applied it to a basic 3D shape.

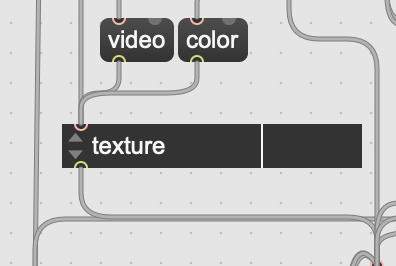

A function switches the shape between two kinds of textures.

The shape itself can also change.

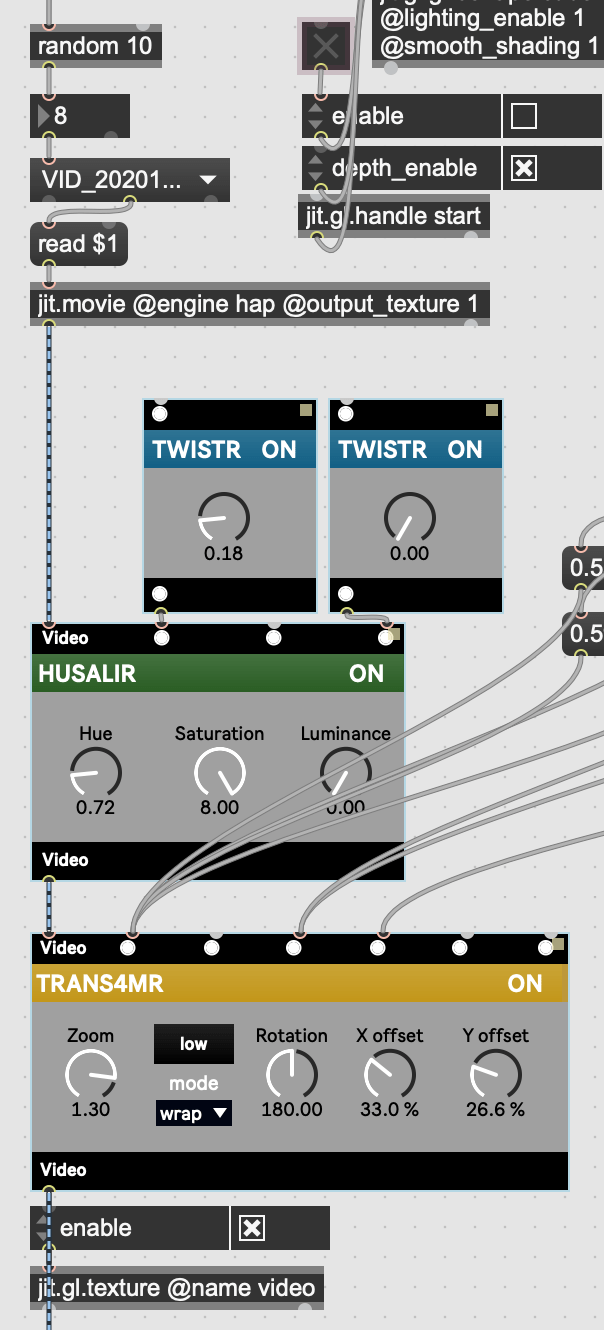

We encapsulated several other movement functions in a p subpatcher. The video texture is selected from a drop-down menu, with a random switch.
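The random switch can be pictured as picking a new clip index from the drop-down's list. The Python sketch below is only an illustration of that idea, not the Max patch itself; it assumes (hypothetically) that the switch should never re-pick the clip already playing, and the function name `next_clip` is invented here.

```python
import random


def next_clip(current: int, count: int, rng: random.Random) -> int:
    """Pick a random clip index in [0, count) that differs from `current`.

    Hypothetical model of a 'random switch' on a drop-down menu of clips;
    assumes at least two clips so that a different one always exists.
    """
    if count < 2:
        raise ValueError("need at least two clips to switch between")
    # Draw uniformly from the count - 1 other indices, then shift past `current`.
    choice = rng.randrange(count - 1)
    return choice if choice < current else choice + 1


if __name__ == "__main__":
    rng = random.Random(0)
    clip = 0
    for _ in range(5):
        clip = next_clip(clip, count=4, rng=rng)
        print("now playing clip", clip)
```

Drawing from the remaining indices and shifting past the current one keeps the choice uniform without a retry loop.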

The second segment of the patch is the flower model. One mistake we made here was not using jit.gl.multiple consistently, so the multiple flowers are the result of copying and pasting.
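The difference between copy-pasted subpatchers and an instancing object such as jit.gl.multiple is that the latter draws one model many times from a table of per-instance values. As an illustration in Python rather than Max, the sketch below builds such a table, one row of x, y, z positions per flower, arranged on a ring. The ring layout and the name `flower_positions` are assumptions, not what our patch did; in Max, rows like these would roughly correspond to a Jitter matrix feeding the instancing object instead of many duplicated jit.gl.model copies.

```python
import math
from typing import List, Tuple


def flower_positions(count: int, radius: float, height: float = 0.0) -> List[Tuple[float, float, float]]:
    """Return one (x, y, z) row per flower instance, evenly spaced on a ring.

    Hypothetical layout: with an instancing object, a table like this replaces
    `count` copy-pasted model subpatchers, so changing `count` is a single edit.
    """
    rows = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        rows.append((radius * math.cos(angle), height, radius * math.sin(angle)))
    return rows


if __name__ == "__main__":
    for row in flower_positions(6, radius=2.0):
        print("%.2f %.2f %.2f" % row)
```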

Each subpatcher contains a jit.gl.model object.

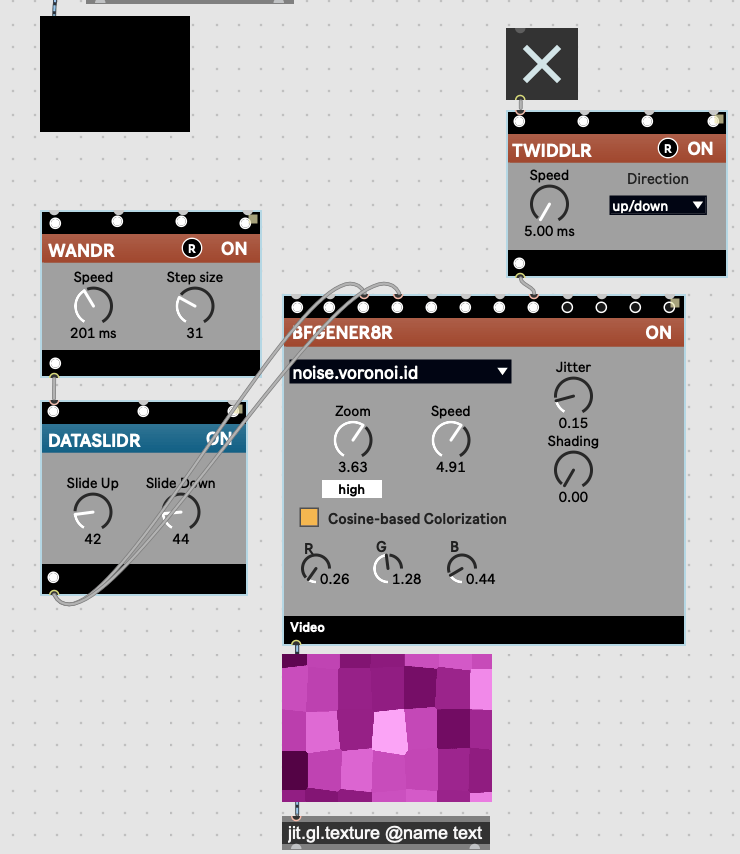

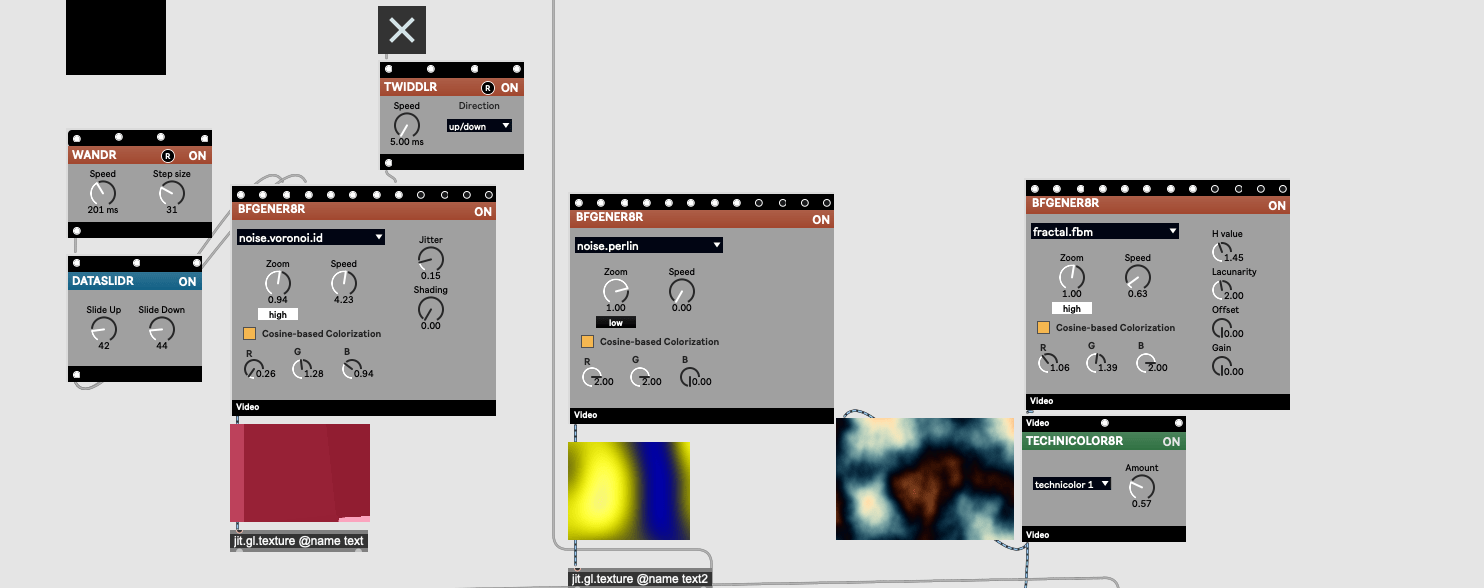

To create the magenta, purple, and bright yellow texture, with small details showing through, we chose the BFGENERATR as the texture source.

Passed through a TWIRDDLR, the texture gradually changes color and shape.

Two more BFGENERATRs provide textures for other segments of our performance.

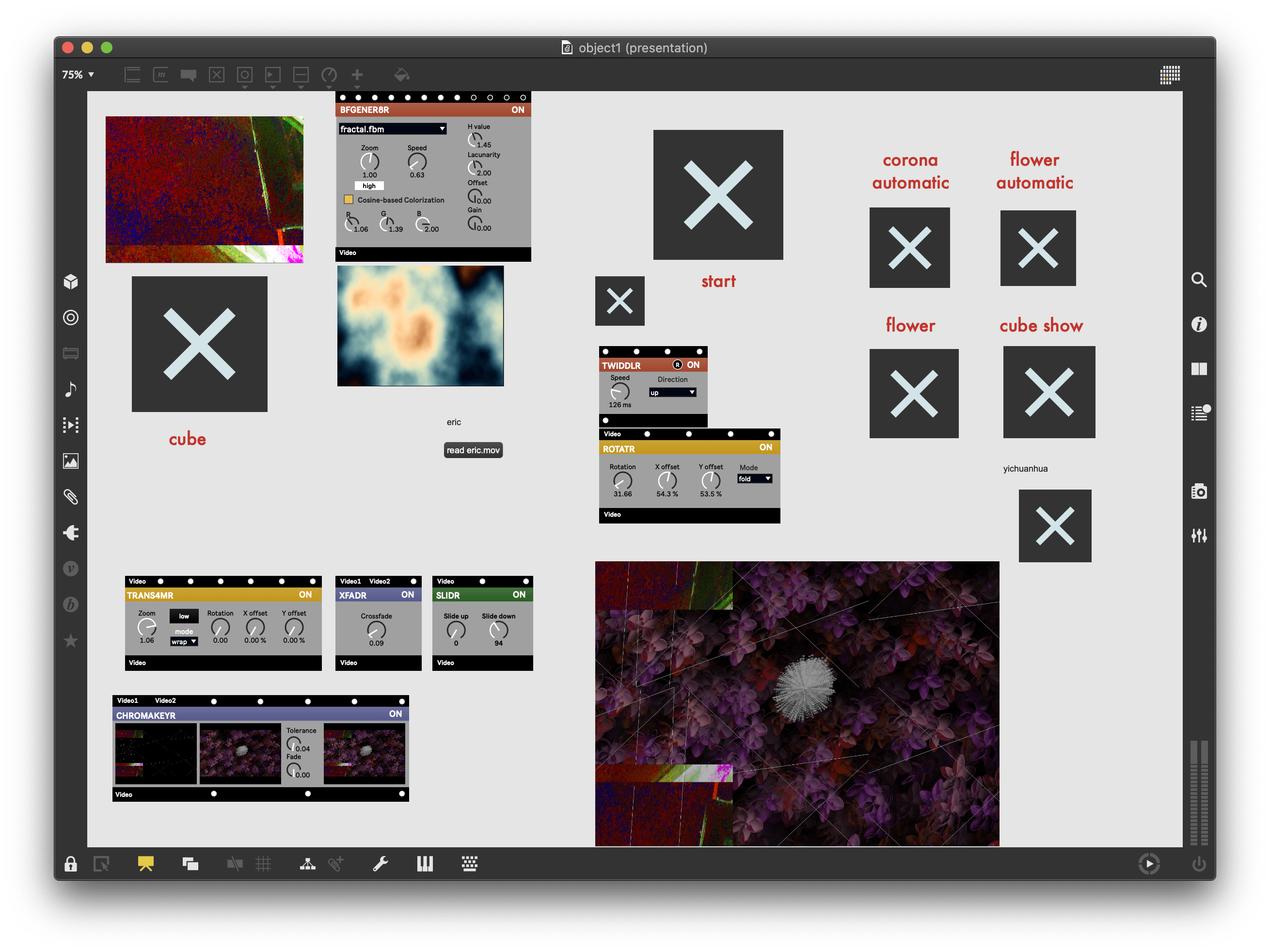

Most of the toggles and buttons are mapped to the MIDI Mix controller, so during the performance Year has direct control of the parameters. The patch also has a clear presentation mode.
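Mapping a MIDI controller to patch parameters mostly comes down to rescaling 7-bit values. MIDI control-change values run from 0 to 127; the Python sketch below shows a linear rescale to an arbitrary parameter range (similar in spirit to Max's scale object) and a threshold for toggles. The threshold of 64 and the function names are assumptions for illustration.

```python
def cc_to_param(value: int, lo: float, hi: float) -> float:
    """Linearly map a 7-bit MIDI CC value (0-127) onto the range [lo, hi]."""
    if not 0 <= value <= 127:
        raise ValueError("MIDI CC values are 7-bit (0-127)")
    return lo + (hi - lo) * value / 127


def cc_to_toggle(value: int, threshold: int = 64) -> bool:
    """Treat a button or fader as on/off; the threshold of 64 is an assumption."""
    return value >= threshold


if __name__ == "__main__":
    print(cc_to_param(127, -1.0, 1.0))   # top of the fader -> 1.0
    print(cc_to_param(0, 0.2, 0.8))      # bottom of the fader -> 0.2
    print(cc_to_toggle(127))             # pressed button -> True
```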

For the audio segment, I uploaded a run-through demo of the system I built (without the UNO Synth sound).

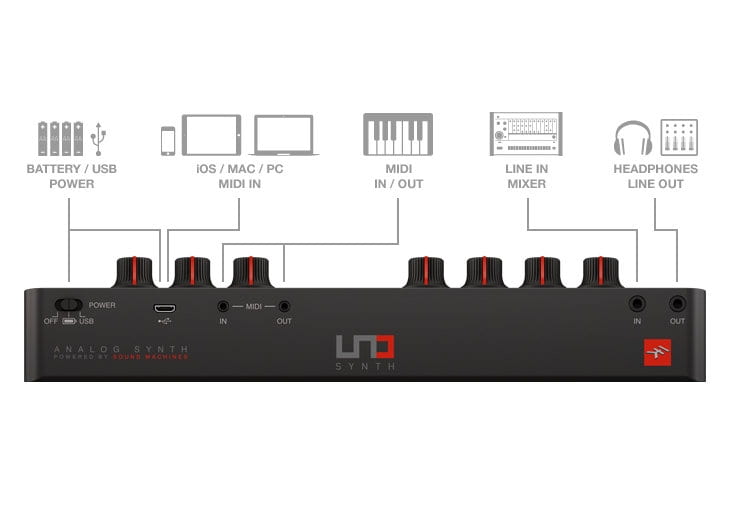

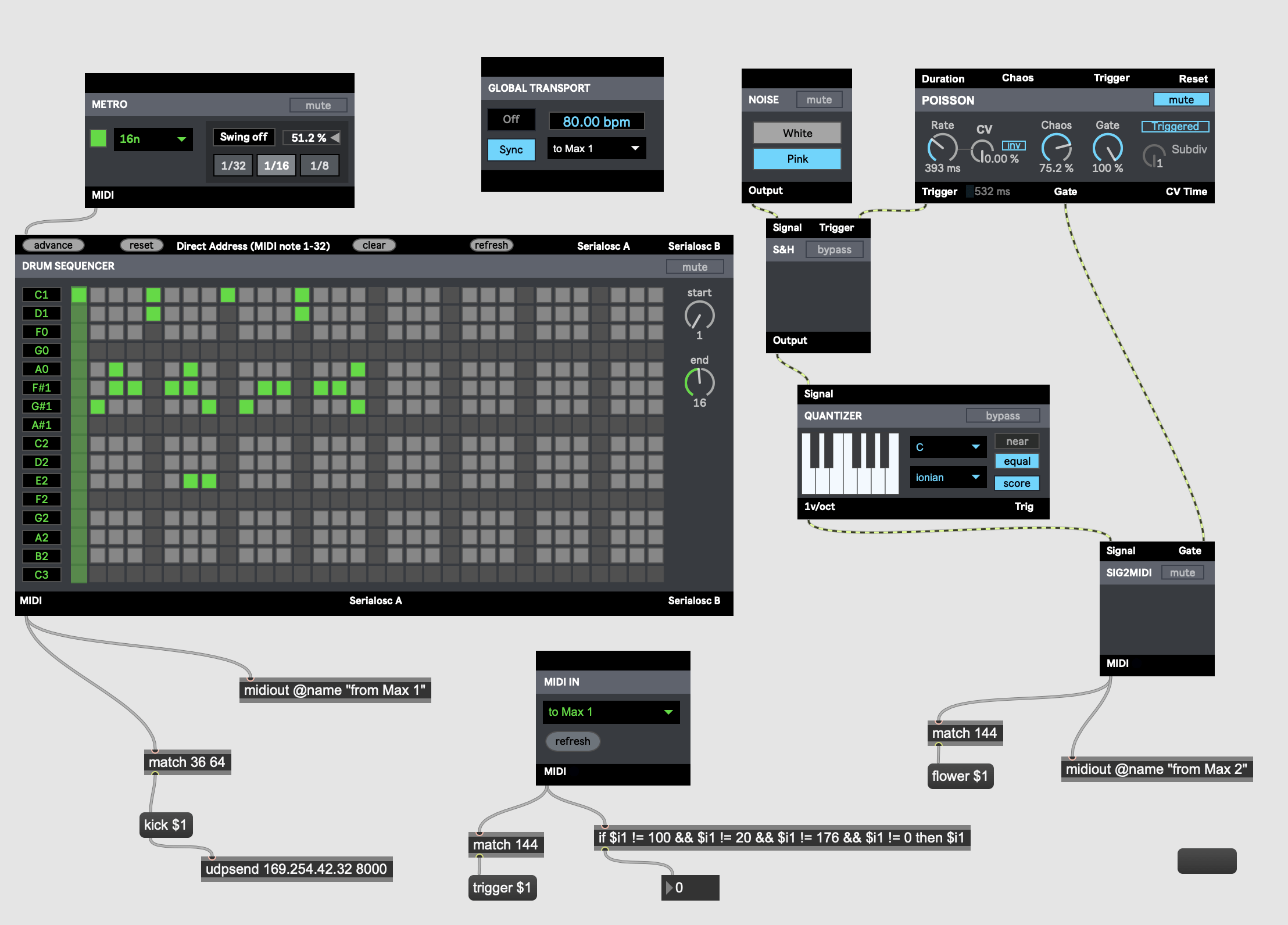

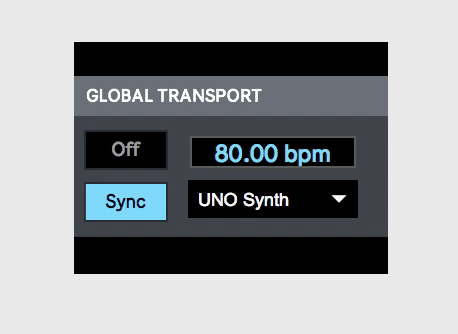

The audio system consists of three parts: a Max patcher, an Ableton Live set, and the UNO Synth by IK Multimedia.

Within this system, the Max patch controls the MIDI information and communicates with the computer running the visuals through udpsend and udpreceive. The Ableton Live set mostly receives MIDI information from Max, turns it into audio, and mixes everything to the audio output. The UNO Synth is the extra audio source we use. Feeding the synth's audio output into the computer would have required an extra sound card, so instead I routed the audio signal the other way: since the UNO Synth has an audio input, the computer's output is sent into the UNO Synth and leaves through the synth's output together with its own sound.

In the Max patcher, we routed two MIDI signal generators: one for the drum machine, and one for the bell sound, which is controlled by the Poisson module.
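A Poisson process is a common way to produce irregular, non-repeating triggers: events arrive at a given average rate, with exponentially distributed gaps between them. How the Poisson module in the patch is implemented is not documented here; the Python sketch below only shows the general technique, with a hypothetical `rate_hz` parameter and `bell_onsets` name, generating bell onset times that could then be sent out as MIDI notes.

```python
import random
from typing import List, Optional


def bell_onsets(rate_hz: float, duration_s: float, seed: Optional[int] = None) -> List[float]:
    """Onset times (seconds) of a Poisson process with mean rate `rate_hz`.

    Gaps between events are exponentially distributed with mean 1 / rate_hz,
    which is what makes the arrivals a Poisson process.
    """
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    rng = random.Random(seed)
    onsets = []
    t = rng.expovariate(rate_hz)
    while t < duration_s:
        onsets.append(t)
        t += rng.expovariate(rate_hz)
    return onsets


if __name__ == "__main__":
    # Roughly one bell every two seconds over a 30-second passage.
    for t in bell_onsets(rate_hz=0.5, duration_s=30.0, seed=7):
        print(f"bell at {t:6.2f} s")
```

A fixed seed makes a take reproducible for rehearsal; leaving it as None gives a different pattern every run.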

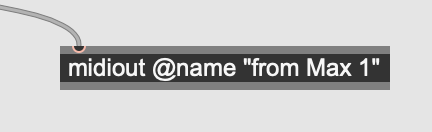

Max provides virtual MIDI ports for sending MIDI information from Max to Ableton; they are named fromMax 1 and fromMax 2. With a midiout object, the information is sent into Ableton.
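midiout passes raw MIDI bytes to the selected port. For reference, a note message is three bytes: a status byte (0x90 for note-on, 0x80 for note-off, with the zero-based channel number in the low four bits), a note number, and a velocity, the latter two in the range 0-127. The Python sketch below builds those bytes; it is illustrative only, and the helper names are hypothetical.

```python
def _check(channel: int, note: int, velocity: int) -> None:
    """Validate a 1-16 channel and 7-bit note/velocity values."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity are 7-bit values (0-127)")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Raw note-on message; `channel` is 1-16 as displayed in Max and Ableton."""
    _check(channel, note, velocity)
    return bytes([0x90 | (channel - 1), note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Raw note-off message (status 0x80 plus the zero-based channel)."""
    _check(channel, note, velocity)
    return bytes([0x80 | (channel - 1), note, velocity])


if __name__ == "__main__":
    # Note 60 on channel 1 at velocity 100, then its release.
    print(list(note_on(1, 60, 100)))   # [144, 60, 100]
    print(list(note_off(1, 60)))       # [128, 60, 0]
```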

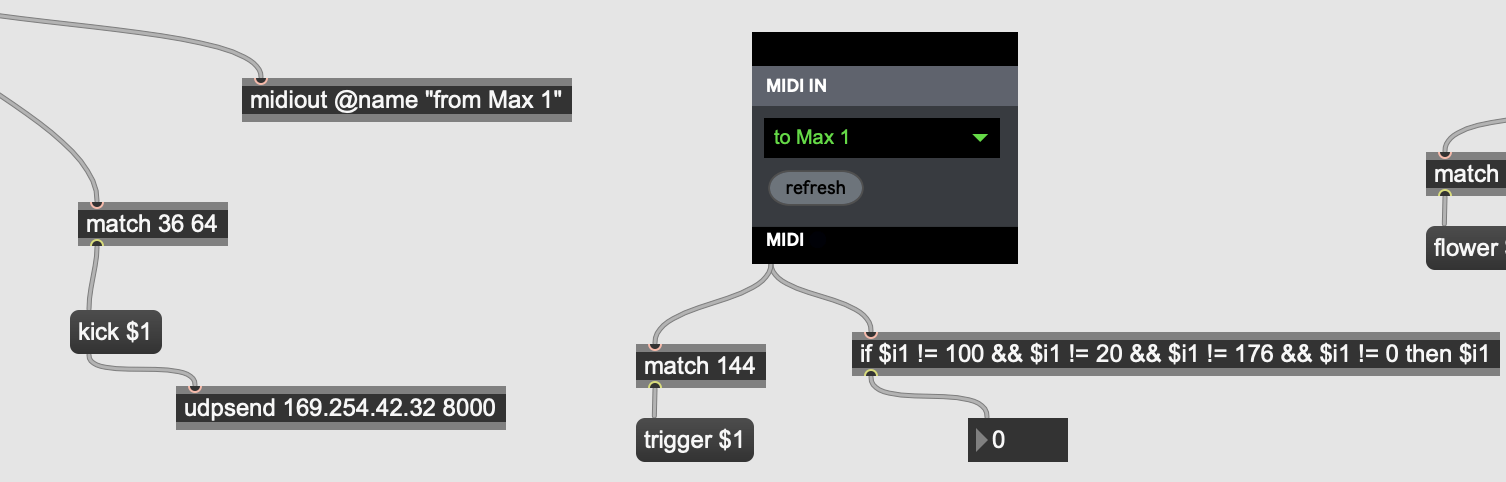

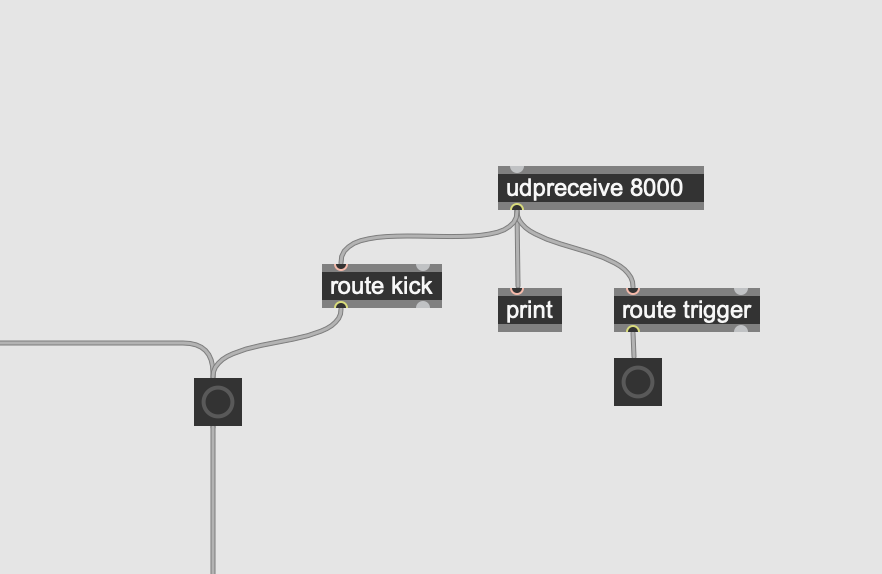

To synchronize the rhythm with the other computer, a local network is needed. We achieved this with UDP, simply by hosting a local network and entering the other computer's IP address in the Max patch.
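As far as we understand, Max's udpsend and udpreceive exchange messages as Open Sound Control (OSC) packets over UDP. The Python sketch below hand-encodes a minimal OSC message carrying a beat counter and sends it as one UDP datagram. The address `/beat`, port 7400, and all function names are hypothetical, and the encoder only covers the single-integer case: OSC strings are NUL-terminated and padded to a multiple of 4 bytes, and integers are big-endian 32-bit.

```python
import socket
import struct
from typing import Tuple


def _osc_string(s: str) -> bytes:
    """ASCII bytes plus a terminating NUL, padded with NULs to a multiple of 4."""
    raw = s.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_beat(address: str, beat: int) -> bytes:
    """Minimal OSC message with one int32 argument (type tag string ',i')."""
    return _osc_string(address) + _osc_string(",i") + struct.pack(">i", beat)


def decode_beat(packet: bytes) -> Tuple[str, int]:
    """Inverse of encode_beat, for single-int32 messages only."""
    end = packet.index(b"\x00")
    address = packet[:end].decode("ascii")
    offset = (end + 4) // 4 * 4          # skip the NUL padding after the address
    tag_end = packet.index(b"\x00", offset)
    if packet[offset:tag_end] != b",i":
        raise ValueError("expected a single int32 argument")
    offset = (tag_end + 4) // 4 * 4      # skip the padding after the type tags
    (beat,) = struct.unpack(">i", packet[offset:offset + 4])
    return address, beat


def send_beat(beat: int, host: str = "127.0.0.1", port: int = 7400) -> None:
    """Send one beat message to host:port as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_beat("/beat", beat), (host, port))


if __name__ == "__main__":
    # Replace host with the visual computer's IP address on the shared network.
    send_beat(1)
```

UDP gives no delivery guarantee, which is acceptable for a steady stream of beat ticks where a lost packet is replaced by the next one.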

In this way, the beat is synchronized in the performance.

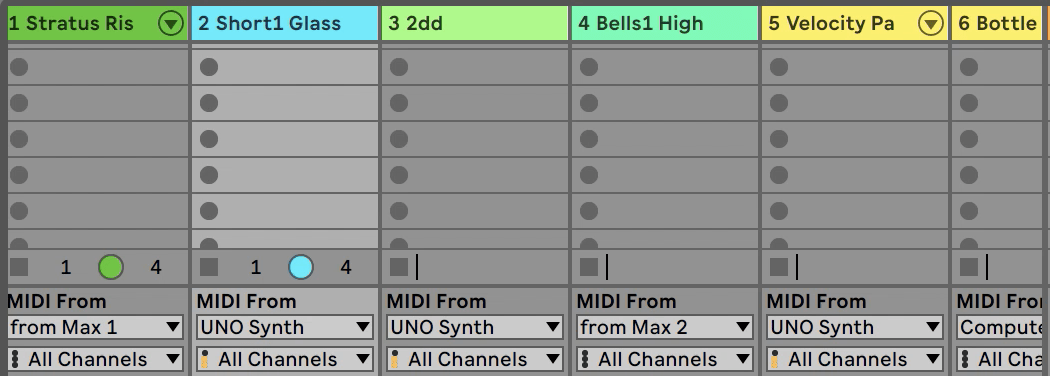

In the Ableton Live set, I mixed and arranged 6 tracks; together with the synthesizer, there are 7 tracks in total.

Altogether, they receive MIDI information from 4 sources:

- Computer Keyboard

- UNO Synth as MIDI

- fromMax1

- fromMax2

The UNO Synth is connected to the computer with a micro-USB cable (the synth is analog in its audio path but digital in its control interface), so it also works as a MIDI input.

I used the built-in arpeggiator to perform on stage, and the arpeggiated MIDI information is also sent into Ableton Live, triggering some of the corresponding tracks.
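An arpeggiator turns held notes into a repeating note sequence. The UNO Synth's arpeggiator has its own modes; the Python sketch below only illustrates the simplest "up" pattern over a chosen octave range, with hypothetical names, to show the kind of MIDI note stream that ends up triggering tracks in Live.

```python
from typing import Iterable, List


def arpeggiate_up(held: Iterable[int], octaves: int = 1, steps: int = 8) -> List[int]:
    """Return `steps` MIDI note numbers cycling upward through the held notes.

    Each additional octave repeats the sorted chord 12 semitones higher;
    notes that would exceed MIDI's maximum of 127 are dropped.
    """
    chord = sorted(set(held))
    pattern = []
    for octave in range(max(octaves, 0)):
        for note in chord:
            if note + 12 * octave <= 127:
                pattern.append(note + 12 * octave)
    if not pattern:
        return []
    return [pattern[i % len(pattern)] for i in range(steps)]


if __name__ == "__main__":
    print(arpeggiate_up([64, 60, 67], octaves=2, steps=8))
    # [60, 64, 67, 72, 76, 79, 60, 64]
```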

I also mapped some keys to trigger certain functions, and drew simple icons to remind me how to operate them.

Performance

During the performance, as well as the rehearsal takes before it, the most important aspects were cooperation and coordination. In contemporary realtime audiovisual performance, audio and visual elements should be consistent, linked, or at least rhetorically similar. Much of our coordination came from an instinct for improvisation: our intuition told us which visual elements should correspond to the audio, and vice versa. This improvisation was kept throughout the performance.

In the actual performance, most things went well. We kept the timing, the transitions between stages, and the correlation between visual and audio elements. The audience was supportive and cheerful, which fueled our enthusiasm. However, while setting up the audio cables and system, I forgot one important step: synchronizing the BPM in Max with the arpeggiator in the UNO Synth. As a result, the rhythm was off during the performance, yet nobody could tell the difference, since an off-beat feel can also be seen as an artistic choice.
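A rough calculation shows why a forgotten tempo sync matters. MIDI clock runs at 24 pulses per quarter note, so the pulse interval is 60 / (BPM × 24) seconds, and two devices at slightly different tempos drift further apart with every bar. The Python sketch below computes both; the tempos in the example are made up for illustration, not the values from our set.

```python
PPQN = 24  # MIDI clock pulses per quarter note


def clock_interval_s(bpm: float) -> float:
    """Seconds between MIDI clock pulses at the given tempo."""
    return 60.0 / (bpm * PPQN)


def drift_s(bars: int, bpm_a: float, bpm_b: float, beats_per_bar: int = 4) -> float:
    """How far device B runs ahead of device A after `bars` bars, in seconds."""
    beats = bars * beats_per_bar
    return beats * 60.0 / bpm_a - beats * 60.0 / bpm_b


if __name__ == "__main__":
    print(f"{clock_interval_s(120) * 1000:.2f} ms per clock pulse at 120 BPM")
    # Hypothetical mismatch: 120 vs 121 BPM over 16 bars of 4/4.
    print(f"{drift_s(16, 120, 121) * 1000:.0f} ms apart after 16 bars")
```

Even a 1 BPM mismatch in this hypothetical case adds up to roughly a quarter of a second after 16 bars, which is more than enough to be heard as an off-beat.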

Conclusion

Overall, the final result was cheerful and rewarding. In the context of a nightclub, I believe the groove and aura of a performance are vital, and we successfully captured that aura and made things happen. People I didn't know applauded for us, which was deeply satisfying.

To be critical, I believe the system is still not very mature. We wanted a more stable local network capable of sending large amounts of data between the computers, so that we could achieve more. As for content, I believe we still lack another stage of visual elements; the current visuals become a little repetitive, especially toward the end of the performance.

In the future, I will continue to explore the possibilities of realtime audiovisual performance, perhaps with something more musical and calm, and perhaps again in collaboration with others to build complex visual systems, since I am very keen on the concept of information structures functioning within a performance system, and on the value of liveness.