Changes in Describing Shaders

A first understanding of shaders is rarely fully correct. The name is misleading, and it is easy to infer that a shader is a programmable color tint or a generative gradient. However, once you understand the basics of the rendering pipeline, you can see why the OpenGL documentation describes it this way:

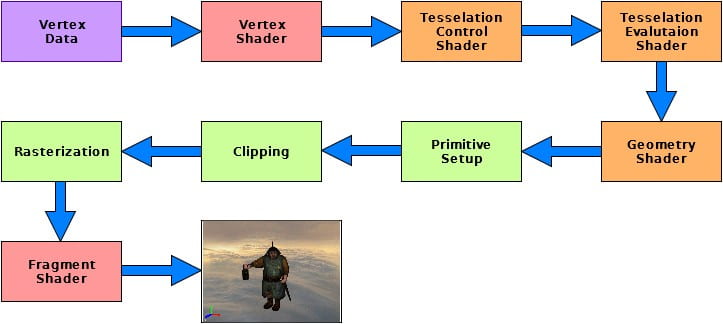

A Shader is a user-defined program designed to run on some stage of a graphics processor. Shaders provide the code for certain programmable stages of the rendering pipeline.


From this point of view, writing shaders can be interpreted as replacing the default pieces of a puzzle with ones you made yourself, producing output customized to your needs and aesthetic choices. As new GLSL versions have been released, more stages of the pipeline have become programmable, giving users more choices in designing a rendering pipeline for specific needs.
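The puzzle analogy can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not how a GPU or OpenGL is actually implemented: `run_pipeline` and the stage functions are hypothetical names invented for illustration, and the "pipeline" here has just two swappable stages, one that runs once per vertex and one that runs once per pixel.

```python
from typing import Callable, List, Tuple

Vec2 = Tuple[float, float]
Color = Tuple[float, float, float]


def passthrough_vertex(position: Vec2) -> Vec2:
    """Default vertex stage: leave the vertex where it is."""
    return position


def white_fragment(uv: Vec2) -> Color:
    """Default fragment stage: every pixel is white."""
    return (1.0, 1.0, 1.0)


def run_pipeline(
    vertices: List[Vec2],
    width: int,
    height: int,
    vertex_stage: Callable[[Vec2], Vec2] = passthrough_vertex,
    fragment_stage: Callable[[Vec2], Color] = white_fragment,
) -> Tuple[List[Vec2], List[List[Color]]]:
    """Toy pipeline (hypothetical): the stages are swappable, the rest is fixed."""
    # Programmable stage 1: called once per vertex.
    moved = [vertex_stage(v) for v in vertices]
    # Programmable stage 2: called once per pixel, with coordinates in [0, 1].
    image = [
        [
            fragment_stage((x / max(width - 1, 1), y / max(height - 1, 1)))
            for x in range(width)
        ]
        for y in range(height)
    ]
    return moved, image


def flip_vertex(position: Vec2) -> Vec2:
    """Custom vertex stage: mirror each vertex across the y axis."""
    return (-position[0], position[1])


def gradient_fragment(uv: Vec2) -> Color:
    """Custom fragment stage: red follows x, green follows y."""
    return (uv[0], uv[1], 0.5)


# Same pipeline, different puzzle pieces.
default_output = run_pipeline([(1.0, 2.0)], 3, 2)
custom_output = run_pipeline([(1.0, 2.0)], 3, 2, flip_vertex, gradient_fragment)
```

Calling `run_pipeline` with no custom stages gives the default output, and passing `flip_vertex` or `gradient_fragment` changes only the step that was swapped, which is the sense in which a shader replaces one piece of the puzzle.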

With a more intuitive grasp of GPU parallel computing and some basic knowledge of neural networks and deep learning, it also becomes clear why the hardware manufacturer NVIDIA has become prominent in the AI industry: graphics rendering and neural networks share a similar computing structure.

Applications

My initial motivation for using shaders was to write generative visual programs that are strong in both creativity and runtime efficiency. Previous tools offered either creative freedom (Processing, p5.js) or runtime efficiency without a friendly syntax. I also wanted good compatibility, so that a program can be distributed across different technology stacks without much conversion effort. This is essential to future new media production workflows.

As a creative person who lacks drawing intuition and technique but is good at coding, I have benefited a lot from coding shaders in GLSL, which has broadened my creativity in color theory, geometric patterns, and 3D graphics. Also, because shaders run efficiently and are concise to write, they suit live coding sets that need visual output, and that output can be sent to different devices, from ordinary screens to LED matrices to projections.

One drawback is that shaders are hard to debug, since GLSL has no string or character data type (at least in the current version). To compensate, Unity developed Shader Graph, a Unity-only visual interface for programming shaders, although using it may cost cross-platform compatibility.

Examples and Technological Analysis

This shader takes a webcam feed and maps it onto a glass-textured surface, with points displaced along the Z-axis according to both a grayscale value and some other method of detecting boundaries in the video frame. However, I cannot tell directly how the author moves points along the Z-axis so that a certain number of nodes correspond to each camera pixel. Understanding that requires a closer look at vertex shaders.
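One plausible reading of the grayscale part can be sketched on the CPU in Python. This is a guess for illustration, not the shader author's actual method: it assumes one vertex per camera pixel, a hypothetical `depth_scale` parameter, and the common Rec. 601 luma weights for converting RGB to gray. In a real vertex shader the brightness lookup would typically be a texture sample, and the boundary detection mentioned above is left out.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]
Vertex = Tuple[float, float, float]


def luminance(rgb: Color) -> float:
    """Grayscale value of an RGB color, using Rec. 601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def displace_grid(frame: List[List[Color]], depth_scale: float = 1.0) -> List[Vertex]:
    """Build one vertex per pixel and push it along Z by the pixel's brightness.

    `frame` is a hypothetical stand-in for a webcam image: rows of RGB
    tuples with channels in [0, 1].
    """
    rows, cols = len(frame), len(frame[0])
    vertices = []
    for j, row in enumerate(frame):
        for i, rgb in enumerate(row):
            x = i / max(cols - 1, 1)  # normalized grid position in [0, 1]
            y = j / max(rows - 1, 1)
            z = depth_scale * luminance(rgb)  # brighter pixels rise higher
            vertices.append((x, y, z))
    return vertices


# A 1x2 "frame": one black pixel and one white pixel.
grid = displace_grid([[(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]], depth_scale=2.0)
```

Here the black pixel stays on the plane and the white pixel is lifted by roughly `depth_scale`, which is the basic height-map behavior the example appears to show.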

This is a classic FFT audio-reactive visual pattern, with the Z-axis mapped to frequency-domain amplitude mixed with some noise. Still, the visuals are appealing and fit the background track well. As with the previous example, I cannot fully tell how individual points are managed, or how new points are added, in the vertex shader.
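The mapping from audio to height can be sketched roughly as follows. This is a minimal illustration under assumptions, not the method used in the example: it uses a naive discrete Fourier transform from the standard library instead of a real FFT, the function names and `height_scale` are hypothetical, and the noise mixture is omitted.

```python
import cmath
import math
from typing import List


def dft_magnitudes(samples: List[float]) -> List[float]:
    """Naive O(n^2) DFT; returns normalized magnitudes for bins 0 .. n/2 - 1."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2):
        acc = sum(
            s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(samples)
        )
        magnitudes.append(abs(acc) / n)
    return magnitudes


def heights_from_audio(samples: List[float], height_scale: float = 1.0) -> List[float]:
    """One Z height per frequency bin: louder frequencies rise higher."""
    return [height_scale * m for m in dft_magnitudes(samples)]


# A pure tone that completes 3 cycles over 32 samples peaks in bin 3.
tone = [math.sin(2 * math.pi * 3 * t / 32) for t in range(32)]
heights = heights_from_audio(tone, height_scale=2.0)
peak_bin = max(range(len(heights)), key=lambda k: heights[k])
```

In a live setup the heights would be recomputed for each new chunk of audio and handed to the vertex stage, so the surface moves with the track.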