Understanding Cortical Networks Related to Speech Using Deep Learning on ECoG Data

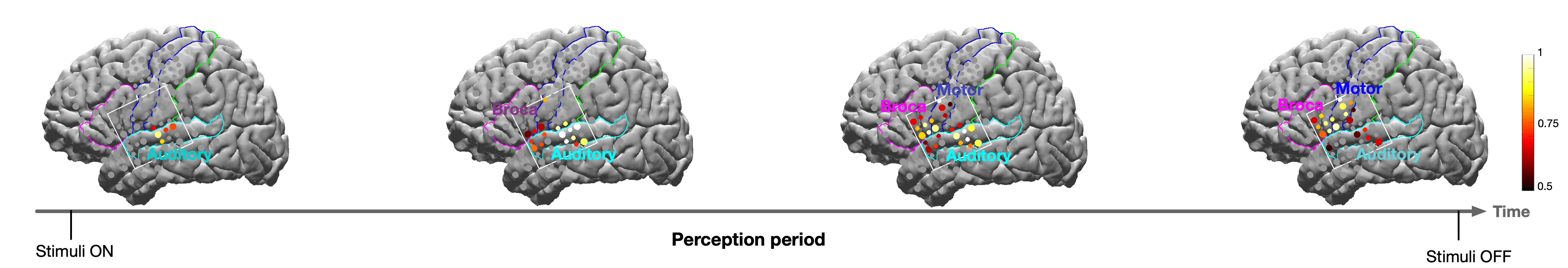

The plot shows the averaged evolution of the attention mask embedded in the encoder for a subject with the hybrid grid. The color in each electrode indicates the value of the attention mask, following the color bar. The white square shows the 8×8 grid used in the experiment. A similar dynamic is also observed in the other HB subject.

Project Summary

Despite significant advances in neuroscience, the dynamics by which neural activity propagates across the cortex while we think of a word and produce it remain poorly understood. This project aims to develop novel, data-driven approaches for understanding the functions and interactions of various brain regions by leveraging rare neural recordings obtained with electrocorticography (ECoG) sensors while neurosurgical patients participate in tasks involving language perception, semantic access, and word production. We will produce a set of validated novel computational tools for estimating neural representations and their dynamics, and will elucidate the cortical networks subserving perception, semantic access, and production of speech. Although these tools will be developed for ECoG data, the proposed frameworks are applicable to other neural data modalities, including fMRI and EEG, and thus have broad applications in neuroscience. The ability to robustly translate between speech and its neural representations is vital to the development of speech prosthetics, which would allow patients with degenerative conditions (such as amyotrophic lateral sclerosis) or neurological damage (such as locked-in syndrome) to drive a speech synthesizer via control from intact cortical structures. The network connectivity tools could shed light on the propagation dynamics of epileptic seizures as well as on how cortical communication, when impaired, gives rise to language aphasias and disconnection syndromes. Furthermore, the decoding and network connectivity tools could help develop novel language mapping approaches for brain surgery without the associated risks of electrical stimulation mapping.

The project consists of three core thrusts:

- developing neural decoders for language processing: The neural decoders will be based on deep-learning architectures able to learn a transformation between neural signals and the speech heard by the patient, the speech produced by the patient, or the semantic concept represented by the stimulus word.

- developing directed connectivity models: The connectivity models will generalize and coalesce current approaches for estimating the task-dependent, time-varying directed connectivity between cortical regions.

- experimental validation: Lastly, the findings from the decoders and connectivity models will be experimentally validated using clinical electrical stimulation data and cortico-cortical evoked potential (CCEP) stimulation experiments.

Current approaches to modeling ECoG data have mostly focused on variants of linear models and on speech acoustics. This project will harness the potential of highly non-linear deep networks for modeling neural responses to both speech acoustics and access to semantics. Additionally, tools for inferring directed connectivity and interactions among neural regions will provide a detailed characterization of the network dynamics, which most ECoG decoding studies largely overlook.

Participants

Yao Wang, Principal Investigator, Lab Page

Adeen Flinker, Co-Principal Investigator, Lab Page

Ran Wang, Ph.D. student

Amirhossein Khalilian-Gourtani, Ph.D. student

Xupeng Chen, Ph.D. student

Leyao Yu, Research Associate

Diana Gomez, B.S. student (Summer Research)

Asma Khursheed, B.S. student (Summer Research)

Sponsor

This material is based upon work supported by the National Science Foundation with Grant Number 1912286.

Recent Progress

Developing neural decoders for language processing

Speech neural decoding with a differentiable speech synthesizer (2021)

Decoding spoken speech from human neural activity can enable neuro-prosthetics. Deep learning has driven recent advances in speech neural decoding. The scarcity of training data, however, still hinders a complete brain-computer-interface (BCI) application. In this work, we propose a novel speech neural decoding framework built around a differentiable speech synthesizer. The framework achieves high accuracy on several objective metrics, such as correlation coefficient (CC), short-time objective intelligibility (STOI), and mel-cepstral distortion (MCD), while reducing the need for a very large dataset.
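As a rough illustration of two of the objective metrics named above, the sketch below computes a Pearson correlation coefficient and a mel-cepstral distortion with NumPy. The function names and the array layout (frames by coefficients) are assumptions made for this example, not the project's evaluation code. STOI is left out because it needs a dedicated implementation.

```python
import numpy as np

def pearson_cc(ref, est):
    """Pearson correlation between two equally shaped arrays, over all entries."""
    ref = np.asarray(ref, dtype=float).ravel()
    est = np.asarray(est, dtype=float).ravel()
    ref_c = ref - ref.mean()
    est_c = est - est.mean()
    return float(ref_c @ est_c / (np.linalg.norm(ref_c) * np.linalg.norm(est_c)))

def mel_cepstral_distortion(ref_mcep, est_mcep):
    """Mean MCD in dB over frames.

    Inputs are (frames, coeffs) arrays of mel-cepstral coefficients.
    Coefficient 0 (overall energy) is skipped, following common practice.
    """
    ref_mcep = np.asarray(ref_mcep, dtype=float)
    est_mcep = np.asarray(est_mcep, dtype=float)
    diff = ref_mcep[:, 1:] - est_mcep[:, 1:]
    per_frame = (10.0 / np.log(10.0)) * np.sqrt(2.0 * np.sum(diff ** 2, axis=1))
    return float(per_frame.mean())
```

A higher CC and a lower MCD both indicate a closer match between the decoded and the reference speech; the two frame sequences are assumed to be time-aligned before either function is called.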

For more details, see this page: Decoding Feedforward and Feedback Speech Control in Human Cortex (please contact us for the password to access this page).

Generative Adversarial Network-based synthesizer (2020)

We propose a transfer learning approach that uses a pre-trained GAN to disentangle the representation and generation layers for ECoG-to-audio decoding. The approach achieves high reconstruction accuracy.

By visualizing the attention mask embedded in the encoder, we observe brain dynamics that are consistent with findings from previous studies investigating dynamics in the superior temporal gyrus (STG), pre-central gyrus (motor), and inferior frontal gyrus (IFG).

For more details, see this page: Stimulus Speech Decoding From Human Cortex With Generative Adversarial Network Transfer Learning, ISBI 2020 Best Paper Finalist

We also evaluate the speech decoding architecture on multiple language tasks (audio repetition, audio naming, sentence completion, word reading, and picture naming) using the above approach. Here is a summary of the decoding results.

Developing directed connectivity models

Directed connectivity analysis via autoregressive model

In this part of the project, we develop techniques to estimate the directed connectivity between cortical regions. Directed connectivity describes the directional influence of one neural unit over another, and in a sense is the union of the structural and functional connectivity. Towards this goal, we have developed a toolbox of algorithms for investigating the dynamic directed connectivity between different brain regions. A multivariate autoregressive (MVAR) model represents the effect of the signal at one electrode on the signal at another in a data-driven manner. The toolbox supports different pre-processing techniques and Granger causality measures. Furthermore, an automatic active electrode selection algorithm eliminates the need to manually select a few electrodes (a common difficulty in the literature). From the fitted MVAR coefficients, different measures of connectivity can be calculated and analyzed. We further cluster the connectivity dynamics between different electrodes in an unsupervised manner. The prototypical temporal connectivity patterns for the identified clusters follow the expected dynamics of different tasks, and the clustered connections allow for visualizing the connectivity dynamics in the brain.
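To make the MVAR and Granger causality idea concrete, here is a minimal NumPy sketch. It fits the model x[t] = A_1 x[t-1] + ... + A_p x[t-p] + e[t] by ordinary least squares and computes a time-domain Granger causality as the log ratio of residual variances with and without the source channel. All function names, the least-squares estimator, and the toy simulation are assumptions for illustration; this is not the project's toolbox, and it assumes zero-mean, stationary signals.

```python
import numpy as np

def lagged_design(x, order):
    """Row for time t holds [x[t-1], ..., x[t-order]] side by side. x: (T, channels)."""
    T = x.shape[0]
    return np.hstack([x[order - k:T - k] for k in range(1, order + 1)])

def fit_mvar(x, order):
    """Least-squares MVAR fit.

    Returns A with shape (order, ch, ch), where A[k, i, j] is the effect of
    channel j at lag k+1 on channel i, and the residuals (T - order, ch).
    """
    X = lagged_design(x, order)
    Y = x[order:]
    B, *_ = np.linalg.lstsq(X, Y, rcond=None)   # B: (order * ch, ch)
    resid = Y - X @ B
    ch = x.shape[1]
    A = B.reshape(order, ch, ch).transpose(0, 2, 1)
    return A, resid

def granger_causality(x, order, source, target):
    """Log ratio of restricted to full residual power for source -> target."""
    _, resid_full = fit_mvar(x, order)
    # Restricted model: predict the target from the past of every channel except the source.
    keep = [c for c in range(x.shape[1]) if c != source]
    X_r = lagged_design(x[:, keep], order)
    y = x[order:, target]
    b, *_ = np.linalg.lstsq(X_r, y, rcond=None)
    resid_r = y - X_r @ b
    return float(np.log(np.mean(resid_r ** 2) / np.mean(resid_full[:, target] ** 2)))

def simulate_driven_pair(T=2000, seed=0):
    """Toy data (hypothetical): channel 0 drives channel 1 with a one-sample delay."""
    rng = np.random.default_rng(seed)
    x = np.zeros((T, 2))
    for t in range(1, T):
        x[t, 0] = 0.5 * x[t - 1, 0] + rng.standard_normal()
        x[t, 1] = 0.8 * x[t - 1, 0] + rng.standard_normal()
    return x
```

On the toy data, `granger_causality(x, 2, source=0, target=1)` should be clearly positive while the reverse direction stays near zero. Time-varying connectivity of the kind described above could be approximated by applying the same fit within sliding windows, although that is only one of several possible choices.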

For more details, see this page: Directed Connectivity Models (please contact us for the password to access this page).

Directed connectivity analysis via switching dynamics

Neural computation during a task involves the orchestration of different cortical processes. In many cases, the distributed interaction between different regions is likely to change over time and to depend on the context or the task. To correctly identify the information flow between regions, the model must account for these dynamics while remaining simple enough that causality analysis is still possible. Here, we use a class of models known as switching linear dynamical systems (SLDS). This class of models allows us to estimate the brain state in an unsupervised fashion while the connectivity can still be measured via Granger causality. The limited stability of existing methods in the literature hinders their applicability to neural data, and improving it is an active area of research.
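The generative idea behind a switching model can be sketched in a few lines of NumPy. The example below samples a simplified, directly observed variant (a switching autoregressive process): a discrete Markov state selects which dynamics matrix drives the signal at each step. A full SLDS additionally has a latent continuous state and an observation model, and its discrete states are inferred rather than given. The function names, parameters, and the per-state least-squares fit with known states are assumptions for illustration only.

```python
import numpy as np

def simulate_slds(A, P, T, noise_std=0.1, seed=0):
    """Sample a switching autoregressive process.

    A: (K, d, d) dynamics matrix for each discrete state.
    P: (K, K) row-stochastic transition matrix of the discrete state.
    Returns the state sequence z (T,) and the signal x (T, d).
    """
    rng = np.random.default_rng(seed)
    K, d, _ = A.shape
    z = np.zeros(T, dtype=int)
    x = np.zeros((T, d))
    x[0] = rng.standard_normal(d)
    for t in range(1, T):
        z[t] = rng.choice(K, p=P[z[t - 1]])              # discrete state switches
        x[t] = A[z[t]] @ x[t - 1] + noise_std * rng.standard_normal(d)
    return z, x

def fit_dynamics_per_state(z, x, K):
    """Least-squares estimate of each state's dynamics, given KNOWN states.

    In a real SLDS the states are unknown and must be inferred jointly
    with the dynamics; this shortcut only illustrates the model structure.
    """
    A_hat = []
    for k in range(K):
        idx = np.flatnonzero(z[1:] == k) + 1             # time points governed by state k
        B, *_ = np.linalg.lstsq(x[idx - 1], x[idx], rcond=None)
        A_hat.append(B.T)                                # x[t] = A x[t-1], so A = B^T
    return np.stack(A_hat)
```

With two states whose off-diagonal entries point in opposite directions, the recovered matrices show the direction of influence between the two channels reversing when the state switches, which is the kind of state-dependent connectivity the text describes.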

The report HERE introduces SLDS models and Granger causality and provides the theoretical background required for understanding the algorithms and procedures. We further show preliminary experimental results on ECoG signals.

Related Publications

Distributed feedforward and feedback cortical processing supports human speech production (The press release about our work can be found here)

Stimulus Speech Decoding from Human Cortex with Generative Adversarial Network Transfer Learning

Reconstructing Speech Stimuli From Human Auditory Cortex Activity Using a WaveNet Approach

Long-term prediction of μECOG signals with a spatio-temporal pyramid of adversarial convolutional networks