Explore ImageNet. ImageNet sample images, Kaggle ImageNet Mini 1000, What surprises you about this data set? What questions do you have? Thinking back to last week’s assignment, can you think of any ethical considerations around how this data was collected Are there privacy considerations with the data?

I was surprised by the simplicity of the sample images. For example, on ImageNet sample imges on github, often there were images with just a part of an animal (like hen and cock). Most also had a simplified, or blurred background to focus on the object even more. Some even had animals in their unnatural environment — the jellyfish sample image was artificial jellyfish in a fishtank. I thought this contradicted to what most people would use as an input to these ML Algorithms. Additionally, I thought it was note worthy to state that there were only one sample image per object, which would greatly decrease the confidence level of the algorithm. Additionally, for the category of “groom,” there were multiple people in the image, making it difficult for an accurate learning. I am also questioning to what extent the datas will skew against or for a specific race, gender, age, etc. For example, on the category of “groom,” it’s a family of East Asian people. How would this affect the outputs when a user uses a black family as an input? What about two grooms getting married? What if it’s interracial?

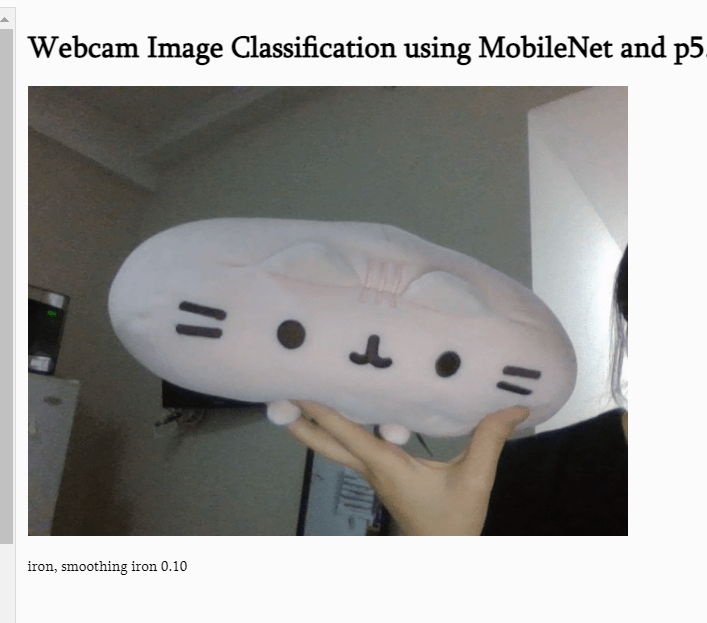

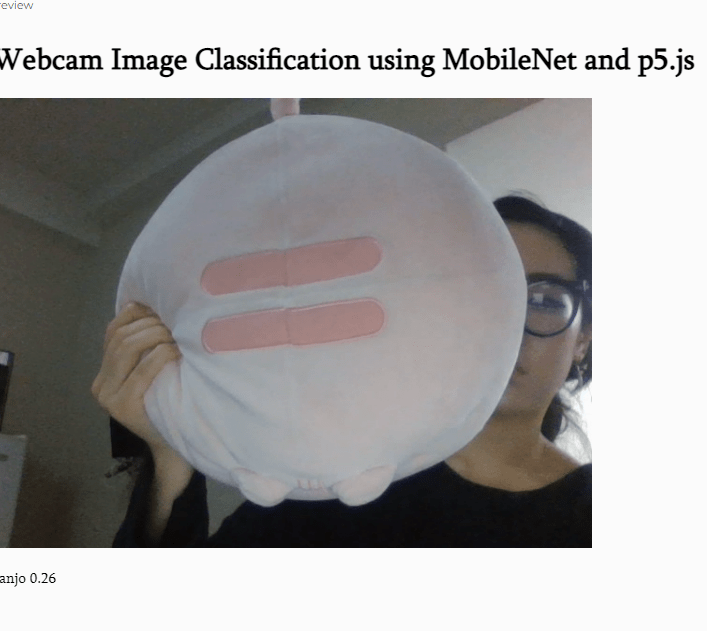

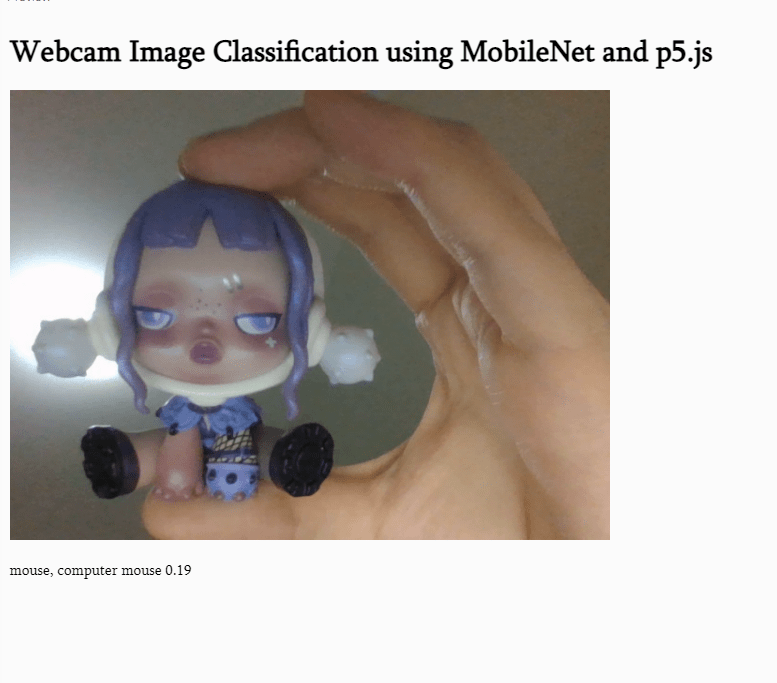

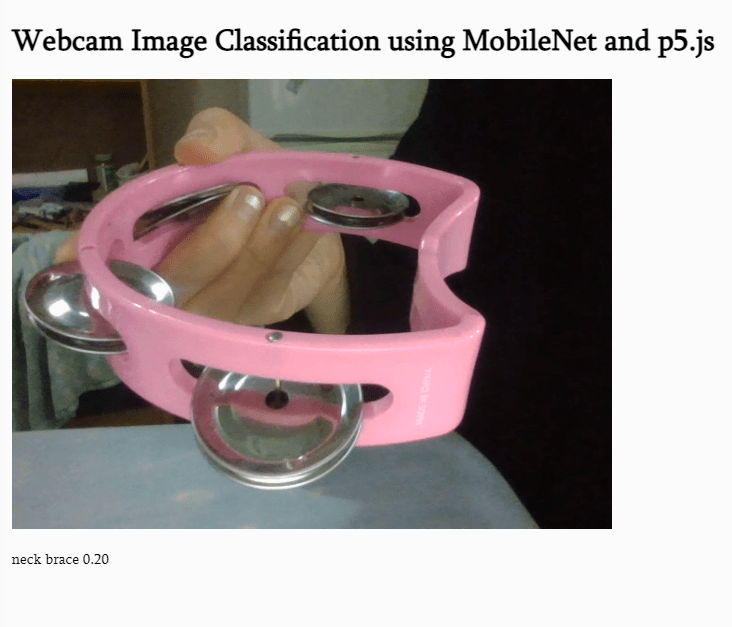

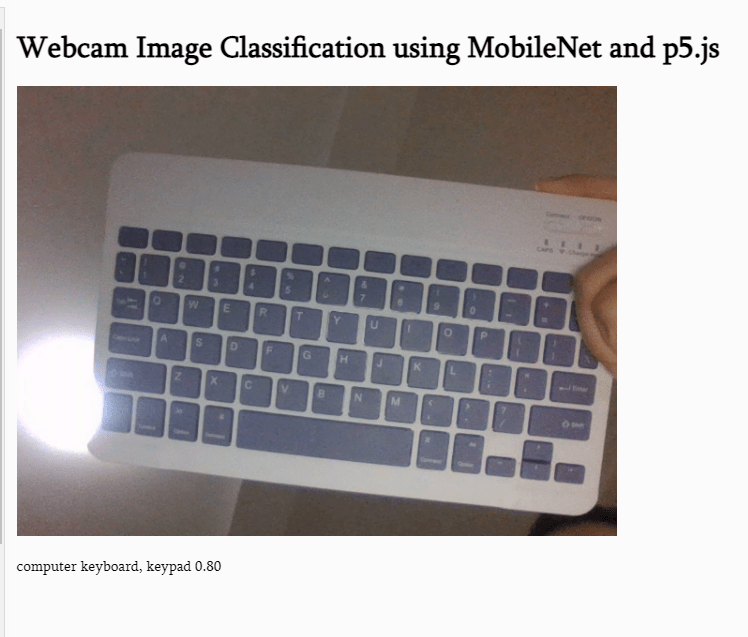

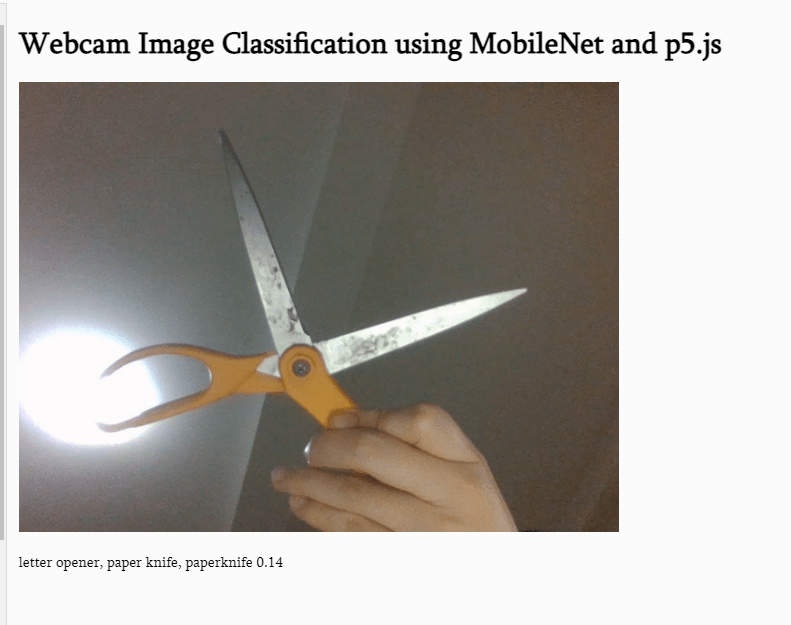

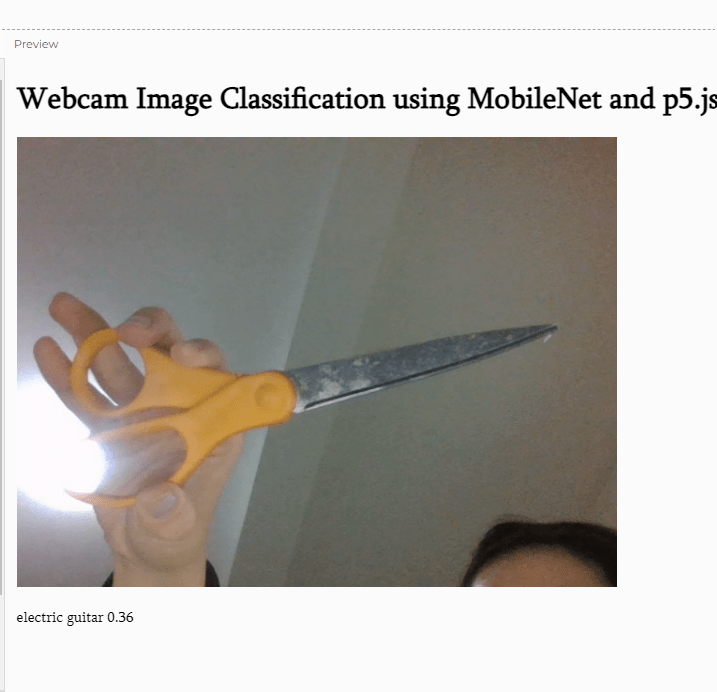

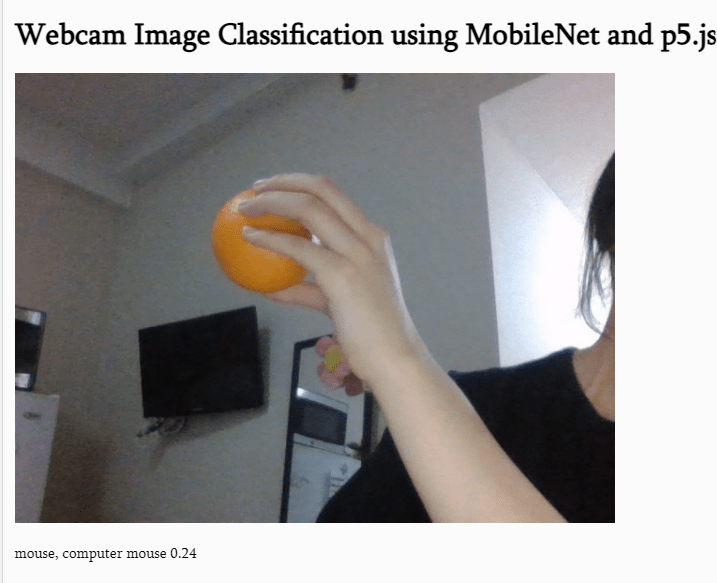

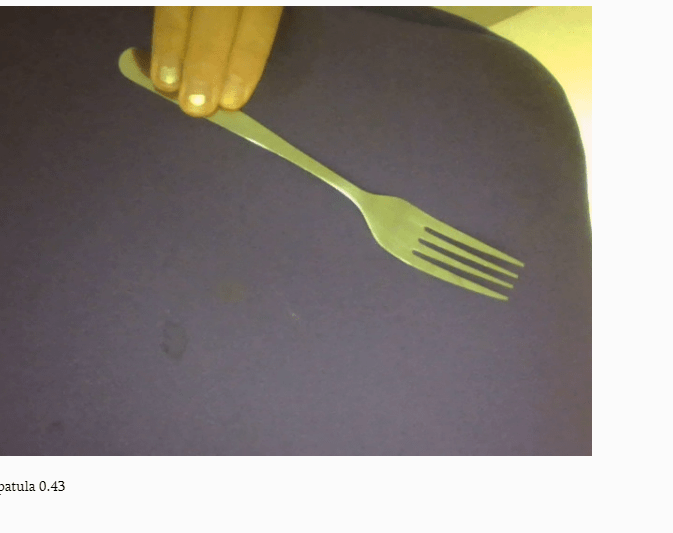

Using the ml5.js examples above, try running image classification on a variety of images. Pick at least 10 objects in your room. How many of these does it recognize? What other aspects of the image affect the classification, including but not limited to position, scale, lighting, etc.

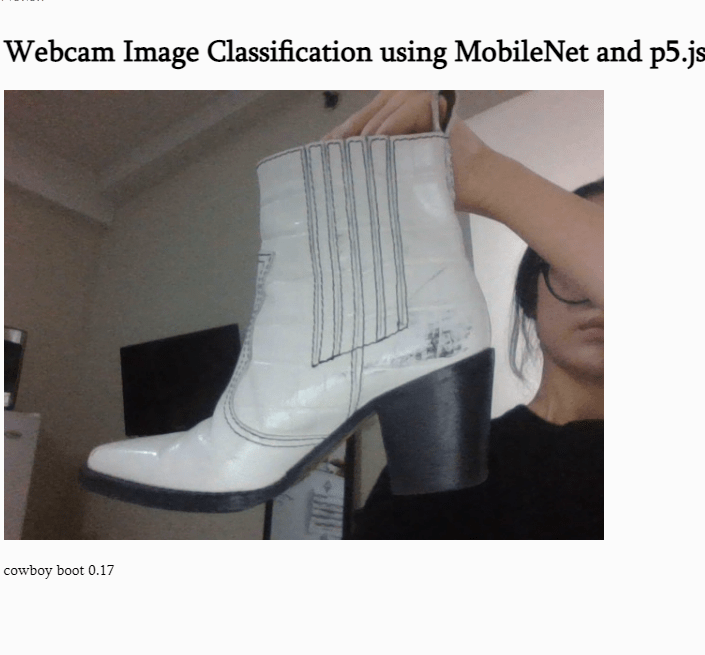

I was surprised to see that the ML was able to get cowboy boots correctly. Not only was I shocked by the fact that it recognized a show, but I was more shocked by the fact that the ML had a specific category of “cowboy boots.”

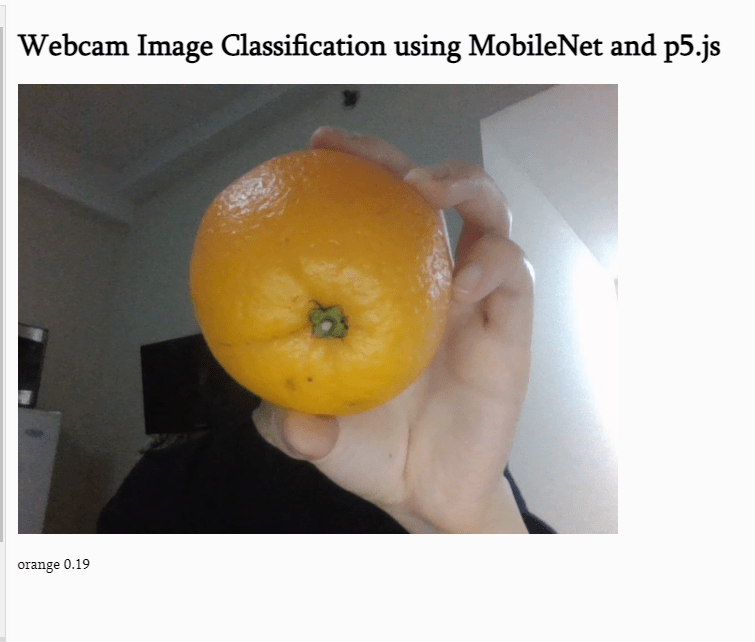

I also thought it was funny how some objects were identified as two different things depending on the distance and the angle of the object. For example, orange was easily spotted when it was up close, but once it got far away, it was read as a computer mouse.

Doing this exercise made me realize how limited each data base can be. I am maybe thinking that it might be better to have a more specific image identifier, so that the data samples can be bigger. For example, there could be an image identifier for JUST bird eggs. On this identifier, the creator could have more image samples per egg, instead of having 1-2 that present a specific type of an egg in a broader image identifier.