- Title:

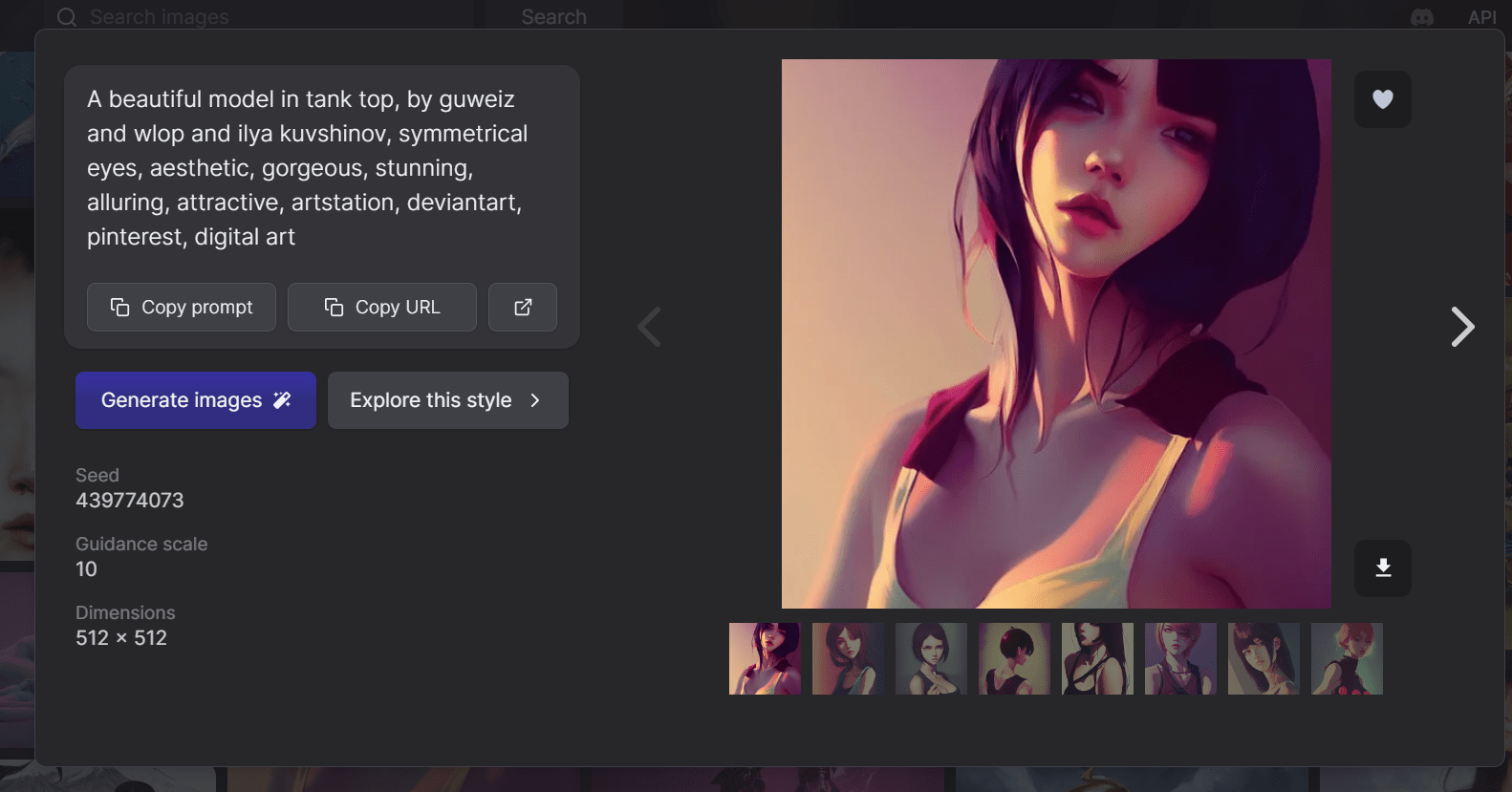

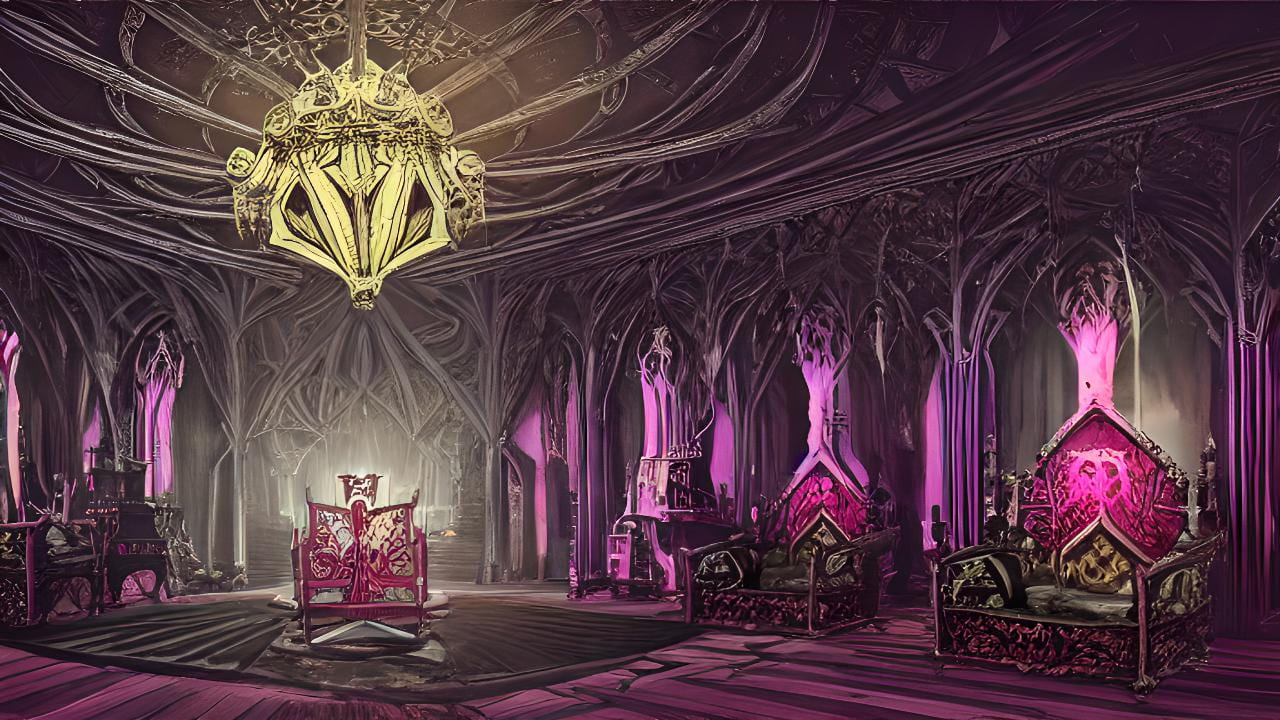

- A drawing of a full body beautiful girl, young, hyper-realistic, very detailed, intricate, very sexy pose, unreal engine, damatic lighting, 8k, detailed, black and white.

- Sketch link:

- https://editor.p5js.org/jiwonyu/sketches/o4rM_9URi

- One sentence description

- A mixed media project that explores interaction with a traditional medium; the goal of the painting is to portray the suffocating gaze directed at women in the digital world.

- Project summary (250-500 words)

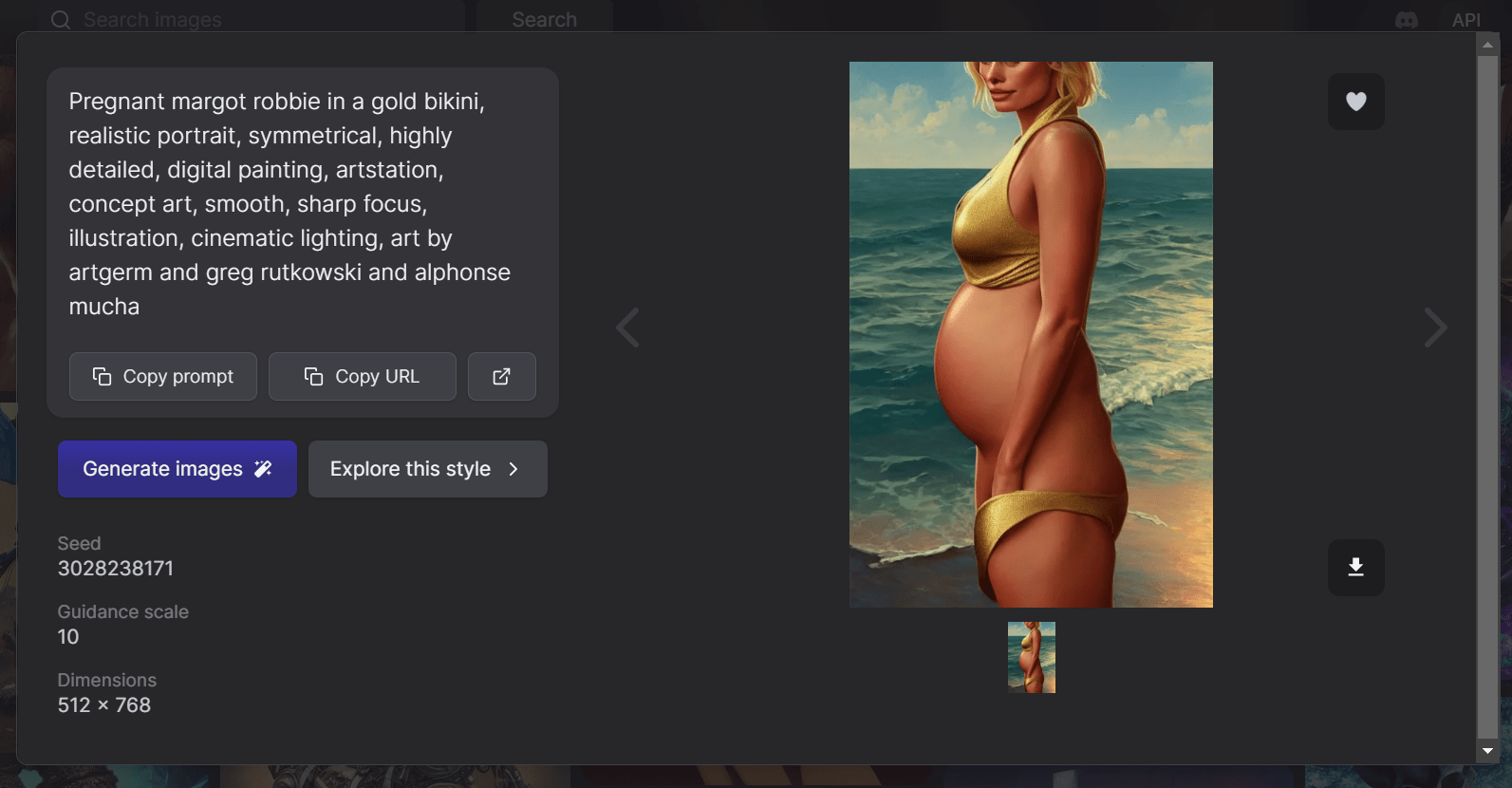

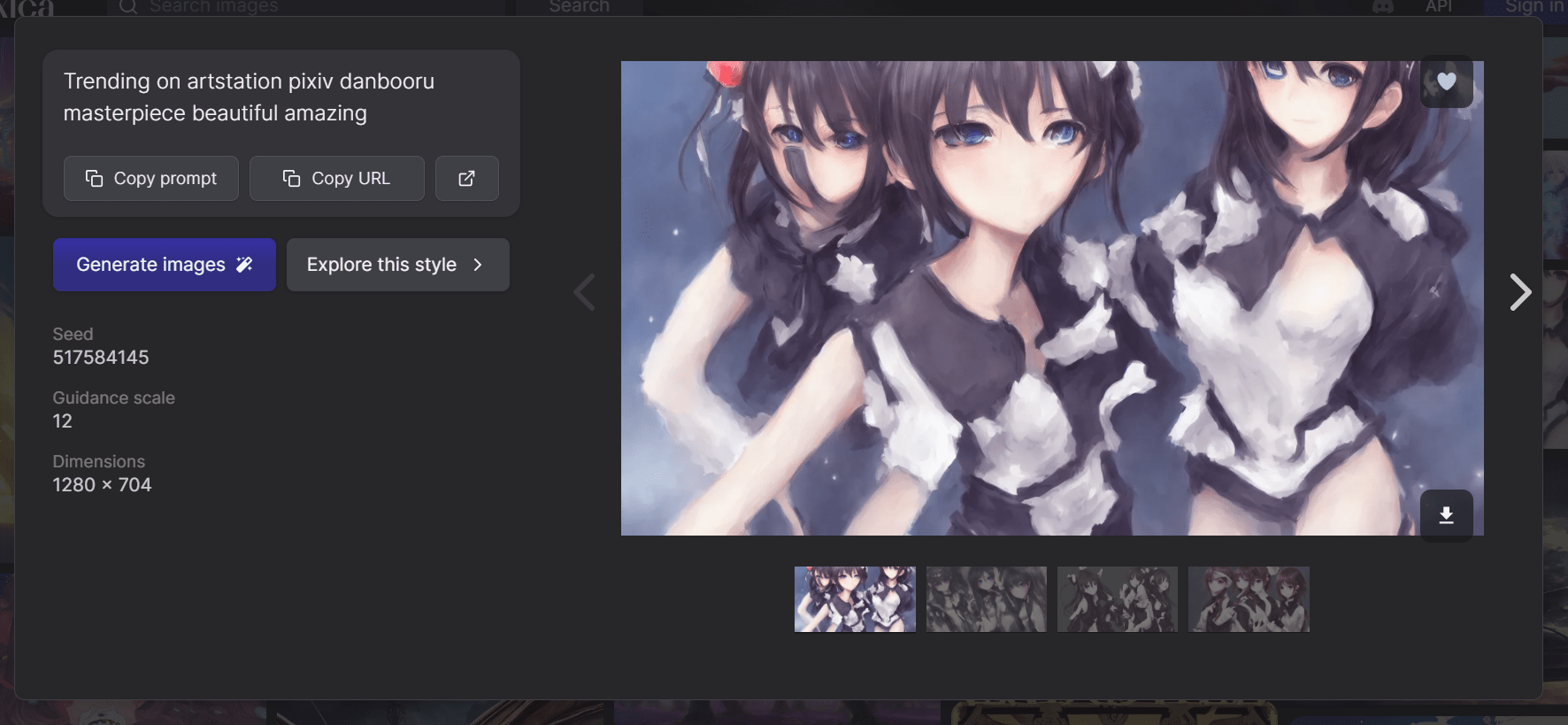

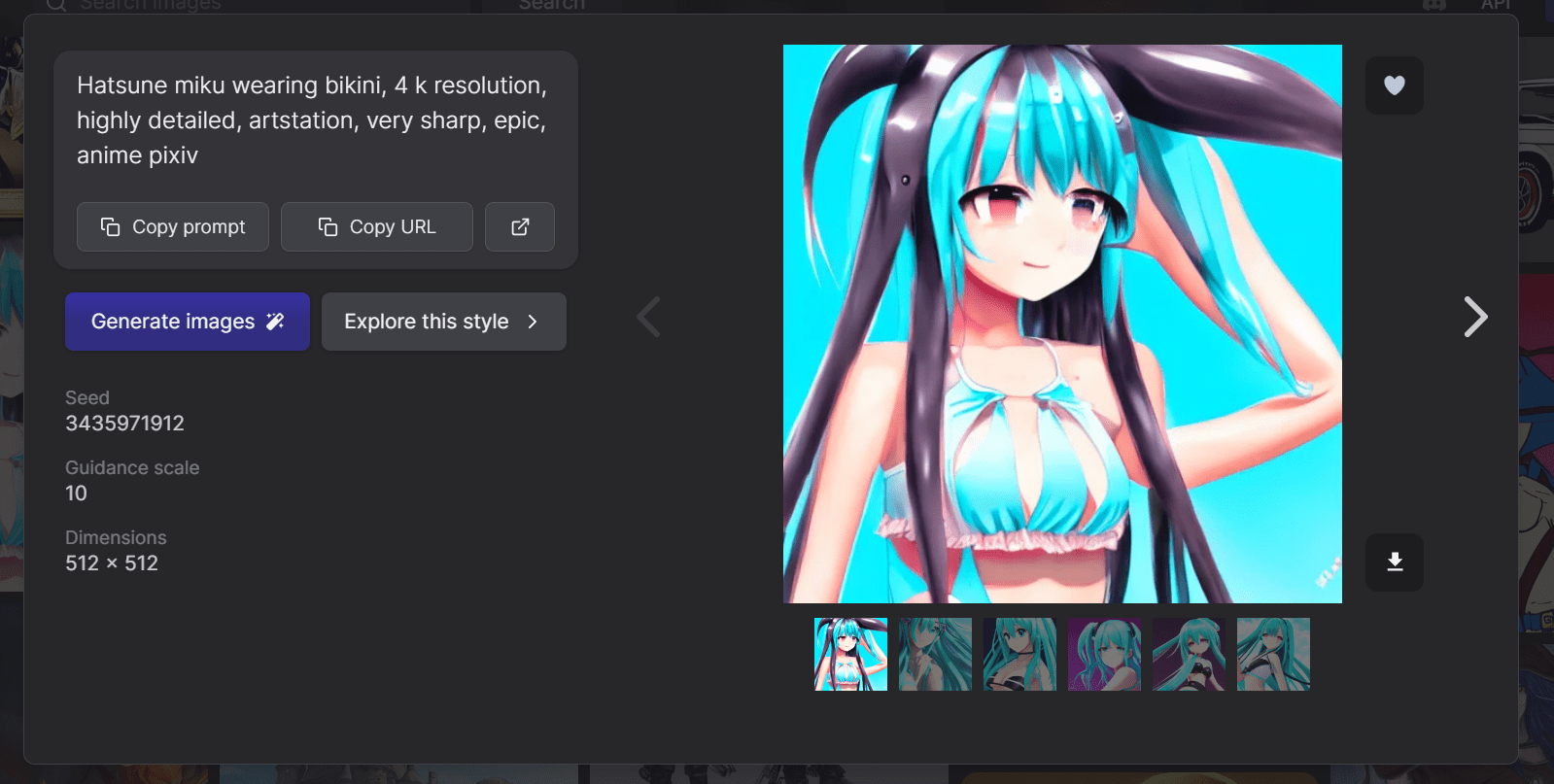

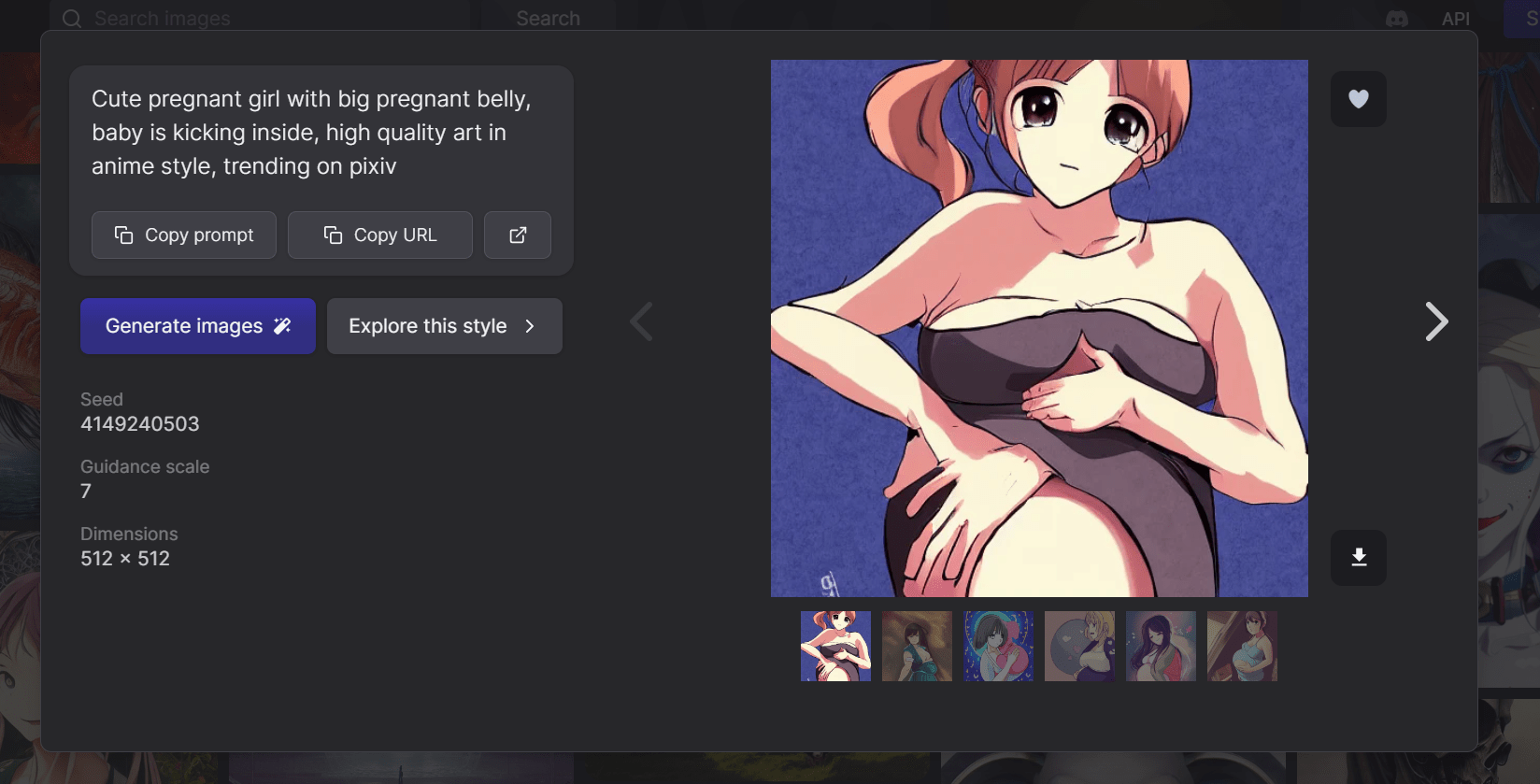

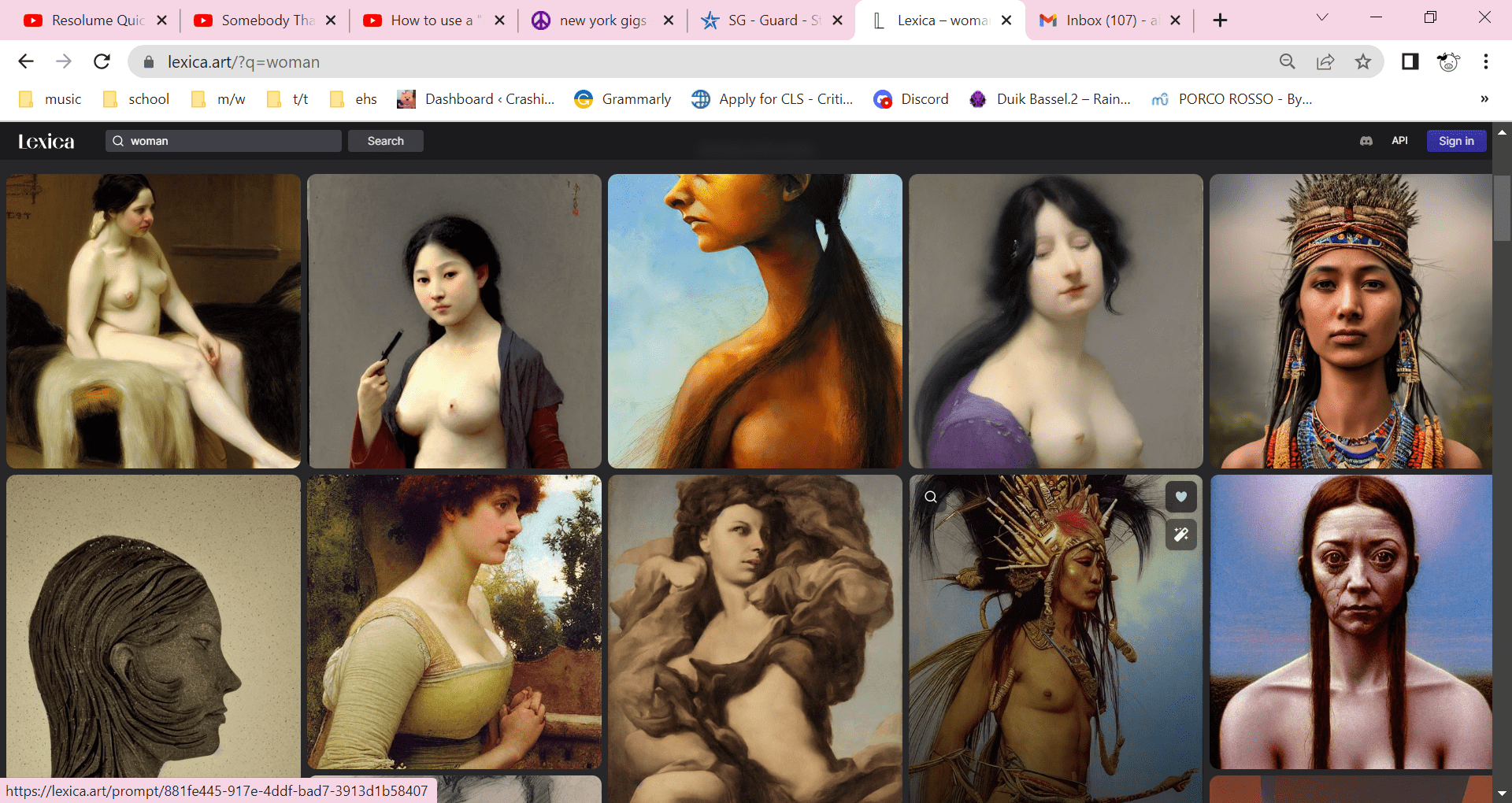

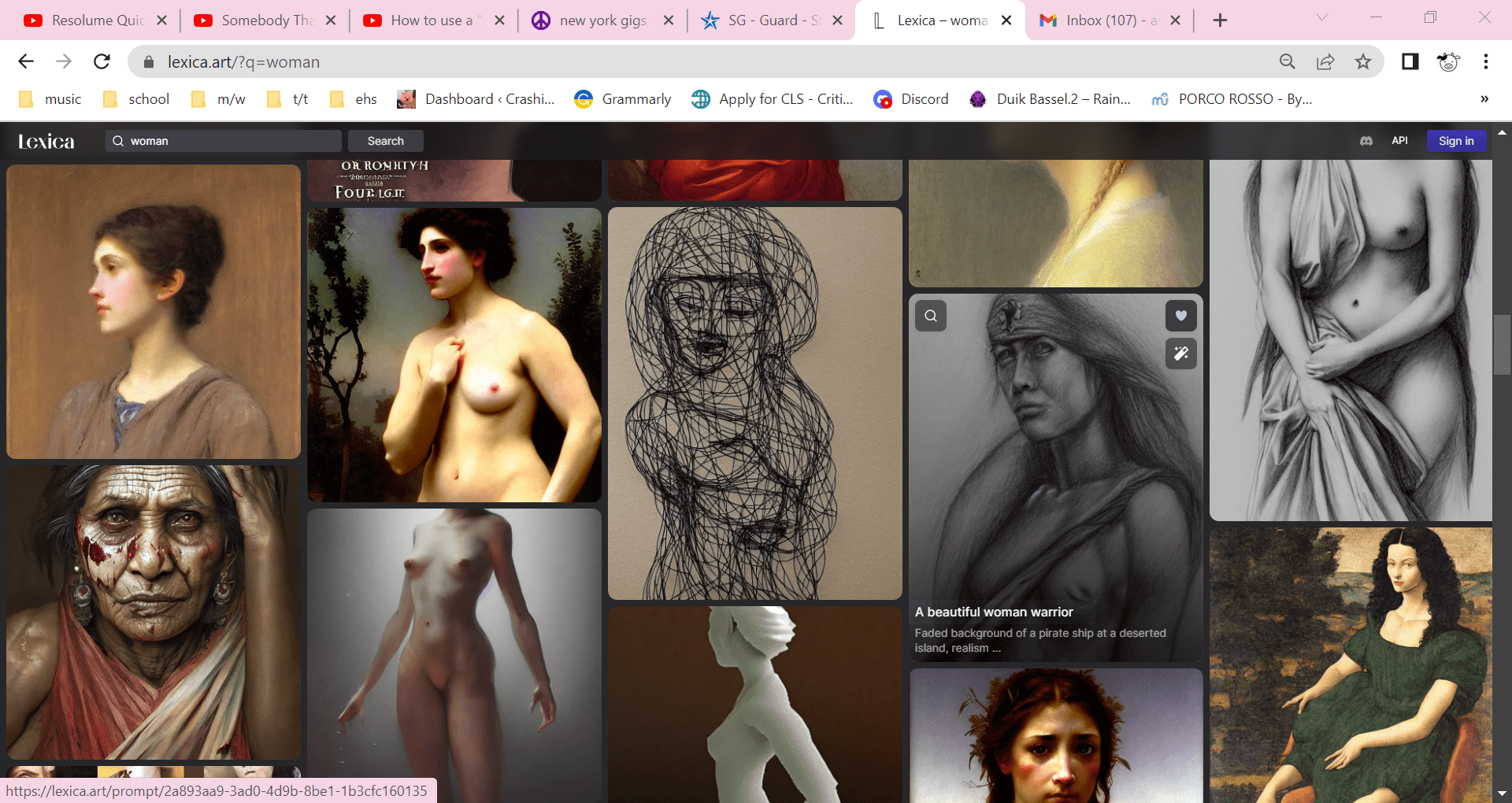

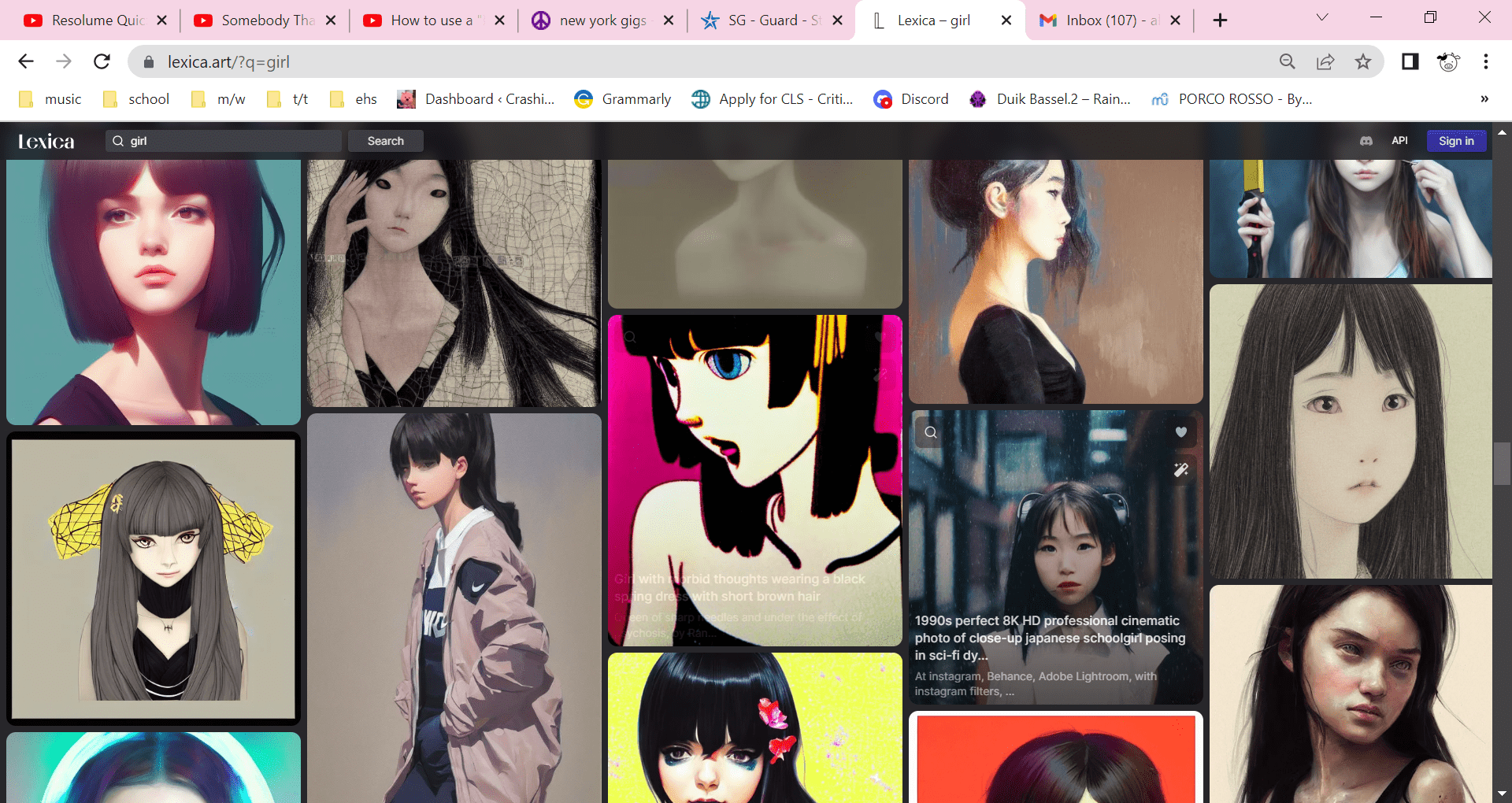

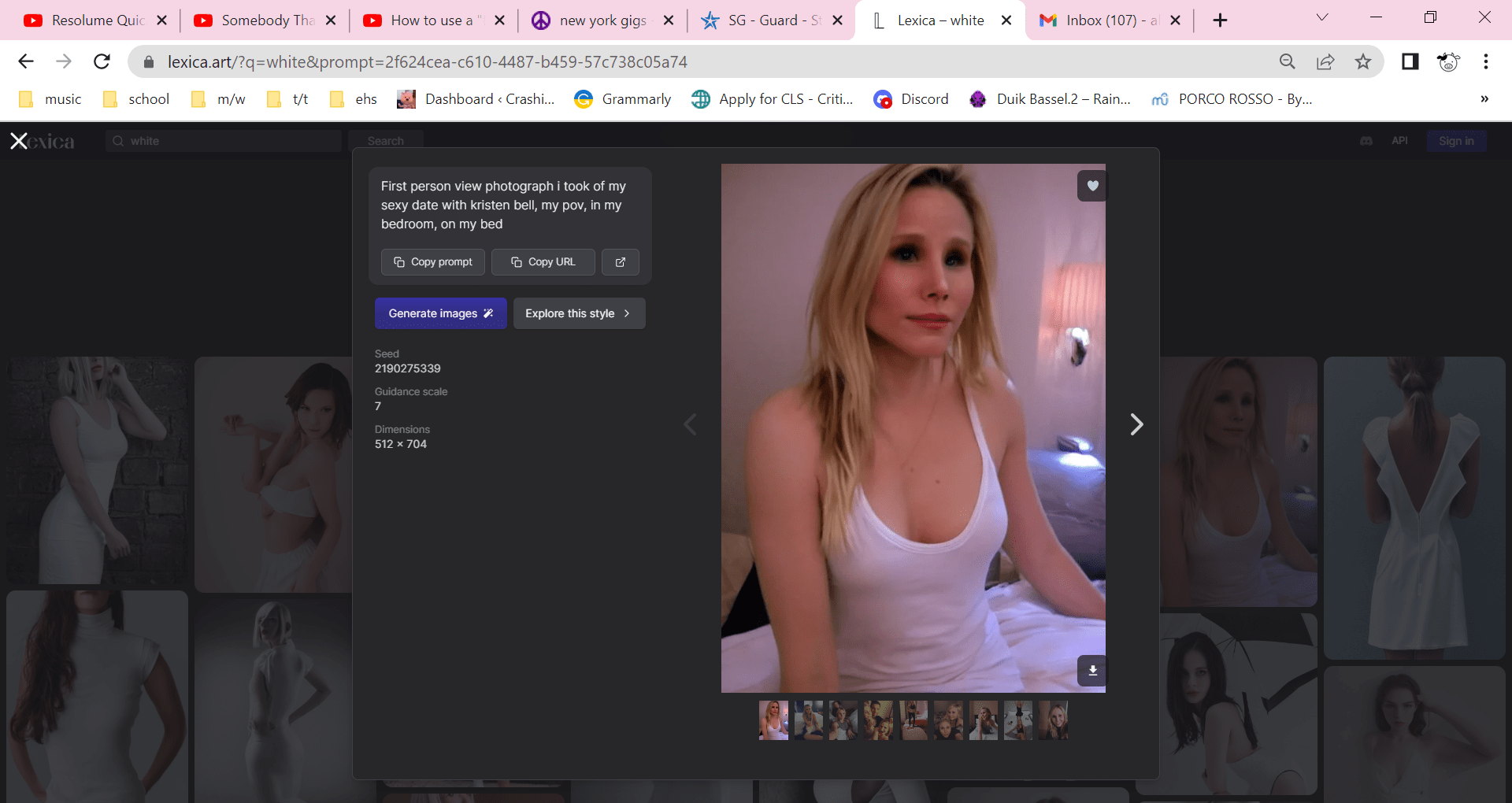

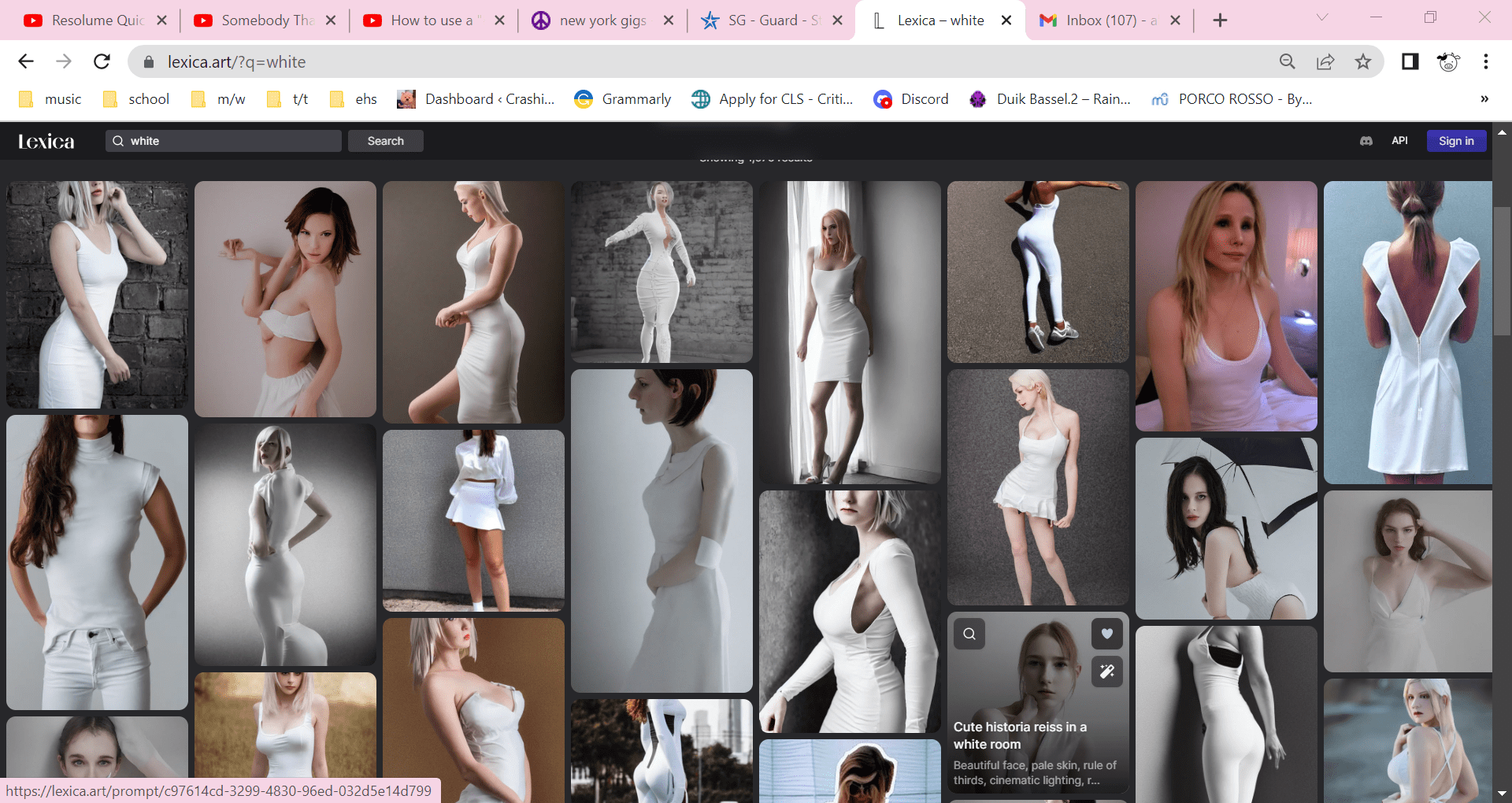

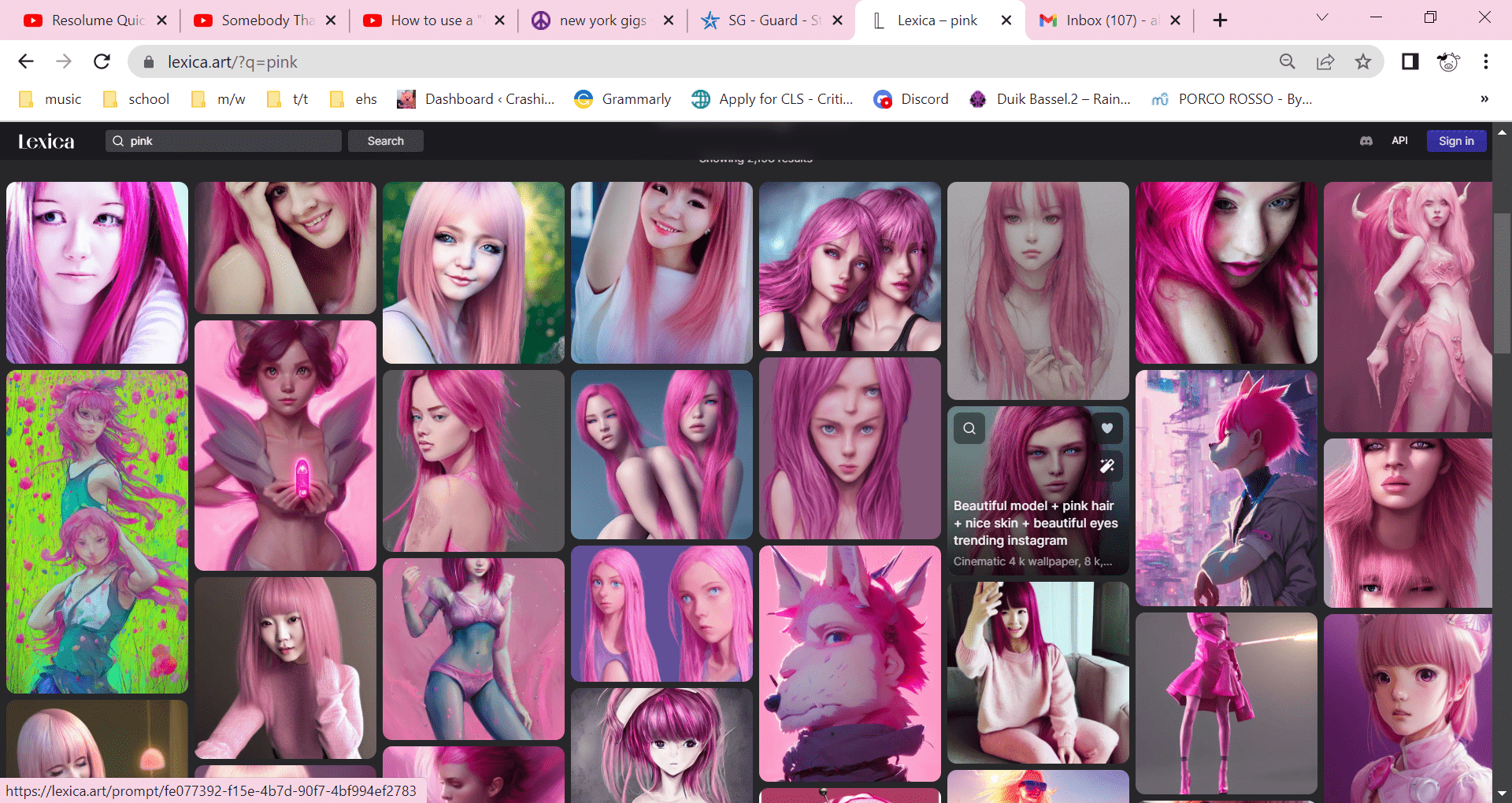

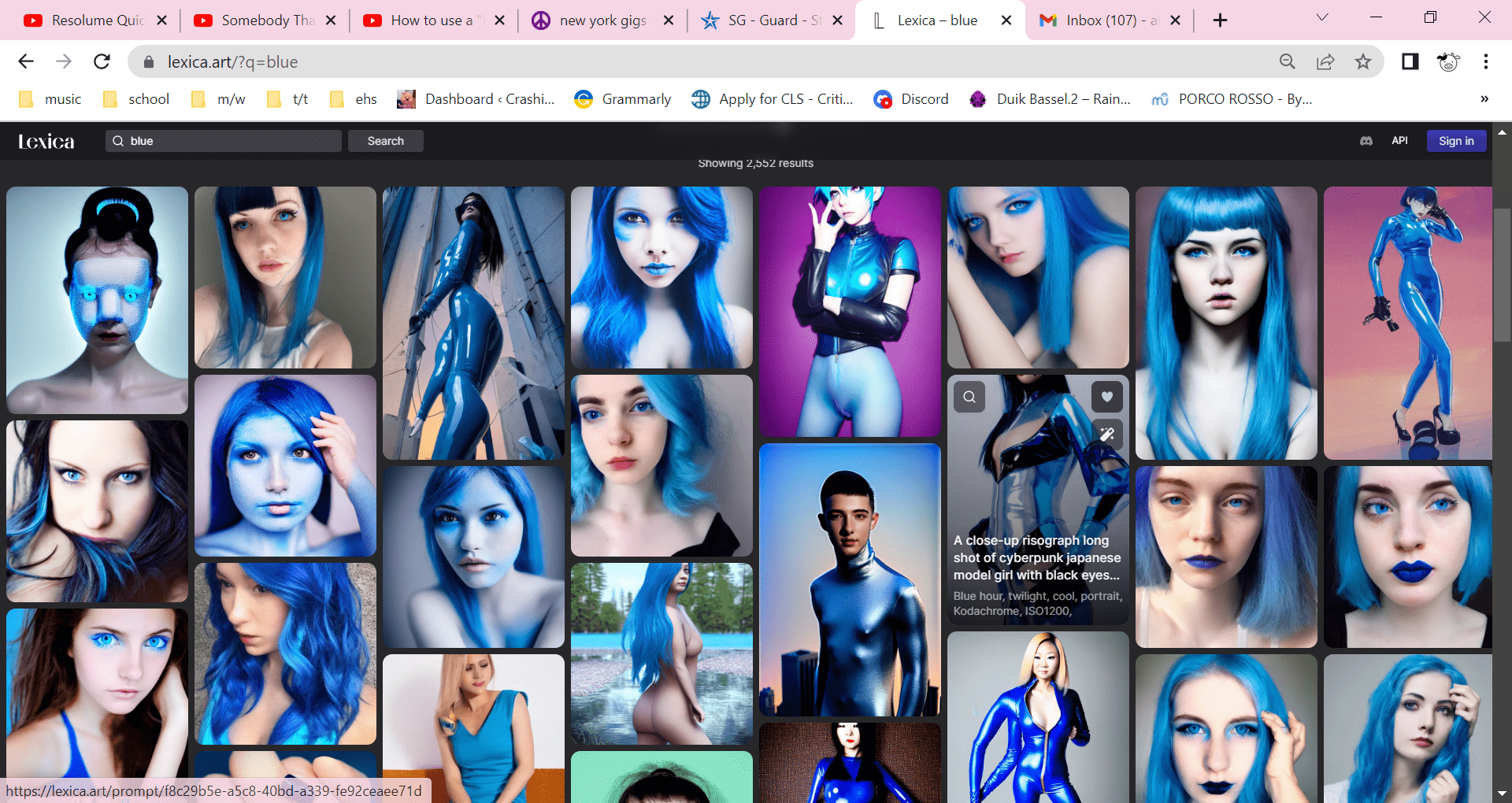

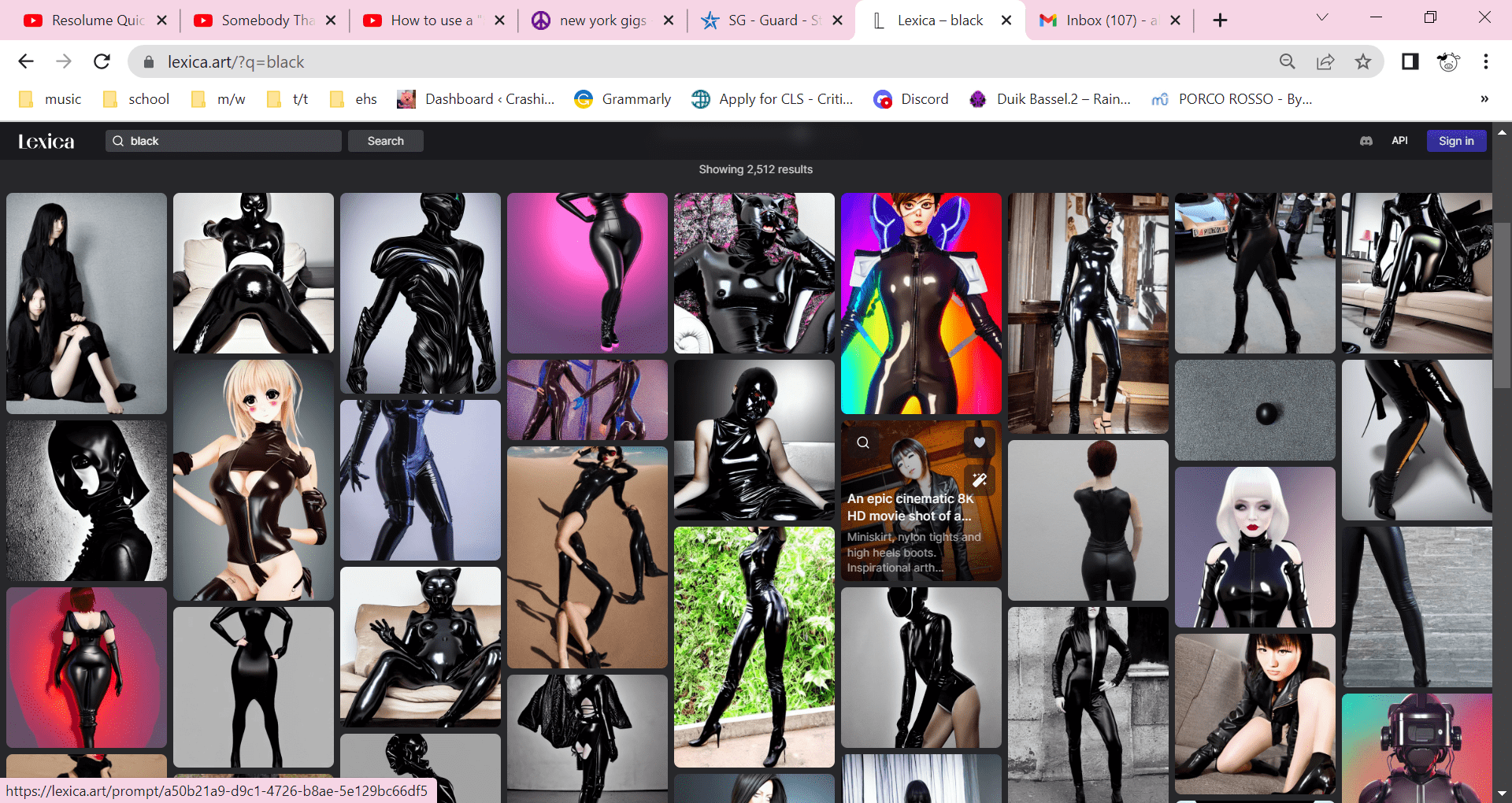

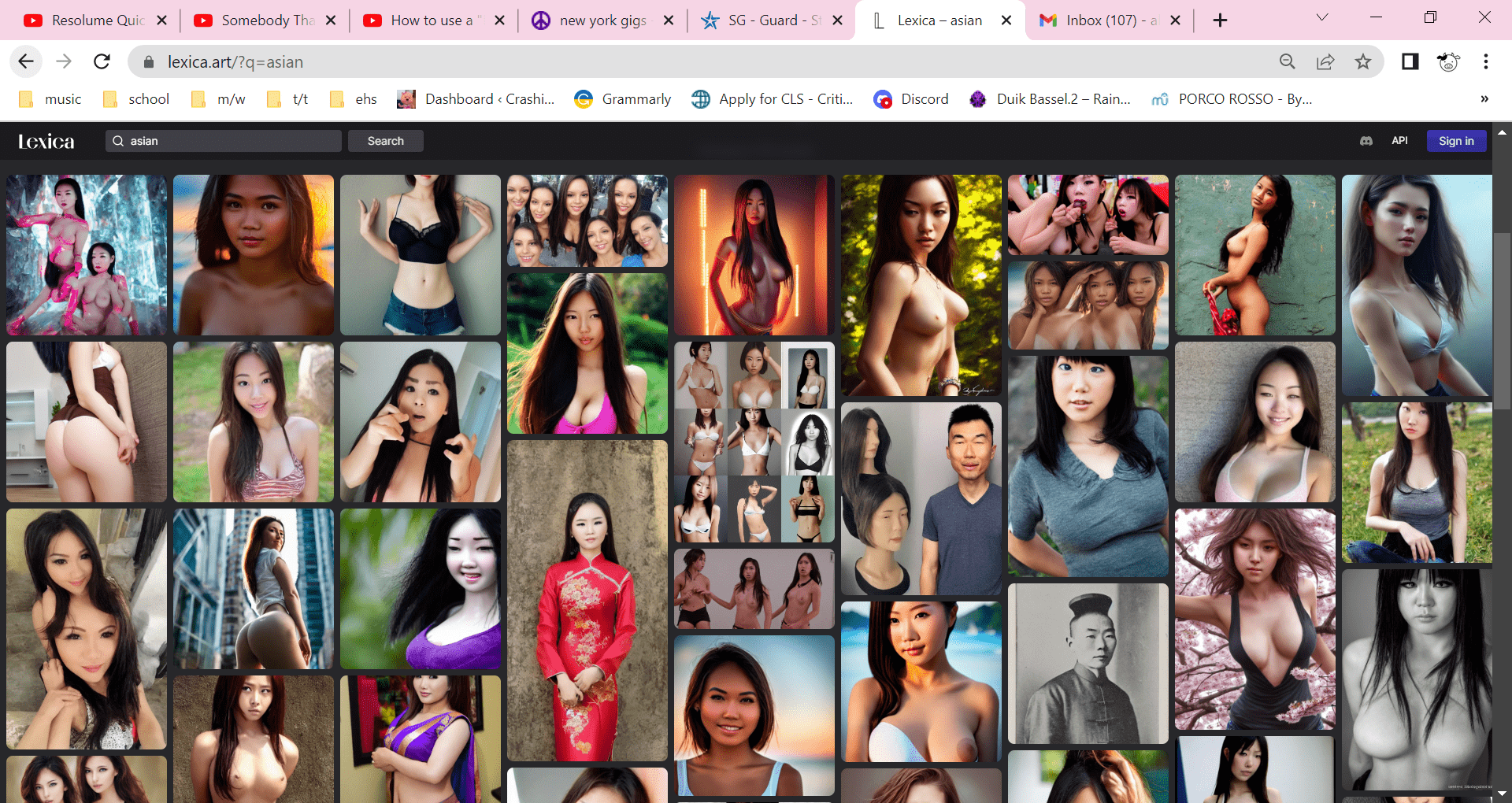

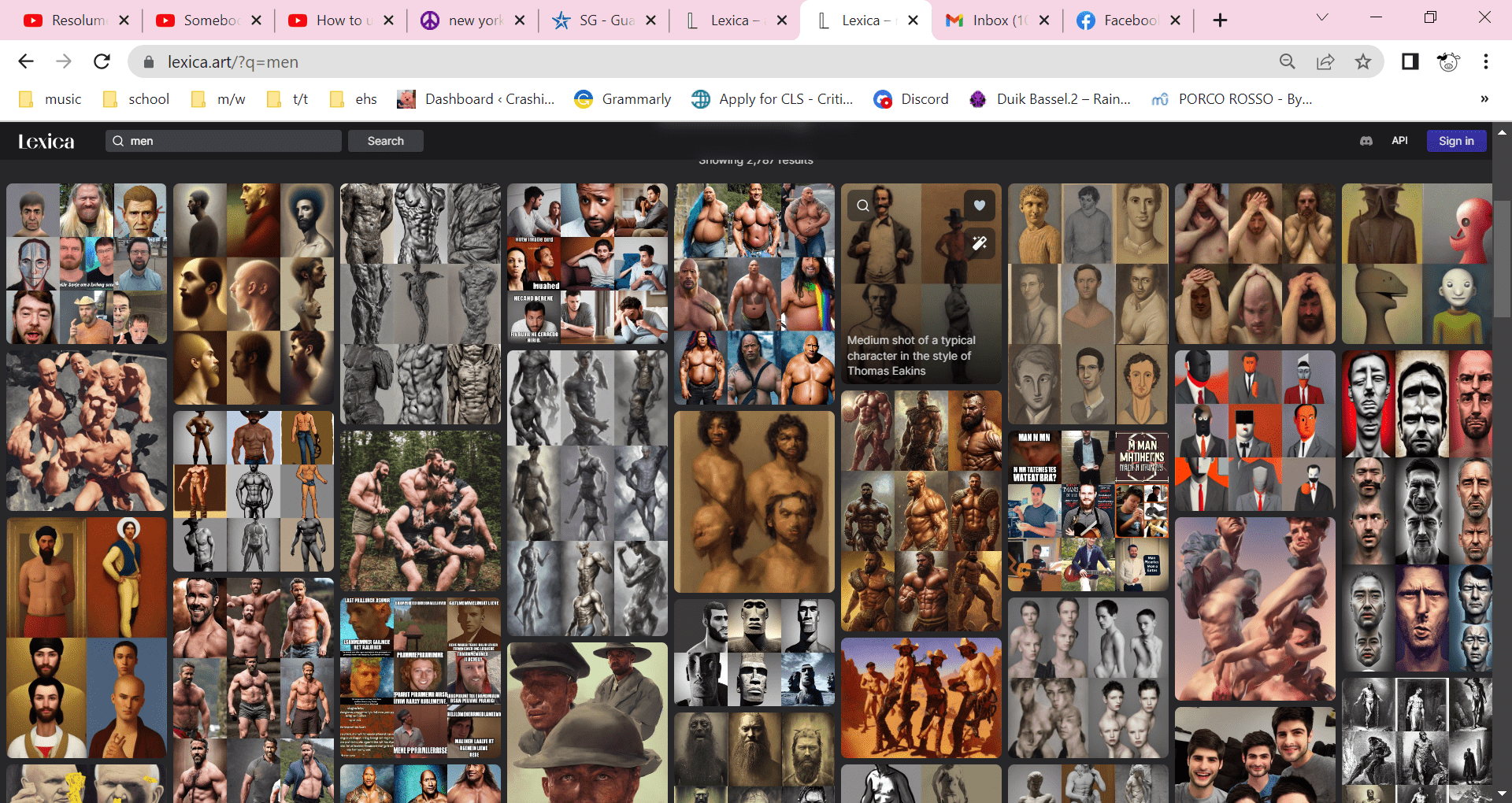

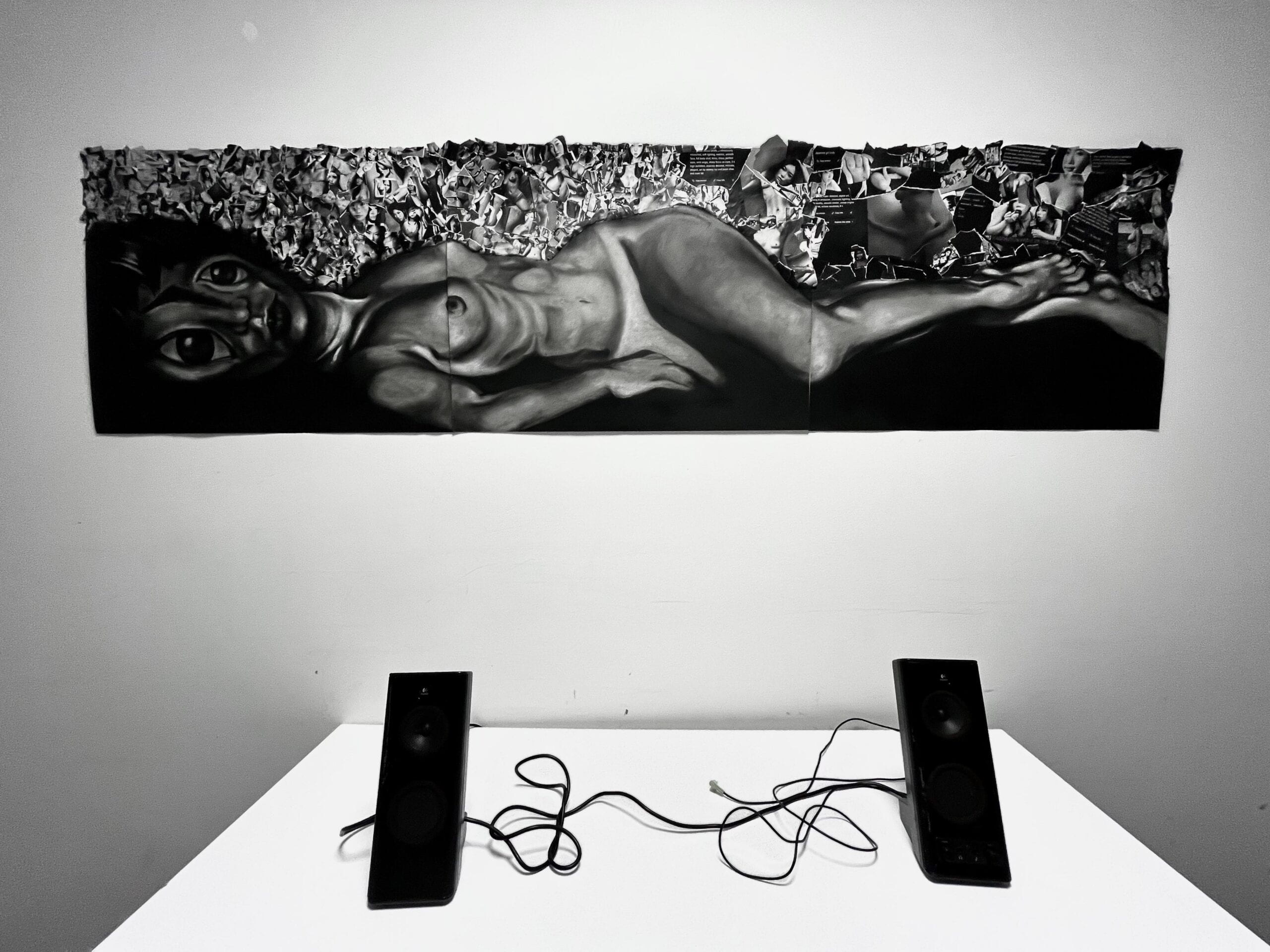

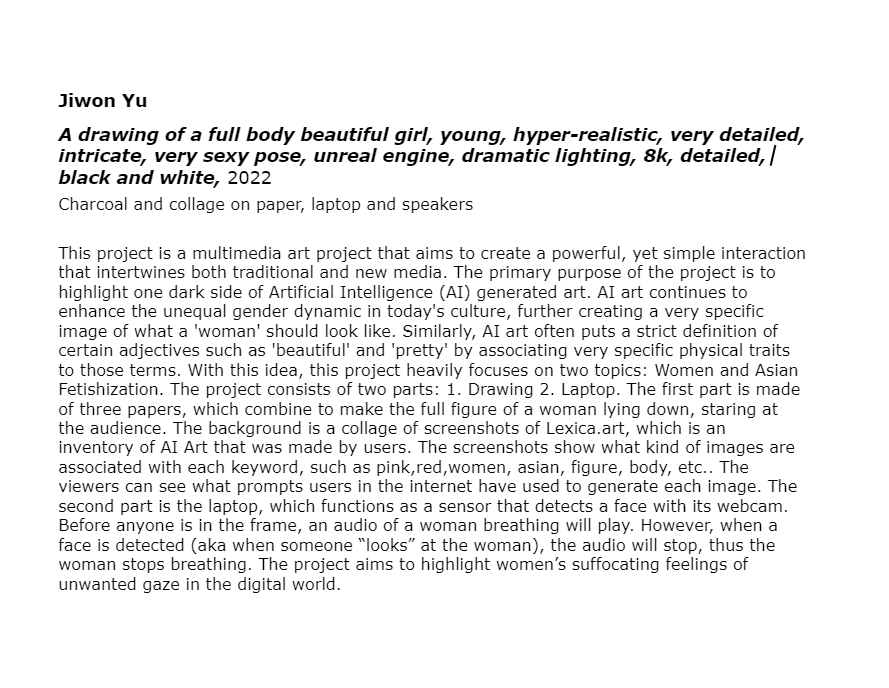

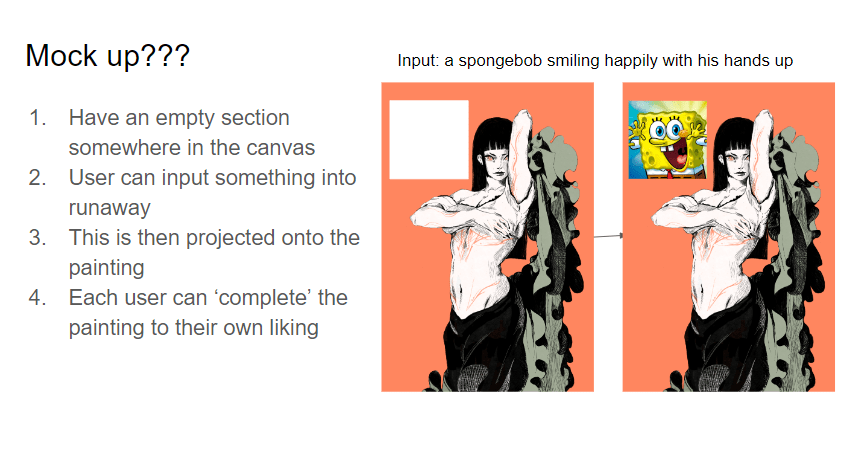

- This project is a multimedia art project that aims to create a powerful yet simple interaction that intertwines traditional and new media. The primary purpose of the project is to highlight one dark side of art generated by Artificial Intelligence (AI). AI art continues to reinforce the unequal gender dynamic in today’s culture, further creating a very specific image of what a ‘woman’ should look like. Similarly, AI art often imposes a strict definition on adjectives such as ‘beautiful’ and ‘pretty’ by associating very specific physical traits with those terms. With this idea, the project focuses heavily on two topics: women and Asian fetishization. The project consists of two parts: (1) a drawing and (2) a laptop. The first part is made of three sheets of paper, which combine to make the full figure of a woman lying down, staring at the audience. The background is a collage of screenshots of Lexica.art, which is an inventory of AI art made by users. The screenshots show what kind of images are associated with each keyword, such as pink, red, women, asian, figure, body, etc. Viewers can see what prompts users on the internet have used to generate each image. The second part is the laptop, which functions as a sensor that detects a face with its webcam. Before anyone is in the frame, audio of a woman breathing plays. However, when a face is detected (that is, when someone “looks” at the woman), the audio stops, and thus the woman stops breathing. The project aims to highlight the suffocating feeling that unwanted gazes in the digital world cause women.

- Inspiration: How did you become interested in this idea? Quotes, photographs, products, projects, people, music, political events, social ills, etc.

- my project last year

- lexica.art

- art is supposed to be agitating

- social ill

- Process: How did you make this? What did you struggle with? What were you able to implement easily and what was difficult?

- Materials:

- three charcoal papers

- eraser

- black and white charcoal

- glue

- speakers

- laptop

- p5js

- webcam

- The biggest struggle while making this project was the ideation, along with the fact that my materials got stolen the day before Friday (12/8/2022). I reached out to IMA/ITP people on the floor via Discord, and I am planning to send a mass email in hopes of reaching a wider audience.

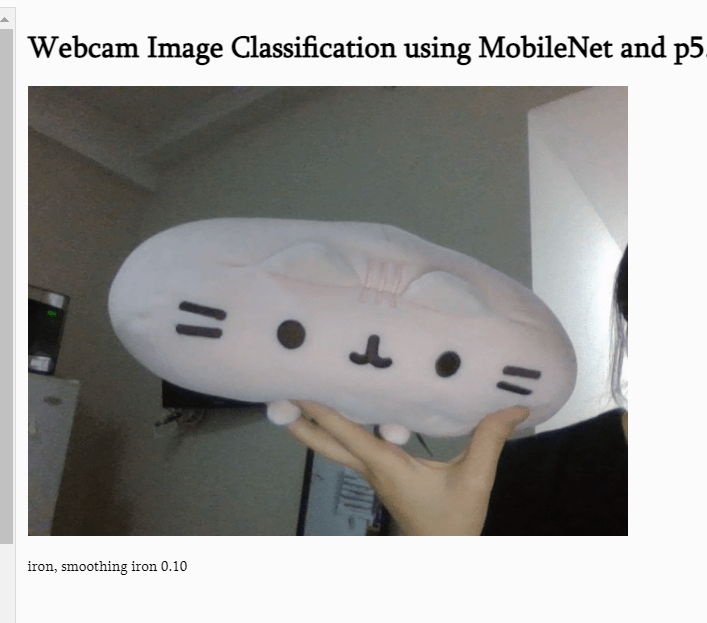

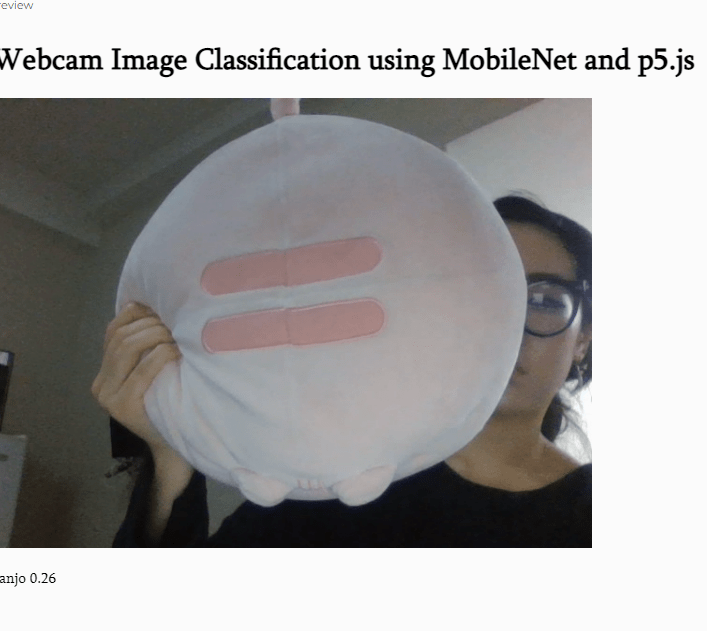

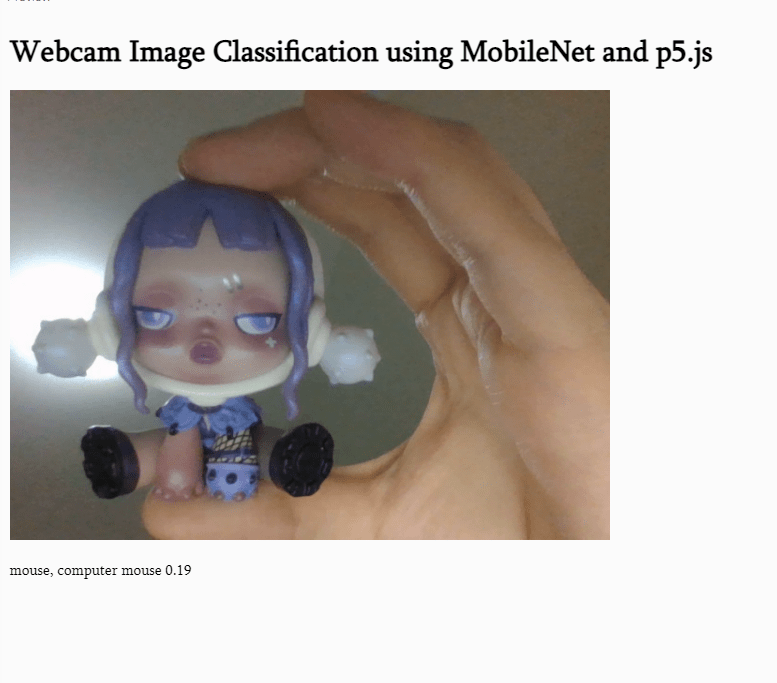

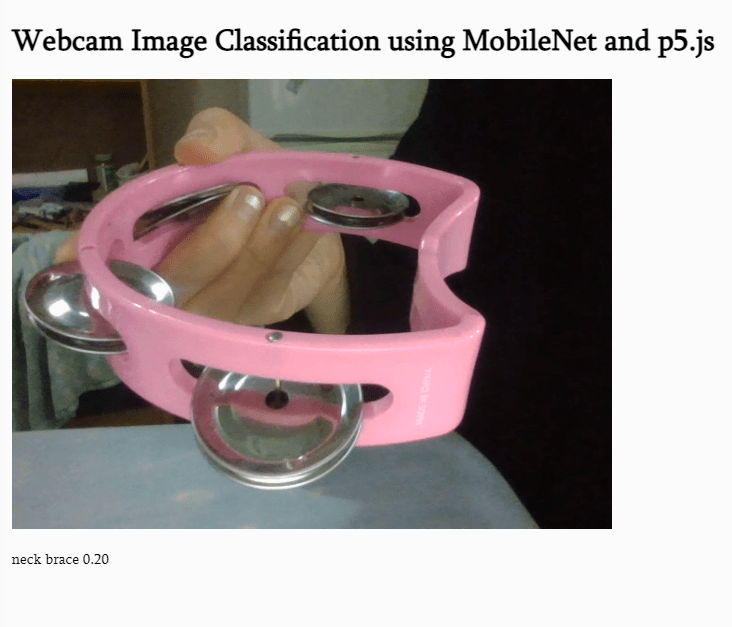

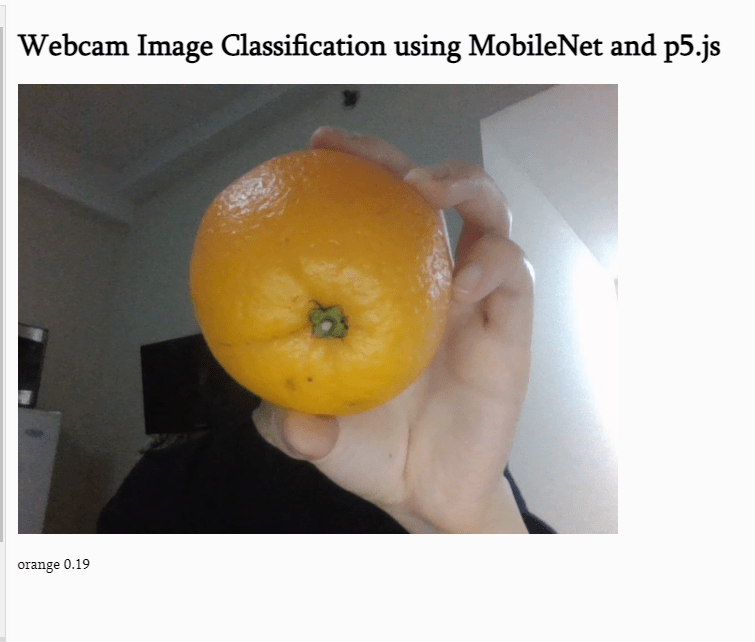

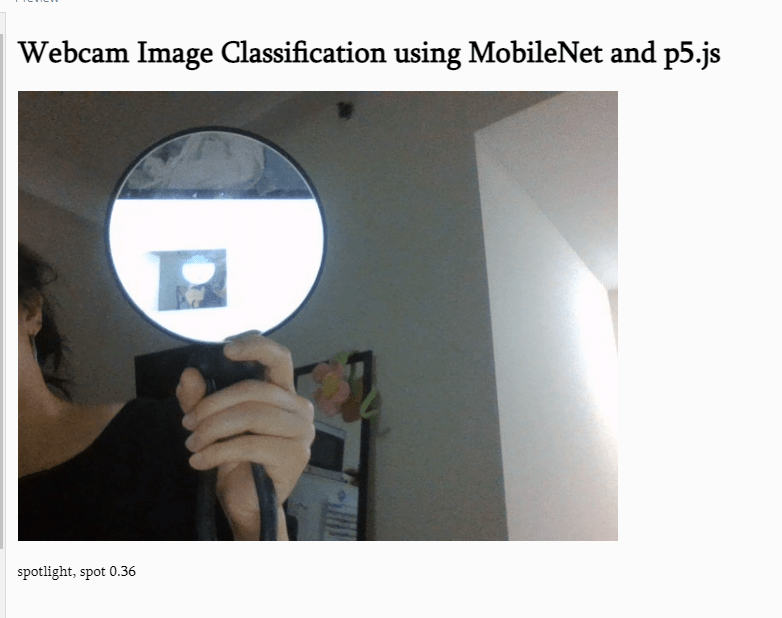

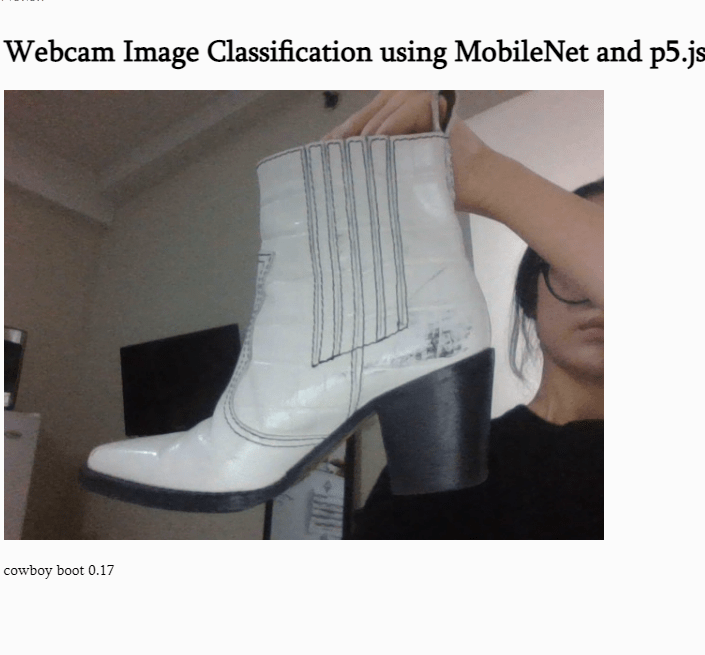

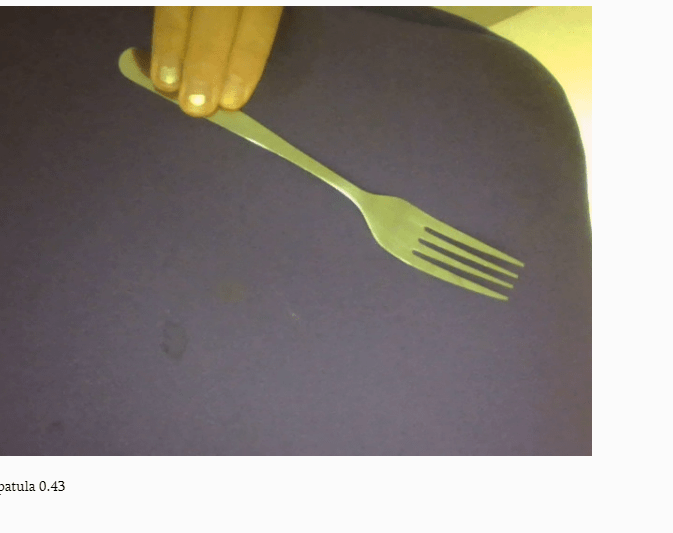

- video of p5.js sketch:

- day1:

- day2:

- day3:

- day4:

- day5:

– finished!

– finished! - for the show:

- Audience/Context: Who is this for? How will people experience it? Is it interactive? Is it practical? Is it for fun? Is it emotional? Is it to provoke something?

- The intended audience is the users of text-to-image generators. I want to raise awareness of the different uses of AI-generated images. Clearly, AI art has many uses: it can quickly and efficiently produce a mock-up sketch, provide a background image, etc. I personally used text-to-image tools to create most of the visuals for my performance. Yet, as seen on Lexica.art, there are other uses of AI art as well. Although I hope that text-to-image tools are not used to create lewd, sexual images of women, I think this use is worth pointing out to the users.

- I hope to stir discomfort with my art so that the impact and the impression are strong.

- My laptop functions as a sensor that detects people’s faces (specifically, a person’s nose). When a face is detected, the audio of a woman breathing stops. When no one is in the camera’s field of view, the painting starts breathing again.

- I think
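The interaction rule described in this section (breathing plays while the frame is empty and stops once a face appears) can be sketched as a small pure function. This is only an illustration under assumptions: `nextAudioAction` and its return strings are hypothetical names, not part of the sketch, p5.js, or ml5.

```javascript
// Hypothetical helper: decides what the audio should do on each frame.
// faceDetected: true when a face (nose keypoint) is in the frame.
// isPlaying:    true when the breathing audio is currently playing.
function nextAudioAction(faceDetected, isPlaying) {
  if (faceDetected && isPlaying) {
    return "pause"; // someone is looking: the breathing stops
  }
  if (!faceDetected && !isPlaying) {
    return "loop"; // nobody is looking: the breathing resumes
  }
  return "none"; // audio is already in the right state
}

// Usage: walk through a viewer arriving and leaving.
console.log(nextAudioAction(false, false)); // "loop"
console.log(nextAudioAction(true, true));   // "pause"
console.log(nextAudioAction(true, false));  // "none"
console.log(nextAudioAction(false, true));  // "none"
```

Keeping the decision separate from the p5.js and ml5 calls makes the rule easy to check without a webcam or speakers.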

- User testing: What was the result of user testing? How did you apply any feedback?

- People liked the idea of a breathing painting that stops breathing depending on the presence of the audience. I got feedback that there is a cohesive theme across most of my works, which I really liked as a comment.

- More user testing is something that I need, as I will mention again in the last section.

- Source code

      let myPoseNet;
      let video;
      let poseResults;
      let catSong; // the breathing audio
      let paused = false;

      function preload() {
        catSong = loadSound("breath.wav");
      }

      function setup() {
        // from class example
        video = createCapture(VIDEO);
        video.hide();
        createCanvas(640, 480);
        fill(255, 0, 0);
        myPoseNet = ml5.poseNet(video, gotModel);
        textSize(100);
      }

      // from class example
      function gotModel() {
        //console.log(myPoseNet);
        myPoseNet.on("pose", gotPose);
      }

      function gotPose(results) {
        poseResults = results;

        if (results && results[0]) {
          // a face is in the frame: keep the breathing stopped
          paused = true;
          console.log("detecting");
        } else {
          // nobody is in the frame: allow the breathing to resume
          paused = false;
        }

        if (!catSong.isPlaying() && paused == false) {
          catSong.loop();
        }
      }

      function draw() {
        image(video, 0, 0, width, height);
        const nose = poseResults?.[0]?.pose?.nose;
        if (nose) {
          // mark the detected nose and stop the breathing
          ellipse(nose.x, nose.y, 20, 20);
          catSong.pause();
          console.log("pausing");
        }
      }
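One possible refinement, sketched under assumptions: ml5's PoseNet results attach a `confidence` value to each named keypoint such as `pose.nose`, so presence could be judged against a threshold rather than by the keypoint merely existing. `hasFace` and the 0.5 default are hypothetical choices for illustration, not values taken from the sketch.

```javascript
// Hypothetical helper: true when any detected pose has a nose keypoint
// whose confidence meets the threshold.
// `poses` follows the shape of ml5 PoseNet results:
//   [{ pose: { nose: { x, y, confidence } } }, ...]
function hasFace(poses, minConfidence = 0.5) {
  if (!Array.isArray(poses)) return false;
  return poses.some((p) => {
    const nose = p?.pose?.nose;
    return Boolean(nose) && nose.confidence >= minConfidence;
  });
}

// Usage with hand-written sample data (not real detections).
const confident = [{ pose: { nose: { x: 320, y: 240, confidence: 0.9 } } }];
const doubtful = [{ pose: { nose: { x: 10, y: 10, confidence: 0.1 } } }];
console.log(hasFace(confident)); // true
console.log(hasFace(doubtful));  // false
console.log(hasFace([]));        // false
```

A threshold like this trades sensitivity for stability; the right value would have to be found by testing in the exhibition space.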

- Code references: What examples, tutorials, references did you use to create the project? (You must cite the source of any code you use in code comments. Please note the following additional expectations and guidelines at the bottom of this page.)

- class example from week 3: https://github.com/yining1023/machine-learning-for-the-web/blob/master/week3-pose/PoseNet_VideoMusic/sketch.js

- coding help from Angelo in Coding Lab!

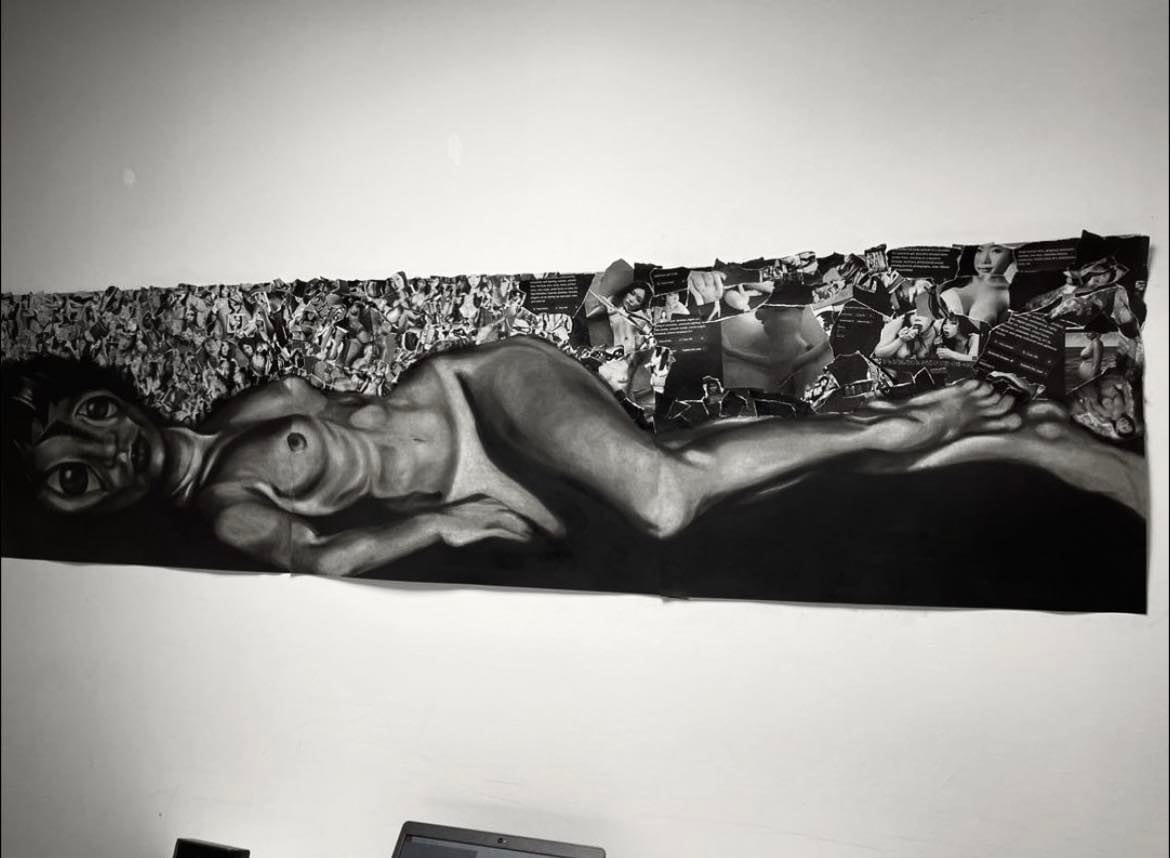

- Next steps: If you had more time to work on this, what would you do next?

- As of the presentation that I’m giving on December 9th, the next steps would be for me to actually finish the drawing and put it in the show.

- To be specific, I would first finish the figure in the drawing. Then, I would glue on the background that I printed out. Then, I would set it up with my laptop and rent lights and speakers from the ER. UPDATE: DONE!

- I might spray the painting with a finishing spray, since charcoal drawings smudge easily. UPDATE: DONE!

- I would try to get more user feedback to figure out the best setting for the show as well. UPDATE: DONE!

Winter Show Documentation:

- art description used for the winter show:

- video documentation (ft. my good friend Faith!). The audio stops when the user is in front of the camera.

- some different angles of the project:

- Reflection:

- Originally, I wanted to use a webcam instead of the laptop’s built-in camera. However, the ER did not have an available webcam that I could check out before the show. Next time, I want to hide all physical interfaces.

- During the show, I got feedback that it might be better to black out the screen so that users are not looking at the laptop, which makes it more intuitive for them to interact with and look at the drawing instead (thank you David Rios!). So I turned the brightness down to 0.
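As an alternative to lowering the display brightness, the sketch itself could black out the canvas while detection keeps running. Below is a minimal sketch of that idea, assuming p5.js instance mode, where `background`, `image`, `width`, and `height` are available on the instance. `drawFrame` and `showPreview` are hypothetical names introduced for illustration.

```javascript
// Hypothetical helper: draws one frame on a p5 instance `p`.
// When showPreview is false the canvas is filled with black instead of the
// webcam image. Assumption: the pose detector is given the video capture
// itself, so hiding the preview does not affect detection.
function drawFrame(p, video, showPreview) {
  if (showPreview) {
    p.image(video, 0, 0, p.width, p.height);
  } else {
    p.background(0);
  }
}

// Usage with a stand-in object that records calls, so this runs without p5.js.
const calls = [];
const fakeP5 = {
  width: 640,
  height: 480,
  image: (...args) => calls.push(["image", ...args]),
  background: (...args) => calls.push(["background", ...args]),
};
drawFrame(fakeP5, "video", false); // show mode: black canvas
drawFrame(fakeP5, "video", true);  // debug mode: webcam preview
console.log(calls);
```

The canvas would still need to cover the whole screen for this to have the same effect as dimming the display.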
