This week, Sauda and I split the work. Sauda worked on the physical computing part, while I worked on the live visuals and the text to project onto the frosted glass on the IDM floor.

The program files for the WildWeb project are available in its GitHub repository.

Live Visuals: Hydra in Max

When streaming Hydra visuals through OBS Studio, one problem is that I cannot get Max to control scene switching in OBS. Popular plugins such as Advanced Scene Switcher exist, but they do not give Max control over the scenes.

Previously, the jweb object in Max could not give the Hydra website access to my webcam. However, it has suddenly worked since this Friday, perhaps because the webcam is no longer held by OBS or another running application. Therefore, I now use the jweb object to run the Hydra live visuals.

Text Generation

We also need text to accompany the live visuals, since we want the projection to look like a social media post. Last week I found a tutorial on integrating ChatGPT into Max, but it turned out that my free ChatGPT API credit was not enough for even a single run in Max.

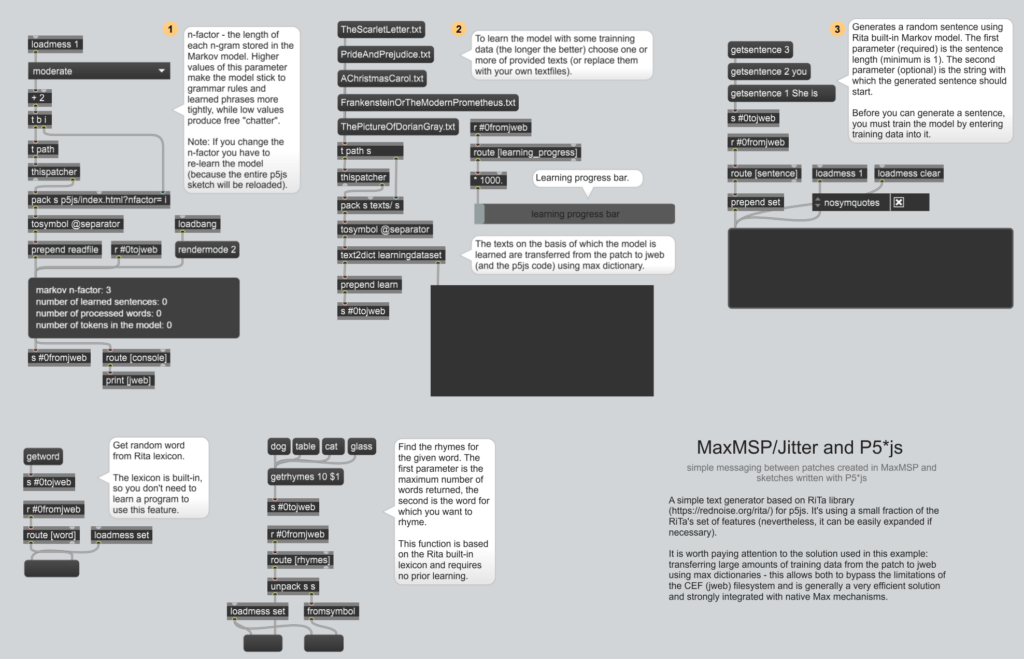

While fixing the live visuals issues, one of my plans was to put the Hydra code into p5.js, so I searched for “Max p5.js” to see how others had made it work. I found a very inspiring forum post in which the poster shares four Max patchers along with the website to download them from. Among them, the “04_generative_text_with_rita” project happens to do text generation, which is exactly what our project needs. The patcher sets up communication between Max and p5.js and uses the RiTa library in p5.js to generate text.

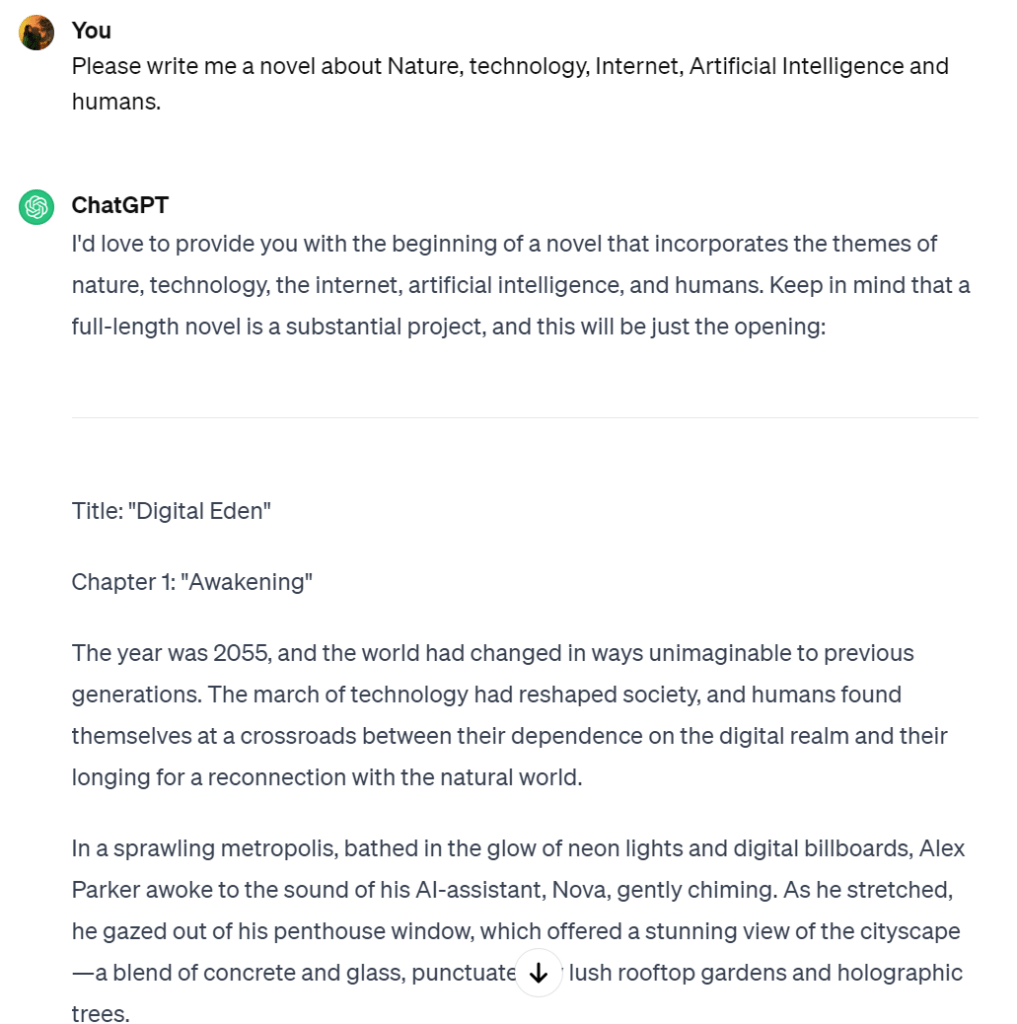

To customize the generated text, I asked ChatGPT to help write a sci-fi novel about nature, technology, the Internet, artificial intelligence, and humans. I then pasted the novel into a file named “DigitalEden.txt” and saved it in the “text” folder of my project. This .txt file serves as the training text for the language model, and all the generated text draws its words from it.
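The idea of "training" on a .txt file and then generating text that only reuses its words can be illustrated with a word-level Markov chain. This is a minimal sketch under that assumption. It swaps in a plain n-gram Markov model and does not use RiTa or reproduce the patcher's code. The class name `WordMarkov`, its methods, and the context size of 2 are all hypothetical choices for illustration. Training records which word follows each two-word context. Generation then starts from a sentence opening and repeatedly picks one of the recorded followers, so every output word comes from the source text (here it would be the contents of DigitalEden.txt).

```typescript
// Minimal word-level Markov chain text generator.
// Hypothetical sketch: a plain n-gram Markov model swapped in for illustration,
// NOT the RiTa library's API or the Max/p5.js patcher's actual code.

type Rng = () => number; // must return a number in [0, 1), like Math.random

class WordMarkov {
  private readonly n: number;
  // Maps each n-word context (joined by spaces) to every word that followed it.
  private readonly table = new Map<string, string[]>();
  // Contexts that open a sentence; generation starts from one of these.
  private readonly starts: string[][] = [];

  constructor(n: number = 2) {
    if (n < 1) throw new Error("n must be at least 1");
    this.n = n;
  }

  // "Training": record which word follows each n-word context in the text.
  train(text: string): void {
    const words = text.split(/\s+/).filter((w) => w.length > 0);
    for (let i = 0; i + this.n < words.length; i++) {
      const context = words.slice(i, i + this.n);
      const key = context.join(" ");
      const next = words[i + this.n];
      const followers = this.table.get(key);
      if (followers) followers.push(next);
      else this.table.set(key, [next]);
      // A context is a sentence opening if it starts the text or follows . ! ?
      if (i === 0 || /[.!?]$/.test(words[i - 1])) this.starts.push(context);
    }
  }

  // Generate up to maxWords words; every output word appears in the training text.
  generate(maxWords: number, rng: Rng = Math.random): string {
    if (this.starts.length === 0) return "";
    const pick = <T>(xs: T[]): T => xs[Math.floor(rng() * xs.length)];
    const out = [...pick(this.starts)];
    while (out.length < maxWords) {
      const key = out.slice(out.length - this.n).join(" ");
      const followers = this.table.get(key);
      if (!followers) break; // dead end: this context was never followed by a word
      out.push(pick(followers));
    }
    return out.slice(0, maxWords).join(" ");
  }
}

// Usage: train on a short passage, then generate a random "post" of up to 8 words.
const model = new WordMarkov(2);
model.train("The forest dreams of code. The network dreams of rain.");
console.log(model.generate(8));
```

Passing a fixed `rng` instead of `Math.random` makes the output reproducible, which can help when testing. With the default, each call produces a different post.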

Demo

Below is a demo video of the program. The message and integer objects are only for control and will not appear in the final presentation mode. The space between the visuals and the text is reserved for the physical buttons used to react to the post.

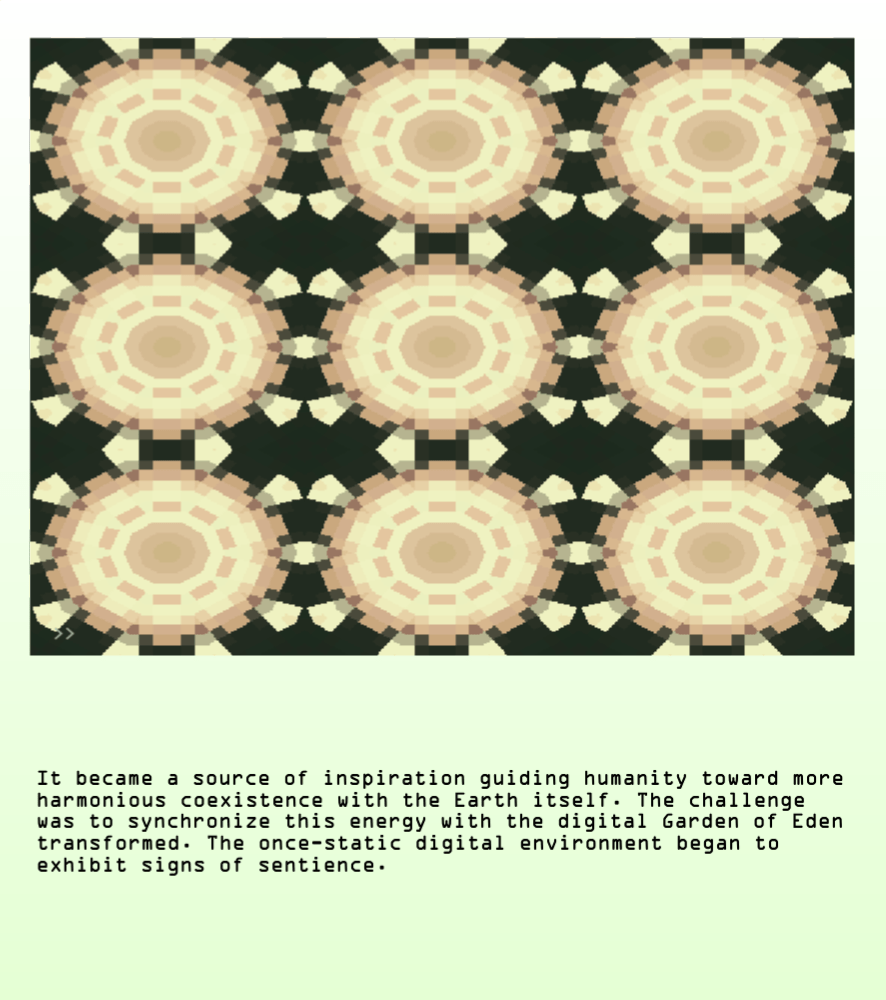

Below is an example image of how the projection looks.

Other Resource

Another Max patcher I found interesting performs sentiment analysis and can be downloaded from its GitHub page. I may integrate it into the text generation later.

Next Step

On December 10, we will set up our physical environment and test the projection. We will also work together to create more artificial flowers, to be controlled by Arduino.