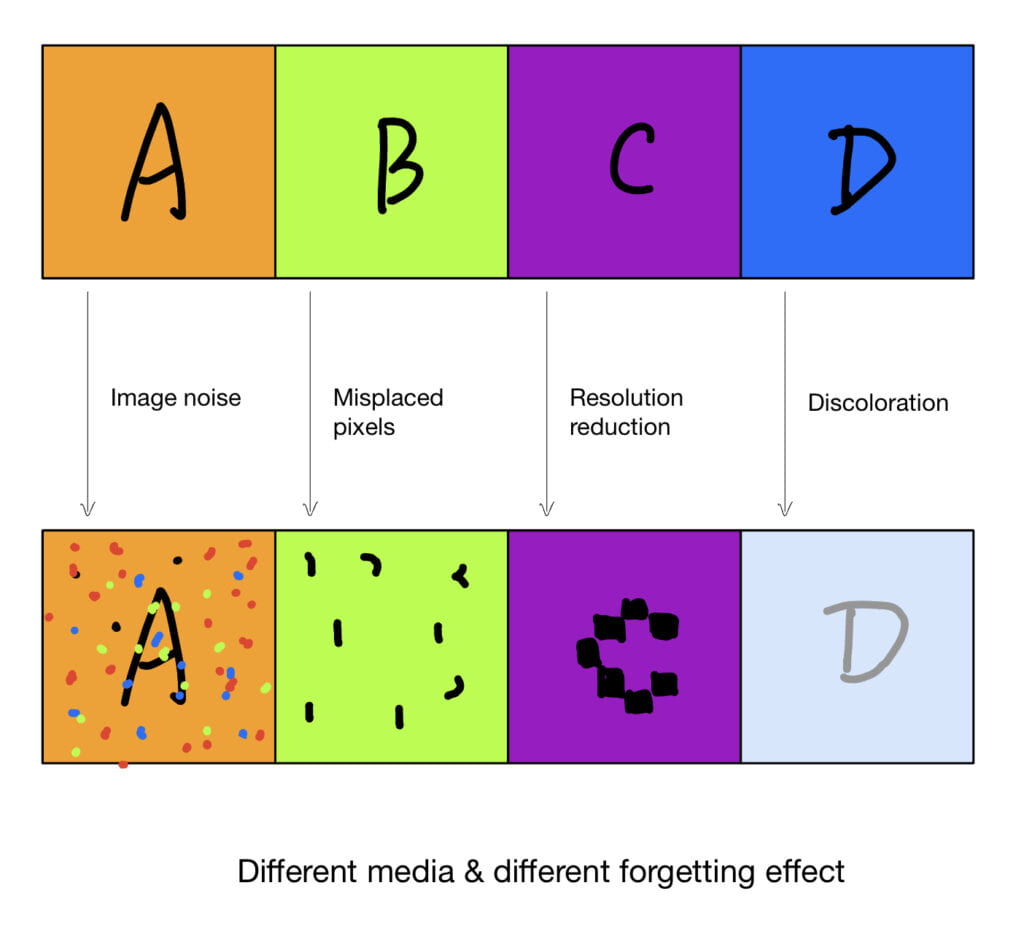

This week, I coded several visual effects and implemented part of the interactions. I also changed the form of my project from a lantern-shaped, 4-screen projection to a single projection on one screen, because the latter achieves a similar interactive experience while making the setup much easier. The projection will still consist of 4 sections, as illustrated in the previous post.

You can download my updated Max program here.

Since its media were relatively easy to produce and select, I started by creating the visual effects on the hourglass video.

Visual Effects

So far, I’ve created 3 effects: resolution reduction, image noise, and discoloration.

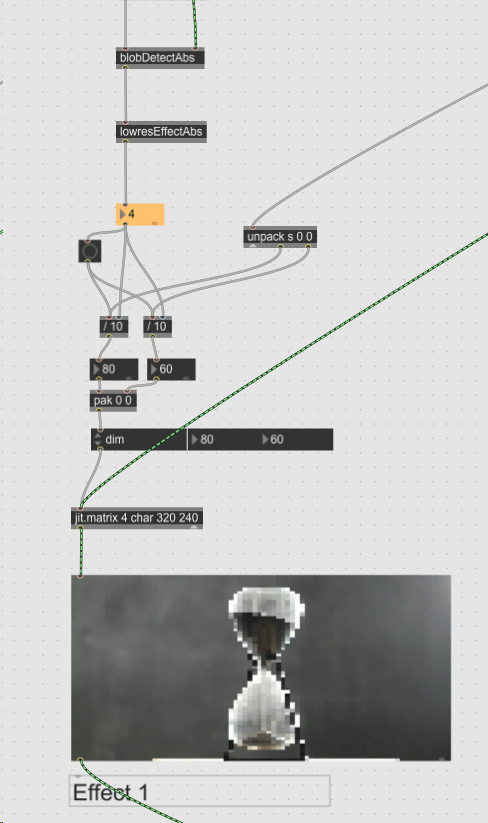

Resolution Reduction

To achieve the resolution reduction effect, I directly change the resolution of the input video: I divide the original video's x and y dimensions by the time interval since a person was last detected, so the longer a region stays empty, the coarser the image becomes.

This time interval is calculated by lowresEffectAbs, which is introduced in the Logics of Interactions section below.

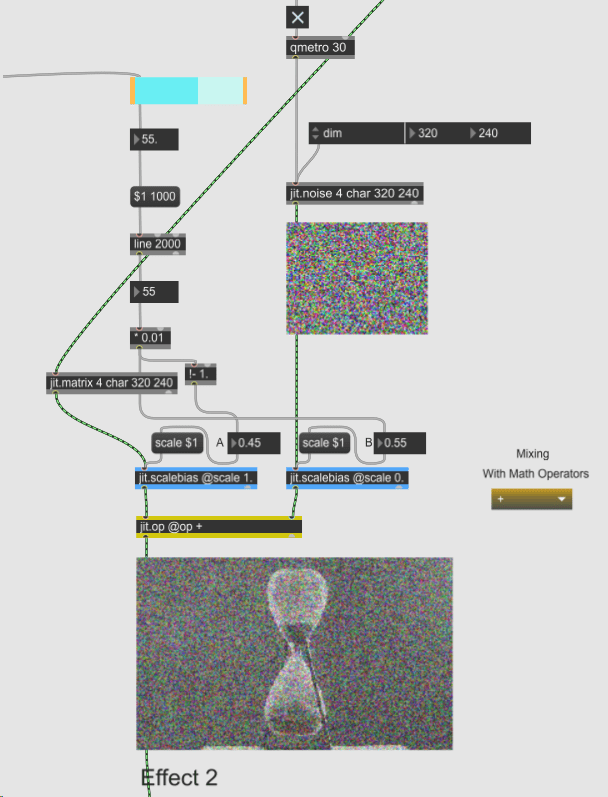
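The patch does this with Jitter matrices. To make the arithmetic concrete outside Max, here is a minimal Python sketch, assuming the interval is a whole-number count and that the smaller frame is stretched back to full size with nearest-neighbour sampling. The function names are hypothetical, and a frame is modelled as a plain list of rows.

```python
def reduced_dims(width, height, interval):
    """Divide both dimensions by the elapsed-time count, never going below 1 cell."""
    divisor = max(1, interval)  # assumption: an interval of 0 keeps full resolution
    return max(1, width // divisor), max(1, height // divisor)


def pixelate(frame, interval):
    """Shrink a frame (a list of rows) and stretch it back with nearest-neighbour sampling."""
    height, width = len(frame), len(frame[0])
    small_w, small_h = reduced_dims(width, height, interval)
    # Keep one sample per coarse cell, as a lower-resolution matrix would.
    small = [[frame[y * height // small_h][x * width // small_w]
              for x in range(small_w)] for y in range(small_h)]
    # Stretch back to the original size so the projection keeps its size on screen.
    return [[small[y * small_h // height][x * small_w // width]
             for x in range(width)] for y in range(height)]
```

For the 320×240 hourglass video, an interval of 4 gives an 80×60 frame; as the interval grows, the frame eventually collapses to a single cell.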

Image Noise

For the image noise effect, I add a jit.noise object to the original video and use a slider to control the biases of the two matrices.

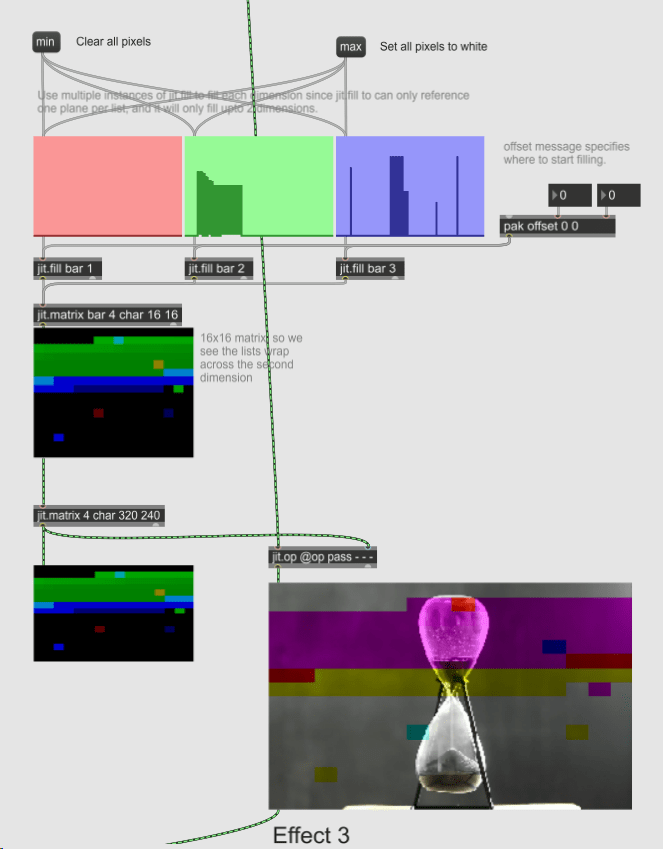
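As an illustration only, the slider can be read as a mix between the video and random noise. The Python sketch below assumes the slider runs from 0 to 100 and acts as a crossfade weight; the exact way the patch combines the two matrices may differ, and `add_noise` is a hypothetical name.

```python
import random


def add_noise(frame, amount, seed=None):
    """Blend each pixel with random noise; amount is a 0..100 slider value (assumed crossfade)."""
    rng = random.Random(seed)
    t = max(0, min(100, amount)) / 100.0  # clamp the slider and map it to 0.0..1.0
    # t = 0 leaves the video untouched; t = 1 replaces it with pure noise (0..255).
    return [[round((1 - t) * px + t * rng.randint(0, 255)) for px in row]
            for row in frame]
```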

Discoloration

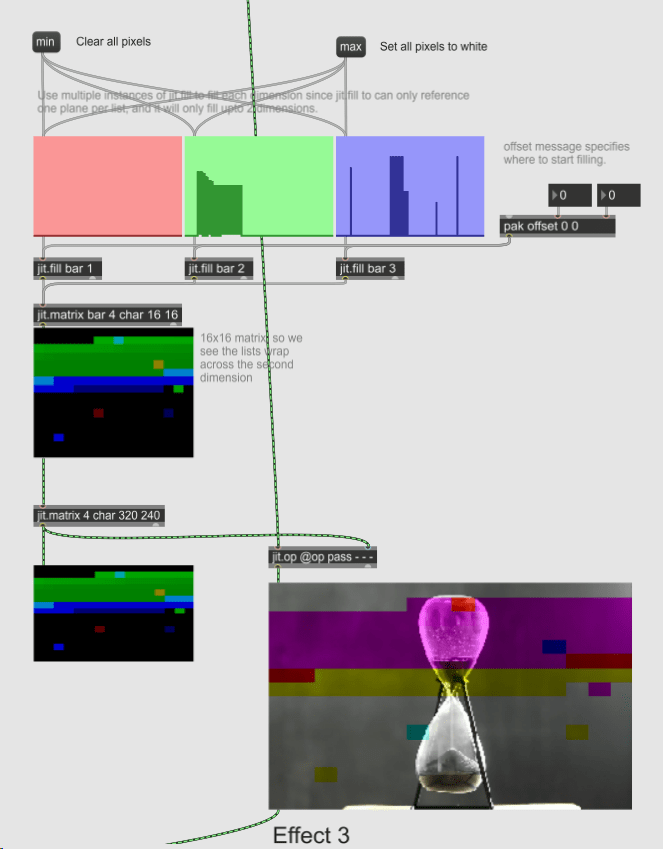

At first, I just wanted a simple discoloration of the video. However, while searching for solutions to the misplaced pixels effect, I came across a set of objects that makes the discoloration effect more interesting.

This set of objects contains three multislider objects, which control the R, G, and B planes of a 4-plane matrix. Each multislider outputs a list of numbers, and since a multislider can handle a list of no more than 256 numbers, I first send the three output lists to a 16×16 matrix. Then, before subtracting the RGB planes of this matrix from the hourglass video, I resize the 16×16 matrix to 320×240, the dimensions of the hourglass video, because only matrices of the same size can be added or subtracted.

When every value in all three multislider objects is at its maximum (255), the output video becomes a black screen.
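The data flow above (three 256-value lists, a 16×16 grid, an upscale to 320×240, then a per-plane subtraction) can be sketched in Python. This is not the Max patch: it assumes nearest-neighbour upscaling and clamps results at 0, which is what makes an all-255 setting produce black. The helper names are hypothetical.

```python
SIZE = 16            # 16 x 16 = 256 values, the multislider limit
WIDTH, HEIGHT = 320, 240  # hourglass video dimensions


def list_to_grid(values):
    """Pack one multislider list (up to 256 values) into a 16x16 grid, row by row."""
    vals = list(values)[:SIZE * SIZE]
    vals += [0] * (SIZE * SIZE - len(vals))  # pad short lists with zeros
    return [vals[r * SIZE:(r + 1) * SIZE] for r in range(SIZE)]


def upscale(grid, width=WIDTH, height=HEIGHT):
    """Stretch a small grid to width x height with nearest-neighbour sampling (assumption)."""
    rows, cols = len(grid), len(grid[0])
    return [[grid[y * rows // height][x * cols // width] for x in range(width)]
            for y in range(height)]


def discolor(frame_rgb, r_list, g_list, b_list):
    """Subtract the upscaled R, G, B grids from an RGB frame, clamping each channel at 0."""
    masks = [upscale(list_to_grid(v)) for v in (r_list, g_list, b_list)]
    return [[tuple(max(0, px[c] - masks[c][y][x]) for c in range(3))
             for x, px in enumerate(row)]
            for y, row in enumerate(frame_rgb)]
```

Each grid cell ends up covering a 20×15 block of the video, which is why the discoloration appears as coloured rectangles rather than single pixels.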

To Do: Misplaced Pixels

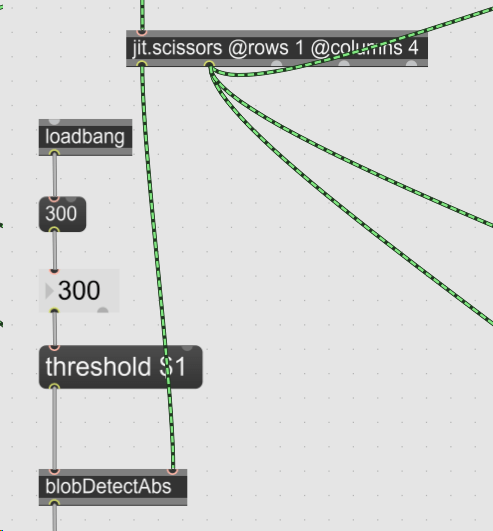

Currently, the easiest solution I can think of is to use jit.scissors to cut the hourglass video into segments and then use jit.glue to glue them back together in a different order. However, as the number of segments grows, placing each segment in a random location on screen becomes very tedious. I will keep looking for a better way to achieve this effect.
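The cut-and-reassemble idea can be written as a loop instead of wiring each segment by hand. The Python sketch below mirrors the jit.scissors/jit.glue approach on a frame stored as a list of rows; it assumes the frame size divides evenly into the tile grid, and `shuffle_tiles` is a hypothetical name, not a Max object.

```python
import random


def shuffle_tiles(frame, cols, rows, seed=None):
    """Cut a frame into cols x rows tiles and glue them back in a random order."""
    height, width = len(frame), len(frame[0])
    if width % cols or height % rows:
        raise ValueError("frame size must be divisible by the tile grid")
    tile_w, tile_h = width // cols, height // rows
    # Cut: one tile per grid cell, listed row by row.
    tiles = [[row[c * tile_w:(c + 1) * tile_w] for row in frame[r * tile_h:(r + 1) * tile_h]]
             for r in range(rows) for c in range(cols)]
    order = list(range(len(tiles)))
    random.Random(seed).shuffle(order)
    # Glue: write the shuffled tiles back into the same grid positions.
    out = []
    for r in range(rows):
        for y in range(tile_h):
            line = []
            for c in range(cols):
                line.extend(tiles[order[r * cols + c]][y])
            out.append(line)
    return out
```

Because the number of tiles is just a parameter, increasing the segment count costs nothing extra, which is the part that becomes tedious when each segment is patched manually.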

Logics of Interactions

Face Tracking or Blob Tracking?

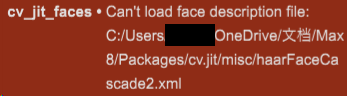

Initially, I wanted to use face tracking for the interaction. However, Max 8 couldn't load the face description files, even though they were in the directory. This is the error I got when I tried to use the cv.jit.faces object:

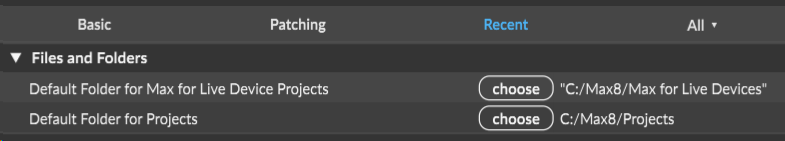

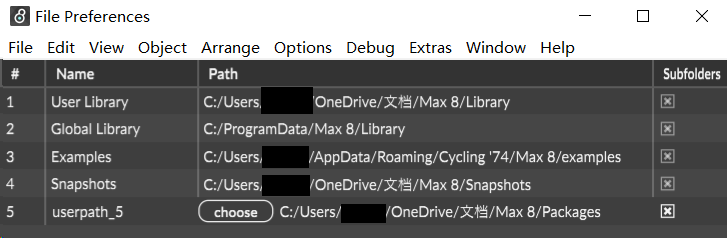

I suspect this is caused by special characters in the path, but I couldn't figure out how to change Max 8's search path. I tried changing the paths in Options > Preferences and Options > File Preferences, but neither affected where Max looked for the face description file.

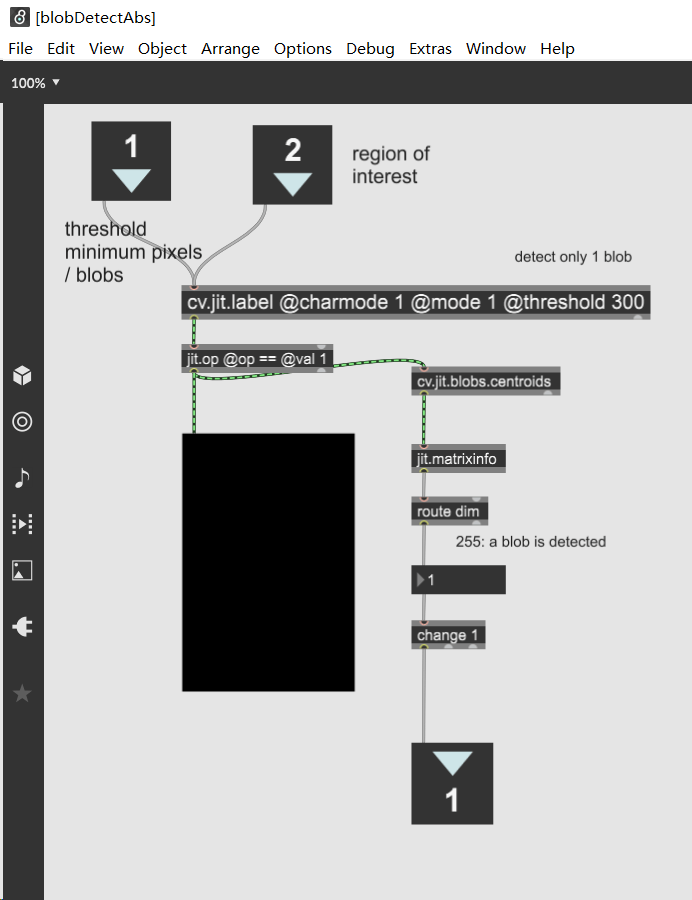

Since I don't need precise face tracking at this point, I switched to blob tracking, which turned out to be sufficient for detecting the presence of visitors. To make the program more robust to noise, I only use the largest blob within each of the 4 sections of the camera range.

I segment the camera range into four sections and feed each of them into blobDetectAbs for blob detection.

This is my blobDetectAbs.maxpat file.
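In the patch, the cv.jit objects handle the blob detection. As a language-neutral illustration of "largest blob per section", here is a minimal Python sketch using a breadth-first connected-components search. It assumes the camera image has already been thresholded into a 0/1 mask, that the four sections are equal-width vertical strips, and that `min_area` is a hypothetical noise threshold; none of these names come from the patch.

```python
from collections import deque


def largest_blob_area(mask):
    """Return the pixel count of the largest 4-connected region of 1s in a 0/1 mask."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    best = 0
    for sy in range(height):
        for sx in range(width):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Flood-fill one blob and count its pixels.
            area, queue = 0, deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            best = max(best, area)
    return best


def presence_per_section(mask, sections=4, min_area=50):
    """Split the mask into vertical strips; a section counts as occupied if its largest blob is big enough."""
    width = len(mask[0])
    step = width // sections
    result = []
    for i in range(sections):
        x0 = i * step
        x1 = width if i == sections - 1 else (i + 1) * step  # last strip takes any remainder
        strip = [row[x0:x1] for row in mask]
        result.append(largest_blob_area(strip) >= min_area)
    return result
```

Keeping only the largest blob means a few isolated noisy pixels cannot, on their own, register as a visitor.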

Resolution Reduction Effect: lowresEffectAbs

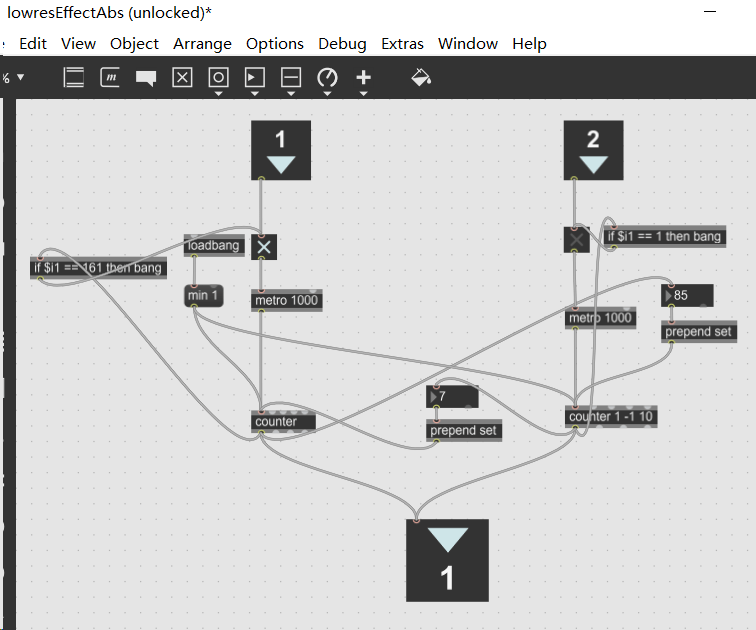

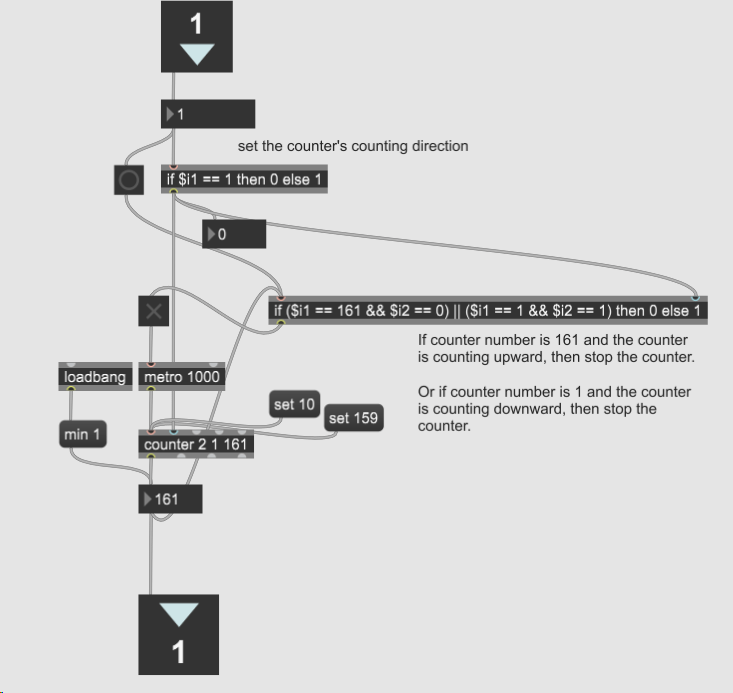

As mentioned in the Visual Effects – Resolution Reduction section, I wrote lowresEffectAbs.maxpat to count the time elapsed since a person was last detected. When no one is present in a region of the camera range, the resolution of the corresponding video starts to drop.

I initially planned to restore the video's original resolution immediately once a viewer showed up in front of it. However, while coding the resolution reduction, I noticed that it takes quite a while for the video to become pure gray. So I decided to restore the video at the same slow speed, giving viewers more time to feel the impact of their presence.

The first version of lowresEffectAbs.maxpat was very complex. Later, I realized that counting up and then back down is just a variation of a palindrome counter, so I rewrote it as a second, simpler version of lowresEffectAbs.

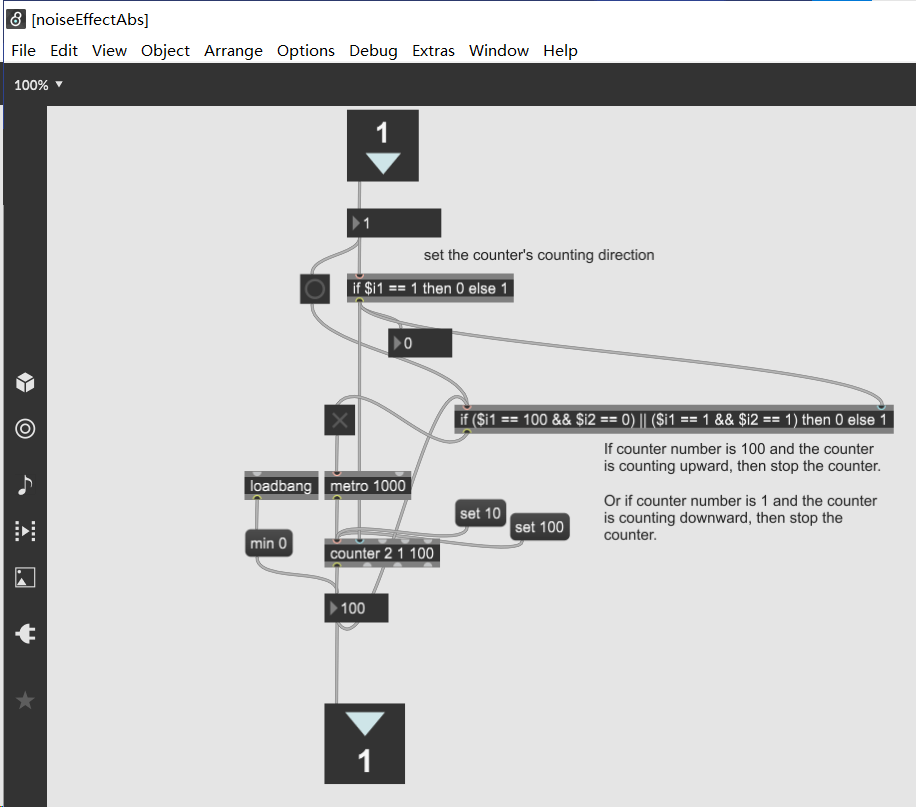
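One way to read the counting behaviour described above is as a clamped up/down counter: it steps up on each tick while a region is empty and steps back down at the same rate while someone is present. The Python sketch below is that interpretation, not a transcription of the patch; the class name, the tick model, and the default minimum of 1 (so the value can safely be used as a divisor) are assumptions.

```python
class PresenceCounter:
    """Clamped up/down counter: rises while a region is empty, falls while someone is present."""

    def __init__(self, maximum, minimum=1):
        self.minimum = minimum  # assumption: 1 keeps the value usable as a resolution divisor
        self.maximum = maximum
        self.value = minimum

    def tick(self, person_present):
        """Advance one step and return the new count."""
        step = -1 if person_present else 1
        self.value = max(self.minimum, min(self.maximum, self.value + step))
        return self.value
```

Because restoring and degrading both move one step per tick, the video recovers at the same slow speed it degraded. The same structure with `maximum=100` and `minimum=0` would map directly onto a 0 to 100 slider.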

Image Noise Effect: noiseEffectAbs

Once lowresEffectAbs was finished, it was easy to adapt it to control the image noise effect. I used the same structure but changed the counter's maximum to 100, matching the slider's maximum value.

To Do: Discoloration Logic

Currently, I can manually draw red, green, and blue pixels with different alpha values onto the hourglass video by clicking on the three multislider objects. Next, I will automate this process so that pixels are drawn randomly and the number of pixels drawn depends on the time elapsed since a viewer was last detected in the corresponding region of the camera range.
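Since this step is still planned, the following is only a hypothetical Python sketch of one way it could work: generate three 256-value lists (one per multislider) in which the number of non-zero cells grows in proportion to the elapsed-time count. The linear mapping, the random values between 1 and 255, and the function name are all assumptions.

```python
import random


def random_multislider_lists(interval, max_interval, seed=None, cells=256):
    """Build R, G, B lists whose count of non-zero cells scales with the elapsed-time count."""
    rng = random.Random(seed)
    ratio = min(max(interval, 0), max_interval) / max_interval  # clamp to 0.0..1.0
    n = round(ratio * cells)  # assumption: linear mapping from time to pixel count
    lists = []
    for _ in range(3):  # one list each for R, G and B
        values = [0] * cells
        for idx in rng.sample(range(cells), n):  # distinct random cells
            values[idx] = rng.randint(1, 255)
        lists.append(values)
    return lists
```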

About the Exhibition

Exhibition Space

My project works best in a dimmed environment, where blob detection picks up less noise. I went to the Media Commons this week to look for a space, and I think one of rooms 221–224 at 370 Jay would work well. If I can't book one of those rooms, I can set up the project in one of the available classrooms on the third floor of 370 Jay and project onto its whiteboard.

Equipment

- Projector * 1 (I plan to borrow from the Media Commons);

- Projection screen * 1, or just a white wall will do;

- Webcam * 1 (I will use my own);

- I may not use a speaker, but will borrow one from the Media Commons if needed later.

Summary: Next Steps

- Find a solution for the misplaced pixels effect

- Code the discoloration logic

- Code the misplaced pixels logic