Our paper “Feature Learning with Deep Scattering for Urban Sound Analysis” has been accepted for publication at the 2015 European Signal Processing Conference.

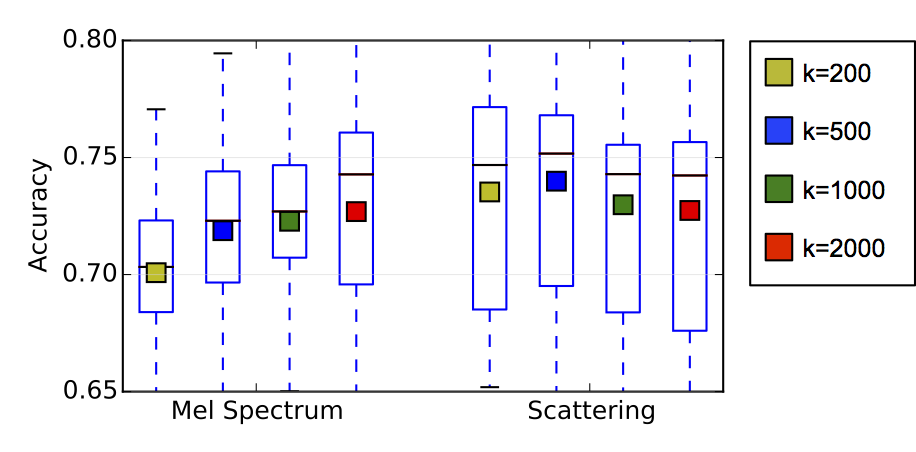

In this paper we evaluate the scattering transform as an alternative signal representation to the mel-spectrogram in the context of unsupervised feature learning for urban sound classification. We show that we can obtain comparable (or better) performance using the scattering transform whilst reducing both the amount of training data required for feature learning and the size of the learned codebook by an order of magnitude. In both cases the improvement is attributed to the local phase invariance of the representation. We also observe improved classification of sources in the background of the auditory scene, a result that provides further support for the importance of temporal modulation in sound segregation.

The full paper is available on the Publications page.