Project Title: Get Up Grandma

Designer: Calista

Instructor: Andy

Concept and Design

Many people in modern society carry two “selves” in their hearts: one yearning to return home and another longing to travel far away. On one hand, we feel the pull of distant places and dream of exploring a larger world. On the other hand, we have a warmth and concern for our families that we cannot sever. My project tells the story of a girl raised by her grandma since childhood. When she was young, she was very naughty, but her grandma always tolerated her innocence. As she grew older, she left home. Although she still loved her grandma dearly, the distance between them grew, and they could never return to the intimate relationship they once shared. So she made this alarm clock as a gift for her grandma, hoping that on the days when she was not home, the clock could keep her grandma company every morning, waking her with the songs they sang together, the games they played, and the warm memories they shared.

final product

I drew inspiration from the video game One Hand Clapping, an inspiring 2D puzzle platformer that analyzes players’ vocal input to progress through its vibrant world. By singing or humming into the microphone, players find confidence in the power of their voice as it changes the world around them.

This feature inspired my voice analysis design, which lets users sing a song and see their pitch visualized on the screen. The visualization is a metaphor for grandma’s ball of yarn rolling slowly across the sky of memory, and it also serves as the central interaction of my project. Through this interaction, I hope to bring back memories of the old days when grandma was knitting in the afternoon sunlight and the girl sat by her side, leisurely humming a little song. This is a scene that haunts my own memories, and I think it may be a shared childhood experience for many people.

screenshot from my project

Here I want to briefly explain how my voice visualization works technically. As the findPeakFrequency() code below shows, I first get the frequency spectrum of the microphone input, which is an array of amplitude values for 1024 frequency bins. Then I iterate over a small range of them and find the bin with the peak amplitude. The index of that bin stands in for the frequency I want. (To simplify the problem, the song I chose only uses the notes C to A, so I was able to narrow the search to the range with the best sensitivity.)

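    // Scan the bins that cover the song's notes (C to A) and keep the loudest one.
    void findPeakFrequency() {
      // Restart the search inside the range so an old index cannot win by accident.
      peakIndex = 5;
      for (int i = 5; i < 45; i++) {
        if (freqAnalysis.spectrum[i] > freqAnalysis.spectrum[peakIndex]) {
          peakIndex = i;
        }
      }
      // Despite its name, peakFreq holds the amplitude at the peak bin.
      peakFreq = freqAnalysis.spectrum[peakIndex];
      // check is false when the peak is below the 0.01 threshold (near silence).
      check = peakFreq >= 0.01;
    }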
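To make the link between a bin index and an actual pitch concrete, here is a minimal, self-contained Java sketch of the same peak search. It assumes things this post does not state: a 44100 Hz sample rate, 1024 bins spread evenly from 0 Hz to half the sample rate, and the hypothetical names `PeakFinder`, `findPeakIndex`, and `binToHz`. Under those assumptions each bin is about 21.5 Hz wide, so bins 5 to 44 would cover roughly 108 to 947 Hz.

```java
public class PeakFinder {
    // Assumed values, not taken from the original sketch.
    static final float SAMPLE_RATE = 44100f;
    static final int BANDS = 1024;

    /** Returns the index of the largest value in spectrum[from..to). */
    public static int findPeakIndex(float[] spectrum, int from, int to) {
        int peak = from; // start inside the range so bins outside it are ignored
        for (int i = from + 1; i < to; i++) {
            if (spectrum[i] > spectrum[peak]) {
                peak = i;
            }
        }
        return peak;
    }

    /** Converts a bin index to Hz, assuming bins evenly span 0..SAMPLE_RATE/2. */
    public static float binToHz(int index) {
        return index * (SAMPLE_RATE / 2f) / BANDS;
    }

    public static void main(String[] args) {
        float[] spectrum = new float[BANDS];
        spectrum[0] = 0.9f;   // loud bin outside the search range
        spectrum[20] = 0.3f;  // pretend the singer's note lands in bin 20
        int peak = findPeakIndex(spectrum, 5, 45);
        System.out.println("peak bin " + peak + " is about " + binToHz(peak) + " Hz");
    }
}
```

Because the search starts at the first bin of the range, a loud bin outside it (bin 0 in this example) cannot be picked as the peak.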

testing the feasibility of the frequency analysis code

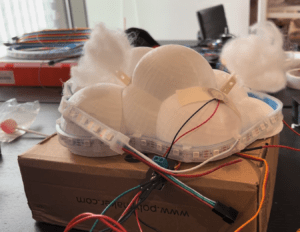

I got a lot of useful and constructive suggestions from the user test. In my original design, users needed to pat the cloud to start the journey (I used a pressure sensor). Professor Andy suggested that I add a cue, such as a blinking LED. I adopted this suggestion because I agreed that I needed something to show users what I wanted them to do, and that a visual cue would work better than a written instruction. It didn’t help much in the IMA space because the surroundings were too bright for anyone to notice the blinking LED, but it worked fine when I tested it in a darker room.

the blinking LED indicating the pressure sensor

I also got feedback from the user test that my physical alarm clock was shaped like a cloud, which was not clearly related to my theme of childhood memories with grandma and could be somewhat confusing. Kevin therefore suggested that the visualization page could also have some clouds. After the user test, I not only added clouds as a visual element but also animated them, which matched my storytelling well.

Fabrication and production

Every project is completed through trial and error, and it is through these experiments and iterations that the work finally reaches a satisfactory outcome. Here are some of the iterations I went through in fabrication and production:

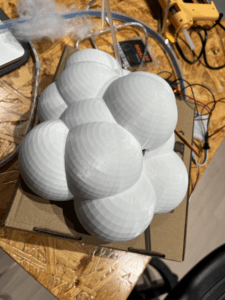

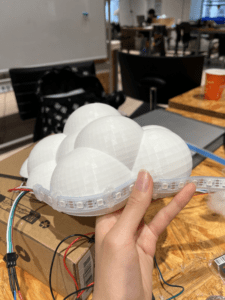

- The cloud alarm clock

1. 3D print the cloud model

(I tried to model it myself by combining several spheres, but I found that the STL I downloaded from the website Printables worked better.)

2. Stick NeoPixel LEDs onto the cloud

(Applying hot glue to the back of the strip doesn’t work well because the glue sticks to the 3D-printed material much better than to the strip material. So I switched to hot glue rings like this.)

3. Add servos

(At first I used delay() when coding the servos, but that caused problems for the LEDs driven by FastLED. Professor Rudi pointed out that this is because the LEDs require very fast signal transmission, so I can’t slow the loop down with delay(). Instead, I should use millis() to time the servo.)

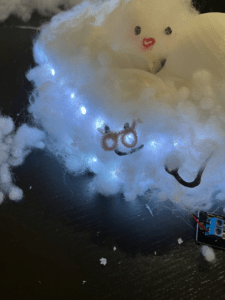

4. Customize the design

The two small clouds represent the two moods of the girl, indicating that the girl likes to stick to her grandma (the big cloud) whatever mood she is in.

5. Some more decoration
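Going back to the servo step, the switch from delay to millis can be illustrated with a small timing sketch. It is written in Java to match the Processing code in this post; it is not the Arduino code from the project, and the class name `IntervalTimer`, the 500 ms servo interval, and the 10 ms loop step are all hypothetical. The idea is that the loop never pauses: it checks the clock on every pass and only moves the servo once enough time has gone by, so the LEDs can still be refreshed on every pass.

```java
public class IntervalTimer {
    private final long intervalMs;
    private long lastFired;

    public IntervalTimer(long intervalMs, long startMs) {
        this.intervalMs = intervalMs;
        this.lastFired = startMs;
    }

    /** Returns true at most once per interval and never blocks the caller. */
    public boolean ready(long nowMs) {
        if (nowMs - lastFired >= intervalMs) {
            lastFired = nowMs;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        IntervalTimer servoTimer = new IntervalTimer(500, 0); // hypothetical 500 ms step
        int servoMoves = 0;
        int ledUpdates = 0;
        // Simulated main loop: one pass every 10 ms for 2 seconds.
        for (long now = 0; now <= 2000; now += 10) {
            ledUpdates++;                 // the LEDs are refreshed on every pass
            if (servoTimer.ready(now)) {  // the servo moves only when its interval has passed
                servoMoves++;
            }
        }
        System.out.println(ledUpdates + " LED updates, " + servoMoves + " servo moves");
    }
}
```

On the Arduino side, the value passed as `nowMs` would come from millis(), and the body of the `if` would be the servo write.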

- The voice pitch visualization interface

1. As I mentioned before, I tried to add animations to this page, and there were some technical challenges in achieving this. Static images like my cloud and background are easy to animate, but each point of this line is drawn from a dynamically changing array based on real-time data collected from the microphone, which made it more difficult. I thought for a whole day before I came up with the solution, and I am grateful to my friend Daisy for her help and inspiration. Basically, I don’t need to change how I store the values in the doty array every frame. I just need to spread the dotx values to three times their original length and have every one of them move to the left at a fixed speed, as the code below shows.

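        // Append the newest point, then shift and redraw the whole trail.
        dotx.add(cx);
        doty.add(smoothed);
        for (int i = 1; i < dotx.size(); i++) {
          stroke(25,7,94);
          strokeWeight(5);
          // Move this point 2 px to the left so the line scrolls.
          float newValue = dotx.get(i) - 2;
          dotx.set(i, newValue);
          // Main strand of the yarn.
          line(dotx.get(i-1), doty.get(i-1), dotx.get(i), doty.get(i));
          // Two thinner strands, 5 px below and above the main one.
          stroke(13,100,9);
          strokeWeight(3);
          line(dotx.get(i-1), doty.get(i-1)+5, dotx.get(i), doty.get(i)+5);
          line(dotx.get(i-1), doty.get(i-1)-5, dotx.get(i), doty.get(i)-5);
        }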

2. What’s more, I smoothed the y value, which not only makes the line more pleasant to the eye but also simulates the yarn more vividly.

        cx += 2;
        cy = height - peakIndex2*30;
        smoothed = smoothed * (1.0 - smoothing) + cy * smoothing;

3. Many users said that they are not very musical and cannot sing in key with the original song (that is, the piano prompt). Therefore, I added numbered musical notation at the bottom of the page, which has the added benefit that as long as users follow the notation, their rhythm can easily match the song.
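The smoothing line above, smoothed = smoothed * (1.0 - smoothing) + cy * smoothing, is an exponential moving average: each frame, the drawn value moves a fixed fraction of the way toward the newest target. The sketch below isolates that step so the effect is easy to follow with numbers. The class name `PitchSmoother`, the 600 px canvas height, and the smoothing factor of 0.5 are assumptions for illustration; the post does not say what values the project used.

```java
public class PitchSmoother {
    private final float smoothing; // between 0 and 1; smaller values give a slower, softer line
    private float smoothed;

    public PitchSmoother(float smoothing, float start) {
        this.smoothing = smoothing;
        this.smoothed = start;
    }

    /** Maps a peak bin index to a screen y, as in cy = height - peakIndex * 30. */
    public static float targetY(int height, int peakIndex) {
        return height - peakIndex * 30;
    }

    /** Moves the smoothed value a fraction of the way toward the target. */
    public float update(float target) {
        smoothed = smoothed * (1.0f - smoothing) + target * smoothing;
        return smoothed;
    }

    public static void main(String[] args) {
        int height = 600;                                        // assumed canvas height
        PitchSmoother yarn = new PitchSmoother(0.5f, height);    // assumed smoothing factor
        float target = targetY(height, 10);                      // the singer jumps to bin 10
        for (int frame = 1; frame <= 4; frame++) {
            System.out.println("frame " + frame + ": y = " + yarn.update(target));
        }
    }
}
```

With these assumed numbers the target is y = 300, and the line eases toward it in halving steps (450, 375, 337.5, ...) instead of jumping there in one frame, which is what gives the yarn its soft curve.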

This page has improved a lot compared to its first version:

before

after

Conclusion

the final version of my documentation video

“Get Up Grandma” combines technology and emotion to bridge the gap between a girl and her grandmother. This interactive alarm clock uses voice analysis, combined with visual elements like a ball of yarn, to bring back memories through songs they used to sing together. The final product includes a 3D-printed cloud, LEDs, and an animated voice pitch visualization, all working together to create a warm and nostalgic experience. In essence, “Get Up Grandma” is a heartfelt gift, ensuring the grandmother feels the granddaughter’s love and presence every morning, even when they are apart.

Video recording at the IMA show

At the IMA show, although the singing part turned out to be too complicated for the children to understand, they still had a lot of fun playing with my project! Their parents were interested, too. This result made me realize how much the audience matters in project design. My fellow students, as my target audience, could easily understand what my project was doing, but my design was not very suitable for children. This inspires me to put the audience at the center when designing interactive experiences in the future, and to think about my design from their perspective.

If I had more time to improve my project, I would optimize my code so that it runs more smoothly. I might also add the second stage I imagined: a body warm-up exercise where users can relive the memories of practicing Wuqinxi with Grandma (a warm-up exercise somewhat similar to Tai Chi but more popular among elderly people in China).

Disassembly

Appendix

my code from Arduino and Processing

wiring diagram (made with TinkerCad Circuits)

Cloud 3D Model by creality_creator_1510. Retrieved from Printables under Creative Commons Public Domain License. Available at: https://www.printables.com/model/334549-cloud

Some images used in this project were generated using DALL-E, an AI model developed by OpenAI. These images were created to enhance the visual elements of the project.

Special thanks to Professor Andy, Gottfried, and Rodolfo for their kind guidance and support, and to the IMA community fellows who assisted with this project and its documentation.