Project name: Puppet

Conception and Design

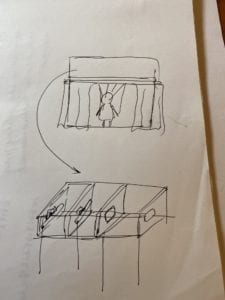

We decided on the goal of our project at the very beginning: to convey the theme of "social expectation". The original idea was to let the user play the role of the various forces in society that push us to behave in certain ways, while the puppet represents ourselves, the ones being controlled. The original plan was therefore to let the user set a pose for the puppet in Processing and send the pose data to the Arduino. The real puppet on the miniature stage would first cycle through several random poses and finally hold the pose the user had set.

In the first week, we started preparing the materials with this plan in mind. The most important element of our project is the puppet. We looked for one that was not funny or childish, to make our theme more distinctive, and finally bought a vintage puppet with 30 years of history. We expected the final stage to be fairly large, and laser-cut boards might not provide enough material for a stage of that size, so we used cardboard cartons instead. To give the stage a dramatic atmosphere, we bought velvet to cover its surfaces, as well as a mini track lamp to attach to the top of the stage. For the Arduino part, we decided to use four servos connected to strings to move the puppet's arms and legs. To make the mechanism more stable, we 3D-printed some components and attached them to the servos with hot glue. We also used red velvet for the stage curtain; since sewing it required professional skills, we had it made at a tailor's shop, and the result was satisfying.

Fabrication and Production

To create the image of the puppet in Processing, I first tried to draw a cartoon version of it in code. I eventually gave up, because it was too complicated and the result might not have been satisfying given the limitations of drawing with code. Instead, I drew the image in a digital painting app called Procreate. I drew each part of the puppet's body on a separate layer, so we could load the parts into Processing as individual images and rotate them independently. We first used keyboard and mouse interaction to let the user control the movement of the digital puppet, and we finished that version of the code. However, when we shared our ideas with the IMA fellows, they pointed out that such a simple process would make it hard for users to see our theme of social expectation. Besides, it might not make sense to control the puppet through Processing instead of controlling it directly. Presenting the digital puppet and the physical puppet at the same time also made the two seem to compete with each other. From our own perspective, we felt that the interaction in our project was weak and the theme vague, so we modified the plan. We decided to make the stage curtain automatic: a motor would wind up the string attached to the front of the curtain and pull it open. I also changed the Processing image to black and white, so that we could project it onto the wall, where it would look like a huge shadow looming over the real puppet.
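
Keeping each body part on its own layer means each limb can be rotated independently around a joint. In the Processing code for this project, this is done by calling `translate()` to move to a pivot point and then `rotate()` before drawing the arm image. The sketch below shows the equivalent arithmetic for a single point in plain C++, assuming angles in radians and screen coordinates with y pointing down; the `Point` type and the `rotateAroundPivot` function are hypothetical names used only for illustration.

```cpp
#include <cmath>

// Hypothetical 2D point type, for illustration only
struct Point {
    double x;
    double y;
};

// Rotate point p around pivot by angle (radians). This is the same
// transformation that translate(pivot) followed by rotate(angle) applies
// to anything drawn at offset (p - pivot). With y pointing down, as on
// screen, a positive angle turns clockwise.
Point rotateAroundPivot(Point p, Point pivot, double angle) {
    double dx = p.x - pivot.x;
    double dy = p.y - pivot.y;
    double c = std::cos(angle);
    double s = std::sin(angle);
    return {pivot.x + c * dx - s * dy,
            pivot.y + s * dx + c * dy};
}
```

Rotating around the pivot rather than the image corner is what keeps a shoulder in place while the rest of the arm swings.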

However, our plan changed again after user testing. Professor Marcela also found our theme very vague, and we shared our concerns with her. She gave us several valuable suggestions. First, she suggested that we use the cross, the control bar that came with the real puppet, so that users could control the puppet's movement directly. She also suggested using a webcam to capture the user's face and placing it on the head of the digital puppet, which would make the logic clear: the user is also being controlled. In addition, we received a suggestion to give the puppet a voice so that it could say something. These suggestions were extremely valuable to us, and after user testing we changed almost everything in our project.

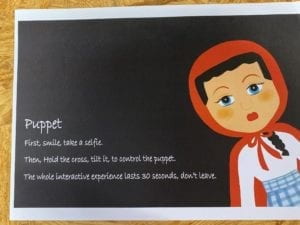

First of all, we asked the fellows which sensor we could use to control the puppet's movement directly. They suggested an accelerometer, so that the angle at which the puppet's arms and legs rise and fall would follow the angle at which the user tilts the cross. In addition, since it is hard to capture users' faces while they are moving, Professor Eric suggested taking a picture of the user at the beginning. He helped us with the code, and we ended up framing this step as asking users to take a selfie.

I wrote a script and recorded my own voice as the puppet's voice. The lines include "What should I do?", "What if they will be disappointed at me?", and "What if I cannot fit in?". The last line is "Hey friend, do you know, you are just like me." After this last line, the digital puppet is shown with the user's face in its head, conveying the logic that while we are controlling others, we are also being controlled.

However, we ran into problems with the Arduino part. The night before the presentation, we tested the accelerometer, hoping everything would work, but the computer could not even find the port it was connected to. Moreover, our earlier testing had shown that the accelerometer was quite unstable and sensitive, which made it hard to control the movement of the real puppet. Professor Marcela suggested replacing the accelerometer with tilt sensors, which are more stable. We took her advice and rewrote the code again. A tilt sensor works like a button: tilting it past a certain angle flips it between on and off, which can trigger a behavior. In our case, we used two tilt sensors, one controlling the arms and one controlling the legs. The logic is that when the left arm rises, the right arm falls, and vice versa. Because a tilt sensor only reports on or off, it was also easier to send its data to Processing.
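
The mirrored logic and the on/off data can be summarized in a few lines. The sketch below is plain desktop C++ rather than an Arduino sketch, so it can be run without hardware. It follows the Arduino code for this project: a HIGH arm sensor gives an offset of +90 degrees (otherwise -90), a HIGH leg sensor gives +30 (otherwise -30), and each pair of servos is written at 90 + offset and 90 - offset. The `Pose` struct and the `poseFromTilt` and `serialLine` functions are hypothetical names.

```cpp
#include <string>

// Hypothetical container for one pose: four servo angles (0-180 degrees)
// plus the two offsets that are sent to Processing
struct Pose {
    int servo1, servo2;  // arm servos
    int servo3, servo4;  // leg servos
    int armOffset, legOffset;
};

// Map the two on/off tilt readings to mirrored servo angles.
// Each sensor has only two states, so there are only four possible poses.
Pose poseFromTilt(bool armHigh, bool legHigh) {
    int arm = armHigh ? 90 : -90;
    int leg = legHigh ? 30 : -30;
    // One servo of each pair gets 90 + offset and the other 90 - offset,
    // so when one limb rises, its partner falls
    return {90 + arm, 90 - arm, 90 + leg, 90 - leg, arm, leg};
}

// Format the offsets the way the Arduino prints them: "arm,leg" + linefeed
std::string serialLine(const Pose& p) {
    return std::to_string(p.armOffset) + "," + std::to_string(p.legOffset) + "\n";
}
```

For example, `poseFromTilt(true, false)` drives the arm servos to 180 and 0, the leg servos to 60 and 120, and produces the line `90,-30`.
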

The digital image in Processing changes along with the real puppet, following its poses. After we had everything working, I made a poster with instructions for users and an explanation of our project's theme.
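
Because the Arduino only sends two discrete values, the digital puppet would otherwise jump from pose to pose. The Processing code for this project eases each value instead, moving it a fixed fraction (0.22) of the remaining distance every frame with `lerp()`, and only accepts a serial line that splits into exactly two comma-separated values. The C++ sketch below reproduces both steps; `parseLine`, `easeToward`, and `targetLeftArmAngle` are hypothetical names, and the left-arm target (PI/4 plus `radians(a/2)`, with integer division) is taken from the Processing code.

```cpp
#include <cmath>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Same formula as Processing's lerp(): move t of the way from start to stop
double easeToward(double start, double stop, double t) {
    return start + (stop - start) * t;
}

// Split a line such as "90,-30\n" into integers. Returns an empty vector
// unless exactly `expected` numeric values are present.
std::vector<int> parseLine(const std::string& line, std::size_t expected) {
    std::vector<int> values;
    std::stringstream ss(line);
    std::string field;
    while (std::getline(ss, field, ',')) {
        try {
            values.push_back(std::stoi(field));  // skips whitespace, stops at '\n'
        } catch (const std::exception&) {
            return {};  // non-numeric field: ignore the whole line
        }
    }
    if (values.size() != expected) values.clear();
    return values;
}

// Left-arm target rotation: PI/4 + radians(a/2), where a is +90 or -90
double targetLeftArmAngle(int a) {
    const double pi = std::acos(-1.0);
    return pi / 4 + (a / 2) * pi / 180.0;
}
```

Calling `easeToward` once per frame makes the arm cover 22% of the remaining gap each time, so it moves quickly at first and settles gently into the new pose.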

Conclusions

Our project aligns well with my definition of interaction. In my preparatory research and analysis, I wrote my personal definition of a successful interactive experience. In my view, the interaction process should be clear to users, so that they get a basic sense of what to do to interact with the device. It can involve various means of expression, such as visuals and audio, and it can be thought-provoking, reflecting facts of real life. My partner and I made a small game device for our midterm project, so this time we challenged ourselves to create an artistic one. Our project addresses people who, intentionally or compulsively, cater to the social roles imposed on them by forces in society. We showed the logic that while we are controlling others, we are also being controlled by others. It is genuinely hard to convey a theme through an interactive art installation, and it was hard for us to find the delicate balance between triggering the user's thoughts and making everything too heavy. The visual effect of our project is satisfying, and we also used music and voice as additional means of expression. The user's interaction with our project is direct and clear: instead of pressing cold keyboard buttons, users hold the cross, listen to the puppet's monologue, and thus build an invisible bond of empathy with the real puppet. After the final presentation, we received several more valuable suggestions. If we had more time, we would try to make the interactive process longer and add more means of interaction, so that users have more time to think deeply about the theme. There are many ways to express our theme, and each could lead to an entirely different result. We are given possibilities, but we may also get lost among them.

The most important thing I learned from this experience is to always be clear from the beginning about what I am trying to convey and what my goals are. Without a clear theme in mind, we are likely to lose direction, and the final work could become a mixture of disordered ideas.

Video of the whole interactive experience:

Arduino Code:

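```cpp
#include <Servo.h>

// One servo per limb string: two for the arms, two for the legs
Servo myservo1;  // arm servo (pin 3)
Servo myservo2;  // arm servo, mirrored (pin 5)
Servo myservo3;  // leg servo (pin 6)
Servo myservo4;  // leg servo, mirrored (pin 9)

int angleArm = 0;  // arm offset from the 90-degree center position
int angleLeg = 0;  // leg offset from the 90-degree center position

const int tiltPin1 = 2;  // tilt sensor controlling the arms
const int tiltPin2 = 4;  // tilt sensor controlling the legs

int tiltState1 = 0;
int tiltState2 = 0;

void setup() {
  Serial.begin(9600);
  myservo1.attach(3);
  myservo2.attach(5);
  myservo3.attach(6);
  myservo4.attach(9);
  // Plain INPUT: the tilt-sensor circuit needs its own pull-down (or pull-up) resistor
  pinMode(tiltPin1, INPUT);
  pinMode(tiltPin2, INPUT);
}

void loop() {
  // reasonable delay between readings
  delay(250);

  tiltState1 = digitalRead(tiltPin1);
  tiltState2 = digitalRead(tiltPin2);

  // Each tilt sensor is either on or off, so each limb pair has two poses
  if (tiltState1 == HIGH) {
    angleArm = 90;
  } else {
    angleArm = -90;
  }

  if (tiltState2 == HIGH) {
    angleLeg = 30;
  } else {
    angleLeg = -30;
  }

  // Mirrored angles: when one arm (or leg) rises, the other falls
  myservo1.write(90 + angleArm);  // 180 or 0
  myservo2.write(90 - angleArm);  // 0 or 180
  myservo3.write(90 + angleLeg);  // 120 or 60
  myservo4.write(90 - angleLeg);  // 60 or 120

  // Send "angleArm,angleLeg" followed by a linefeed to Processing
  Serial.print(angleArm);
  Serial.print(",");  // put a comma between sensor values
  Serial.print(angleLeg);
  Serial.println();   // add a linefeed after the last value

  delay(100);
}
```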

Processing Code:

```processing
import processing.sound.*;
import processing.video.*;
import processing.serial.*;

SoundFile sound;   // the puppet's recorded monologue
SoundFile sound1;  // background music

Capture cam;
PImage cutout;     // the user's face, cropped from the webcam

String myString = null;
Serial myPort;
int NUM_OF_VALUES = 2;  // angleArm and angleLeg from the Arduino
int[] sensorValues;     // values received from the Arduino

// Body-part layers drawn in Procreate
PImage background, body, body2;
PImage arml, armr, legl, legr;
PImage stringal, stringar, stringll, stringlr;

// Vertical positions of the string images (arm left/right, leg left/right)
float yal = 100;
float yar = 0;
float yll = 0;
float ylr = 0;

// Current arm rotations and leg positions, eased toward their targets
float leftangle = PI/4;
float rightangle = -PI/4;
float leftleg = 570;
float rightleg = 570;
float armLerp = 0.22;  // fraction of the remaining distance covered per frame
float legLerp = 0.22;

void setup() {
  size(850, 920);
  setupSerial();

  cam = new Capture(this, 640, 480);
  cam.start();
  cutout = createImage(160, 190, RGB);

  background = loadImage("background.png");
  body = loadImage("body.png");
  body2 = loadImage("body2.png");
  arml = loadImage("arml.png");
  armr = loadImage("armr.png");
  legl = loadImage("legl.png");
  legr = loadImage("legr.png");
  stringal = loadImage("stringal.png");
  stringar = loadImage("stringar.png");
  stringll = loadImage("stringll.png");
  stringlr = loadImage("stringlr.png");

  sound = new SoundFile(this, "voice.mp3");
  sound1 = new SoundFile(this, "bgm.mp3");
  sound1.play();
  sound1.amp(0.3);
}

void draw() {
  updateSerial();
  // printArray(sensorValues);  // uncomment to debug the serial input

  if (millis() < 15000) {
    // First 15 seconds: selfie countdown, cropping the face region from the webcam
    if (cam.available()) {
      cam.read();
    }
    int xOffset = 220;
    int yOffset = 40;
    cutout = cam.get(xOffset, yOffset, cutout.width, cutout.height);

    background(0);
    imageMode(CENTER);
    image(cutout, width/2, height/2);
    fill(255);
    textSize(30);
    textAlign(CENTER);
    text("Place your face in the square", width/2, height-100);
    text(15 - millis()/1000, width/2, height-50);  // seconds remaining
  } else {
    // Play the monologue, restarting it whenever it finishes
    if (!sound.isPlaying()) {
      sound.play();
    }

    imageMode(CORNER);
    image(background, 0, 0, width, height);
    image(legl, 325, leftleg, 140, 280);
    image(legr, 435, rightleg, 85, 270);
    image(body, 0, 0, width, height);

    if (millis() >= 43000) {
      // Reveal: the user's face appears in the puppet's head and the voice is muted
      image(cutout, 355, 95);
      image(body2, 0, 0, width, height);
      sound.amp(0);
    }

    drawArmLeft();
    drawArmRight();

    image(stringal, 255, yal, 30, 470);
    image(stringll, 350, yll, 40, 600);
    image(stringar, 605, yar, 30, 475);
    image(stringlr, 475, ylr, 40, 600);

    // a = arm offset (+90 or -90), b = leg offset (+30 or -30) sent by the Arduino
    int a = sensorValues[0];
    int b = sensorValues[1];

    // Target pose: both arms rotate by a/2 degrees, legs shift in opposite directions
    float targetleftangle = PI/4 + radians(a/2);
    float targetrightangle = -PI/4 + radians(a/2);
    float targetleftleg = 570 + b*1.6;
    float targetrightleg = 570 - b*1.6;

    // Ease each value toward its target so the digital puppet moves smoothly
    leftangle = lerp(leftangle, targetleftangle, armLerp);
    rightangle = lerp(rightangle, targetrightangle, armLerp);
    leftleg = lerp(leftleg, targetleftleg, legLerp);
    rightleg = lerp(rightleg, targetrightleg, legLerp);

    // Move the string images together with their limbs
    yal = lerp(yal, -100 - a*1.1, armLerp);
    yar = lerp(yar, -120 + a*1.1, armLerp);
    yll = lerp(yll, -50 + b*1.3, legLerp);
    ylr = lerp(ylr, -50 - b*1.3, legLerp);
  }
}

// Rotate the left-arm layer around its pivot point
void drawArmLeft() {
  pushMatrix();
  translate(375, 342);
  rotate(leftangle);
  image(arml, -145, -42, 190, 230);
  popMatrix();
}

// Rotate the right-arm layer around its pivot point
void drawArmRight() {
  pushMatrix();
  translate(490, 345);
  rotate(rightangle);
  image(armr, -18, -30, 190, 200);
  popMatrix();
}

void setupSerial() {
  printArray(Serial.list());
  // The port index (11 here) depends on the computer: pick the Arduino's entry from the printed list
  myPort = new Serial(this, Serial.list()[11], 9600);
  myPort.clear();
  // Discard the first, possibly incomplete, line
  myString = myPort.readStringUntil(10);  // 10 = '\n' linefeed in ASCII
  myString = null;
  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil(10);  // 10 = '\n' linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      // Only accept complete lines with exactly NUM_OF_VALUES fields
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
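
The Processing `draw()` function is driven entirely by the time elapsed since the sketch started, as reported by `millis()`: a 15-second selfie countdown, then the monologue, and from 43 seconds on, the reveal of the user's face in the puppet's head. As a sketch, those thresholds can be gathered into one function so the timeline is easier to read and adjust. The `Phase` enum and the `phaseAt` and `secondsLeft` functions are hypothetical names; the thresholds are the ones used in the Processing code.

```cpp
// Hypothetical names for the three stages of the experience
enum class Phase { Selfie, Monologue, Reveal };

// Thresholds in milliseconds, matching the millis() checks in draw()
const long kSelfieEndMs = 15000;
const long kRevealStartMs = 43000;

// Which stage the experience is in after elapsedMs milliseconds
Phase phaseAt(long elapsedMs) {
    if (elapsedMs < kSelfieEndMs) return Phase::Selfie;
    if (elapsedMs < kRevealStartMs) return Phase::Monologue;
    return Phase::Reveal;
}

// Countdown shown during the selfie stage (15 - millis()/1000 in the original)
long secondsLeft(long elapsedMs) {
    return kSelfieEndMs / 1000 - elapsedMs / 1000;
}
```

With the thresholds in named constants, the reveal time can be changed in one place, for instance if the recorded monologue were re-recorded with a different length.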