Background

I knew going into this project that I wanted to create something related to human vs. computer vision. Instead of trying to contrast the two though, I thought it could be interesting to try to create a visual dialogue between them using videos and images. What came out of these efforts was object[i].className==”person”, an art/photography booklet that deals with human subject matter, or objects classified as “person.”

The theme of human vs. computer perception is something I’ve tried to explore throughout the course of the semester. Initially, I was interested in What I saw before the darkness by AI Told Me, which deals with computer vision when shutting off neurons of a neural network. I was greatly inspired by the duo Shinseungback Kimyonghun, specifically the works FADTCHA (2013) and Cloud Face (2012), which both involve finding human forms in nonhuman objects.

Methodology

I first started by using the ml5.js bodyPix() and poseNet() models to see where points of the body could be detected in outdoor spaces.

This was fine as a test, but didn’t provide any interesting or clear data that I felt I could use moving forward. I decided to switch to using the ml5.js YOLO() model to capture full, bounded images that could be used as content. To go along with this, using the webcam feed provided very low quality images as well; to improve this, I used video taken on a DSLR as input. This fixed issues with the image quality, but presented new issues with the bounding and image detection in itself–because the model couldn’t process at the same speed of the video, it would run at a delayed speed, resulting in inaccuracy. Instead, I slowed down the videos to 10% speed, and then it functioned much more smoothly.

I ran into some challenges with the code as well, mostly with how to get and save the images. It was fitting that the solution ended up being using a combination of the get() and save() functions. The final code was relatively simple.

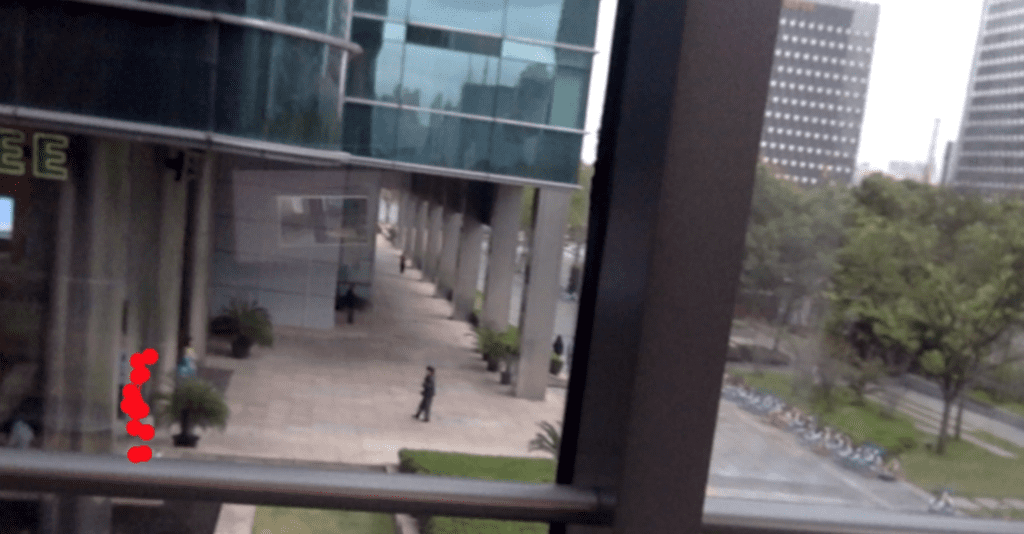

What came out of this was a lot of very interesting images. A few of these:

I then used my own vision and decisions to pull these images together into various compositions to form a book.

further development

I was really interested in this project and would love to continue to work on it. I think what would benefit the project the most would be to continue to enable this conversation between human and machine on the topic of perception, however that may be. One way would be to gather opinions or thoughts on this from other people and to incorporate those words. Since I don’t want this to have an argument or a theory to prove, but rather to be more of an experiment, I don’t think I would be too strict on which content to include or to not include, as long as it relates to this topic of perception and vision. After that, it’s difficult to say what I would do, since each step relies heavily on what results come previously.