Inspiration

As stated in my midterm proposal, my project mainly focuses on the phenomenon of imprinting, a behavior prominently found in birds during their first stages of life. The aim was to have the robot mimic the basic tendencies of these infant animals, namely, following a moving subject and adjusting its direction accordingly.

Imprinting was first recorded in domestic chickens, and people have used it for centuries to control animals for agricultural and other daily purposes. In rural China, farmers imprinted ducklings on special ‘sticks’, which they would then use to lead the ducklings out to the rice paddies to control the snail population.

Imprinting appears to be pre-programmed in these animals through natural selection: in the first moments of an animal’s life, following a nearby moving organism is vital to its safety. There is, however, a critical period for imprinting, which typically spans the first 36 hours after the animal hatches or is born. Once the animal has imprinted on an object or organism, it is said to be ‘fixed’, meaning that it will be nearly impossible for it to unlearn this association, even in adulthood. In this case, the phenomenon is known as ‘damage imprinting’.

Here is a video of imprinting in action:

From this video, you can see that these ducklings will continue to follow the cameraman as he moves around in various directions, even moving their heads to look at him as he loops around the room.

Despite this being a rather simple behavior, I feel that it’s something we all experience as living creatures; our pet dogs will run to us when they see us appear, and small children will recognize and follow trusted family members or friends. To have a robot follow a human around means that it is registering the individual as something, regardless of whether or not it knows what it is, and there is something quite lifelike in this behavior.

Implementation

My first step was to have the robot detect objects in front of it and move towards them accordingly. This was easy; using the ultrasound sensor, the bot could “see” objects and adjust its motor speed. However, the turning behavior of the robot posed a huge issue, as the kittenBot’s turns were very delayed and robotic. Therefore, I had to find another way to have the bot detect objects and turn towards them.
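A minimal sketch of this first approach, assuming the motor speed is simply scaled with the measured distance (the exact rule I used is not recorded here). The function name, the thresholds, and the 0 to 100 speed range are hypothetical, and this is plain Python for illustration rather than the code that ran on the bot.

```python
def approach_speed(distance_cm, stop_cm=10.0, max_range_cm=60.0, max_speed=100):
    """Map one ultrasound reading (cm) to a forward motor speed.

    All thresholds are hypothetical placeholders, not measured values.
    """
    # No echo, or object too far away: nothing to approach.
    if distance_cm is None or distance_cm > max_range_cm:
        return 0
    # Already close enough to the object: stop.
    if distance_cm <= stop_cm:
        return 0
    # In between: drive faster the further away the object is.
    fraction = (distance_cm - stop_cm) / (max_range_cm - stop_cm)
    return round(max_speed * fraction)


if __name__ == "__main__":
    for reading in (5, 35, 60, 100):
        print(reading, "cm ->", approach_speed(reading))
```

With a single forward-facing sensor this only controls how fast the bot drives; it gives no information about which way to turn, which is the limitation described above.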

Attraction behavior using one ultrasound sensor:

After thinking about the problem for quite a while, I had a breakthrough moment while looking through images of insects and spiders (I was trying to find other ways of sensing because the ultrasound was being finicky). Most spiders have eight eyes, positioned across their ‘head’ to increase their field of view. I wondered if I could simply place one ultrasound sensor on each side of the bot and connect each sensor to one motor. Then, if one sensor detects an object and the other does not, only the motor paired with the non-detecting sensor moves, turning the bot towards the object in front of the sensor that did detect it. In other words, the process looked something like this:

| Case | Sensor 1 | Sensor 2 | Motor 1 | Motor 2 |
|---|---|---|---|---|
| Turning right | no object | detects object | move | don’t move |
| Turning left | detects object | no object | don’t move | move |
| Moving straight forward | detects object | detects object | move | move |
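Read as a truth table, the three cases above amount to each motor running whenever the sensor on the other side sees something. Here is a small sketch of that rule in plain Python. The function names and the 30 cm threshold are hypothetical, and stopping both motors when neither sensor detects anything is my assumption, since that case is not listed above.

```python
def detects(distance_cm, threshold_cm=30.0):
    """True if a reading counts as 'object detected' (threshold is hypothetical)."""
    return distance_cm is not None and distance_cm <= threshold_cm


def steer(sensor1_detects, sensor2_detects):
    """Return (motor1_on, motor2_on) for the two detection flags.

    Motor 1 runs when sensor 2 detects, and motor 2 runs when sensor 1
    detects, so the bot pivots towards whichever side sees the object.
    If neither sensor detects anything, both motors stay off (assumption).
    """
    motor1_on = sensor2_detects
    motor2_on = sensor1_detects
    return motor1_on, motor2_on


if __name__ == "__main__":
    # Object only in front of sensor 2: motor 1 moves, bot turns right.
    print(steer(detects(80), detects(20)))
    # Object only in front of sensor 1: motor 2 moves, bot turns left.
    print(steer(detects(20), detects(80)))
    # Object in front of both sensors: both motors move, bot goes straight.
    print(steer(detects(20), detects(20)))
```

Because each motor only ever looks at one sensor, there is no separate "turn" routine to wait on, which is what makes the turns quicker than with the single-sensor setup.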

First Prototype:

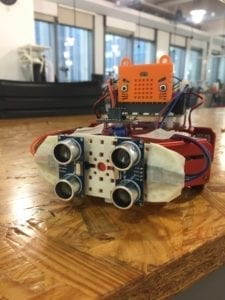

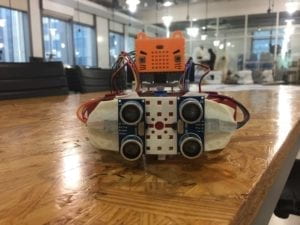

As you can see, I removed the original kittenBot ultrasound sensor and attached two Arduino ultrasound sensors, one on each side of the bot.

Here is the test run of the bot’s individual ‘motor-to-ultrasound’ connections:

From this video, we can see that each motor is indeed connected to its respective ultrasound sensor, which is the basis for achieving smooth, quick turns. However, I realized that my current setup of taping the sensors directly to the bot was not very stable, as they would occasionally jitter. Therefore, I added a plastic plate to the front of the bot and securely taped the ultrasound sensors to it:

After everything was securely fastened to the bot, and both motors and sensors were working properly, it was time to test out one bot to see if it would ‘imprint’ on objects and follow them in a quick, reactive motion.

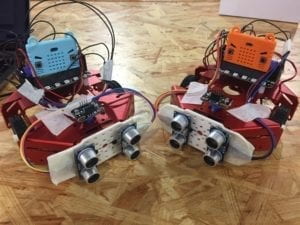

I was quite happy with the results of this, so I just duplicated the steps to create a second robot.

Judging from the behavior of the first robot, I expected the second robot to be able to follow the first bot with no problems. The only issue I could imagine would be the speed of the two robots, where the ‘leader’ bot would either be too slow or too fast for the ‘follower’ bot.

Here is the result of trying to recreate the duckling squad imprinting effect:

Interestingly, the bots managed to create a ‘conga line’ effect quite well; the only issue I saw was that the follower bot would sometimes lag behind the leader and then lose detection once the leader moved beyond the distance threshold.
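To illustrate why a lagging follower eventually loses the leader, here is a toy one-dimensional model in plain Python. It is not the code that ran on the bots, and every number in it (step sizes, the 30 cm threshold, the starting gap) is a hypothetical placeholder. The follower only moves while the gap is within the detection threshold.

```python
def ticks_until_lost(leader_cm_per_tick, follower_cm_per_tick,
                     threshold_cm=30, start_gap_cm=15, min_gap_cm=5,
                     max_ticks=100):
    """Return the tick at which the follower loses the leader, or None.

    Toy model with hypothetical numbers: both bots move along one line,
    and the follower can only 'see' the leader within threshold_cm.
    """
    gap = start_gap_cm
    for tick in range(max_ticks):
        gap += leader_cm_per_tick          # leader always drives on
        if gap > threshold_cm:
            return tick                    # leader is now out of range
        # Follower closes the gap, but never gets closer than min_gap_cm.
        gap = max(min_gap_cm, gap - follower_cm_per_tick)
    return None


if __name__ == "__main__":
    print(ticks_until_lost(1, 1))  # matched speeds: never lost
    print(ticks_until_lost(2, 1))  # slower follower: lost after a while
```

In this model a follower that is even slightly slower than the leader always falls out of range eventually, so matching the two speeds or raising the detection threshold would be the obvious things to try.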

Reflection

I think that the phenomenon of imprinting is very intriguing in that it is a pre-programmed behavior in living organisms. In a way, this is an example of robotic behavior within non-robotic organisms (following a designated point from start to finish). However, having something react in real time to sudden changes in direction or movement, and even follow specific organisms, is also a good marker of lifelike behavior. It would be interesting to have my ‘leader’ bot programmed to exhibit more randomized behavior, and have the ‘follower’ bot mimic these actions as well.

Sources

“My Life as a Turkey.” PBS, Public Broadcasting Service, 21 Oct. 2014, www.pbs.org/wnet/nature/my-life-as-a-turkey-whos-your-mama-the-science-of-imprinting/7367/.

Brink, T. L. Psychology: A Student Friendly Approach. “Unit 12: Developmental Psychology.” 2008, p. 268.