Introduction

To stay true to the title of the class and create something interactive with the techniques we covered, I explored style transfer. The project started as an extension of my emotion classifier / style transfer midterm. However, I decided to remove the emotion classifier for performance reasons, and instead focused on building an interactive experience around the style transfer. Throughout the class we’d studied artists and painters, so I thought it would be interesting to explore how some artists might have perceived the world around us. The name of the concept is also partially inspired by Aven’s GitHub repo named “what_would_monet_see”. The idea is to perform a style transfer on an input image and use the output as the backdrop of the canvas. A sketch is placed over it to represent what an artist might have started with before painting.

Concept

For the user interface, I wanted to provide several options for artist styles, as well as a way to select different images. This required quite a bit of logic to enable users to select a new image, reset any drawings, and select new styles. An example of the UI layout is shown below.

When the user first loads the page, they see several options for painting styles and an input image.

The user must select a painting style before starting to paint. Once a style is selected, a sketch of the image is projected and the user can begin to use their mouse to paint.

The user is also able to cycle through and load different painting styles derived from trained neural style transfer models.

Finally, the user is also able to select different input images to test painting styles. I also included some examples of paintings from several artists to explore how other artists may have painted a similar subject.

Here’s how Francis Picabia may have painted The Starry Night:

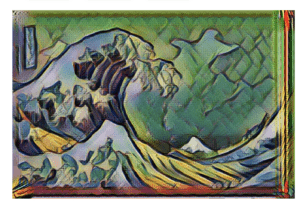

Or how Picasso might have painted The Great Wave.

Challenges & Considerations

Since neural style transfer is a relatively recent technique, few pretrained models are available. I used the neural style training process we established in class to train several models myself. I also load models dynamically, only when a style is needed, to reduce the computational footprint while the user explores the page. The biggest challenge in displaying images and running inference on them is the limit on client resources. Client-side neural style transfer is limited to roughly 300,000 pixels (around 500×600). Therefore, a limit on the height and size of images is used to prevent overload.
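To make the pixel limit concrete, here is a minimal sketch of how an image's dimensions could be scaled down to fit a 300,000-pixel budget while keeping its aspect ratio. This is not the code used in the project, which runs in the browser. It only illustrates the arithmetic, and the names `MAX_PIXELS` and `fit_within_budget` are made up for this example.

```python
from __future__ import annotations

import math

# Approximate client-side budget quoted above (about 500 x 600).
MAX_PIXELS = 300_000


def fit_within_budget(width: int, height: int,
                      max_pixels: int = MAX_PIXELS) -> tuple[int, int]:
    """Scale (width, height) down, preserving aspect ratio, so that
    width * height <= max_pixels. Images already under budget are unchanged."""
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    if width * height <= max_pixels:
        return width, height

    # Scaling both sides by s scales the area by s**2, so s = sqrt(budget / area).
    scale = math.sqrt(max_pixels / (width * height))

    # Round down so the result never exceeds the budget; the clamps keep each
    # side at least 1 pixel and guard against extreme aspect ratios.
    new_w = min(max(1, math.floor(width * scale)), max_pixels)
    new_h = min(max(1, math.floor(height * scale)), max_pixels // new_w)
    return new_w, new_h
```

For example, a 1000×1200 image has four times the allowed area, so both sides are halved and it comes out at 500×600.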

Future Work

In the future, I’d like to implement several features. The first is the ability for users to upload their own images to see how artists may have painted them. To do this, a binarization algorithm would need to be developed to create the sketch effect. I’d also like to add a way to train new painting styles. While training could take hours, some kind of queue for training jobs would enable far more painting styles. The last feature would be another layer of interactivity using MobileNet, so that users can use their hands to paint instead of a cursor. I imagine that something like this would work well as an art installation at a museum.
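As a starting point for the binarization step, here is a minimal sketch of one of the simplest approaches: a global threshold on a grayscale image. It is an assumption about how this could be done, not something already built for the project, and a convincing sketch effect would likely need more (edge detection, for instance). The function name `binarize` and the choice of the mean brightness as the default threshold are purely illustrative.

```python
def binarize(gray, threshold=None):
    """Turn a grayscale image into black ink (0) on white paper (255).

    gray: list of rows, each a list of ints in 0..255.
    threshold: pixels darker than this become ink; defaults to the mean
    brightness of the image (an illustrative choice, not a tuned one).
    """
    if not gray or not gray[0]:
        return []
    if threshold is None:
        total = sum(sum(row) for row in gray)
        count = sum(len(row) for row in gray)
        threshold = total / count
    return [[0 if pixel < threshold else 255 for pixel in row] for row in gray]
```

In the browser, the same per-pixel rule could be applied to the pixel data of an uploaded image before drawing it over the styled backdrop.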