Title: When The Mind Meets The Eye

Professor: Eric Parren

Producer: Isaac Schlager

In the earliest stages of our final project, although we knew very little about which direction to take, my partner and I knew that we wanted to create some form of interaction centered on art. As we each researched further, we found a problem common to art museums worldwide: a lack of connection or interaction between visitors and the artworks, particularly the most popular ones. One article I came across discussed the Mona Lisa and how many people who visit her at the Louvre leave disappointed. I cited this in my project proposal documentation, along with our goal of mitigating the problem through a new form of interaction built with Arduino and Processing. Neither of us realized how far this project would come from its planning stages, and we are both grateful for everything we learned while working on it.

The foundation for our interaction was what we called an “elevated” experience between the user and art. Of course, we first needed to define what “elevated” meant, so we took some time to brainstorm ideas before our next meeting. The direction we chose was inspired by a common trend in museums: taking selfies in front of works of art, particularly the Mona Lisa. We thought about why people take pictures with art and concluded that one reason is to create a personal connection, a personalized image of yourself with the piece. We therefore wanted our project to let the user interact with a recognizable artwork and insert themselves into it by creating their own version. We also wanted to go beyond letting users simply create whatever they wanted. To add an element of surprise, users would influence and create their personalized version without knowing what the outcome would be. To achieve this, we decided to ask a series of questions, each with a range of answers to choose from, and to draw an image based on those answers. Rather than drawing something completely new, we kept the Mona Lisa itself as our template because the painting is so widely known. One thing we learned from this decision was how much impact hand-drawn or hand-painted assets can have when imported into a project. We originally considered making a collage of images from the internet, but we became aware of the copyright issues, as well as how little creativity that approach would involve. We therefore drew the Mona Lisa, along with all of her variations, in Illustrator.
The changes, that is, the edits the user could make to the Mona Lisa, fell into three categories: the eyes, the mouth, and accessories. We omitted the nose because many emojis have no nose, which suggests the nose is not critical for expressing different emotions or personalities.

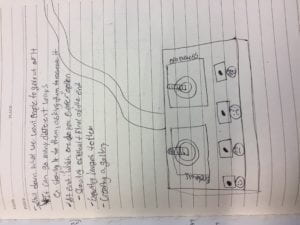

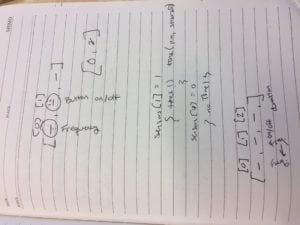

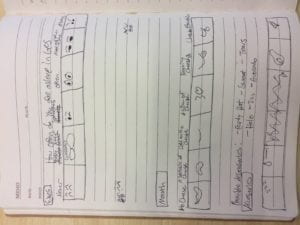

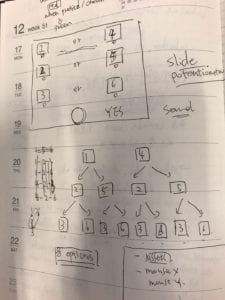

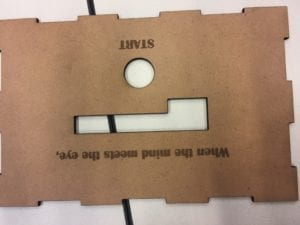

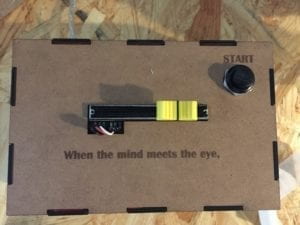

Below are some pictures of our planning process.

The idea of giving users options, and of having their answers shape the emotions of the Mona Lisa, led us to use a potentiometer for recording answers. We chose a slider potentiometer specifically because its visual effect matched the scale of answer options we wanted each question to present. We also decided to build a console with a single potentiometer and a start button. Building the console taught us that simplicity is sometimes better than flashiness, or that quality beats quantity. We originally planned to have one potentiometer per question, but we realized that multiple potentiometers could confuse the user, so we kept the design simple. We digitally fabricated the console out of wood because wood is light yet sturdy, and hard yet easy to shape. We determined that a simple box-shaped console would be much easier to build, test, and modify than a 3D-printed one.
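The slider's position reaches Processing as an answer index from 0 to 5, computed on the Arduino with `map(analogRead(sensor1), 0, 1023, 0, 5)` (see the Arduino code at the end of this post). Because `map()` uses integer arithmetic, it returns 5 only when the reading is exactly 1023, so the sixth answer sits at the very end of the slider's travel. The Java sketch below is a hypothetical comparison, not code from our project: `arduinoMap` repeats the integer formula `map()` uses, and `toBin` splits the 0 to 1023 range (assuming the default 10-bit reading, as in our code) into equal bins. On the Arduino the equal-bin version would be `analogRead(A0) * 6L / 1024`.

```java
// Hypothetical helpers comparing map()-style scaling with equal-width bins.
final class SliderBins {
    private SliderBins() {}

    /** Integer formula used by Arduino's map(): truncates toward zero. */
    static long arduinoMap(long x, long inMin, long inMax, long outMin, long outMax) {
        return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
    }

    /** Splits a 10-bit reading (0-1023) into `bins` equally sized ranges. */
    static int toBin(int reading, int bins) {
        int clamped = Math.max(0, Math.min(1023, reading));
        // 1023 * bins / 1024 is always below bins, so the result is 0..bins-1
        return clamped * bins / 1024;
    }
}
```

With six bins each answer covers roughly 170 readings, so every position on the slider is equally easy to land on.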

Below are images of the resources we used and the different parts of the console. We made two different designs and chose the one with the start button in the top-right corner.

Theming was another aspect we emphasized more than in my midterm project. Because we wanted an artsy aesthetic, we found a tan fabric and draped it over parts of the laptop we were using, as well as over a piece of cardboard, which gave the setup a Renaissance feel.

By the time user testing came around, we had accomplished many significant steps in our production process. First and foremost, the console was built and ready to use. We had also drawn all of the different versions, or “moods,” of the Mona Lisa, as well as the accessories. Our code could display three questions on the screen, each with its possible answers. A user could enter an answer to each question; the answers were stored in an array, and the corresponding features were then drawn on the Mona Lisa. In total, we had six pairs of eyes, six mouths, and four accessories to choose from. The mouths portrayed a range of emotions, from happy to sad to scared, and the same was true of the eyes. The accessories, on the other hand, referenced emotions as well as current pop culture and art: for example, an avocado, a party hat, an orb, and a sweat mark were all options the user could choose. Each question was tied to a specific type of feature, which we felt was best because the questions and features were then loosely related to one another. We wanted users to eventually figure out how they were changing the features without being given any hints, so that the results still felt random or unknown. Below are two pictures of our setup at the time.
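Turning the stored answers into a picture is essentially a lookup: answer 1 picks a mouth, answer 2 picks a pair of eyes, and answer 3 picks an accessory. The Java sketch below restates that step as lookup tables; it is an illustration rather than the code we ran, `FeatureLookup` and `featuresFor` are hypothetical names, and the file names are copied from our Processing sketch. Because six answers share four accessory images, the table maps answers 1 and 2 to the same accessory, and likewise answers 3 and 4, matching the `switch` in our `draw()` function.

```java
// Hypothetical lookup-table version of the answer-to-feature step.
final class FeatureLookup {
    private FeatureLookup() {}

    static final String[] MOUTHS = {"mouth-1.png", "mouth-2.png", "mouth-3.png",
                                    "mouth-4.png", "mouth-5.png", "mouth-6.png"};
    static final String[] EYES = {"eyes-1.png", "eyes-2.png", "eyes-3.png",
                                  "eyes-4.png", "eyes-5.png", "eyes-6.png"};
    static final String[] ACCESSORIES = {"feature-2.png", "feature-3.png", "feature-4.png", "f5.png"};

    // Answer (0-5) to accessory index: 0 -> 0, 1-2 -> 1, 3-4 -> 2, 5 -> 3
    static final int[] ACCESSORY_FOR_ANSWER = {0, 1, 1, 2, 2, 3};

    /** Returns {mouth, eyes, accessory} image files for the three answers. */
    static String[] featuresFor(int[] answers) {
        return new String[] {
            MOUTHS[answers[0]],
            EYES[answers[1]],
            ACCESSORIES[ACCESSORY_FOR_ANSWER[answers[2]]]
        };
    }
}
```

A table keeps the mapping in one place, so adding a seventh mouth or another painting means editing data rather than another `case`.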

I believe the feedback we received during user testing was essential to whatever success we had in the final presentation. Key aspects of the interaction that we had neglected surfaced during user testing, and we learned a great deal from the experience. The first major issue was that users had difficulty reading the questions and answers in time to figure things out and answer the first question. They knew how to use the start button, but the potentiometer was much harder for them. To remedy this, my partner and I added a separate colored ellipse that moved over the corresponding answer as the user slid the potentiometer from left to right. We also printed clear directions on the title screen in addition to the start button, which made things much clearer for the user. Here, we learned that users are not always as smart as we think they are. I do not mean that disrespectfully; I mean that things are not always as intuitive as they seem. User testing exposed our own biases toward the project and showed that even though things may seem obvious to us, they are not always obvious to others. Another significant change we made as a result of user testing was the content of the questions. Even though people enjoyed our initial project, they voiced concerns about its purpose and were consistently confused about its message. The questions themselves were a particular source of confusion. Initially, my partner and I intended our questions to be funny and relatable to NYU Shanghai users while still measuring some aspect of the user's personality. Yet in making the questions comical and relatable, we made them largely unrelated to the art aesthetic and content we were working with.
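The moving highlight follows directly from the slider value: the sketch draws six markers at `height * 1/7` through `height * 6/7` and fills in the one matching the current answer, with a label that depends on the question. The Java sketch below restates that logic as a label table plus a position formula. It is an illustrative alternative to the six `if` blocks in our Processing code, not what we shipped; `AnswerDisplay`, `label`, and `markerY` are hypothetical names, and the labels are copied from our sketch.

```java
// Hypothetical table-driven version of the answer labels and highlight position.
final class AnswerDisplay {
    private AnswerDisplay() {}

    // LABELS[question][sliderPosition], copied from the final Processing sketch
    static final String[][] LABELS = {
        {"Irrelevant", "Overrated", "Ambiguous", "Intrigued", "Fascinated", "Pure Ecstasy!"},
        {"Boring", "Perplexed", "Touchy Feely", "Selfie Time!", "Mesmerizing", "In Love"},
        {"Never", "I'm No Picasso", "Sometimes Warhol", "Weekend Van Gogh", "Daily Monet", "I AM ART"},
    };

    /** Label drawn next to the highlighted marker. */
    static String label(int question, int answer) {
        return LABELS[question][answer];
    }

    /** Vertical position of marker `answer` (0-5): height * (answer + 1) / 7. */
    static int markerY(int answer, int screenHeight) {
        return screenHeight * (answer + 1) / 7;
    }
}
```

In Processing, one `ellipse(650, markerY(answer, height), 28, 28)` and one `text(label(questionIndex, answer), 0, 0)` call would then replace the six repeated blocks.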
We therefore changed all three questions to center on art, which was a change for the better. Another key feature we added after user testing was a black screen with a white outline of the Mona Lisa. This gave users a visual cue that something was going to happen to her after they answered their series of questions, and it made the final image more visually appealing once everything was drawn and presented in color. The last major piece of feedback was to program a “select button.” Some users assumed that the start button also worked as a select button and that they could record their answer before the timer ran out. This was not the case, and it confused them. There were two ways we could have implemented this. The first was to physically add and code another button, but that would have required fabricating a new cover for the console, which was not feasible given our time. We therefore took the other route and tried to make the start button perform both operations. On the Arduino side, this was relatively easy: all we had to do was add another conditional and a boolean variable so that the button's value would be read only once while pressed, preventing questions from being skipped. Yet, for reasons we still have not figured out, the serial communication with Processing stopped working. In fact, we ran into a number of other issues once we incorporated this function into our code: questions were not being read, the button only worked part of the time, and for some reason a fourth value was being read from Processing, which made things even more difficult. Every time we solved one issue, another three or four would arise, so we ultimately made the difficult decision not to include a select button.
That said, things ended up working in our favor during the final presentation: our directions were clear enough that users did not try to use the start button for both functions.
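Reading the button "only once while pressed," as described above, is usually called edge detection: instead of acting on the button's level, the code acts only when the reading changes from released to pressed, so a held button counts as a single press. Below is a minimal Java sketch of that idea under our wiring (`INPUT_PULLUP`, so 1 means released and 0 means pressed). `ButtonEdge` is a hypothetical class, not the code we tried, and it does not attempt to explain the serial problems we ran into. A physical button may also need debouncing, which this sketch leaves out.

```java
// Hypothetical edge detector: a press is reported once, however long the button is held.
final class ButtonEdge {
    private int previous = 1; // INPUT_PULLUP: 1 = released, 0 = pressed

    /** Feed each new reading; returns true only on a released-to-pressed transition. */
    boolean pressed(int reading) {
        boolean edge = previous == 1 && reading == 0;
        previous = reading;
        return edge;
    }
}
```

Fed the readings `1, 0, 0, 0, 1, 0`, the detector reports exactly two presses: one when the button first goes down and one after it is released and pressed again.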

Below is a visual from our first user testing session, as well as from our initial presentation of the project.

In conclusion, the goals of our project were to create a stronger connection between the user and a piece of art, to have the user's personality reflected in the Mona Lisa painting they create, and to maintain an entertainment factor strong enough that users would want to try it multiple times. I believe these goals, as well as our outcome, which satisfied each of them to some extent, also reflect my definition of interaction: “a continued conversation between two or more persons or things that convert different forms of energy into the physical and virtual worlds.” My reasoning is threefold. First and foremost, serial communication ensured constant communication between the Arduino and Processing. In addition, the user had a consistent time limit to respond to each question and did so, reacting to each of the options presented as well as to the final product they created. Finally, the user physically moved the potentiometer and pressed the start button to create a virtual image of the Mona Lisa. Looking back at my previous definition of interaction, which centered on the “open mouth effect,” this project may not have satisfied it, but I believe that interaction goes beyond that phenomenon. In the end, our final project presentation was very successful. Users knew how to interact with the project from the directions provided, they enjoyed the question-and-answer content, and they were left wanting to try again to see the different combinations they could create. Still, there are things we would change about the project if we had more time. The major change would be to increase the number of options users could choose from and to expand to paintings other than the Mona Lisa.
If we included other famous paintings and features, users could have a much longer, more entertaining session. That said, I would say our biggest failure was that users did not necessarily receive the overall message about art that we were trying to convey. Some users did not identify with the responses offered for each question, and after testing the project they had to ask us about our intended message and the features being presented to them. Though frustrated with this result, I believe we learned something critical from it: we need more effective methods of conveying our project's purpose, and we need to think more about the general user, who may not have the knowledge or understanding needed to grasp even some of the basic ideas we were trying to convey. Even so, my partner and I accomplished far more than we originally thought possible with this project, and I am extremely happy to have worked with her. Producing it not only expanded my knowledge of coding, but also taught me many important lessons. The first is that no matter how much work you put into something, it can, and sometimes will, fail to serve its purpose during user testing. At that point, however painful it may be, successful creators must truly listen and stay open to the feedback and constructive criticism they receive. I now understand why Interaction Lab is more than its title. I truly believe that the hardest part of Interaction Lab is not creating something on a screen or building a circuit, but getting a person to successfully interact with what is on that screen or in that circuit. This final project taught me a lot about the human mind, what we find entertaining, and how we interact with our surrounding environment.
I truly believe that, although I am not majoring in Interactive Media Arts, the tools I learned about in this class will help me in the future, and I do not regret for a moment taking this class and putting all of my effort into each of the projects I created for it. To conclude this documentation, I would like to explain the overall significance of my final project. Its significance lies in the interaction it creates and the lesson it provides. Our project connects users to pieces of art that they otherwise may not have paid as much attention to (e.g., the Mona Lisa). It not only creates a fun, engaging experience with art, but also offers a critique of our relationship with art by presenting a form of interaction that has not been widely implemented. I believe our project could be the foundation for much greater projects, and I hope that one day someone will expand upon this aspect of interactive art.

Below are pictures and videos of our final product as well as the code that we created from scratch.

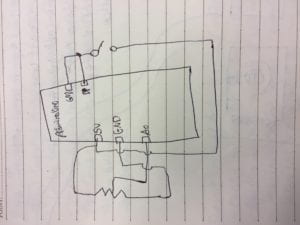

Circuit Schematic

Processing Code

```processing
// FILE NAMES
// Mouths:      "mouth-1.png" to "mouth-6.png"
// Eyes:        "eyes-1.png" to "eyes-6.png"
// Accessories: "feature-2.png", "feature-3.png", "feature-4.png", "f5.png"

// SERIAL COMMUNICATION
import processing.serial.*;

Serial myPort;

String[] Mouths = {"mouth-1.png", "mouth-2.png", "mouth-3.png", "mouth-4.png", "mouth-5.png", "mouth-6.png"};
String[] Eyes = {"eyes-1.png", "eyes-2.png", "eyes-3.png", "eyes-4.png", "eyes-5.png", "eyes-6.png"};
String[] Features = {"feature-2.png", "feature-3.png", "feature-4.png", "f5.png"};

// QUESTIONS
String[] questions = {"How do you feel when you see the Mona Lisa?", "What was your last experience at a museum?", "How often do you see yourself as an artist?"};
int questionIndex = 0;
boolean intro = true;
boolean oneTime = false;

// ANSWERS
int[] answers = new int[questions.length];
int answer = 0;       // latest slider position (0-5) received from the Arduino
String wholemessage;
int startbutton;      // 0 while the start button is pressed (INPUT_PULLUP)

// TIMER VARIABLES (seconds)
int startTime = 0;
int timerLength = 12;

// QUESTIONNAIRE SWITCHES
boolean askingQuestions = false;
boolean creatingPicture = false;

// Mona Lisa backgrounds
PImage mona_start;
PImage mona_question;
PImage mona_final;

PImage[] mouth = new PImage[Mouths.length];
PImage[] eyes = new PImage[Eyes.length];
PImage[] features = new PImage[Features.length];

PFont font;

// values[0] = slider answer, values[1] = start button.
// The button value starts at 1 ("released") so the questionnaire does not
// begin before the first serial message arrives.
int[] values = {0, 1};

// Phases for the wobble animation of each feature
float alpha1 = 0;
float alpha2 = 0;
float alpha3 = 0;

void setup() {
  fullScreen();
  //size(1440, 900);

  mona_start = loadImage("mona_q.png");
  mona_question = loadImage("mona_qq.png");
  mona_final = loadImage("mona.png");

  // The sketch uses port index [1]; check the printed list, since the
  // Arduino's index can differ between machines.
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[1], 9600);

  for (int i = 0; i < Mouths.length; i++) {
    mouth[i] = loadImage(Mouths[i]);
  }
  for (int i = 0; i < Eyes.length; i++) {
    eyes[i] = loadImage(Eyes[i]);
  }
  for (int i = 0; i < Features.length; i++) {
    features[i] = loadImage(Features[i]);
  }

  printArray(PFont.list());
  font = createFont("PermanentMarker-Regular.ttf", 40);
  textFont(font);
}

void draw() {
  // Title screen with directions
  if (intro == true) {
    background(0, 0, 0);
    imageMode(CENTER);
    image(mona_question, width/2, height/2, width, height);
    pushMatrix();
    translate(640, 130);
    rotate(HALF_PI);
    textSize(60);
    text("When the mind meets the eye", -90, -50);
    textSize(30);
    text("1. Press START", -70, 70);
    text("2. Use Slider to Answer Questions", -70, 130);
    text("3. Enjoy Your Creation!", -70, 190);
    popMatrix();
  }

  updateSerial();

  // Start button pressed: begin the questionnaire
  if (startbutton == 0 && !askingQuestions && creatingPicture == false) {
    startTimer();
    askingQuestions = true;
    intro = false;
    imageMode(CENTER);
    image(mona_start, width/2, height/2, width, height);
  }

  if (askingQuestions == true) {
    /* Iterate through the questions array: show questions[questionIndex] and
       start the timer. When the timer runs out, store the current slider answer
       in answers[] (same length as questions[]), then move on to the next
       question and repeat. */
    imageMode(CENTER);
    image(mona_question, width/2, height/2, width, height);

    pushMatrix();
    translate(width/2, height/2);
    rotate(HALF_PI);
    textSize(36);
    fill(255);
    text(questions[questionIndex], 0, 0);
    textAlign(CENTER);
    printArray(answers);
    popMatrix();

    // If the timer is up, record the answer, move to the next question, restart the timer
    if (millis()/1000 - startTime > timerLength) {
      answers[questionIndex] = answer;
      questionIndex += 1;
      startTimer();
    }

    drawTime();

    // Six answer markers, one per slider position
    ellipse(650, height * 1/7, 30, 30);
    ellipse(650, height * 2/7, 30, 30);
    ellipse(650, height * 3/7, 30, 30);
    ellipse(650, height * 4/7, 30, 30);
    ellipse(650, height * 5/7, 30, 30);
    ellipse(650, height * 6/7, 30, 30);

    textAlign(CENTER);
    textSize(30);

    // Highlight the selected marker and show its label for the current question
    if (values[0] == 0) {
      fill(255, 249, 121);
      ellipse(650, height * 1/7, 28, 28);
      pushMatrix();
      translate(580, height * 1/7);
      rotate(HALF_PI);
      fill(255);
      if (questionIndex == 0) {
        text("Irrelevant", 0, 0);
      } else if (questionIndex == 1) {
        text("Boring", 0, 0);
      } else {
        text("Never", 0, 0);
      }
      popMatrix();
    }

    if (values[0] == 1) {
      fill(255, 249, 121);
      ellipse(650, height * 2/7, 28, 28);
      pushMatrix();
      translate(580, height * 2/7);
      rotate(HALF_PI);
      fill(255);
      if (questionIndex == 0) {
        text("Overrated", 0, 0);
      } else if (questionIndex == 1) {
        text("Perplexed", 0, 0);
      } else {
        text("I'm No Picasso", 0, 0);
      }
      popMatrix();
    }

    if (values[0] == 2) {
      fill(255, 249, 121);
      ellipse(650, height * 3/7, 28, 28);
      pushMatrix();
      translate(580, height * 3/7);
      rotate(HALF_PI);
      fill(255);
      if (questionIndex == 0) {
        text("Ambiguous", 0, 0);
      } else if (questionIndex == 1) {
        text("Touchy Feely", 0, 0);
      } else {
        text("Sometimes Warhol", 0, 0);
      }
      popMatrix();
    }

    if (values[0] == 3) {
      fill(255, 249, 121);
      ellipse(650, height * 4/7, 28, 28);
      pushMatrix();
      translate(580, height * 4/7);
      rotate(HALF_PI);
      fill(255);
      if (questionIndex == 0) {
        text("Intrigued", 0, 0);
      } else if (questionIndex == 1) {
        text("Selfie Time!", 0, 0);
      } else {
        text("Weekend Van Gogh", 0, 0);
      }
      popMatrix();
    }

    if (values[0] == 4) {
      fill(255, 249, 121);
      ellipse(650, height * 5/7, 28, 28);
      pushMatrix();
      translate(580, height * 5/7);
      rotate(HALF_PI);
      fill(255);
      if (questionIndex == 0) {
        text("Fascinated", 0, 0);
      } else if (questionIndex == 1) {
        text("Mesmerizing", 0, 0);
      } else {
        text("Daily Monet", 0, 0);
      }
      popMatrix();
    }

    if (values[0] == 5) {
      fill(255, 249, 121);
      ellipse(650, height * 6/7, 28, 28);
      pushMatrix();
      translate(580, height * 6/7);
      rotate(HALF_PI);
      fill(255);
      if (questionIndex == 0) {
        text("Pure Ecstasy!", 0, 0);
      } else if (questionIndex == 1) {
        text("In Love", 0, 0);
      } else {
        text("I AM ART", 0, 0);
      }
      popMatrix();
    }

    // Check whether the last question has been answered
    if (questionIndex == questions.length) {
      askingQuestions = false;
      creatingPicture = true;
      questionIndex = 0;
    }
  }

  // AFTER THE QUESTIONS: draw the features selected by each answer
  if (creatingPicture == true) {
    imageMode(CENTER);
    image(mona_final, width/2, height/2, width, height);

    // Mouth (question 1), shifted vertically by a sine-based offset each frame
    pushMatrix();
    float yOffset1 = sin(alpha1) * 5;
    alpha1 += 1.6;
    translate(0, yOffset1);
    switch (answers[0]) {
    case 0:
      image(mouth[0], 1010, 375, 67, 130);
      break;
    case 1:
      image(mouth[1], 1010, 375, 67, 130);
      break;
    case 2:
      image(mouth[2], 1000, 375, 67, 130);
      break;
    case 3:
      image(mouth[3], 1020, 405, 67, 130);
      break;
    case 4:
      image(mouth[4], 1000, 375, 66, 150);
      break;
    case 5:
      image(mouth[5], 1000, 375, 90, 150);
      break;
    default:
      break;
    }
    popMatrix();

    // Eyes (question 2)
    pushMatrix();
    float yOffset2 = sin(alpha2) * 7;
    alpha2 += 1.2;
    translate(0, yOffset2);
    switch (answers[1]) {
    case 0:
      image(eyes[0], 1130, 370, 85, 200);
      break;
    case 1:
      image(eyes[1], 1130, 370, 100, 250);
      break;
    case 2:
      image(eyes[2], 1130, 370, 108, 230);
      break;
    case 3:
      image(eyes[3], 1135, 370, 161, 230);
      break;
    case 4:
      image(eyes[4], 1135, 370, 97, 230);
      break;
    case 5:
      image(eyes[5], 1130, 370, 113, 200);
      break;
    default:
      break;
    }
    popMatrix();

    // Accessory (question 3); six answers share four images
    pushMatrix();
    float yOffset3 = sin(alpha3) * 6;
    alpha3 += 1;
    translate(0, yOffset3);
    switch (answers[2]) {
    case 0:
      image(features[0], 1197, 217, 119, 85);
      break;
    case 1:
    case 2:
      image(features[1], 321, 500, 280, 280);
      break;
    case 3:
    case 4:
      image(features[2], 345, 518, 310, 281);
      break;
    case 5:
      image(features[3], 1282, 287, 216, 280);
      break;
    default:
      break;
    }
    popMatrix();
  }

  // Reset when the start button is pressed
  if (startbutton == 0) {
    startTimer();
    intro = true;
    creatingPicture = false;
  }
}

void updateSerial() {
  // Read each complete line the Arduino has sent, e.g. "3,1"
  while (myPort.available() > 0) {
    wholemessage = myPort.readStringUntil(10); // 10 is the ASCII code for new-line
    if (wholemessage != null) {
      int[] parsed = int(split(trim(wholemessage), ','));
      // Ignore partial lines (such as the first one after the port opens)
      if (parsed.length == 2) {
        values = parsed;
      }
    }
  }
  startbutton = values[1];
  answer = values[0];
  printArray(values);
}

void drawTime() {
  // Vertical bar that shrinks as the question timer runs out
  fill(255);
  noStroke();
  rect(width/6, 0, width/30, height * ((startTime + timerLength) - millis()/1000) / timerLength);
}

void startTimer() {
  startTime = millis()/1000;
}
```
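In the Processing code above, `startTime` and the timer check both use `millis()/1000`, so the countdown works in whole seconds. Because the check is `> timerLength`, a question expires once the whole-second count has advanced by 13, which is somewhere between 12 and 13 seconds of real time, and the time bar shrinks in one-second steps. A millisecond-based timer, sketched below in Java with the hypothetical class `QuestionTimer`, avoids that rounding and returns a smooth fraction that could drive the bar; the 12-second length mirrors `timerLength`.

```java
// Hypothetical millisecond-based version of the per-question timer.
final class QuestionTimer {
    private final long lengthMs;
    private long startMs;

    QuestionTimer(long lengthMs, long nowMs) {
        this.lengthMs = lengthMs;
        this.startMs = nowMs;
    }

    /** Start timing the next question. */
    void restart(long nowMs) {
        startMs = nowMs;
    }

    /** True once the full question length has elapsed. */
    boolean expired(long nowMs) {
        return nowMs - startMs >= lengthMs;
    }

    /** Fraction of time left, from 1.0 (just started) down to 0.0 (expired). */
    double remaining(long nowMs) {
        double left = 1.0 - (double) (nowMs - startMs) / lengthMs;
        return Math.max(0.0, Math.min(1.0, left));
    }
}
```

In Processing, `nowMs` would be `millis()`, and the bar height would become `height * remaining(millis())`.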

Arduino Code

```cpp
int sensor1 = A0;   // slider potentiometer
int button = 9;     // start button
int startbuttonvalue = 0;

void setup() {
  Serial.begin(9600);
  // INPUT_PULLUP enables the internal pull-up resistor, so no breadboard
  // resistor is needed; the pin reads 1 when released and 0 when pressed
  pinMode(button, INPUT_PULLUP);
}

void loop() {
  // Scale the slider reading (0-1023) to an answer index (0-5).
  // map() uses integer math, so 5 is only returned for a reading of exactly 1023.
  int sensor1Value = map(analogRead(sensor1), 0, 1023, 0, 5);
  startbuttonvalue = digitalRead(button);

  // Send "answer,button" followed by a newline, e.g. "3,1"
  Serial.print(sensor1Value);
  Serial.print(",");
  Serial.print(startbuttonvalue);
  Serial.println();
  delay(20);
}
```
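Each pass through `loop()` sends one line of the form `answer,button` (for example `3,1`), and `updateSerial()` splits it on the comma. Processing's `int()` conversion turns text it cannot parse into 0 instead of failing, so a malformed line can quietly become a valid-looking value. A stricter parser can make odd input visible while debugging, such as the unexpected extra value we saw when adding a select button, although this sketch is not a diagnosis of that bug. The Java version below (hypothetical `SerialMessage.parse`) accepts only two in-range integers and returns `null` for anything else.

```java
// Hypothetical strict parser for the "answer,button" lines sent by the Arduino.
final class SerialMessage {
    private SerialMessage() {}

    /**
     * Parses a line such as "3,1" into {answer, button}.
     * Returns null unless the line holds exactly two integers,
     * with the answer in 0-5 and the button reading 0 or 1.
     */
    static int[] parse(String line) {
        if (line == null) return null;
        String[] parts = line.trim().split(",");
        if (parts.length != 2) return null;
        try {
            int answer = Integer.parseInt(parts[0].trim());
            int button = Integer.parseInt(parts[1].trim());
            if (answer < 0 || answer > 5 || (button != 0 && button != 1)) return null;
            return new int[] {answer, button};
        } catch (NumberFormatException e) {
            return null; // e.g. an empty field from a partial line
        }
    }
}
```

Rejected lines can be printed instead of silently stored, which shows exactly what arrived over the port.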