Part A

Step 1: Plan

The Braitenberg vehicle I would like to model my KittenBot after is Insecure, the wall follower. In brief, this robot roams freely until it hits a wall or obstacle, at which point it turns away and roams again until it encounters another obstacle, and so on. I chose this vehicle because I find it really interesting and it has noteworthy real-life applications, such as the Roomba automated vacuum cleaner. I would need a better understanding of how to implement the turtle motor system and program it in Python, but I’m excited to develop my skills and try it out. The first thing I would do is assemble the motors and wire them together. Then I would research how to code the behavior in Python, which would take a lot of trial and error, and I would also have to run several tests and flash several programs onto the KittenBot.

Step 2: Program

Our code can be found on GitHub:

https://github.com/bishchand/BIRS-Lab4/blob/master/InsecureRobot.py

To mimic the behavior of the “Insecure” Braitenberg vehicle as closely as possible, we programmed the robot according to the following rules. The program ran in a continuous while loop, because the robot’s purpose was to keep moving and exploring the environment indefinitely. The robot would move forward in one direction until the distance sensor detected an object 30 mm ahead in its path. Once an object was detected 30 mm away, two events followed: first, the motors stopped for a few seconds, and then the servos controlling the robot’s direction turned it by 90 degrees.
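The rules above can be sketched in Python roughly as follows. This is not the code from our repository; it is a minimal, hardware-agnostic sketch. `SimulatedRobot`, `read_distance_mm`, `drive_forward`, `stop_motors`, `turn`, and the 2-second `PAUSE_S` are hypothetical stand-ins, because the KittenBot's real motor and sensor calls are not shown here. Only the 30 mm threshold and the 90-degree turn come from the description, and treating exactly 30 mm as "obstacle" is an assumption.

```python
import time
from dataclasses import dataclass, field

OBSTACLE_THRESHOLD_MM = 30  # from the write-up: react to objects 30 mm ahead
TURN_ANGLE_DEG = 90         # from the write-up: turn by 90 degrees
PAUSE_S = 2.0               # hypothetical value for "a few seconds"


def choose_action(distance_mm: float, threshold_mm: float = OBSTACLE_THRESHOLD_MM) -> str:
    """Return 'turn' when an obstacle is at or within the threshold, else 'forward'."""
    return "turn" if distance_mm <= threshold_mm else "forward"


@dataclass
class SimulatedRobot:
    """Hypothetical stand-in for the KittenBot: records commands instead of driving motors."""
    readings: list                            # distances (mm) the fake sensor reports, in order
    log: list = field(default_factory=list)   # commands issued so far

    def read_distance_mm(self) -> float:
        return self.readings.pop(0)

    def drive_forward(self) -> None:
        self.log.append("forward")

    def stop_motors(self) -> None:
        self.log.append("stop")

    def turn(self, degrees: int) -> None:
        self.log.append(f"turn {degrees}")


def run(robot, max_steps=None, pause_s: float = PAUSE_S, sleep=time.sleep) -> None:
    """Insecure-style loop: roam forward; on a nearby obstacle, stop, pause, then turn."""
    steps = 0
    # max_steps=None loops forever, as on the real robot; a number bounds it for testing
    while max_steps is None or steps < max_steps:
        if choose_action(robot.read_distance_mm()) == "turn":
            robot.stop_motors()
            sleep(pause_s)              # the pause used by the first version
            robot.turn(TURN_ANGLE_DEG)
        else:
            robot.drive_forward()
        steps += 1


if __name__ == "__main__":
    bot = SimulatedRobot(readings=[120, 80, 25, 200])
    run(bot, max_steps=4, sleep=lambda s: None)  # skip real sleeping in the demo
    print(bot.log)  # ['forward', 'forward', 'stop', 'turn 90', 'forward']
```

Passing the robot and the `sleep` function in as parameters keeps the decision logic testable on a laptop; on the actual robot, a wrapper around the real sensor and motor calls would take the place of `SimulatedRobot`.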

Step 3: Document

During this lab session, we set out to complete one task: programming our KittenBot to mimic the behavior of the Braitenberg vehicle Insecure. We met our objective, and the end result was a robot that could explore the environment while accurately detecting and circumventing any obstacles in its path.

Below is a video of our robot navigating its way around the classroom.

Part B

Step 4: Draw

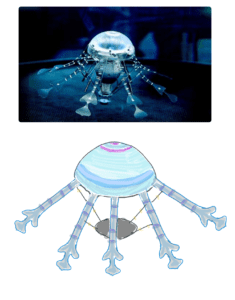

Step 5: Analyze

My robot’s depth perception and its tendency to steer away from obstacles mimic a biological trait that is present in all animals, albeit to varying degrees. That trait is instinctive behavior, or more specifically, fear of harm. The robot autonomously keeps itself from crashing into objects in its path, which could damage it. In the same way, animals have an instinct to protect themselves from harm; you would not normally see healthy living beings intentionally crashing into obstacles or putting themselves in harm’s way.

Step 6: Remix

The first successful iteration of my robot did what it was supposed to do, but its movements did not look realistic, like those of an actual biological organism. The robot would move toward an obstacle and then almost crash into it. As soon as it encountered the obstacle, it would first stop, evaluate its surroundings, and then make a turn, whereas real living beings turn away almost automatically. So we removed the delays, which made its turns and movements much smoother and seemingly more intuitive.
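A delay-free version can be sketched as below, assuming a differential-drive robot where each wheel takes a signed speed. `wheel_speeds`, `reactive_trace`, the ±100 speed scale, and the spin-in-place turn are all hypothetical. This sketch also swaps the fixed 90-degree turn for "keep turning until the path is clear", which may differ from what our code actually does. Each loop iteration maps the latest sensor reading straight to wheel commands, with no stop or sleep, so the robot starts turning on the same iteration it sees the obstacle.

```python
def wheel_speeds(distance_mm: float, threshold_mm: float = 30, speed: int = 100) -> tuple:
    """Map one sensor reading directly to (left, right) wheel speeds, with no pauses.

    Hypothetical convention: positive = forward, negative = backward.
    """
    if distance_mm <= threshold_mm:
        return (speed, -speed)  # obstacle close: spin right in place until the path is clear
    return (speed, speed)       # path clear: drive straight


def reactive_trace(readings, threshold_mm: float = 30, speed: int = 100) -> list:
    """Commands a delay-free loop would issue for a sequence of readings, one per iteration."""
    return [wheel_speeds(d, threshold_mm, speed) for d in readings]


if __name__ == "__main__":
    print(reactive_trace([120, 40, 28, 22, 60]))
    # [(100, 100), (100, 100), (100, -100), (100, -100), (100, 100)]
```

Because nothing blocks, the turn ends as soon as a reading exceeds the threshold, which is what makes the motion look continuous rather than stop-and-go.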

I also have conceptual ideas that are currently beyond my capacity as a programmer. It would be interesting to add a tracking element to the robot to replicate social behaviors, such as a child’s instinct to follow its mother. Using infrared sensors and emitters, we could set up a wireless beacon for the robot to find. As soon as the robot detected the beacon’s infrared signal within range, it would move toward the beacon.
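The beacon idea could look roughly like the sketch below, assuming two hypothetical IR receivers on the left and right front of the robot that report signal strength (larger is stronger). `beacon_speeds`, `detect_threshold`, `base_speed`, and `gain` are illustrative names and values, not part of any KittenBot API. When neither receiver sees the beacon, the robot keeps roaming straight; otherwise it slows the wheel on the beacon's side in proportion to the difference in signal strength, so it curves toward the stronger signal.

```python
def beacon_speeds(left_ir: float, right_ir: float,
                  detect_threshold: float = 10.0, base_speed: float = 100.0,
                  gain: float = 1.0) -> tuple:
    """Return (left, right) wheel speeds that steer toward a hypothetical IR beacon.

    left_ir / right_ir: signal strength from two hypothetical IR receivers
    mounted on the left and right front of the robot (larger = stronger).
    """
    if max(left_ir, right_ir) < detect_threshold:
        return (base_speed, base_speed)  # beacon not in range: keep roaming straight
    turn = gain * (right_ir - left_ir)   # positive when the beacon is to the right
    # Clamp both wheels to [-base_speed, base_speed]; the wheel on the beacon's side
    # slows down, so the robot curves toward the stronger signal.
    left = max(-base_speed, min(base_speed, base_speed + turn))
    right = max(-base_speed, min(base_speed, base_speed - turn))
    return (left, right)


if __name__ == "__main__":
    print(beacon_speeds(5, 3))    # (100.0, 100.0)  no beacon detected
    print(beacon_speeds(20, 50))  # (100.0, 70.0)   beacon to the right: veer right
    print(beacon_speeds(50, 20))  # (70.0, 100.0)   beacon to the left: veer left
```

On a real robot this would run inside the same continuous loop as the obstacle check, with obstacle avoidance taking priority so the robot does not crash while following the beacon.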

Step 7: Reflection

The two readings covered two aspects of animal behavior and how to implement them in robots. The Braitenberg Creatures reading focused more on the mechanical side: the rules that determine the robot’s movements and decisions. The Experiments in Synthetic Psychology reading, on the other hand, explored the cognitive functions and inherent motivations behind such animal behaviors, which helps us better understand what we are trying to accomplish with the rules and behaviors we want our robot to demonstrate.