Visual Recognition–OrCam

Shenshen Lei (sl6899)

Visual Recognition:

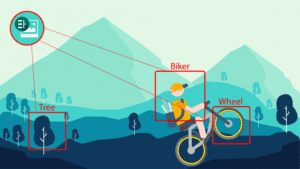

Visual Recognition is one of the most widely used AI technologies today. Its applications include facial recognition, image search, and color recognition. Visual Recognition also promotes the development of Zero UI. Our group found a typical and very useful product based on Visual Recognition technology: OrCam.

OrCam is a company that has developed a series of products to help people who are blind or visually impaired. The OrCam MyEye can recognize text and faces within a certain distance in front of the user and read the content aloud. It can also recognize some body language, such as “looking at the watch”, and tell the user what time it is. It improves users' reading efficiency and makes their lives more convenient.

However, we believe the product could be improved in several respects. First, text recognition is triggered by pointing at a word; otherwise the camera starts reading the whole page. Blind users may not know where the word is, so pointing-to-start sometimes does not work. Second, if the camera could track the user's pupils as they look at the text, users could get real-time feedback without pointing at the text with a finger. Third, the camera's recognition range is too limited. If the recognition distance were extended, the device could help in more situations, such as recognizing traffic lights or warning the user earlier about obstacles. Finally, OrCam could also redesign the outward appearance of the device.