Midterm Project Individual Reflection

“Plantalks”

By Lifan Yu (ly1164)

Instructor: Inmi Lee

- CONTEXT AND SIGNIFICANCE:

What is our “Plantalks”

We got our idea from my partner Xinran’s plants. She sometimes forgets to water the plants in her dorm, so we thought of creating a device that reminds her to water them.

Our “Plantalks” is a device that facilitates communication between humans and plants. It reminds people when to water their plants and indicates how much water the plants need. It detects the wetness of the soil and uses movements and sounds to help the plants “express” themselves: when they need watering, when the amount of water is enough, and when the soil is too wet.

The device starts working when a person stands within a certain distance of it. When the soil is too dry, the device moves the plant up and down as if it were anxiously waiting for someone to water it. Meanwhile, it plays a piece of audio saying “I’m so dry, I need help, I need water, help!” and blinks a red light. When the wetness is just fine, the device plays a song saying “It’s unbelievable, this is as good as it gets” and blinks a green light. When the soil is too wet, it plays a piece of audio saying “I’m drowning, no more water please!” and blinks a yellow light, and a piece of hand-shaped colored paper starts waving as if saying “No”.

This way, the device helps plants communicate effectively with people. People know when to water the plant and when to stop watering. The device makes a long-term interaction possible.

How my group project inspired me

My previous group project was Heal-o-matic 5000, a medical device that diagnoses people’s illnesses. People put their hands on a specially designed sensor screen and their faces are scanned for their ID. The device then diagnoses their illnesses by analyzing the data collected by the sensors, and the diagnosis appears on the screen. Meanwhile, the relevant medicine is dispensed.

This device mainly responds to the environment, and its level of interaction is relatively low. The person isn’t continuously interacting with the device. The person only sends out information for a short time before the machine responds, and then this round of communication ends.

I wondered if I could build a device that constantly communicates with the other “actor”. That is, it would constantly detect information from the other “actor”, and the other actor could also respond to the device’s movements, sounds, and so on. I also hoped that this type of communication could last for a long time.

The project I have researched & what inspired us

Reactive Sparks by Markus Lerner. This is an installation of seven double-sided vertical screens that currently stands in front of the OSRAM main office in Munich, Germany. When cars pass on the road in front of the screens, light-colored lines appear on the screens. Meanwhile, the orange waves on the screens rise as the number of passing cars increases.

This device displays the movements, speed and number of the passing cars. Through the changing lights, people can see the traffic conditions on the road from a distance. It collects a large amount of analog information and converts it into a simplified, more visual and artistic form.

When I saw this project, I wondered if I could create something like it. But I didn’t immediately draw inspiration from it when designing the midterm project. Xinran Fan and I initially thought of creating a device that automatically waters plants according to the wetness of their soil. After consulting with instructors (Inmi Lee and Marcela), we learned that because plants don’t talk, we could create a device that makes movements and sounds to help plants “express” themselves and so remind people to water them.

The project we researched is actually a reactive device. However, this type of reaction fits well with our new idea. Showing something that is not easily noticed, or not given much importance, in an exaggerated or artistic style is exactly what we need to help plants “talk”.

What is unique and/or significant about our project

Our project involves not only humans but also plants. It facilitates communication between humans and plants and presents the condition of the plants in an artistic and attractive way. People are not only interacting with a fun device; more importantly, the device makes interaction between people and their plants possible. Combining plants with an interactive device is the most unique aspect of our project.

Special value to its targeted audience

While it helps people remember to water their plants and so keeps the plants alive, it also enriches the experience of watering them by making it fun.

- FABRICATION AND PRODUCTION:

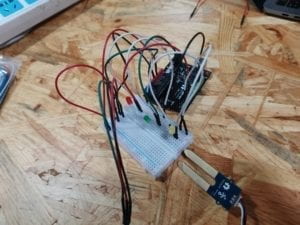

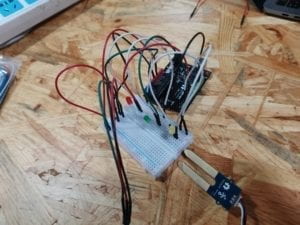

What our first circuit looked like:

Significant steps

3D printing

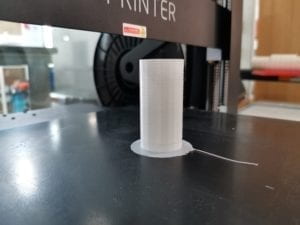

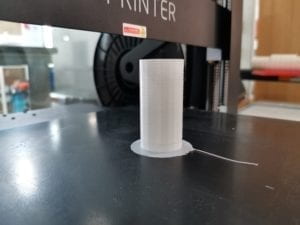

Servo motors are only able to turn from 0° to 180°, but we needed the part of our device that carries the plant to move up and down. To convert the rotation into up-and-down motion, we needed a gear wheel attached to our servo motor and a part that moves up and down when it meshes with the gear wheel.

The parts seem simple, but we failed several times when printing. The first time, the printer stopped extruding heated plastic.

The second time, the print went wrong while printing the support parts. We finally succeeded the third time.

The original flower pot was too heavy for our device to carry, so my partner Xinran Fan made a 3D model of a smaller flower pot. The first flower pot we printed broke soon after we took it off the printer. It seemed that the layers of material did not bond well with each other.

We printed again with the infill set to 99%. However, soon after the printing began, the model came loose from the platform and started to move. We switched to another printer, and this time I added a raft. Our model was finally printed successfully.

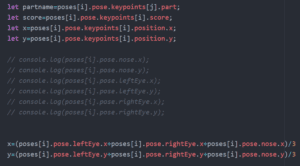

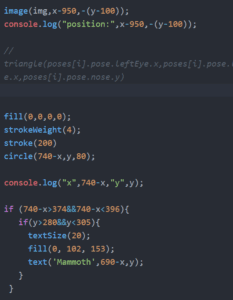

Coding

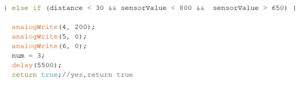

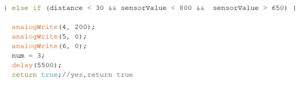

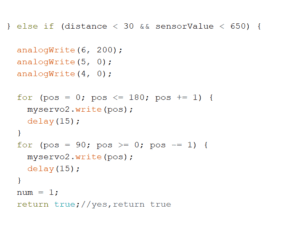

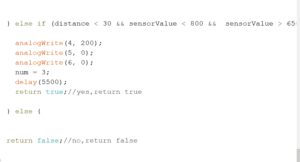

We used if, else if, and else statements to blink the lights. However, the red light indicating “too dry” didn’t stop blinking when the green light turned on. After testing repeatedly, we finally figured out that our code should also tell the other lights to stop blinking whenever one light is on.
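The fix described here can be sketched as follows. This is not our actual code (which is shown in the screenshots); it is a minimal illustration, with hypothetical names, of deciding all three LED outputs together so that a light from the previous state can never be left on.

```cpp
// Sketch only: SoilState, LedOutputs and ledsFor are hypothetical names.
// Written as plain C++ so the logic can be checked without a board.
enum class SoilState { TooDry, JustFine, TooWet };

struct LedOutputs {
    bool red;     // "too dry"
    bool green;   // "just fine"
    bool yellow;  // "too wet"
};

// Start with every LED off, then enable only the one for the current state.
LedOutputs ledsFor(SoilState state) {
    LedOutputs out{false, false, false};
    switch (state) {
        case SoilState::TooDry:   out.red = true;    break;
        case SoilState::JustFine: out.green = true;  break;
        case SoilState::TooWet:   out.yellow = true; break;
    }
    return out;
}
```

On the Arduino, each field would then be written to its pin on every pass through the loop (for example with `digitalWrite`), so the red pin is explicitly driven low as soon as the state becomes “just fine”.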

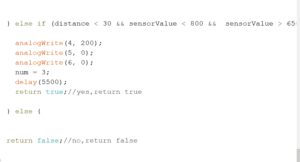

When adding the audio pieces, we found that a longer audio piece could not be played to the end. It always stopped playing after a few seconds. It took us a lot of effort to recall what the “delay” function does and to change its value.

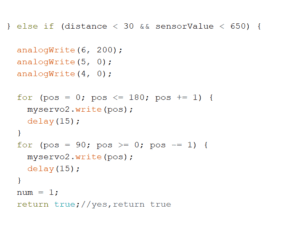

We thought of nesting the “if / else” statements for the lighting and motion inside another “if / else” structure based on the readings from the distance sensor. But instructors advised us to use && to combine the two conditions, for example “if (distance < 30 && sensorValue > 800)”.

It was very hard to choose the intervals for “too dry”, “just fine” and “too wet”. We tested many times to determine suitable numbers. When the interval for “just fine” was too small, the device went straight from the red light to the yellow light as we gradually poured water into the soil. After an arduous process, we finally found reasonably suitable intervals.
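A small sketch of why the two approaches are equivalent. The numbers 30 and 800 come from the condition quoted above; the function and constant names are hypothetical, and the sketch does not say which soil state the `sensorValue > 800` branch belongs to.

```cpp
// Sketch only: names are hypothetical; 30 and 800 are the values
// from the condition quoted in the text.
const int kMaxDistance = 30;       // person counts as "nearby" below this reading
const int kSensorThreshold = 800;  // moisture reading that selects this branch

// Nested version: the moisture check sits inside the distance check.
bool shouldRespondNested(int distance, int sensorValue) {
    if (distance < kMaxDistance) {
        if (sensorValue > kSensorThreshold) {
            return true;
        }
    }
    return false;
}

// Combined version: one condition joined with &&.
bool shouldRespond(int distance, int sensorValue) {
    return distance < kMaxDistance && sensorValue > kSensorThreshold;
}
```

Both functions give the same answer for every input. The combined form keeps each branch on one line, which makes it easier to see at a glance which distance and moisture ranges trigger which behavior.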
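The interval problem can be illustrated with a short sketch. Everything here is an assumption for illustration: the names are hypothetical, the threshold numbers in the usage note are not the ones we finally chose, and the sketch assumes a lower reading means drier soil (some moisture sensors work the other way round, in which case the comparisons flip).

```cpp
// Sketch only: hypothetical names; assumes a LOWER reading means DRIER soil.
enum class SoilState { TooDry, JustFine, TooWet };

// Readings below dryBelow are "too dry", readings above wetAbove are
// "too wet", and everything in between (inclusive) is "just fine".
SoilState classify(int reading, int dryBelow, int wetAbove) {
    if (reading < dryBelow) return SoilState::TooDry;
    if (reading > wetAbove) return SoilState::TooWet;
    return SoilState::JustFine;
}
```

With a narrow “just fine” band such as 500 to 520, a reading that jumps from 490 to 530 after one pour of water goes directly from “too dry” to “too wet” and the green light never shows. Widening the band, for example to 400 to 800, gives the reading room to land inside it.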

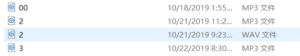

Adding audio files

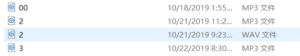

We added three recorded audio files to our project. These audio files are played while the LEDs blink. We learned to convert files from .mp3 format to .wav format (in the end, however, we still used the .mp3 format). We tried several types of speakers before finding ones that were easy to work with, and we figured out the code with the help of instructors.

User testing

At the time of user testing, we had not yet added the infrared distance sensor. An instructor advised us to add one so that the device does not run when people are far away from the plant. That is, the plants would not be calling for people “in vain”.

We also had not put descriptions near our LED lights to explain what the blinking of each light means. Several people suggested that we add attractive descriptions, so we added a sad face, a smiley face and a surprised face with the descriptions “too dry”, “just fine” and “too much water”.

These adaptations were effective because they helped users make sense of what exactly the plant is “conveying”.

What our device looked like without description:

- CONCLUSIONS

My goal was to create an interactive device that facilitates communication between humans and plants. This device interacts with plants as well as people.

My definition of interaction: interaction is the process in which two people, two devices, or a person and a device communicate with one another. This type of communication should include receiving information, processing the received information, and giving feedback, a response, or some kind of action based on the results of the processing stage. This idea is shaped by Crawford’s words: “interaction is a cyclic process in which two actors alternately listen, think, and speak”.

Also, to distinguish interaction from reaction, both of the interacting units should go through the three processes mentioned above. They are both actively engaged in a fairly continuous communication. Two-way feedback is indispensable.

In my project, the device, combined with the plants, can constantly send out information to people. People see the information and react by watering the plants. This kind of long-term, back-and-forth exchange of information aligns with my definition of interaction.

During the presentation, my audience interacted with our device by stepping toward it, seeing its reactions, and slowly pouring water onto the plant on our device. The different states of the plant were shown in a visual and auditory way. Audience members also put the moisture sensor into other plants provided on our table and saw the different information that different plants “wish to convey”.

Improvements we could make if we had more time

An instructor advised us to replace the simple, removable moisture sensor with something more sophisticated that stays in the soil permanently. If the plant is kept inside a room, the infrared distance sensor could be placed somewhere else that people are sure to walk past, because people may not always walk up to the plants.

What we’ve learned

We explored the possibilities of facilitating interaction between plants, devices and people, which is quite an innovative thing.

We kept facing difficulties in coding and 3D printing, and we learned to figure out solutions step by step. When things didn’t work out, we patiently started again. Reflecting on our failures and continuing to try is the right approach to success.