Link to concept presentation slides: https://drive.google.com/file/d/1jgxeo-knGx7nLrnWBmPZYLOIwz4mdJ8k/view?usp=sharing

Background

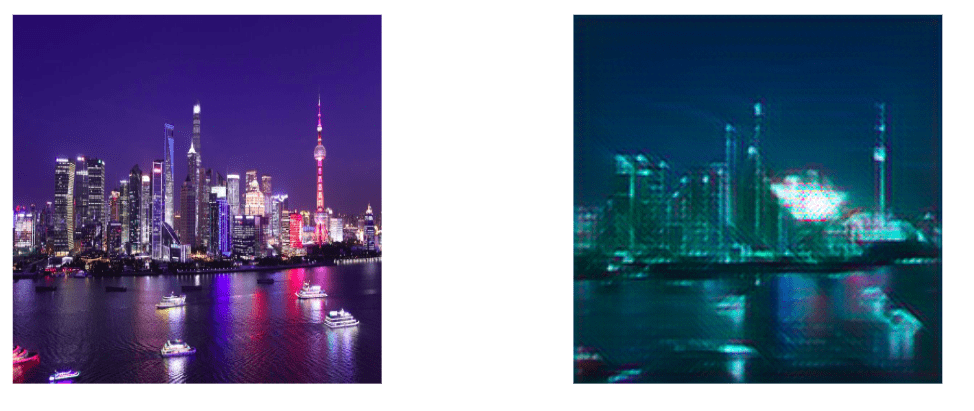

For my final project, I’ll explore abstract art with Deepdream in two mediums, video and print. I plan to create a series of images (printed in HD on posters so viewers can focus on the detail) and a zooming Deepdream video. I’ll make the original abstract designs with digital tools and then push them one step (or several) further into the abstract with the Deepdream algorithm.

I love creating digital art with tools such as Photoshop, Illustrator, datamoshing, and code such as p5. However, I’ve realized that these tools are limited in how abstract and deconstructed an image can get. I’ve also been interested in Deepdream styles and their artistic possibilities for a while, and I love the infinite ways Deepdream can transform images and video. In the first week, I presented Deepdream as my case study, using different styles of the Deep Dream Generator tool to transform my photos.

Case study slides: https://drive.google.com/file/d/1hXeGpJuCXjlElFr1kn5yZVW63Qcd8V5x/view

I would love to take this exploration to the next level by combining my interest in abstract, digital art with the tools we’ve learned in this course.

Motivation

I’m very interested in playing with how much control each digital tool gives me. Photoshop and Illustrator give me the most control over the output image. Coding lets me randomize certain aspects so that the program generates new designs on its own. Datamoshing simply takes in a few controls and “destroys” files on its own, generating glitchy images.

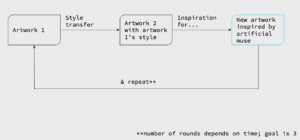

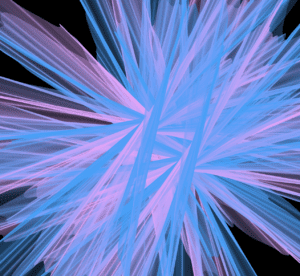

Created with p5 with randomization techniques and Perlin noise:

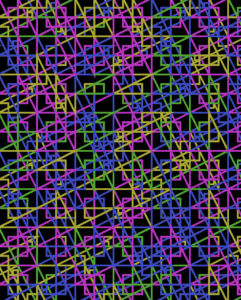

Created with datamoshing, filters and layout in p5:

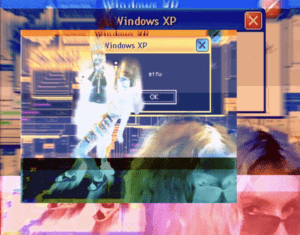

Abstract image created in Photoshop:

However, Deepdream takes away almost all of this predictability and control. You can set certain guidelines, such as the “layer” or style, the octaves (the number of times Deepdream goes over the image, each at a different scale), the iterations, and the strength, but it is impossible to predict what the algorithm will “see” and produce in the image. The results are completely unexpected and would be nearly impossible to achieve in digital editing tools.

References

I’m very inspired by this Deepdream exploration by artist Memo Akten, which captures the eeriness and infinity of Deepdream: https://vimeo.com/132462576

His article (https://medium.com/@memoakten/deepdream-is-blowing-my-mind-6a2c8669c698) explains in depth his fascination with Deepdream, something I share. As Akten writes, while the psychedelic aesthetic itself is mesmerizing, “the poetry behind the scenes is blowing my mind.” Akten details the process of Deepdream: the neural network recognizes various aspects of the reference image based on its previous training, and confirms them by choosing a group of neurons and modifying “the input image such that it amplifies the activity in that neuron group,” allowing it to “see” more of what it recognizes. Akten’s own interest comes from how we perceive these images. We recognize more in these Deepdream images, seeing dogs, birds, swirls, etc., which means viewers are doing the same thing as the neural network by reading deeper into the images. This allows us to work with the neural network to recognize and confirm the images it has found, creating a cycle that requires both AI technology and human interference: humans set the guidelines, direct the network to amplify its activity, and perceive the modified images. Essentially, Deepdream is a collaboration between AI and humans interfering with the system to see deeper into images and produce something together.
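
To make the amplification step concrete for myself, here is a minimal sketch in Python. It is a toy under stated assumptions, not Akten’s code or the real Deepdream implementation: the “image” is a 4x4 grid of gray values, and the “neuron group” is three hand-made patterns (vertical edge, horizontal edge, checkerboard) standing in for a layer of a trained network. The names `group_activity`, `activity_gradient`, `dream`, `iterations` and `strength` are my own hypothetical choices. The loop repeatedly nudges the pixels in the direction that makes the chosen neurons respond more strongly, which is the gradient ascent idea behind the quote above.

```python
def relu(v):
    """Neuron output: negative responses are clipped to zero."""
    return v if v > 0.0 else 0.0

def group_activity(image, neurons):
    """Total activity of the neuron group: sum of 0.5 * relu(w . x)^2."""
    total = 0.0
    for w in neurons:
        a = relu(sum(wi * xi for wi, xi in zip(w, image)))
        total += 0.5 * a * a
    return total

def activity_gradient(image, neurons):
    """Gradient of group_activity with respect to every pixel."""
    grad = [0.0] * len(image)
    for w in neurons:
        a = relu(sum(wi * xi for wi, xi in zip(w, image)))
        for i, wi in enumerate(w):
            grad[i] += a * wi
    return grad

def dream(image, neurons, iterations=20, strength=0.05):
    """Gradient ascent: change the image so the chosen neurons fire more."""
    image = list(image)
    for _ in range(iterations):
        grad = activity_gradient(image, neurons)
        # Normalize by the mean gradient size so `strength` sets the step.
        scale = sum(abs(g) for g in grad) / len(grad) + 1e-8
        image = [x + strength * g / scale for x, g in zip(image, grad)]
    return image

SIZE = 4
# Stand-in image: a left-to-right gray ramp, flattened row by row.
image = [c / (SIZE - 1) for r in range(SIZE) for c in range(SIZE)]
# Stand-in "layer": three hand-made patterns instead of trained neurons.
neurons = [
    [1.0 if c >= SIZE // 2 else -1.0 for r in range(SIZE) for c in range(SIZE)],
    [1.0 if r >= SIZE // 2 else -1.0 for r in range(SIZE) for c in range(SIZE)],
    [1.0 if (r + c) % 2 == 0 else -1.0 for r in range(SIZE) for c in range(SIZE)],
]

before = group_activity(image, neurons)
dreamed = dream(image, neurons)
after = group_activity(dreamed, neurons)
print(f"activity before: {before:.3f}, after: {after:.3f}")
```

In this toy, picking a different set of patterns plays the role of choosing a different “layer”, while `iterations` and `strength` correspond to the settings of the same name. Octaves (repeating the process at several image scales) are left out to keep the sketch short.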