For this week’s assignment, I decided to experiment further with DeepDream. The concept reminded me of the drug-induced trips depicted in contemporary media: films and shows such as Harold and Kumar and Rick and Morty include moments where characters see their world distorted by drugs. In those scenes the character is usually looking out at nature, so I chose a picture of a rainforest as my input image.

I expected the output to be interesting and to resemble what I had seen in popular media.

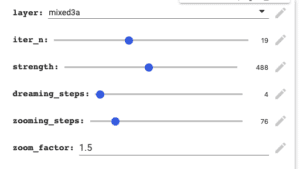

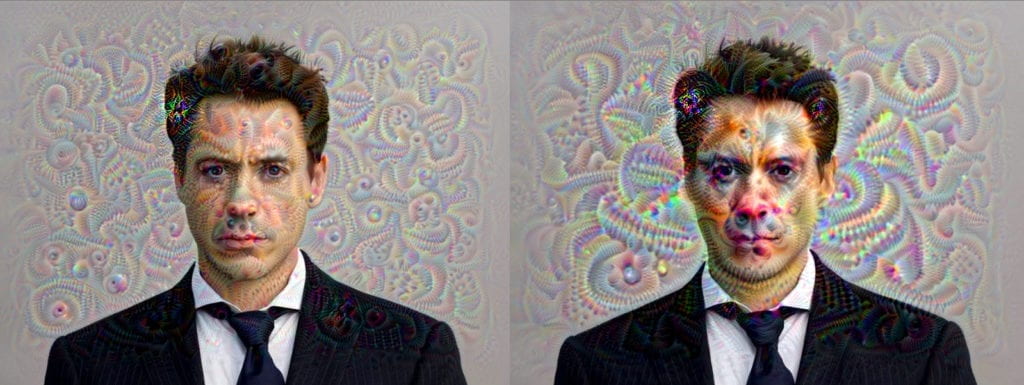

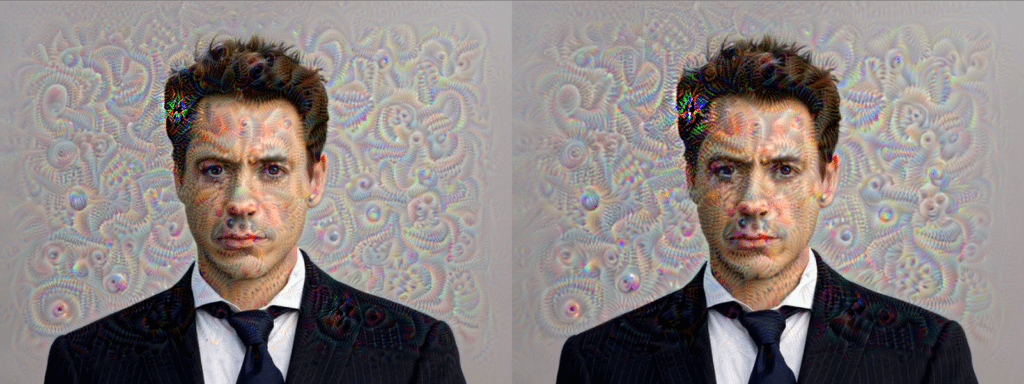

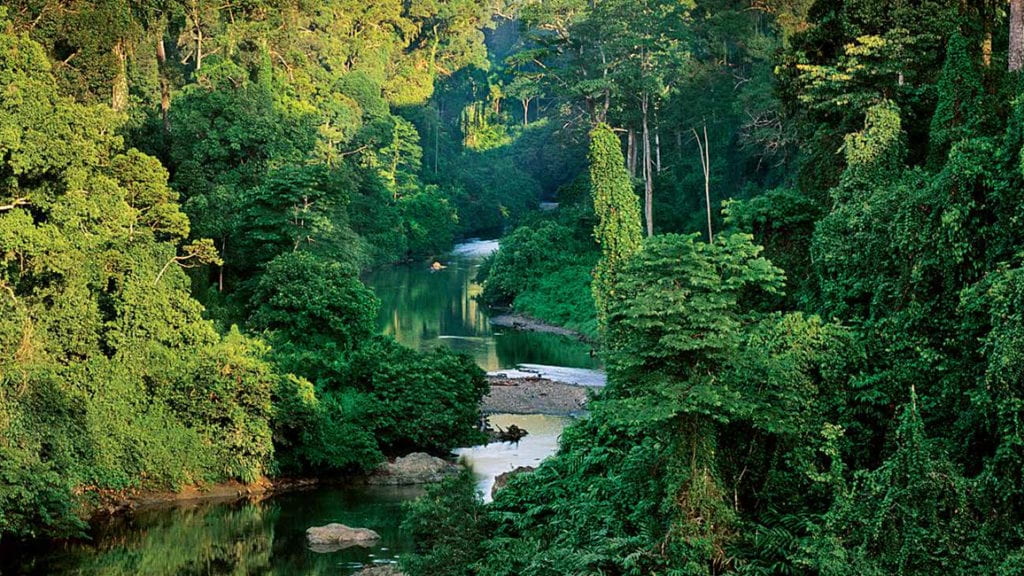

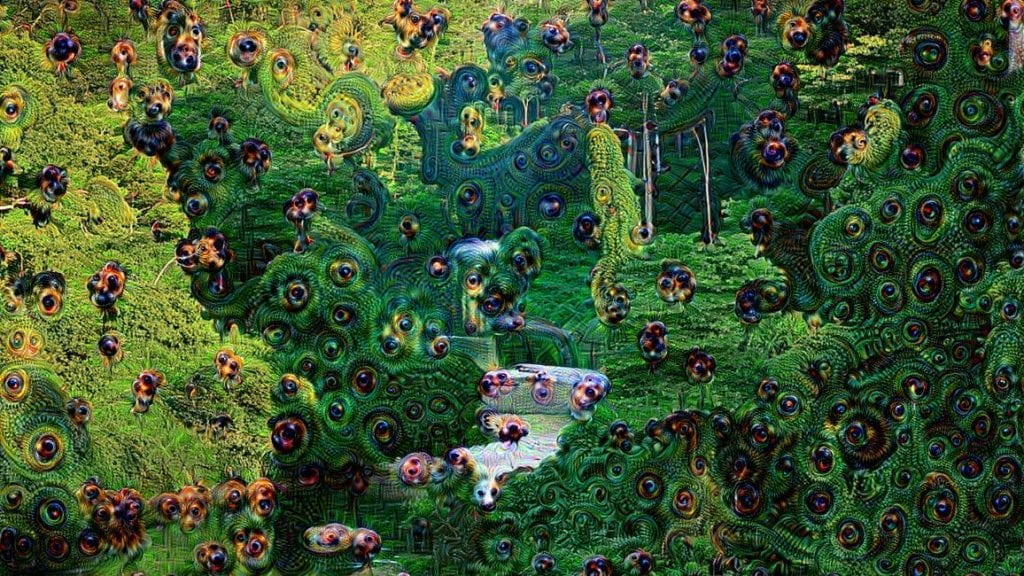

I started by changing only the layer and left the other variables unchanged. The results were very interesting: each layer seemed to produce a different style of the initial image. This image used the mixed3a layer.

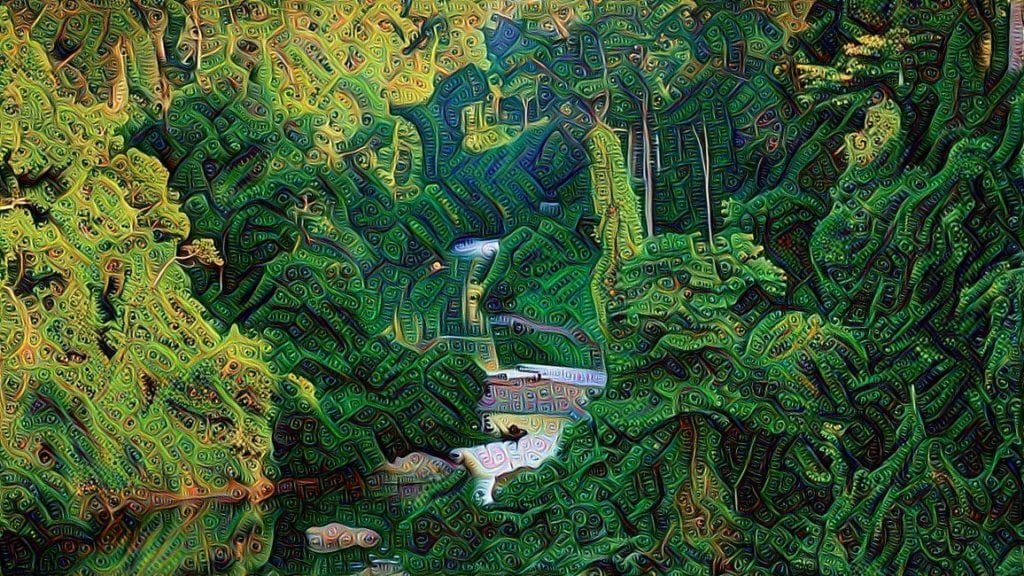

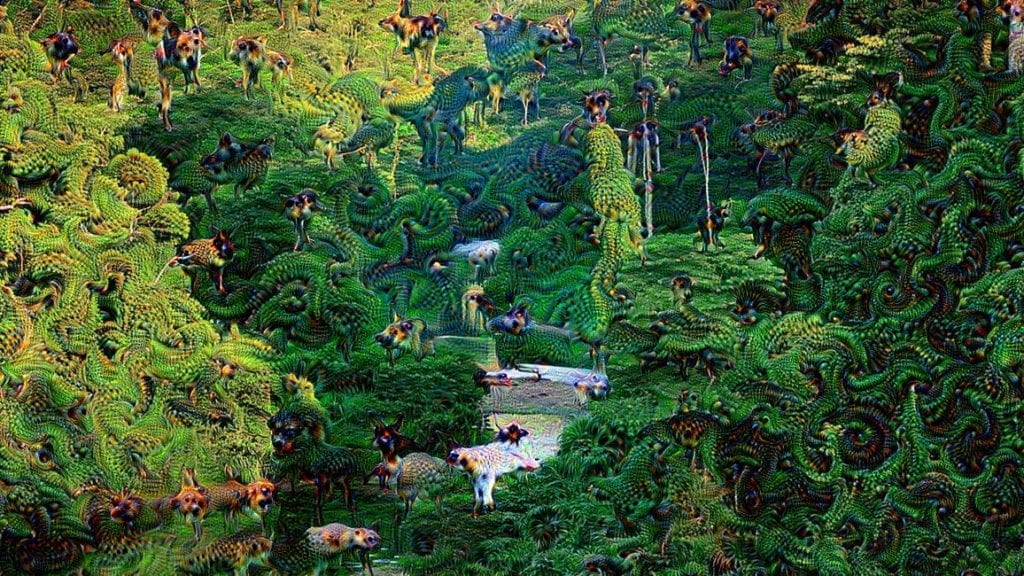

This image used the mixed3b layer.

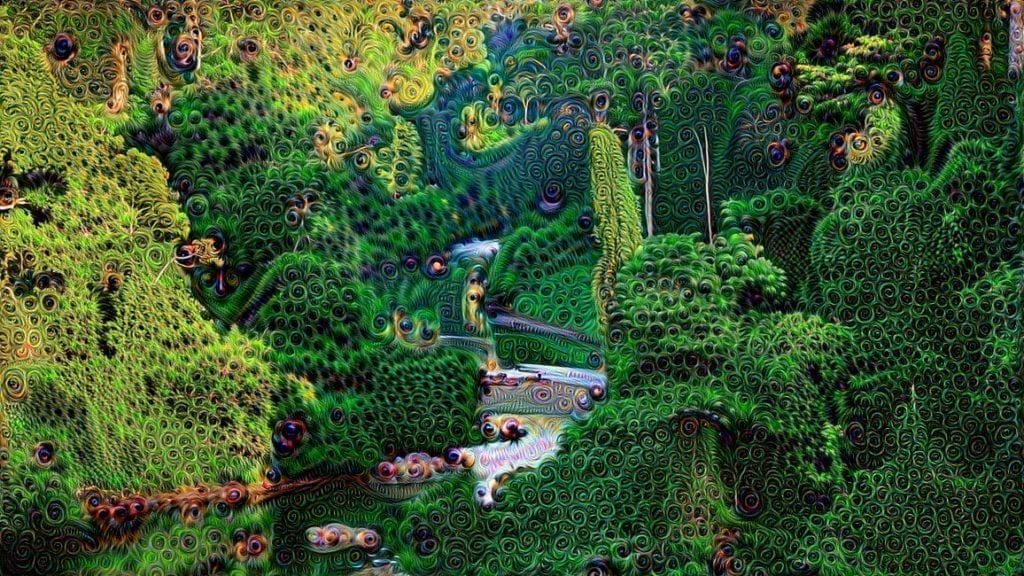

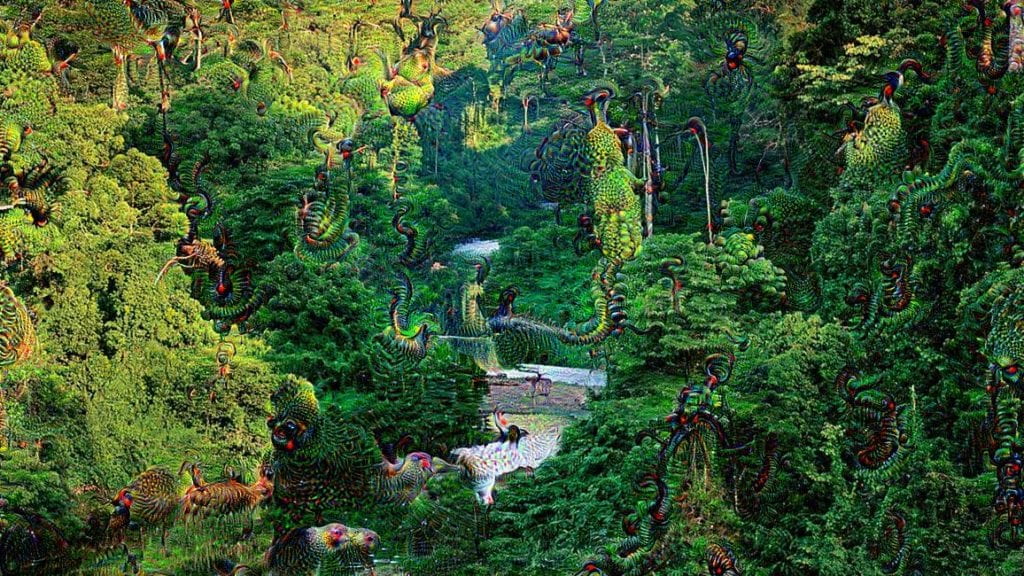

This image used the mixed4a layer.

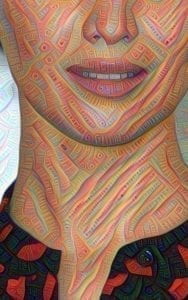

This image used the mixed4c layer.

This image used the mixed5a layer.

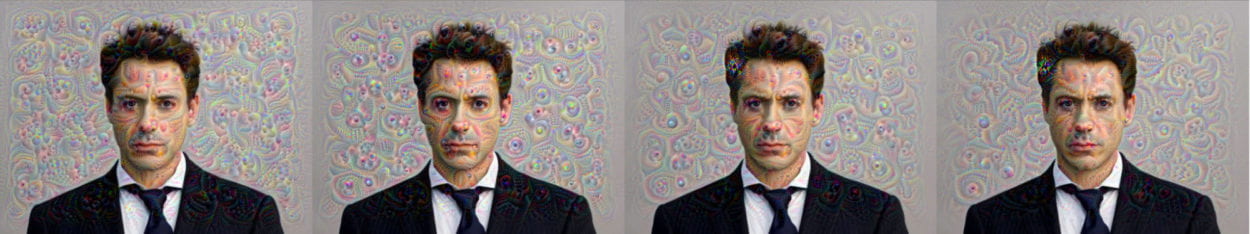

In terms of exploration, this experiment let me replicate the kinds of distortions seen in depictions of psychedelic trips. Each layer altered the image in a different way and gave it a distinct style. The layers seemed to pick the same regions of the image to alter, but comparing how each one altered them was interesting.
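The layer names above (mixed3a, mixed4c, mixed5a and so on) most likely come from the Inception (GoogLeNet) network that the DeepDream code runs on. DeepDream picks one layer and runs gradient *ascent* on the input image so that layer's activations grow, amplifying whatever patterns it responds to. One possible reason every layer edits the same textured regions while painting different detail into them is that the optimization loop stays the same and only the features being amplified change. The sketch below shows that loop in plain NumPy. It assumes a tiny hypothetical one-layer "network" (a bank of random 3x3 filters followed by ReLU) in place of a real Inception layer, and mean activation as the objective. Real implementations also add multi-scale octaves and random jitter, which are left out here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def layer_preactivations(img, kernels):
    """Hypothetical toy stand-in for a conv layer: correlate a grayscale
    image with a bank of k x k filters (no padding).
    Returns an array of shape (C, H-k+1, W-k+1)."""
    k = kernels.shape[-1]
    windows = sliding_window_view(img, (k, k))  # (H-k+1, W-k+1, k, k)
    return np.einsum("ijab,cab->cij", windows, kernels)


def objective_and_grad(img, kernels):
    """Mean ReLU activation of the toy layer and its gradient w.r.t. img."""
    pre = layer_preactivations(img, kernels)
    act = np.maximum(pre, 0.0)
    loss = act.mean()
    # d(mean ReLU)/d(pre): 1/N where a unit is active, 0 elsewhere.
    d_pre = (pre > 0) / act.size
    k = kernels.shape[-1]
    out_h, out_w = pre.shape[1:]
    grad = np.zeros_like(img)
    # Each filter tap (a, b) sends the upstream gradient back to the
    # image pixels it was applied to.
    for a in range(k):
        for b in range(k):
            grad[a:a + out_h, b:b + out_w] += np.einsum(
                "cij,c->ij", d_pre, kernels[:, a, b])
    return loss, grad


def dream(img, kernels, steps=20, step_size=0.01):
    """Gradient ascent on the input image: nudge pixels so the chosen layer
    fires more strongly. Returns the new image and the loss history."""
    img = img.copy()
    history = []
    for _ in range(steps):
        loss, grad = objective_and_grad(img, kernels)
        history.append(loss)
        # Normalize so step_size does not depend on the gradient's scale.
        img += step_size * grad / (grad.std() + 1e-8)
    history.append(objective_and_grad(img, kernels)[0])
    return img, history
```

Swapping `kernels` for a different filter bank is the toy analogue of switching from mixed3a to mixed5a. The ascent loop is unchanged; only the features being amplified differ.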

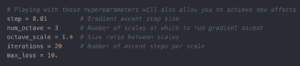

After this experimentation, I wanted to see what a video similar to the one we saw in class would look like. This is what I came up with.

Overall, I think this experiment gave an interesting insight into what DeepDream can do. I wonder whether the style of each layer could be preserved by training a style-transfer model on that layer's output, and how the results would compare to using DeepDream directly.

I can also see myself using this technique to produce images of my cyberpunk cityscapes. I imagine the results would be quite striking.