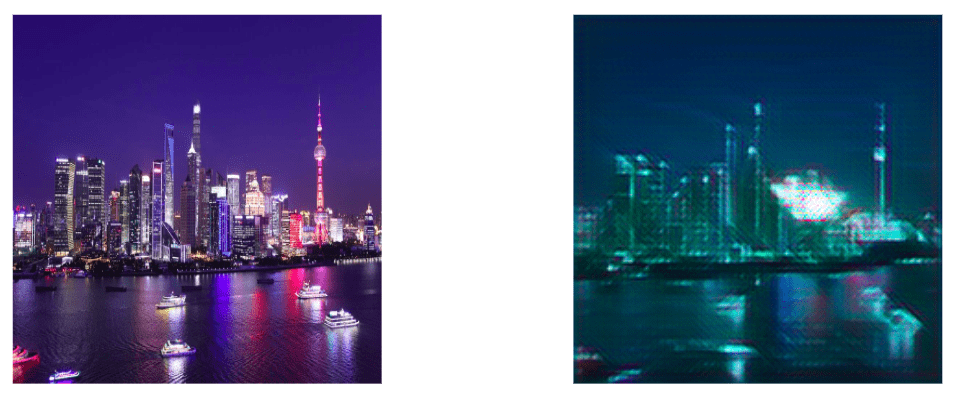

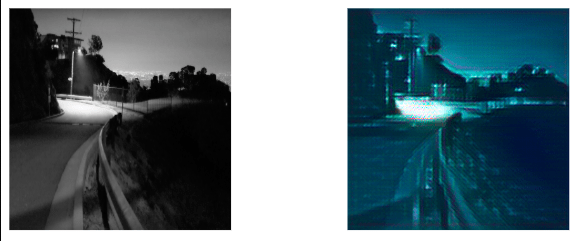

I had a lot of trouble with this assignment. In the end, I wasn’t able to train the Style Transfer model, despite overcoming most of the challenges I faced. However, after spending hours problem solving and researching those challenges, I feel like I learned much more about the files involved and about working in the terminal.

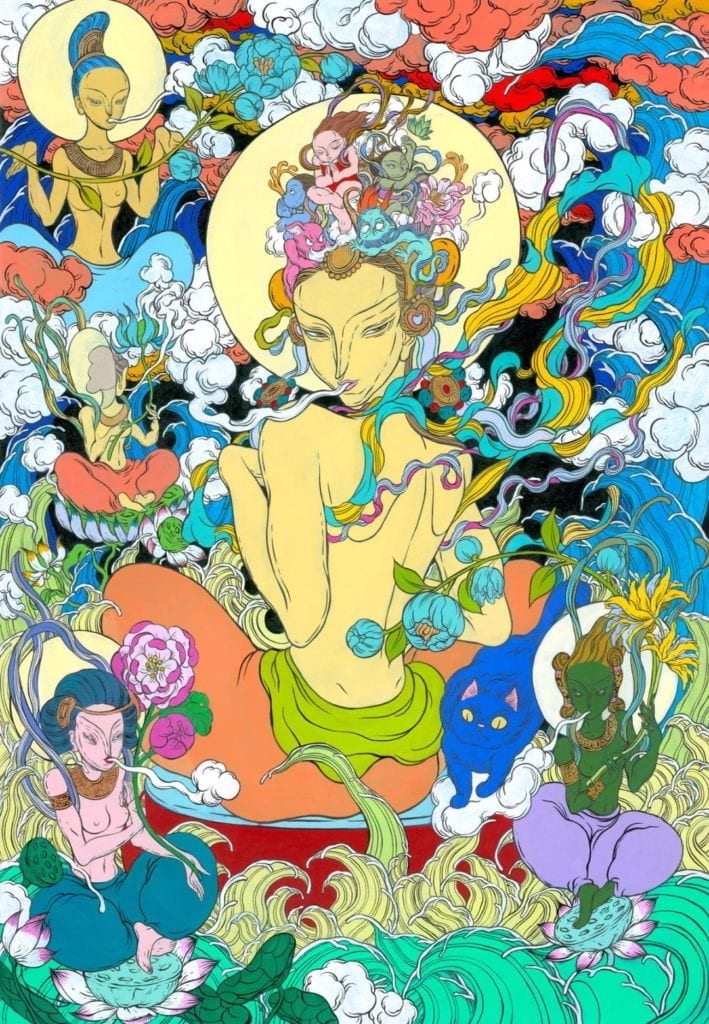

To start training, I cloned the repo, created the environment, and checked the installation. I had to use a different command from the one on the slides because the folder was named styleTransfer instead of styletransferml5, a difference that threw me off at several steps of the training. After the setup downloaded successfully, I renamed my image to shapes.png (a 3D shapes image I thought would produce interesting results) and uploaded it to the images folder on my devCloud.

After completing these steps, I ran the qsub -l walltime=24:00:00 -k oe train.sh command, but I didn’t receive the “successfully training” message. Instead, every time I ran the command, a new pair of files appeared: an output file such as train.sh.o411228 and an error file such as train.sh.e411228.
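Because each submission leaves behind a numbered output/error pair, a small script can help find the latest error file quickly. Below is a minimal sketch. It assumes the `<script>.o<jobid>` / `<script>.e<jobid>` naming seen above and that newer jobs have larger IDs. The helper names `group_job_files` and `show_latest_error` are hypothetical and are not part of the course materials.

```python
import os
import re

# Job files named like train.sh.o411228 (stdout) and train.sh.e411228 (stderr).
# Assumption: this is the naming pattern the scheduler uses on devCloud.
JOB_FILE = re.compile(r"^(?P<script>.+)\.(?P<kind>[oe])(?P<jobid>\d+)$")


def group_job_files(names, script="train.sh"):
    """Group file names by job ID: {job_id: {"o": name, "e": name}}."""
    jobs = {}
    for name in names:
        match = JOB_FILE.match(name)
        if match is None or match["script"] != script:
            continue  # unrelated file, or a job from a different script
        jobs.setdefault(int(match["jobid"]), {})[match["kind"]] = name
    return jobs


def show_latest_error(directory=".", script="train.sh"):
    """Print the error file of the newest job (assumes job IDs increase)."""
    jobs = group_job_files(os.listdir(directory), script)
    if not jobs:
        print(f"no job files for {script} in {directory}")
        return
    job_id = max(jobs)
    err_name = jobs[job_id].get("e")
    if err_name is None:
        print(f"job {job_id}: no error file found")
        return
    with open(os.path.join(directory, err_name)) as f:
        text = f.read()
    print(f"--- {err_name} ---")
    print(text if text.strip() else "(empty: no errors reported)")


if __name__ == "__main__":
    show_latest_error()
```

An empty error file usually means the script itself ran cleanly, so it is worth checking that file first rather than the output file.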

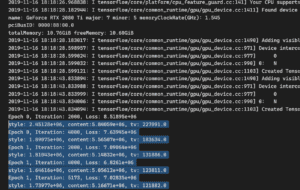

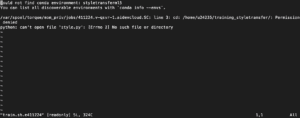

Initially I didn’t realize that train.sh.e411228 was an error file, so I kept checking the output file instead and finding confusing output such as this:

When I finally checked the error file, it said that style.py didn’t exist, so I re-uploaded style.py to the train_style_transfer_devCloud folder on devCloud and tried again.

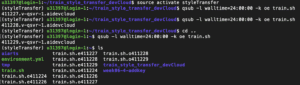

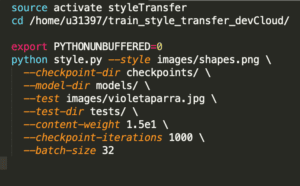

At this point I reviewed all my steps against the slides and realized that I needed to edit the train.sh file, a step I had missed before. I went back into the folder I had downloaded from GitHub and changed the username and file name. After a few more error messages, I realized that the original train.sh referred to styletransferml5, which is why the program couldn’t find style.py: in my setup it was under activate styleTransfer in the train_style_transfer_devCloud folder. Here is my edited train.sh file:
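Mismatched folder names like this can be caught before submitting a job by checking that every file the script relies on actually exists. The following is a rough sketch. The project directory and the list of required files are hypothetical placeholders based on the names in this post, so they should be adjusted to match whatever paths train.sh really uses.

```python
from pathlib import Path

# Hypothetical layout: adjust to the paths your train.sh actually uses.
PROJECT_DIR = "~/train_style_transfer_devCloud"
REQUIRED = ["train.sh", "style.py", "images/shapes.png"]


def missing_paths(base_dir, relative_paths):
    """Return the entries of relative_paths that do not exist under base_dir."""
    base = Path(base_dir).expanduser()
    return [rel for rel in relative_paths if not (base / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths(PROJECT_DIR, REQUIRED)
    if missing:
        print("fix these before running qsub:", ", ".join(missing))
    else:
        print("all required files found")
```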

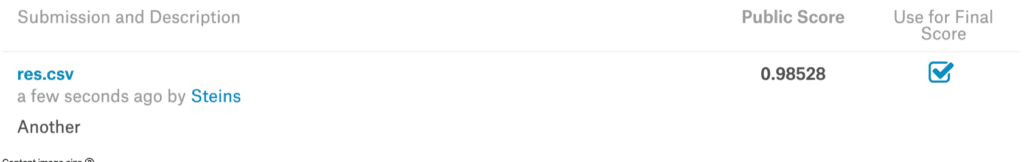

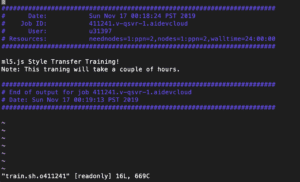

After correcting this mistake, I re-uploaded train.sh, submitted the qsub -l walltime=24:00:00 -k oe train.sh command again, and was very excited to see the message “ml5.js Style Transfer training!”

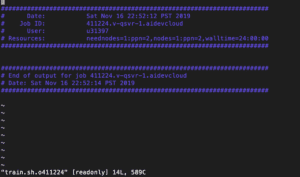

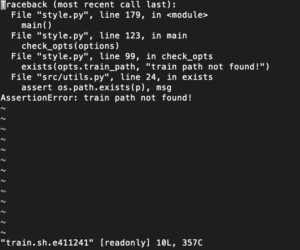

However, a day and a half later the message was still the same, and no model had been trained. At this point you directed me to check the error file, which showed a new error: “train path not found.”

The final hurdle was realizing that when I cloned the repo, the step to unzip train2014.zip had failed. Despite my earlier problem solving, the training data was still zipped and therefore couldn’t be used for training. I downloaded the file again and tried the unzip command on Colfax several times, but each attempt produced error messages.
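One way to tell whether a failed unzip comes from a bad download is to check the archive before extracting it. Below is a minimal sketch using Python's standard `zipfile` module, assuming Python is available on the node where train2014.zip lives. The function name `check_and_extract` is hypothetical, and this is not the repo's own setup step. It also may not avoid the “broken pipe” error, whose cause isn't known here.

```python
import zipfile
from pathlib import Path


def check_and_extract(zip_path, dest_dir):
    """Verify a zip archive's CRCs, then extract it into dest_dir.

    Returns the number of archive entries. Raises ValueError if the file is
    not a readable zip (e.g. a truncated download) or a member is corrupt.
    """
    zip_path = Path(zip_path)
    # is_zipfile looks for the end-of-archive record, which a truncated
    # download is usually missing.
    if not zipfile.is_zipfile(zip_path):
        raise ValueError(f"{zip_path} is not a valid zip file (incomplete download?)")
    with zipfile.ZipFile(zip_path) as archive:
        bad_member = archive.testzip()  # reads every member and checks its CRC
        if bad_member is not None:
            raise ValueError(f"corrupt member in {zip_path}: {bad_member}")
        archive.extractall(dest_dir)
        return len(archive.infolist())
```

For example, `check_and_extract("train2014.zip", ".")` would extract into the current folder. The destination should be wherever the training script expects the data. Note that `testzip()` reads the entire archive once before extraction reads it again, so on a dataset as large as train2014 the check roughly doubles the time spent reading the file.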


In the end, I kept getting a “broken pipe” message, so I had to give up. I feel like I got very close and got to know all the parts that go into style transfer training, but the complications were very frustrating. This was especially true after spending hours problem solving, getting help from friends and fellows, and feeling so close to the finish line after seeing the success messages.