PROJECT

DEMO: https://nhibiki-nyu.github.io/AIArts/Week3/dist/

SOURCE: https://github.com/nhibiki-nyu/AIArts/tree/master/Week3/

DESCRIPTION

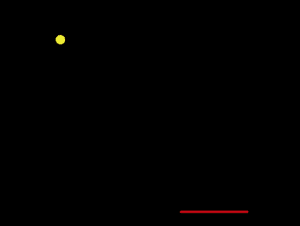

For the Week 3 assignment, I used BodyPix together with the webcam to detect the position of the player’s face. BodyPix is a network that segments a person’s outline from an image, which also makes it possible to locate the person within the picture.

In this project, I turn on the webcam to capture real-time frames and feed each frame to the network to detect the current position of the player’s face. I then integrate the position data into a game, Pong: the real-time position of the face acts as the controller for the board (the paddle). When the player moves in front of the camera, the board shifts along with them.
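The mapping from face position to board position can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes the network output is a row-major array of per-pixel part ids in which 0 and 1 mark the two halves of the face and -1 marks the background (the convention used by BodyPix's part segmentation), and that the board moves horizontally. The function names `faceCenterX` and `paddleLeft`, and the `mirrored` flag, are hypothetical.

```typescript
/**
 * Returns the mean x coordinate (in pixels) of all face pixels,
 * or null when no face pixel is present in the mask.
 * Assumption: part ids 0 and 1 are the left and right face halves.
 */
function faceCenterX(partIds: ArrayLike<number>, width: number): number | null {
  let sumX = 0;
  let count = 0;
  for (let i = 0; i < partIds.length; i++) {
    const id = partIds[i];
    if (id === 0 || id === 1) {
      sumX += i % width; // column of pixel i in a row-major mask
      count++;
    }
  }
  return count > 0 ? sumX / count : null;
}

/**
 * Maps a face x position in the video frame to the left edge of the
 * board on the game canvas, keeping the board fully inside the canvas.
 * Assumption: the webcam image is shown mirrored, so x is flipped by default.
 */
function paddleLeft(
  faceX: number,
  videoWidth: number,
  canvasWidth: number,
  paddleWidth: number,
  mirrored: boolean = true
): number {
  let ratio = faceX / videoWidth; // 0 at the left edge of the frame, near 1 at the right
  if (mirrored) {
    ratio = 1 - ratio;
  }
  const center = ratio * canvasWidth;
  const left = center - paddleWidth / 2;
  // Clamp so the board never leaves the canvas.
  return Math.min(Math.max(left, 0), canvasWidth - paddleWidth);
}
```

In a game loop, the part-id array would come from the segmentation result of each webcam frame. When `faceCenterX` returns null, one reasonable choice is to leave the board where it was in the previous frame.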

SHORTCOMINGS

While building this project, I ran into two main problems:

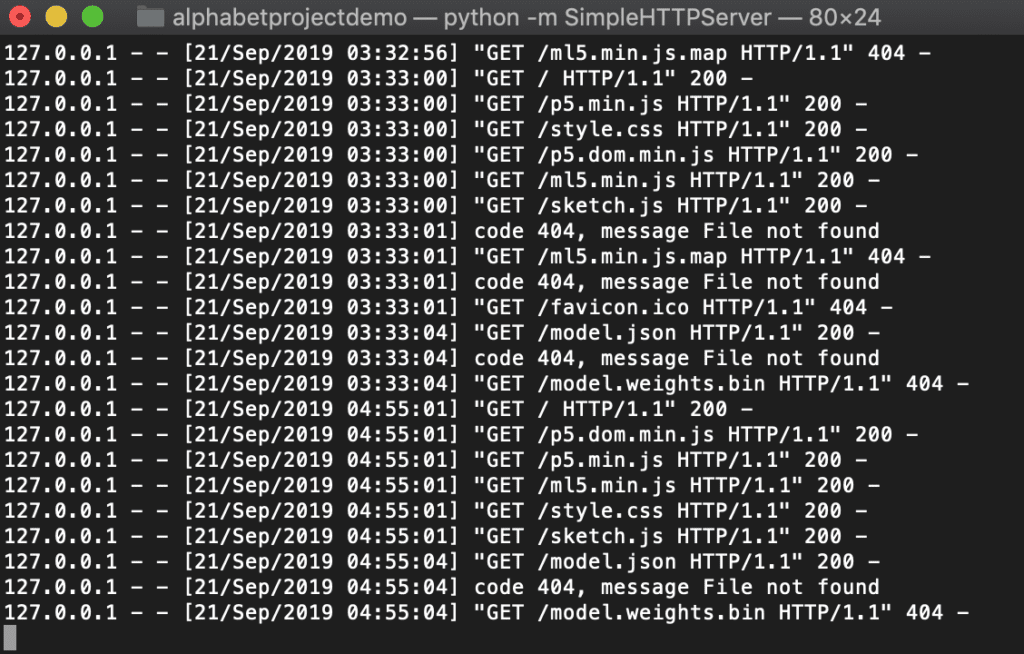

- the weak computational power of my old late-2013 13” MacBook Pro.

- other objects in front of the camera can interfere with the detection.

For the first problem, I read a blog post that Google published early this year. It mentions that, with the help of tfjs, a 2018 15” MBP reaches a frame rate of 25 frames per second. Indeed, when I ran the website on another laptop, it performed better.

For the second problem, I want to introduce FaceNet, another network that can tell apart the different faces in one picture, so that the game tracks just one face in front of the camera.
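One way this could work is sketched below. FaceNet maps each face to an embedding vector, and faces of the same person end up close together in Euclidean distance. The sketch assumes that an embedding of the player has been stored at the start of the game and that embeddings for every face in the current frame are already available; it does not call FaceNet itself. The names `pickTrackedFace` and `maxDistance` are hypothetical, and a suitable threshold would have to be found by experiment.

```typescript
/** Squared Euclidean distance between two embeddings of equal length. */
function squaredDistance(a: number[], b: number[]): number {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return sum;
}

/**
 * Returns the index of the candidate face whose embedding is closest to
 * the reference embedding, or -1 if no candidate is within maxDistance.
 * Assumption: maxDistance is a hand-tuned threshold, not a FaceNet constant.
 */
function pickTrackedFace(
  reference: number[],
  candidates: number[][],
  maxDistance: number
): number {
  let bestIndex = -1;
  let bestDistance = maxDistance * maxDistance; // compare squared values
  for (let i = 0; i < candidates.length; i++) {
    const dist = squaredDistance(reference, candidates[i]);
    if (dist <= bestDistance) {
      bestDistance = dist;
      bestIndex = i;
    }
  }
  return bestIndex;
}
```

Only the face at the returned index would then drive the board; faces of bystanders would be ignored.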