Magenta Studio

Slides: https://drive.google.com/open?id=1fXMxelSqzy15b-pwVr40K46TJRxdlGAo48KnkDRgK34

https://magenta.tensorflow.org/studio

DRUMIFY 0 – Document 2 Chords progression

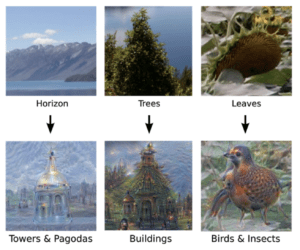

Magenta is an open-source machine learning project created to make art in visual and audio form. Its developers have released a range of programs that use deep learning to generate art and music.

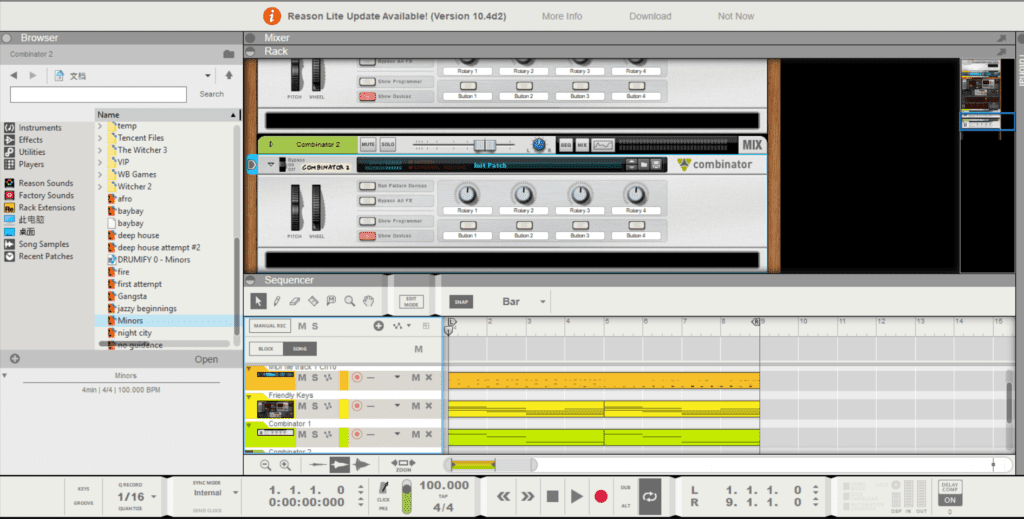

Magenta Studio is a set of music creation tools, built on Magenta's models, that helps artists in their process of making music. It can be used in Ableton Live (a music production program) as a plug-in, or on its own as a standalone application.

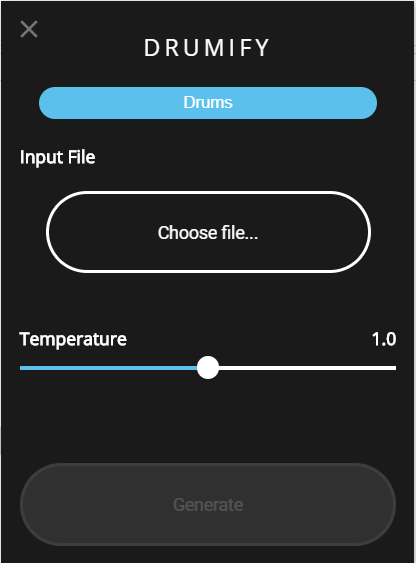

I downloaded the standalone version to test it, and the results were amazing. I loaded a chord progression, saved as a MIDI file, into Drumify, a tool that creates drum loops based on the chords and melodies you feed it. It produced a drum loop that almost felt like a live performance.
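For anyone who wants to try the same thing without a keyboard or DAW, here is a minimal sketch of how a chord-progression MIDI file could be generated with plain Python. It is not how I made my own file. The progression (C, G, Am, F), the velocity, the note lengths, and the output name `chords.mid` are all assumptions chosen for illustration.

```python
import struct

# Hypothetical progression: C, G, Am, F as MIDI note numbers (60 = middle C).
PROGRESSION = [
    (60, 64, 67),  # C major
    (55, 59, 62),  # G major
    (57, 60, 64),  # A minor
    (53, 57, 60),  # F major
]


def vlq(value):
    """Encode a non-negative int as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def build_track(chords, ticks_per_chord, velocity=80):
    """Build the body of one track: each chord is held, then released."""
    events = bytearray()
    for chord in chords:
        # Start every note of the chord at the same time (delta 0).
        for note in chord:
            events += vlq(0) + bytes([0x90, note, velocity])
        # Release the notes; only the first note-off carries the wait.
        for i, note in enumerate(chord):
            delta = ticks_per_chord if i == 0 else 0
            events += vlq(delta) + bytes([0x80, note, 0])
    events += vlq(0) + b"\xff\x2f\x00"  # end-of-track meta event
    return bytes(events)


def build_midi(chords, ticks_per_beat=480, beats_per_chord=4):
    """Return the bytes of a single-track (format 0) Standard MIDI File."""
    track = build_track(chords, ticks_per_beat * beats_per_chord)
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + track


if __name__ == "__main__":
    # Assumed output name; point the tool at whatever file you save.
    with open("chords.mid", "wb") as f:
        f.write(build_midi(PROGRESSION))
```

Running the script writes a four-bar file with one chord per bar. No tempo is set, so players fall back to the MIDI default of 120 BPM. The resulting file should be usable as the input clip for Drumify, though I have only tested Drumify with my own progression.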

Although the results were amazing, a tool like this raises the question of what counts as authentic music in the modern age. If we can create music that is indistinguishable from live music with the help of AI and machine learning, what will happen to the music industry?