Demonstration

Process

Virtual button

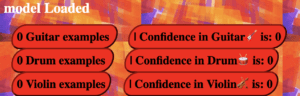

The first step in my project was to replace the buttons that users click with the mouse with virtual buttons triggered by a body part, for example the wrist. I searched for button images and found the following GIF to represent the virtual button. To avoid accidental triggers, I placed the buttons at the top of the screen, as shown in the following picture.

I used PoseNet to get the coordinates of the user’s left and right wrists and then set a trigger range for each virtual button. When the user’s wrist enters a button’s range, the button changes into a GIF of the corresponding instrument (guitar, drum or violin). These GIFs act as feedback so that users know they have successfully triggered the button.
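
The range check described above could look roughly like the following. This is a minimal sketch, not the project's actual code: the button positions and sizes and the function names `isInside` and `findTriggeredButton` are made up for illustration, and it assumes each wrist arrives as an object with `x` and `y` fields.

```javascript
// Hypothetical button layout along the top of the canvas.
// Positions and sizes are illustrative, not taken from the project.
const buttons = [
  { name: "guitar", x: 60, y: 20, w: 80, h: 80 },
  { name: "drum", x: 280, y: 20, w: 80, h: 80 },
  { name: "violin", x: 500, y: 20, w: 80, h: 80 },
];

// Returns true when the point lies inside the button's rectangle.
function isInside(point, button) {
  return (
    point.x >= button.x &&
    point.x <= button.x + button.w &&
    point.y >= button.y &&
    point.y <= button.y + button.h
  );
}

// Returns the name of the first button touched by any wrist, or null.
function findTriggeredButton(wrists, buttonList) {
  for (const button of buttonList) {
    if (wrists.some((wrist) => isInside(wrist, button))) {
      return button.name;
    }
  }
  return null;
}
```

In a sketch that uses PoseNet, the left and right wrist keypoints would be passed in as the `wrists` array on every frame, and the returned name would decide which button switches to its instrument GIF.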

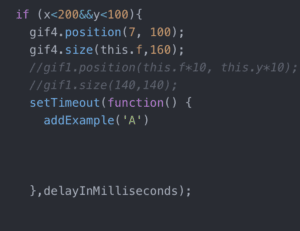

After that, the model should automatically record video frames as the dataset. In the original code, each press of the button adds five examples to class A. With my virtual button, recording should start once the user triggers the button. However, I needed a delay to give users time to put their hands down and get ready to play the instrument, because the model should not count frames of users lowering their hands as part of the dataset. So I set a 3-second delay. The drawback is that collecting examples becomes discontinuous, because I have to keep raising and lowering my hand.
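
One way to express the delay is to remember when the button was triggered and skip frames until 3 seconds have passed. The following is only a sketch under assumptions: `createDelayedRecorder` and its methods are hypothetical names, and the count of five examples per trigger is carried over from the original button behavior.

```javascript
const RECORD_DELAY_MS = 3000; // the 3-second delay described above
const EXAMPLES_PER_TRIGGER = 5; // five examples per trigger, as in the original button

// Creates a recorder that waits after a trigger and then hands out
// a fixed number of "record this frame" signals.
function createDelayedRecorder(delayMs, maxExamples) {
  let triggeredAt = null; // time of the last trigger, or null when idle
  let label = null; // class label chosen by the trigger
  let collected = 0; // examples handed out since the last trigger

  return {
    // Call when a virtual button is triggered.
    trigger(newLabel, nowMs) {
      triggeredAt = nowMs;
      label = newLabel;
      collected = 0;
    },
    // Call once per frame. Returns the label to record for this frame,
    // or null while idle or still inside the delay.
    update(nowMs) {
      if (triggeredAt === null) return null;
      if (nowMs - triggeredAt < delayMs) return null;
      collected += 1;
      const current = label;
      if (collected >= maxExamples) {
        triggeredAt = null; // done, go back to idle
        label = null;
      }
      return current;
    },
  };
}
```

In a p5.js sketch, `update` could be called from `draw()` with the current time in milliseconds, and every non-null result would add the current frame as an example for that class.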

Sound

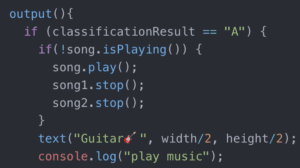

The second step was to add audio as the output. At first I wrote that if the classification result is A, the song plays (song.play();). But the result was that the song was started again and again, what felt like a thousand times in one second, so I could only hear noise instead of the sound of a guitar. I asked Cindy and Tristan for help, and they suggested the following method: if the result is A and the song is not playing right now, play the song. That finally worked, and only one sound played at a time.
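
The fix can be sketched as a small guard around `play()`. The helper `playIfIdle` and the stand-in `createFakeSound` are hypothetical names for illustration; the stand-in only mimics the `play()` and `isPlaying()` methods of a p5.sound file so the logic can be run outside the browser.

```javascript
// Starts the sound for a label only when it is not already playing.
// Returns true if playback was started by this call.
function playIfIdle(label, sounds) {
  const sound = sounds[label];
  if (!sound || sound.isPlaying()) {
    return false;
  }
  sound.play();
  return true;
}

// Stand-in for a loaded sound file, used only for this demonstration.
function createFakeSound() {
  let playing = false;
  let starts = 0;
  return {
    play() {
      playing = true;
      starts += 1;
    },
    stop() {
      playing = false;
    },
    isPlaying() {
      return playing;
    },
    get starts() {
      return starts; // how many times play() was called
    },
  };
}
```

Without the `isPlaying()` check, every classification result would call `play()` again, which is what produced the noise described above.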

UI

The third step was to polish the UI of my project. First came the title: Virtual Instrument. I drew a rectangle as the border and added an image to decorate it. It took some time to shrink the border to the right size. I also added a shadow to the text and added 🎧🎼 to emphasize the music theme.


Then I added some GIFs that show the connection between body movement and music. They sit beside the camera canvas.

Finally, I added a border to the result area.

Experiment

The problems I found in the experiment are as follows:

- Recording and collecting examples is discontinuous, and the picture often gets stuck. One upside is that the user can tell whether collection has finished by seeing whether the picture is smooth or stuck. The stuck image may also have something to do with my computer.

- Sometimes the user touches two buttons at the same time, which is hard for me to prevent in code. So I just changed the range of each button to widen the gap between them.

- I set up a button to start predicting, but it was hard for the model to catch the coordinates of the left wrist, so it sometimes took the user a long time to start predicting. I therefore lowered the required confidence score from 0.8 to 0.5 to make it easier to trigger.

- Once the user pressed the start button, a drum sound played even though the user had not done anything. This confused me. It may be because KNN has no way to say that an input belongs to none of the classes: it can only pick the most likely class for the input and play the corresponding sound.
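
For the last problem, one possible workaround (not something the project currently does) is to train an extra class on frames where the user does nothing and to ignore weak predictions. The sketch below assumes the classifier reports a confidence per label as a plain object; `IDLE_LABEL`, `chooseSound` and the threshold value in the test are hypothetical.

```javascript
// Hypothetical extra class trained on frames where the user stands still.
const IDLE_LABEL = "idle";

// Picks the label whose sound should play, or null when the model
// sees the idle class or is not confident enough about any class.
function chooseSound(confidencesByLabel, minConfidence) {
  let bestLabel = null;
  let bestConfidence = 0;
  for (const [label, confidence] of Object.entries(confidencesByLabel)) {
    if (confidence > bestConfidence) {
      bestLabel = label;
      bestConfidence = confidence;
    }
  }
  if (bestLabel === null || bestLabel === IDLE_LABEL) {
    return null; // nothing detected, or the user is doing nothing
  }
  if (bestConfidence < minConfidence) {
    return null; // too uncertain to play a sound
  }
  return bestLabel;
}
```

With this guard, a drum sound would only play when the drum class clearly wins, instead of whenever it happens to be the closest match.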

Therefore, the next step is to solve these problems and enrich the output. For example, I can add more kinds of musical instruments, and the melody could change according to the speed of the body movement.