Logistics

Group Members – Casey, Eric

Github Repo – CartoonGAN-Application

Previous Report – Midterm Documentation

Proposal – Final Proposal

Background

Motivation

At this stage of the project, we want to further refine the work we have done so far, including 1) our web interface/API to the CartoonGAN models and their functionality; and 2) the web application built on CartoonGAN, which will gain more layers of interaction and more possibilities through the new features we plan to add.

We sincerely hope that through this refinement, CartoonGAN can finally become a powerful and playful tool for learners, educators, artists and technicians, so that our contribution to the ml5 library truly helps others and sparks more creativity in this fascinating realm.

Methodology & Experiments

Gif Transformation

Developing GIF transformation in a web application turned out to be a more demanding task than we imagined. Because we could not find any efficient, modern GIF encoding/decoding libraries, my partner, who worked on this functionality, put in considerable effort to find usable libraries for working with GIFs in our application.

*This could be a potential direction for future contributions.

On the front end, we implemented a simple but effective piping algorithm that recognizes the type of input the user uploaded and triggers the corresponding strategy.
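As an illustration of this kind of routing, here is a minimal sketch that classifies an upload by its leading bytes and looks up a matching strategy. It is not our actual implementation: the names `detectInputKind`, `strategies` and `route` are hypothetical, and the strategy bodies are placeholders that only describe what each branch would do.

```typescript
// Hypothetical sketch; names and strategy bodies are placeholders.
type InputKind = "gif" | "still" | "unknown";

// Returns true when `bytes` begins with the given signature.
function startsWith(bytes: Uint8Array, signature: number[]): boolean {
  if (bytes.length < signature.length) return false;
  return signature.every((value, i) => bytes[i] === value);
}

// Classify an upload by its leading "magic" bytes rather than its file name.
function detectInputKind(bytes: Uint8Array): InputKind {
  const GIF = [0x47, 0x49, 0x46, 0x38]; // "GIF8", shared by GIF87a and GIF89a
  const PNG = [0x89, 0x50, 0x4e, 0x47]; // "\x89PNG"
  const JPEG = [0xff, 0xd8, 0xff];
  if (startsWith(bytes, GIF)) return "gif";
  if (startsWith(bytes, PNG) || startsWith(bytes, JPEG)) return "still";
  return "unknown";
}

// One strategy per input kind, so adding a new kind means adding one entry.
const strategies: Record<InputKind, (bytes: Uint8Array) => string> = {
  gif: () => "decode frames, cartoonize each frame, re-encode",
  still: () => "cartoonize the single image",
  unknown: () => "reject the upload",
};

// Detect the kind, then hand the bytes to the matching strategy.
function route(bytes: Uint8Array): string {
  return strategies[detectInputKind(bytes)](bytes);
}
```

Checking the leading bytes is only one option; reading the MIME type reported by the browser would be simpler, at the cost of trusting the file's declared type.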

Demo gif outputs:

Styles: Original – Chihiro – Shinkai – Paprika – Hosoda – Hayao

Some experiments:

This cyberpunk kitty was recorded during one of our experiments with GIF transformation. As shown in the video, the transformed output (original style to Miyazaki’s Chihiro style) is glitchy because of a single lost frame. The frame loss could come from issues with GIF encoding and decoding in our web application, as we currently process GIFs in the following way:

GIF ➡️ binary data ➡️ tensor ➡️ MODEL ➡️ tensor ➡️ binary data ➡️ GIF

Therefore, encoding issues could significantly affect our final outcome. This is a problem that needs to be looked into in the future.
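One way to narrow down where a frame goes missing would be to compare the frame sequence decoded from the input GIF with the sequence decoded from the output GIF. The sketch below assumes each decoded frame can be summarized by its display delay; `FrameInfo` and `compareFrameSequences` are hypothetical names, and the check does not depend on any particular GIF library.

```typescript
// Hypothetical diagnostic; not part of the current application.
interface FrameInfo {
  delayMs: number; // how long the frame is displayed
}

// Lists the differences between the source and result frame sequences.
// An empty list means the frame count and per-frame timing survived.
function compareFrameSequences(source: FrameInfo[], result: FrameInfo[]): string[] {
  const problems: string[] = [];
  if (source.length !== result.length) {
    problems.push(`frame count changed from ${source.length} to ${result.length}`);
  }
  source.forEach((before, index) => {
    const after = result[index];
    if (after === undefined) return; // already reported as a count mismatch
    if (before.delayMs !== after.delayMs) {
      problems.push(
        `frame ${index}: delay changed from ${before.delayMs} to ${after.delayMs}`,
      );
    }
  });
  return problems;
}
```

Running the same comparison after each stage of the pipeline (after decoding, after the model, after re-encoding) would show which step drops the frame.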

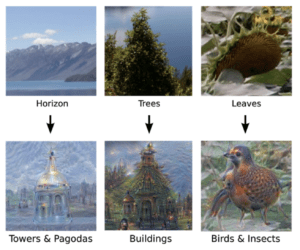

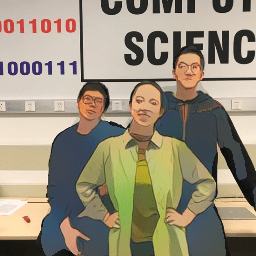

Foreground/Background Transformation

Foreground/background transformation is one of the biggest feature updates to our CartoonGAN web application.

To develop this feature, we use BodyPix to separate humans from their background and treat the result as a mask for the input image. This mask is then used to manipulate the image's pixel data, so that the cartoonization can be applied to the foreground, the background, or both, depending on the user’s choice.

We hope this takes our user experience to another level: users can see themselves in the cartoon world of their choice, either by turning themselves into a cartoonized character or by turning their surroundings into a fusion of reality and fantasy.
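The masking step can be sketched as a per-pixel choice between the original image and its cartoonized version. This is a minimal sketch under stated assumptions, not our exact code: it assumes both images are RGBA buffers of the same size, and that the mask holds one value per pixel with a nonzero value marking a person, which is how we understand BodyPix's person segmentation output. `compositeByMask` and `Target` are hypothetical names.

```typescript
// Hypothetical sketch of mask-based compositing.
type Target = "foreground" | "background" | "both";

// Builds an RGBA buffer that takes cartoon pixels where `target` applies
// and original pixels everywhere else.
function compositeByMask(
  original: Uint8ClampedArray, // RGBA, 4 bytes per pixel
  cartoon: Uint8ClampedArray, // RGBA, same dimensions as `original` (assumed)
  mask: Uint8Array, // 1 value per pixel, nonzero = person (assumed)
  target: Target,
): Uint8ClampedArray {
  if (original.length !== cartoon.length || original.length !== mask.length * 4) {
    throw new Error("original, cartoon and mask must describe the same number of pixels");
  }
  const out = new Uint8ClampedArray(original.length);
  for (let p = 0; p < mask.length; p++) {
    const isPerson = mask[p] !== 0;
    const useCartoon =
      target === "both" || (target === "foreground" ? isPerson : !isPerson);
    const src = useCartoon ? cartoon : original;
    const offset = p * 4;
    out.set(src.subarray(offset, offset + 4), offset); // copy one RGBA pixel
  }
  return out;
}
```

If the model returns an image at a different resolution than the input, the cartoonized buffer would need to be resized to match before compositing.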

Demo foreground/background outputs:

Foreground –

Background –

Social Impact

ml5 library

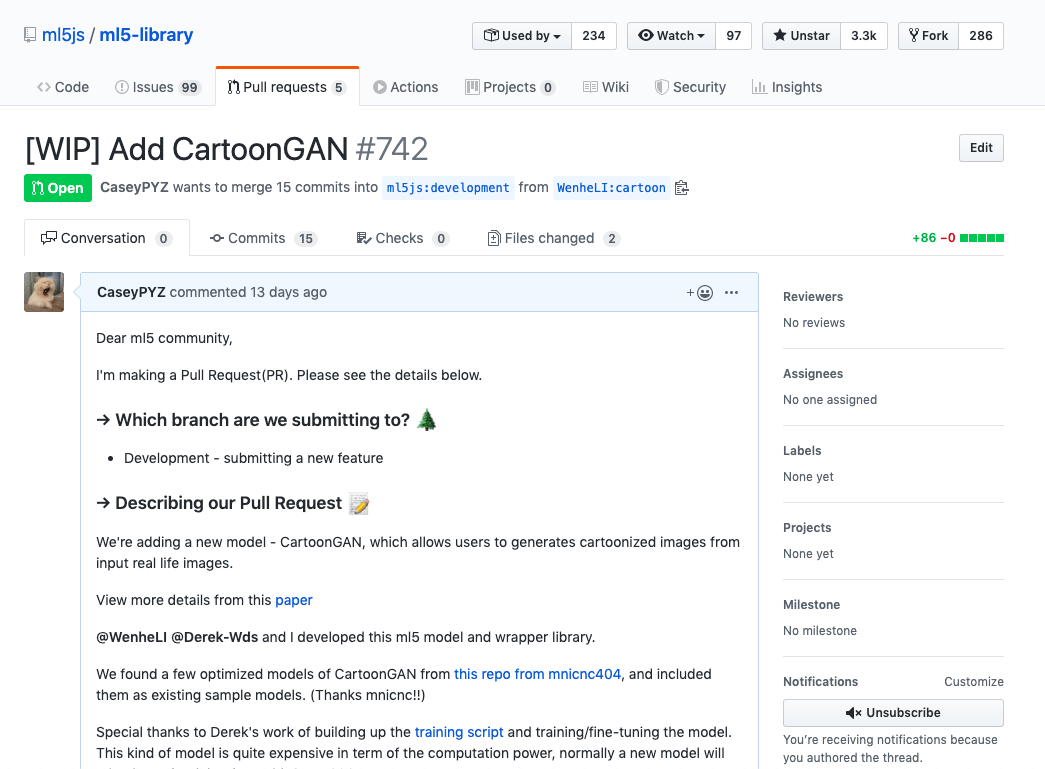

We wrapped CartoonGAN into an ml5 library and submitted a pull request to merge our work into ml5.

We included this as part of our project goal because we hope our work can become a real contribution to the creative world out there. Machine learning in the browser is still a relatively new and emerging field; the more work and attention it receives, the faster it will grow. Though I am a newbie myself, I really hope that my efforts and contribution can help ml5 grow as an amazing tool collection for the brilliant, innovative minds in this realm.

Further Development

There is still work to be done and room for improvement before this project is fully up to our expectations.

On the web application side, GIF transformation is still relatively slow and buggy because the existing tools for working with GIFs in the browser are inadequate. We did our best to work around these issues, but we still want to look into potential improvements, and perhaps find new issues to contribute to.

The CartoonGAN ml5 library is still a work in progress. Although we have the barebones ready, more work is needed. We are currently building tests, examples, guides and documentation for the library. On the design side, we still need to improve the handling of errors and corner cases, image encoding, and support for other input formats. These are all necessary for CartoonGAN to become an easy-to-use and practical library, which is our ultimate hope.