This is a surveillance camera mounted on the wall. It records video.

This is an elevator door. It senses the presence of people or objects, and it stays open if something is in the doorway.
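The hold-open behavior can be sketched as a tiny controller that reads the doorway sensor once per control tick. This is a minimal sketch under assumptions: the sensor is reduced to a single boolean, and `ElevatorDoor` and `hold_ticks` are hypothetical names, not taken from any real elevator system.

```python
from dataclasses import dataclass


@dataclass
class ElevatorDoor:
    """Hypothetical door controller: stays open while anything blocks the doorway."""

    hold_ticks: int = 3  # assumed: clear ticks required before the door starts closing
    state: str = "open"
    clear_count: int = 0

    def step(self, obstructed: bool) -> str:
        """Advance one control tick given the doorway sensor reading."""
        if obstructed:
            # Something is in the doorway: (re)open and restart the countdown.
            self.state = "open"
            self.clear_count = 0
        elif self.state == "open":
            # Doorway is clear: count clear ticks until it is safe to close.
            self.clear_count += 1
            if self.clear_count >= self.hold_ticks:
                self.state = "closing"
        return self.state


door = ElevatorDoor()
print([door.step(r) for r in [False, True, False, False, False, False]])
```

The obstruction check runs before anything else, so a reading of `True` reopens the door even while it is closing, which matches the behavior described above.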

This is an access control device at the front door of the building I live in. There are two ways to get in. Either you swipe your key card on the screen and the door unlocks, or you enter a room number to call the residents, who can then unlock the gate from their home.
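The two unlock paths can be sketched as two independent checks. This is a hypothetical model, not how this particular device works: `AccessPanel`, the card-ID set, and the `ask_resident` callback (standing in for the resident pressing "unlock" during the call) are all assumptions for illustration.

```python
from typing import Callable, Iterable


class AccessPanel:
    """Hypothetical front-door panel with two ways to unlock."""

    def __init__(self, authorized_cards: Iterable[str], ask_resident: Callable[[str], bool]) -> None:
        self.authorized_cards = set(authorized_cards)  # assumed list of registered key cards
        self.ask_resident = ask_resident  # assumed: returns True if the resident unlocks remotely

    def swipe_card(self, card_id: str) -> bool:
        """Path 1: unlock if the swiped key card is registered."""
        return card_id in self.authorized_cards

    def call_room(self, room: str) -> bool:
        """Path 2: call a room; unlock only if that resident approves."""
        return self.ask_resident(room)


panel = AccessPanel({"card-42"}, ask_resident=lambda room: room == "1203")
print(panel.swipe_card("card-42"), panel.call_room("1204"))
```

Keeping the resident's decision behind a callback separates the door logic from the intercom call itself, which in a real building would involve audio or video hardware not modeled here.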

This is the back side of that access control device. To unlock the door from inside, you only need to hold your hand in front of the “button” to block it; the door opens when the sensor detects something in front of it. I’m guessing it’s a light sensor or an infrared sensor.

This is a subway station entrance. There is an X-ray scanner that checks your bag and a metal detector that checks whether you are carrying dangerous objects. There is also a temperature detector that monitors passengers’ body temperature.

This is the gate you pass through to take the subway. It has a reader on top where you can swipe your card, ticket, or phone to pay the fare. After you swipe, the gate opens for you to pass. Like the elevator door, the subway gate can also sense people or objects, and it stays open whenever something is blocking the way.
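The gate's sequence (pay, open, stay open while someone is inside, close once they have passed) can be sketched as a small state machine. This is an assumption-based sketch: `FareGate`, `tap`, and `sense` are hypothetical names, fare validation is reduced to a boolean, and real gates also use timeouts and multiple sensors that are not modeled here.

```python
class FareGate:
    """Hypothetical subway gate: opens after a valid payment, stays open while occupied."""

    def __init__(self) -> None:
        self.is_open = False
        self.someone_inside = False

    def tap(self, fare_valid: bool) -> None:
        # Open only when the card, ticket, or phone payment is accepted.
        if fare_valid:
            self.is_open = True

    def sense(self, blocked: bool) -> None:
        # While the gate is open and something blocks the passage, keep it open.
        if blocked and self.is_open:
            self.someone_inside = True
        # Once the passenger who was inside has cleared the passage, close the gate.
        elif not blocked and self.someone_inside:
            self.someone_inside = False
            self.is_open = False


gate = FareGate()
gate.tap(fare_valid=True)
for reading in [False, True, True, False]:
    gate.sense(reading)
print(gate.is_open)
```

Waiting for a blocked-then-clear transition, rather than closing on the first clear reading, is what keeps the gate from closing on a passenger who has paid but not yet stepped in.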

This is a camera that takes pictures of illegally parked cars and records their information. After recording a car’s information, it shows the car’s plate number on the screen to warn other drivers.

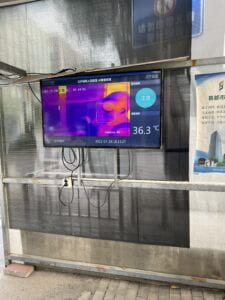

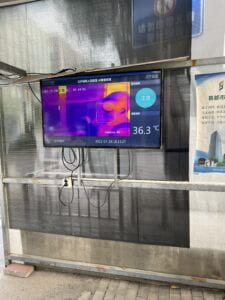

This is an infrared temperature sensor that measures the body temperature of people entering. I think it uses AI algorithms to distinguish people from other objects or animals. When a person passes by, it marks that person’s face and shows their body temperature on the right side of the screen.