Demo (there’s music, so please turn on your speakers):

Concept:

This week we were assigned to build an interface using KNN classification. I was experimenting with the example code provided by the professor and found using video as input very interesting. I followed the code and developed a themed webpage for children to play with. Many children are interested in superheroes and often imitate their gestures and movements. With that in mind, I chose six different superheroes from Marvel and DC as my characters: Superman, Iron Man, Batman, Spiderman, the Hulk, and Captain America.

Design:

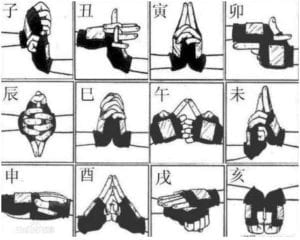

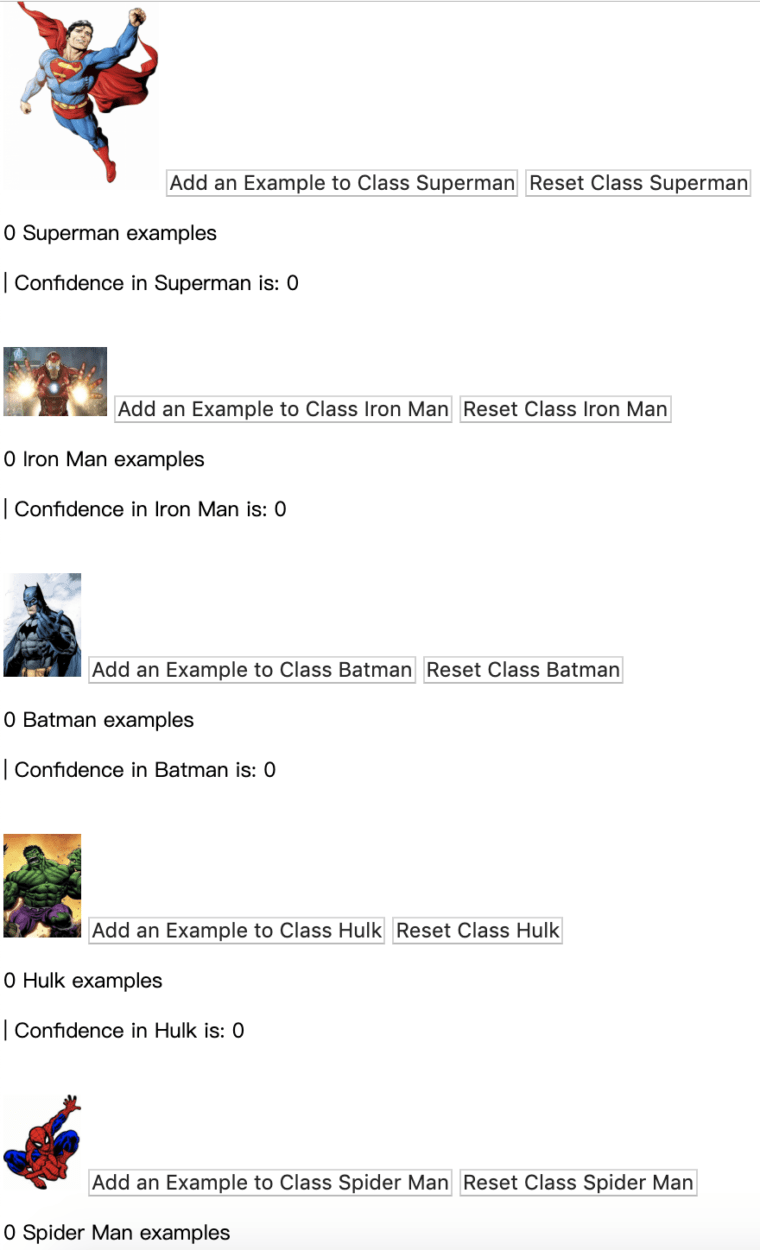

Each of these superheroes has a signature pose that people instantly recognize. Using that, I recorded these movements and poses into the dataset and loaded it into the webpage. All of the poses I recorded are similar to the ones shown in the pictures, so people can easily figure out which pose triggers each character while they are playing around.

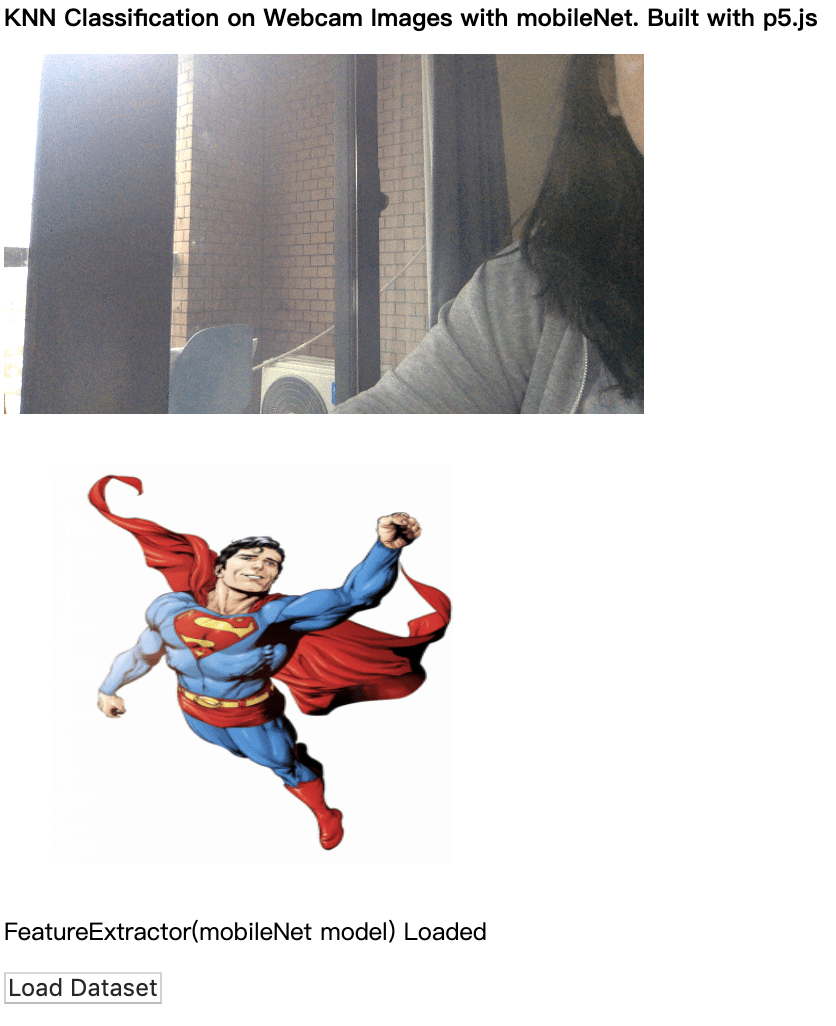

Since I added more classes to the code, the webpage became too long to show the webcam and the “Confidence in …” text for every character at the same time. To make it clearer which superhero the user’s movement was classified as, I added a div that shows the image of the character being triggered.
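
As a rough illustration of how one character could be picked for that div, here is a minimal sketch. It assumes the classifier hands back an object that maps each class label to a confidence between 0 and 1, as the “Confidence in …” text suggests. The function name `topHero` and the 0.6 threshold are hypothetical and not taken from the project.

```javascript
// confidences: assumed shape { "Batman": 0.2, "Hulk": 0.8, ... } (label -> score in 0..1).
// threshold: hypothetical cutoff below which no character is shown.
function topHero(confidences, threshold = 0.6) {
  let bestLabel = null;
  let bestScore = -Infinity;

  // Walk through every class and remember the one with the highest score.
  for (const [label, score] of Object.entries(confidences)) {
    if (score > bestScore) {
      bestLabel = label;
      bestScore = score;
    }
  }

  // Report no character rather than a weak guess.
  if (bestScore < threshold) return null;
  return { label: bestLabel, confidence: bestScore };
}
```

Returning `null` for weak results is one way to keep the picture from flickering between characters while the user is between poses.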

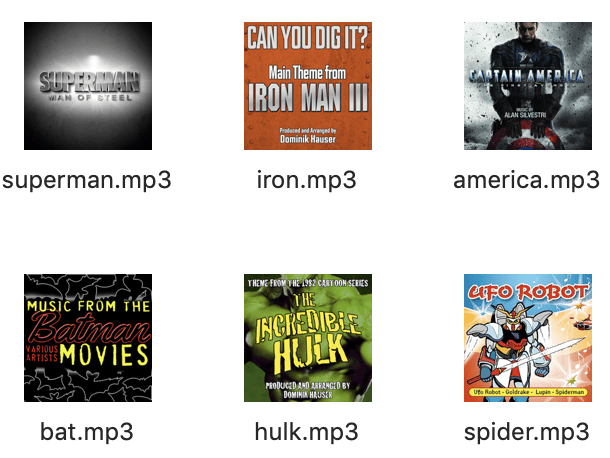

All of the pictures I chose for the characters are from the cartoons or the original comic designs. To match the aesthetic, I chose the theme song from each character’s TV show or cartoon, most of which were produced in the 1960s, to be played whenever the character comes up.

Code:

- I used the example code from class, KNNClassification_video, to train the classifier and get the dataset. Then I changed the layout for each class using HTML.

- To add the audio, I first added the audio source in HTML and gave it an id, then called it in JavaScript.

- To show the corresponding image under the webcam, I added a div in HTML, styled it in CSS, and changed the image in JavaScript by changing its source.
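
The audio and image steps above can be sketched roughly as follows. This is not the project’s actual code: the image paths, the `<audio>` ids, and the function name `showHero` are all placeholders chosen for illustration. The elements are passed in as arguments so the logic stays separate from the page itself.

```javascript
// Hypothetical lookup table: the labels match the six characters,
// but the image paths and <audio> ids are placeholders, not the real file names.
const HEROES = {
  "Superman": { image: "images/superman.png", audioId: "audio-superman" },
  "Iron Man": { image: "images/ironman.png", audioId: "audio-ironman" },
  "Batman": { image: "images/batman.png", audioId: "audio-batman" },
  "Spiderman": { image: "images/spiderman.png", audioId: "audio-spiderman" },
  "Hulk": { image: "images/hulk.png", audioId: "audio-hulk" },
  "Captain America": { image: "images/captainamerica.png", audioId: "audio-cap" },
};

let currentLabel = null; // last character shown, so repeated results do nothing

// imgEl: the <img> inside the result div.
// getAudio: function that returns the <audio> element for a given id.
function showHero(label, imgEl, getAudio) {
  const hero = HEROES[label];
  if (!hero || label === currentLabel) return false; // unknown label or no change

  // Stop and rewind the previous theme song before starting the new one.
  if (currentLabel !== null) {
    const previous = getAudio(HEROES[currentLabel].audioId);
    previous.pause();
    previous.currentTime = 0;
  }

  imgEl.src = hero.image;        // swap the picture by changing its source
  getAudio(hero.audioId).play(); // browsers may block this until the user interacts with the page
  currentLabel = label;
  return true;
}
```

On the page, this could be called with something like `showHero(label, document.getElementById("hero-image"), (id) => document.getElementById(id))`, where `hero-image` is again a made-up id. Skipping the update when the label has not changed avoids restarting the song on every classification result.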

Difficulties:

I tried to show GIFs from modern-day superhero films under the webcam, but it didn’t work well. The GIFs froze and slowed down the entire site. I think the page might not have been able to process that much at the same time.