CONCEPTION AND DESIGN:

In the group research paper, drawing on Crawford’s The Art of Interactive Design, I defined interaction as a cyclic process in which two actors take turns receiving and responding to messages; in computing terms, these steps are called input, processing, and output. As the course progressed, my definition of interaction became richer and more detailed. In the final research paper, I studied a project called Anti-Drawing Machine.

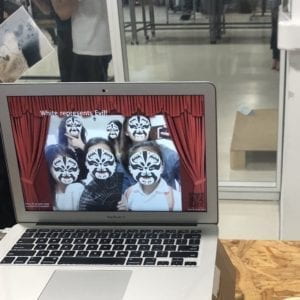

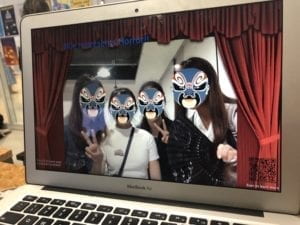

From users’ feedback in the video, we can see that they enjoy the project. Also, after discussing it with professors and some classmates, I found that this project reflects the human feeling of being unable to control machines, and so prompts people to think about the relationship between people and machines. Therefore, I think this project is not only interesting but also meaningful. This gave me two new understandings of interaction: 1. The product should make the user feel motivated to proceed to the next round of input; 2. The product should be interesting and make users think or learn something or be exposed to a new experience.

So I began to think about creating something both interesting and meaningful. Around that time, I happened to see a mask-changing performance online, and it has been reported that many people, especially teenagers, do not value or are even forgetting the traditional Chinese art of mask-changing. I think this is mainly because of a lack of media promotion. So I decided to make a project to promote this art and draw people’s attention to it.

First, to track the user’s face, I used the OpenCV library in Processing for face detection and tracking. Then I found some famous masks from mask-changing performances on the Internet and used image-editing software to adjust their size and background to match the user’s face. Instead of a button, I chose a distance sensor so that the user’s body (or hands) would move as much as possible. I also planned to provide background music and a costume to create the atmosphere of a performance.

FABRICATION AND PRODUCTION:

My first step was to program the mask images to stick to the user’s face. I implemented this using what I had learned in class about images and media. I then installed the distance sensor and sent its readings from Arduino to Processing. Here I encountered a difficulty: whenever the distance detected by the sensor was less than a specific value, Processing received the data and kept changing the mask. In other words, I could not make the mask change just once per change in distance. I tried a lot of logic, such as if() and while(), but it didn’t work. Finally, I came up with the idea of using a Boolean. With Nick’s help, I wrote Arduino code that changes the mask once for each change in distance.
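The "change once per change in distance" behavior can be sketched as an edge trigger. The version below is a minimal illustration in plain Java, not the Arduino code actually used in the project; the class name `EdgeTrigger`, the method `update`, and the threshold value are all hypothetical. The idea is to remember whether the previous reading was already below the threshold and to fire only when that state changes.

```java
// Hypothetical helper (not from the project's Arduino code):
// fires once each time the reading drops below a threshold.
final class EdgeTrigger {
    private final int threshold;     // assumed trigger distance, e.g. in cm
    private boolean wasNear = false; // Boolean flag: was the hand already near last time?

    EdgeTrigger(int threshold) {
        this.threshold = threshold;
    }

    // Returns true only on the reading where the distance first drops
    // below the threshold; stays false while the hand remains near.
    boolean update(int distance) {
        boolean isNear = distance < threshold;
        boolean fired = isNear && !wasNear;
        wasNear = isNear;
        return fired;
    }
}
```

With a threshold of 20 and the readings 50, 12, 10, 11, 40, 9, `update` returns true only for 12 and 9, so the mask would change twice rather than on every near reading.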

After implementing the basic functionality, I adjusted the position of the distance sensor to make it more sensitive to the user’s movement. Also, following Professor Rudi’s advice, I added a stage-curtain frame around the edge of the computer screen to give it more of a stage-performance atmosphere. After the first round of user testing, I received a lot of valuable feedback and drew up the following modification plan:

- Change the way the sensor works

- Flip the video like a mirror so that it moves in the same direction as the user

- Optimize the speed of the camera video

- Let the curtain cover the video to create the feeling of being on stage

- Add the sound of a fan

- Add a screenshot feature (using the saveFrame() function)

- Attach Arduino to the fan

I want to focus first on the choice of sensor. During the user test, many users told me that they couldn’t understand why they had to move their hands closer to the computer screen (to trigger the distance sensor), which made me rethink how to design the sensor so that moving would feel natural. So I thought I could attach an accelerometer to a fan to change the way the sensor works. Then I tested it.

The accelerometer worked well on its own, so I attached it to the fan. Here I had a problem: because the accelerometer measures motion in three dimensions and users’ gestures were too diverse to predict, the sensor on the fan was not as sensitive as it had been in my earlier test. After careful consideration, I chose a fan with a distance sensor as my Arduino part. Later, I invited some friends to try it as users. Their feedback was that this sensor setup gave them a sense of performing while still being sensitive, so I kept the distance sensor.

Another big problem I encountered during production was that the camera image did not display smoothly. I checked and optimized my Processing code several times. With the help of Rudi and Tristan, I corrected the order in which pictures were loaded, deleted some unnecessary delay() calls, and removed the resize() step for the background pictures. After this, my project ran much more smoothly than before. I also addressed the seven items above, which made my project better. I think the user test was very helpful because it showed me the real experience and thoughts of users and gave me ideas for improving the project.
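One general way to keep resizing out of the drawing loop is to scale an image once, up front, and reuse the result on every frame. The sketch below shows that idea in plain Java on a raw pixel array so that it runs on its own; it is not the project's Processing code, and it assumes the slowdown came from repeated resizing, which the write-up does not state in detail. The class name `CachedBackground` and the nearest-neighbor scaling are illustrative choices only.

```java
// Hypothetical illustration: resize once in the constructor,
// then hand out the same pixel array on every frame.
final class CachedBackground {
    private final int[] scaled; // pixels resized once, up front

    CachedBackground(int[] src, int srcW, int srcH, int width, int height) {
        scaled = new int[width * height];
        for (int y = 0; y < height; y++) {
            int sy = y * srcH / height;      // nearest source row
            for (int x = 0; x < width; x++) {
                int sx = x * srcW / width;   // nearest source column
                scaled[y * width + x] = src[sy * srcW + sx];
            }
        }
    }

    // Called every frame: no resizing work, just returns the cached pixels.
    int[] pixels() {
        return scaled;
    }
}
```

In a Processing sketch, the equivalent pattern would be to do the one-time work in setup() and only draw the prepared image in draw().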

CODE FOR PROCESSING

(Attached below)

CONCLUSIONS:

The main purpose of my project is to let people experience the fun of mask-changing performance. This traditional Chinese art can normally be performed only by performers with professional skills, but with this project everyone has the chance to give a unique mask-changing performance. What’s more, I provided background knowledge (the meanings of different mask colors) to let users learn more about mask-changing. The QR code gives interested people direct access to more information about mask-changing performance.

This project meets my definition of interaction. The first criterion is that “the product should make the user feel motivated to proceed to the next round of input”. During the IMA show, I felt that my users, especially kids and teenagers, were very excited about my project, and some kids played with it for more than 5 minutes. Users were attracted by the different kinds of masks and by seeing their own faces on the screen. Many users wanted to take a screenshot or use their phones to take photos. So I felt my project was interesting and attractive to users. The second criterion is that “the product should be interesting and make users think or learn something or be exposed to a new experience”. During the IMA show, many users came to me, asked about mask-changing performance, and scanned the QR code to learn more. Also, while interacting with the computer, many mothers asked their kids about the meanings of the different mask colors, which were shown on the screen. So I think my project can draw people’s attention to traditional mask-changing performance and play a promotional role for it.

In short, my project met my expectations. In the future, I will try to develop it further, for example by perfecting the screenshot function, adding a video recording function, and seeking out more user testing and feedback.

CODE FOR PROCESSING

import gab.opencv.*;

import processing.video.*;

import java.awt.*;

import processing.serial.*;

import processing.sound.*;

SoundFile file;

PImage a;

Serial myPort;

int sensorValue;

String w;

Capture video;

OpenCV opencv;

// List of my Face objects (persistent)

ArrayList<Face> faceList;

// List of detected faces (every frame)

Rectangle[] faces;

// Number of faces detected over all time. Used to set IDs.

int faceCount = 0;

// Scaling down the video

int scl = 2;

PImage[] myImageArray;

PImage bgphoto;

PImage QR;

//PImage img2; if….

int i1;

int sizeX = 896; //this has to be a multiple of ???? 640/480

int sizeY = 672;

int offsetX = -120;

int offsetY = 50;

void setup() {

fullScreen(); //size(800, 600);

video = new Capture(this, sizeX/scl, sizeY/scl);

opencv = new OpenCV(this, sizeX/scl, sizeY/scl);

opencv.loadCascade(OpenCV.CASCADE_FRONTALFACE);

faceList = new ArrayList<Face>();

video.start();

printArray(Serial.list());

// this prints out the list of all available serial ports on your computer.

myPort = new Serial(this, Serial.list()[ 16 ], 9600);

myImageArray= new PImage[15];

for (int it =0; it< myImageArray.length; it++) {

myImageArray[it]=loadImage(it + ".png");

}

//img2 = loadImage("balabala.jpeg");

bgphoto=loadImage("12345.png");

bgphoto.resize(width, height);

QR=loadImage("QR1.png");

}

void draw() {

background(96, 0, 7);

while ( myPort.available() > 0) {

sensorValue = myPort.read();

}

//println(sensorValue);

//scale(scl);

opencv.loadImage(video);

pushMatrix();

scale(scl);

translate(video.width, 0);

scale(-1,1);

image(video, offsetX, offsetY );

// pushMatrix();

// translate(100, 100);//(width – sizeX, height -sizeY);

detectFaces();

// Draw all the faces

for (int i = 0; i < faces.length; i++) {

noFill();

strokeWeight(5);

stroke(255, 0, 0);

//rect(faces[i].x*scl,faces[i].y*scl,faces[i].width*scl,faces[i].height*scl);

//rect(faces[i].x, faces[i].y, faces[i].width, faces[i].height);

if (sensorValue== 1) {

// Load the fan sound only once instead of on every trigger

if (file == null) {

file = new SoundFile(this, "fan.wav");

}

file.play();

i1=int(random(0, 15));

}

image(myImageArray[i1], faces[i].x+offsetX, faces[i].y+offsetY, faces[i].width, faces[i].height);

//delay(10);

}

//popMatrix();

//for (Face f : faceList) {

// strokeWeight(2);

// //f.display();

//}

//scale(scl);

popMatrix();

image(bgphoto, 0, 0);

// Caption and text color for each mask image, indexed by i1 (0 to 14)

String[] captions = {

"Yellow represents Bravery!!", "Blue represents Horror!!OMG!", "White represents Evil!",

"Red represents Loyalty!", "White represents Evil!", "Red represents Loyalty!",

"White represents Evil!", "Red represents Loyalty!", "Green represents Horror!!",

"White represents Evil!", "Red represents Loyalty!", "Green represents Horror!!",

"Blue represents Horror!!OMG!", "Blue represents Horror!!", "Yellow represents Bravery!!"

};

color yellow = color(252, 233, 3);

color blue = color(0, 0, 255);

color white = color(255, 255, 255);

color red = color(255, 0, 0);

color green = color(0, 255, 0);

color[] captionColors = {

yellow, blue, white, red, white, red, white, red, green, white, red, green, blue, blue, yellow

};

w = captions[i1];

textSize(48);

fill(captionColors[i1]);

text(w, 230, 140);

textSize(20);

fill(255, 255, 255);

text("Press R to save your", 0, 730);

text("wonderful moment!", 0, 750);

textSize(15);

text("Scan to learn more", 1200, 740);

//imageMode(CENTER);

image(QR, 1200, 600);

// popMatrix();

}

void keyPressed() {

println(key);

if (key == 'r' || key == 'R') {

saveFrame();

}

}

void detectFaces() {

// Faces detected in this frame

faces = opencv.detect();

// Check whether the detected faces already exist, are new, or have disappeared.

// SCENARIO 1

// faceList is empty

if (faceList.isEmpty()) {

// Just make a Face object for every face Rectangle

for (int i = 0; i < faces.length; i++) {

println("+++ New face detected with ID: " + faceCount);

faceList.add(new Face(faceCount, faces[i].x, faces[i].y, faces[i].width, faces[i].height));

faceCount++;

}

// SCENARIO 2

// We have fewer Face objects than face Rectangles found from OPENCV

} else if (faceList.size() <= faces.length) {

boolean[] used = new boolean[faces.length];

// Match existing Face objects with a Rectangle

for (Face f : faceList) {

// Find faces[index] that is closest to face f

// set used[index] to true so that it can’t be used twice

float record = 50000;

int index = -1;

for (int i = 0; i < faces.length; i++) {

float d = dist(faces[i].x, faces[i].y, f.r.x, f.r.y);

if (d < record && !used[i]) {

record = d;

index = i;

}

}

// Update Face object location

used[index] = true;

f.update(faces[index]);

}

// Add any unused faces

for (int i = 0; i < faces.length; i++) {

if (!used[i]) {

println("+++ New face detected with ID: " + faceCount);

faceList.add(new Face(faceCount, faces[i].x, faces[i].y, faces[i].width, faces[i].height));

faceCount++;

}

}

// SCENARIO 3

// We have more Face objects than face Rectangles found

} else {

// All Face objects start out as available

for (Face f : faceList) {

f.available = true;

}

// Match Rectangle with a Face object

for (int i = 0; i < faces.length; i++) {

// Find face object closest to faces[i] Rectangle

// set available to false

float record = 50000;

int index = -1;

for (int j = 0; j < faceList.size(); j++) {

Face f = faceList.get(j);

float d = dist(faces[i].x, faces[i].y, f.r.x, f.r.y);

if (d < record && f.available) {

record = d;

index = j;

}

}

// Update Face object location

Face f = faceList.get(index);

f.available = false;

f.update(faces[i]);

}

// Start to kill any left over Face objects

for (Face f : faceList) {

if (f.available) {

f.countDown();

if (f.dead()) {

f.delete = true;

}

}

}

}

// Delete any that should be deleted

for (int i = faceList.size()-1; i >= 0; i--) {

Face f = faceList.get(i);

if (f.delete) {

faceList.remove(i);

}

}

}

void captureEvent(Capture c) {

c.read();

}
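The matching step in detectFaces() (Scenario 2) can be read as a greedy nearest-neighbor assignment: each tracked face claims the closest detection that has not already been claimed. The sketch below isolates that idea in plain Java so it can run without the camera or OpenCV. It is a simplified illustration rather than a replacement for the code above; the class name `NearestMatch` and the use of `{x, y}` int pairs in place of Face and Rectangle objects are assumptions made for the example.

```java
// Hypothetical, simplified version of the matching loop in detectFaces().
final class NearestMatch {
    // tracked and detected hold {x, y} positions.
    // Returns match[i] = index of the detection assigned to tracked point i,
    // or -1 if no unclaimed detection was left.
    static int[] match(int[][] tracked, int[][] detected) {
        boolean[] used = new boolean[detected.length];
        int[] match = new int[tracked.length];
        for (int i = 0; i < tracked.length; i++) {
            double record = Double.MAX_VALUE; // smallest distance seen so far
            int index = -1;
            for (int j = 0; j < detected.length; j++) {
                double d = Math.hypot(detected[j][0] - tracked[i][0],
                                      detected[j][1] - tracked[i][1]);
                if (d < record && !used[j]) {
                    record = d;
                    index = j;
                }
            }
            if (index >= 0) {
                used[index] = true; // a detection can be claimed only once
            }
            match[i] = index;
        }
        return match;
    }
}
```

Because the assignment is greedy, the result depends on the order of the tracked faces; it is not guaranteed to minimize the total distance, but it is simple and works well when faces are far apart on screen.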