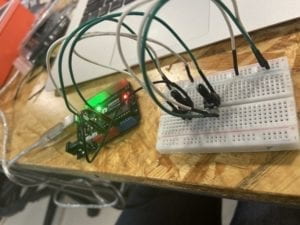

In this week’s recitation, I was asked to work individually to create a Processing sketch that controls media with a physical controller built with Arduino. I chose to use two potentiometers to control an image.

My Processing Code:

```processing
import processing.serial.*;

PImage img1;

String myString = null;
Serial myPort;

int NUM_OF_VALUES = 2;  /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int[] sensorValues;     /** this array stores values from Arduino **/

void setup() {
  size(800, 800);
  background(0);
  setupSerial();
  img1 = loadImage("angel.png");
  imageMode(CENTER);
}

void draw() {
  updateSerial();
  printArray(sensorValues);
  background(255);
  // tint() only affects images drawn after it, so set it before image()
  tint(255, sensorValues[0] / 3);
  // potentiometer 0 sets the image's width and height
  image(img1, 400, 400, sensorValues[0], sensorValues[0]);
  // potentiometer 1 sets the blur amount (integer division gives 0..10)
  filter(BLUR, sensorValues[1] / 100);
}

void setupSerial() {
  printArray(Serial.list());
  // The port index (3 here) depends on your computer: check the printed list.
  // The baud rate must match the one used on the Arduino.
  myPort = new Serial(this, Serial.list()[3], 9600);
  myPort.clear();
  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
```
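`updateSerial()` expects the Arduino to send both readings on one line, separated by a comma and terminated by a newline (for example `512,300`). The Arduino code is not shown here, so that format is an assumption inferred from the parsing logic. Below is a minimal plain-Java sketch of the same parsing step that can be run and tested outside Processing; the class and method names are hypothetical. One deliberate difference: it rejects lines with non-numeric fields, whereas Processing's `int()` silently turns unparseable text into 0.

```java
import java.util.Arrays;

public class SerialLineParser {
    // Number of comma-separated values expected per line (matches NUM_OF_VALUES in the sketch).
    static final int NUM_OF_VALUES = 2;

    // Parses one line such as "512,300\r\n" into `values`.
    // Returns true only if the line has exactly NUM_OF_VALUES numeric fields;
    // otherwise `values` is left unchanged, like the length check in updateSerial().
    static boolean parseLine(String line, int[] values) {
        if (line == null) return false;
        String[] parts = line.trim().split(",");
        if (parts.length != NUM_OF_VALUES) return false;
        int[] parsed = new int[NUM_OF_VALUES];
        for (int i = 0; i < parts.length; i++) {
            try {
                parsed[i] = Integer.parseInt(parts[i].trim());
            } catch (NumberFormatException e) {
                return false; // partial or garbled line: keep the previous values
            }
        }
        System.arraycopy(parsed, 0, values, 0, NUM_OF_VALUES);
        return true;
    }

    public static void main(String[] args) {
        int[] sensorValues = new int[NUM_OF_VALUES];
        parseLine("512,300\r\n", sensorValues);
        System.out.println(Arrays.toString(sensorValues)); // prints [512, 300]
        parseLine("17\n", sensorValues); // wrong field count: ignored
        System.out.println(Arrays.toString(sensorValues)); // prints [512, 300]
    }
}
```

Keeping the previous values on a bad line means a half-received line at startup does not make the image jump to size 0 for a frame.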

I use the first potentiometer to change both the size and the transparency of the image, and the second potentiometer to change the amount of blur.
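In the sketch, the raw readings are scaled by hand: `sensorValues[0]` is used directly as a pixel size (up to 1023, which is larger than the 800-pixel canvas), `sensorValues[0] / 3` as the alpha (up to 341, which `tint()` clamps to 255), and `sensorValues[1] / 100` as the blur amount (integer division, so it moves in whole steps from 0 to 10). A sketch of an alternative, assuming a 0 to 1023 input range (a 10-bit `analogRead()`) and hypothetical target ranges, is to rescale each reading with the same formulas as Processing's `map()` and `constrain()`, reproduced here in plain Java so they can be tested:

```java
public class PotMapping {
    // Same formula as Processing's map(): rescales value from [start1, stop1] to [start2, stop2].
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Same behavior as Processing's constrain(): clamps value into [low, high].
    static float constrain(float value, float low, float high) {
        return Math.max(low, Math.min(high, value));
    }

    // Assumed maximum of a 10-bit analogRead() on the Arduino.
    static final float ADC_MAX = 1023f;

    // Hypothetical target ranges for an 800x800 canvas.
    static float imageSize(int pot0)  { return constrain(map(pot0, 0, ADC_MAX, 0, 800), 0, 800); }
    static float tintAlpha(int pot0)  { return constrain(map(pot0, 0, ADC_MAX, 0, 255), 0, 255); }
    static float blurRadius(int pot1) { return constrain(map(pot1, 0, ADC_MAX, 0, 10), 0, 10); }

    public static void main(String[] args) {
        int[] sensorValues = {1023, 512}; // example raw readings
        System.out.println("size  = " + imageSize(sensorValues[0]));  // size  = 800.0
        System.out.println("alpha = " + tintAlpha(sensorValues[0]));  // alpha = 255.0
        System.out.println("blur  = " + blurRadius(sensorValues[1])); // roughly 5.0
    }
}
```

Inside the Processing sketch itself, the built-in functions can be called directly, for example `tint(255, map(sensorValues[0], 0, 1023, 0, 255));`, which keeps the whole knob travel useful instead of saturating the alpha at about three quarters of a turn.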

Since I had already practiced using Arduino to control Processing, this week’s recitation task was quite simple. All I needed to do was load an image into a PImage with loadImage() and edit it with tint(), filter(BLUR) and similar functions. If I had more time, I would load more pictures and change their positions and sizes so that the characters seem to interact with each other.

Reflection:

After reading *Computer Vision for Artists and Designers*, I found a lot of inspiration. The article introduces various computer vision techniques. The project in it that interests me most is the interactive software of Messa di Voce, which visualizes sound: when a person speaks, the sound he or she makes is transformed into an image. This reminds me of a writing technique I learned in high school, synaesthesia. It shows me that different senses can be associated with each other: I can describe a song as blue to show that it is sorrowful, or describe a girl’s smile as sweet to show how cute she is. In my project, I can use the same idea, engaging people’s different senses to enrich the interaction.