Interactivity

In reading “What Exactly is Interactivity?”, I learned that interaction involves two actors who together take part in input, processing, and output. The author explains interaction through an analogy of two people communicating: one person speaks, and the other listens, thinks, and reacts (5). In this analogy, speaking is the input (for the second person), listening is taking in that input, thinking is processing it, and reacting is the output. After Gomer’s input, Fredegund has three steps to complete: listening and taking in all of the information, thinking and forming their own thoughts, and responding with words (5). Beyond this analogy, “What Exactly is Interactivity?” explains that there are different levels of interaction, some less complex and some more complex (6).

“Physical Computing’s Greatest Hits (and misses)” introduced many examples of physical computing and interactive projects. The article explained how many projects, especially musical and entertainment projects, are interactive. One example is “Meditation Helper,” a device that takes in inputs such as breath rate, posture, and skin resistance, which the machine interprets as indicators of particular meditative states (13). This project inspired our futuristic device because it helped us realize that sensor inputs could be processed into moods and feelings. Although reading moods and feelings is not really possible yet, the idea has potential for a future interactive device because its basics are already established in “Meditation Helper.”

In “Physical Computing – Introduction,” O’Sullivan and Igoe explain the basics of physical computing and circuits, including input, processing, and output. They describe interaction as listening, thinking, and speaking, or, in computer terms, input, processing, and output. They also compare digital inputs and outputs with analog ones: a digital signal has only two states (on or off), while an analog signal can take a range of values.
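The listen/think/speak loop and the digital/analog distinction can be illustrated with a minimal Python sketch. It assumes a 10-bit analog reading in the range 0 to 1023 (what an Arduino Uno’s `analogRead()` reports) and a hypothetical threshold of 512 for turning that reading into a digital on/off value; the function names and the LED output are illustrative, not taken from the readings.

```python
# A minimal sketch of the listen / think / speak loop as input, processing, output.
# Assumptions (not from the readings): a 10-bit analog input (0-1023) and a
# hypothetical threshold of 512 for turning it into a digital on/off value.

ANALOG_MAX = 1023   # largest value a 10-bit analog-to-digital converter reports
THRESHOLD = 512     # hypothetical cut-off between "off" and "on"


def read_input(raw_analog: int) -> int:
    """Input ("listening"): clamp a raw analog reading into the valid range."""
    return max(0, min(ANALOG_MAX, raw_analog))


def process(analog_value: int) -> bool:
    """Processing ("thinking"): reduce a range of values to a digital on/off state."""
    return analog_value >= THRESHOLD


def output(is_on: bool) -> str:
    """Output ("speaking"): respond based on the processed result."""
    return "LED on" if is_on else "LED off"


def interact(raw_analog: int) -> str:
    """Run one full input -> processing -> output cycle."""
    return output(process(read_input(raw_analog)))


for reading in (0, 300, 512, 900, 2000):
    print(reading, "->", interact(reading))
```

Each stage maps to one part of the analogy: `read_input` listens, `process` thinks, and `output` speaks. The analog value can be anything from 0 to 1023, but after processing only two states remain, which is the digital side of the comparison.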

2 Researched Projects

I researched analog/mechanical wall clocks for my less/non-interactive project. According to the definition of interaction that my group and the readings agreed on, interaction contains input, processing, and output, and there are different levels of interactivity. Mechanical clocks have three requirements: to keep time (normally through gear mechanisms), to store potential energy, and to display the time (“How Do Analog Clocks Work?”). “How Do Analog Clocks Work?” discusses the most basic mechanical clock, an hourglass. This device has a source of energy (gravity pulling the sand down), displays time through the amount of sand left, and keeps consistent time because only a constant amount of sand can pass through the hole. The mechanical clocks I researched would not completely fit the definition of interactive, because there is no complex interaction between two actors. Although some mechanical clocks have an input, such as winding the clock or flipping the hourglass, and all mechanical clocks have an output of displaying the time, there is no real or complex processing of the input to produce an output. Especially in the simple example of an hourglass, the device does not process the input to create an output that depends on that input in any complex way.

In contrast, for my interactive device I researched Dance Dance Revolution, the Wii and arcade game. Specifically, I looked at a DIY version whose creator used Arduino to build and program their own game, since it was more articulate about the input, processing, and output. The article “Building a DIY Dance Dance Revolution” explained the building process and the design behind the input, processing, and output. In particular, the creator explained how to build the pressure sensors for the DDR (Dance Dance Revolution) game. The pressure sensors are placed in a mat under wooden squares and respond to applied pressure. When pressure is applied, they send a signal to the Arduino, so the mat essentially acts like a keyboard with only four keys. Each press registers as a keystroke, which is then processed and interpreted, telling the visual display to show whether the player hit or missed that dance step. Other factors, such as timing, are also considered. I consider this an interactive device because there are two actors, the human and the game system, and there are inputs, processing, and outputs. The processing is more complex than the exchange between a person and their refrigerator, as it interprets multiple types of input (four different keys) and compares each input against the game’s timing to decide whether the output for each step is a hit or a miss.
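The hit-or-miss decision described above comes down to comparing each press with the step the game expects. The minimal Python sketch below simulates that logic on a computer rather than reproducing the article’s Arduino code; the pad names, the 0.5 pressure threshold, and the 0.1-second timing window are hypothetical values chosen for illustration.

```python
# A simulated sketch of DDR-style judging: four pressure pads act like a
# four-key keyboard, and each press is compared with the timing of the
# expected dance step. Pad names, the pressure threshold, and the timing
# window are hypothetical values, not taken from the article.
from dataclasses import dataclass

PADS = ("left", "down", "up", "right")  # the four "keys" on the mat
PRESSURE_THRESHOLD = 0.5                # hypothetical: fraction of full-scale pressure
HIT_WINDOW = 0.10                       # hypothetical: allowed timing error in seconds


@dataclass
class Step:
    pad: str     # which pad the player should step on
    time: float  # when the step is due, in seconds from the start of the song


def pad_pressed(pressure: float) -> bool:
    """Input: a pad counts as pressed once its pressure crosses the threshold."""
    return pressure >= PRESSURE_THRESHOLD


def judge(step: Step, pad: str, press_time: float) -> str:
    """Processing: a press is a hit only if it is the right pad at the right time."""
    if pad not in PADS:
        raise ValueError(f"unknown pad: {pad}")
    if pad == step.pad and abs(press_time - step.time) <= HIT_WINDOW:
        return "HIT"
    return "MISS"


chart = [Step("left", 1.0), Step("up", 1.5), Step("right", 2.0)]
# (pad, press time in seconds, normalized pressure) for each step
presses = [("left", 1.05, 0.9), ("down", 1.50, 0.8), ("right", 2.30, 0.7)]

for step, (pad, t, pressure) in zip(chart, presses):
    # Output: what the display would show the player for this step.
    result = judge(step, pad, t) if pad_pressed(pressure) else "MISS"
    print(f"{step.pad:>5} at {step.time:.2f}s -> {result}")
```

With these sample presses, the display would show a hit for the first step, a miss for the wrong pad on the second, and a miss for the late press on the third. Both the pad and the timing have to match, which is what makes this processing more involved than a single on/off response.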

Group Project Watch Alfa

For our group project, Watch Alfa, we focused most on using a variety of sensory inputs to define our device. The device is intended to take in a wide range of inputs, interpret that data as specific moods, feelings, and health statuses, relate those moods, feelings, and health statuses to specific meals, and then instruct a smart kitchen to cook the meal. (The smart kitchen basically acts like a smart home or iHome, but for a kitchen: it cooks and prepares whatever meal it is instructed to.) Every step of the device’s interaction (input, processing, and output) is complex. We focused on specifying sensors that would capture the information needed to determine a person’s feeling, mood, or health status, including sensors for chemicals, hormones, sounds/voices, temperature, heart rate, breath rate, light, and blood pressure. During the presentation, we showcased three possible scenarios of Watch Alfa responding to feelings (homesickness), health (fever/cold), and mood (a romantic dinner). Although the presentation featured the device talking, in reality it would speak only in certain scenarios, such as the date night, where the device consulted with Kris (the man who forgot his anniversary).
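To make the pipeline above more concrete, here is a minimal Python sketch of it as a rule-based lookup. Everything in it is hypothetical: the two sensor fields (temperature and voice keywords, standing in for the full sensor list), the 38 °C fever threshold, the stock state labels, and the meals are placeholders loosely modeled on our three presentation scenarios. A real device would need far richer interpretation than a few if-statements.

```python
# A hypothetical sketch of Watch Alfa's input -> processing -> output pipeline.
# The sensor fields, threshold, state labels, and meals are illustrative
# placeholders, not part of the group's actual design.
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SensorReading:
    """Input: a simplified snapshot from the wrist attachment's sensors."""
    temperature_c: float             # body temperature sensor
    voice_keywords: Tuple[str, ...]  # words picked up by the sound/voice sensor


FEVER_C = 38.0  # hypothetical fever threshold in degrees Celsius


def infer_state(r: SensorReading) -> str:
    """Processing, step 1: match sensor data to a stock feeling, mood, or health status."""
    if r.temperature_c >= FEVER_C:
        return "fever"        # health scenario
    if "home" in r.voice_keywords or "family" in r.voice_keywords:
        return "homesick"     # feeling scenario
    if "anniversary" in r.voice_keywords:
        return "romantic"     # mood scenario
    return "neutral"


# Processing, step 2: each stock state corresponds to a placeholder meal.
MEALS = {
    "fever": "warm soup",
    "homesick": "home-style dish",
    "romantic": "dinner for two",
    "neutral": "balanced everyday meal",
}


def order_meal(r: SensorReading) -> str:
    """Output: the instruction that would be sent to the smart kitchen."""
    state = infer_state(r)
    return f"smart kitchen: cook {MEALS[state]} (detected: {state})"


print(order_meal(SensorReading(38.6, ())))
print(order_meal(SensorReading(36.8, ("anniversary",))))
```

Splitting processing into two steps (reading a state, then mapping the state to a meal) mirrors how the device is described: first interpret the person, then decide what to cook.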

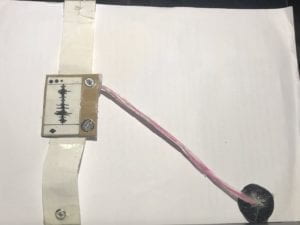

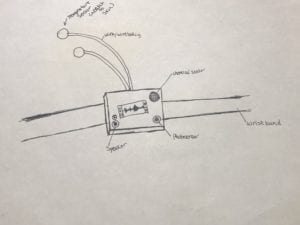

The article “Physical Computing’s Greatest Hits (and misses)” sparked an idea in our group by reminding us of the many sensor inputs a device can use. In particular, “Meditation Helper” helped our group think about a futuristic device we would want to create: right now, devices cannot really interpret mood or feelings well, but in the future, anything is possible. Combining our goals of using interesting sensors to gather inputs and of creating a device that processes feelings and moods, we came up with the idea of a device that can sense moods and feelings. As a joke, one member suggested it make people food based on their feelings. We took that idea and expanded on it, discussing specific inputs and corresponding outputs, along with scenarios to showcase the variety of moods, feelings, and health conditions the device responds to.

To put it simply, the inputs would be readings taken from the many sensors on the device’s wrist attachment (see the sketch and device below). The signal would be sent to the modem (represented in our presentation by a headband and antenna on the head of the actor playing the device). The processing would be the interpretation of those inputs: matching the information to stock moods and feelings, which would then correspond to certain meals, mostly meant to comfort the user. The output would be sending the signal to the smart kitchen and producing the meal. The two actors would be the user and the device itself (both the wrist sensors and their connection to the processing unit).

Compared with my two researched projects, our device has complex processing that the mechanical clock lacks, such as interpreting many inputs and deciding what feeling or mood the person is having. Its processing also includes identifying the mood or feeling and recognizing which meal to prepare for the user. Like the DIY DDR, our device uses sensor inputs and processes relatively complex information: DDR processes timing and keystrokes and matches them to dance steps. The output of our device is more complicated, though, since it involves creating a multi-ingredient product, a meal, whereas DDR’s output is a display of either a hit or a miss (although that is still relatively complex, given the on-screen display involved).

Our group process started with discussions of the interactive and non-/less-interactive devices we had researched, which led to brainstorming for our own device. We talked at length about AI-type devices and devices that act like personal assistants, but we wanted to steer away from adding to existing assistive technology like Alexa and focus instead on a more specific niche: food. I recently read part of The Omnivore’s Dilemma by Michael Pollan for a course on Environment and Society. The book highlights an everyday problem in societies that lack a culture around food, especially Western societies like America, and increasingly China as well. This causes a dilemma (hence the name) about what to eat on a daily basis. People often have to face the problem at least three times a day, and it can even cause anxiety about what to eat and what a healthy meal really is. Our device could hopefully reduce the anxiety caused by the omnivore’s dilemma: because it reads health inputs and moods and decides what to cook for you, you no longer have to worry about what to eat, what healthy really means, or whether to jump on the latest fad from food experts.

In conclusion, if I were to make future improvements to our device, I would also focus on developing the smart kitchen further. Although our current device is meant both to decide what meal to make for you and to cook it, we focused more on the input and processing than on how the device would actually make the food.

A3 Poster (horizontal):

Sketch of Device:

Main Device: