Reflection:

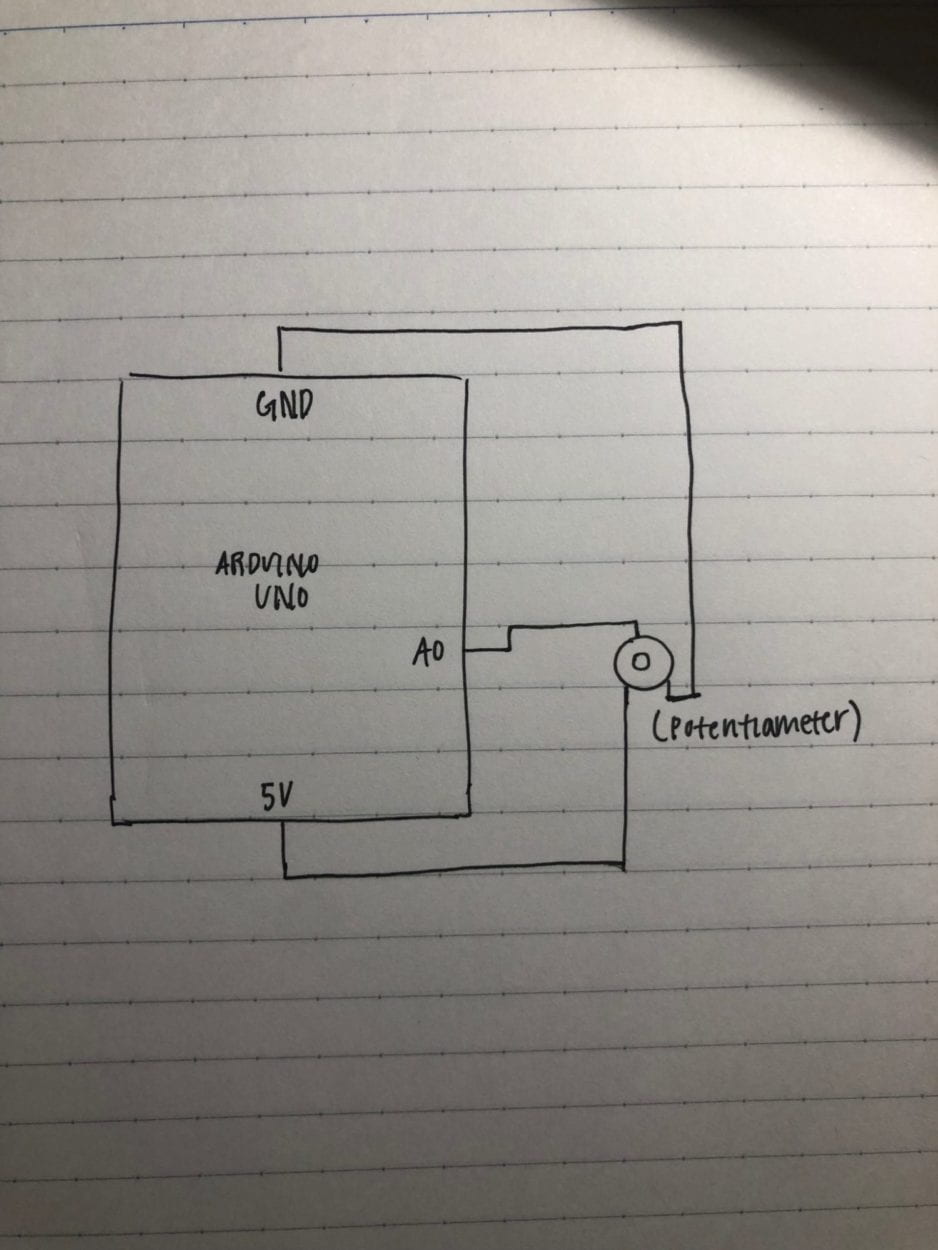

The purpose of this recitation was to create a Processing sketch that manipulates a video or image using a physical controller attached to an Arduino. I chose to work with an image that would be altered by a potentiometer, and picked a photo of a tiger from Unsplash (https://unsplash.com/photos/DfKZs6DOrw4).

In his article “Computer Vision for Artists and Designers: Pedagogic Tools and Techniques for Novice Programmers”, Golan Levin introduces the term “computer vision”, which he defines as “a broad class of algorithms that allow computers to make intelligent assertions about digital images and video” (1). Computer vision techniques have become accessible enough that individuals can use them to create artistic works. In this project, the tools within Processing allowed students like me to alter live videos and create our own projects. Levin notes that Processing is an ideal platform because it “provide[s] direct read access to the array of video pixels obtained by the computer’s frame grabber” (7). For this recitation, I chose to work with an image and use a potentiometer to alter its clarity: as you turn the potentiometer left, the image becomes more pixelated, and as you turn it right, the image becomes clearer. In Processing, an image is simply stored data describing the amount of red, green, and blue in each pixel. This is the basis of the techniques Levin describes under the term “computer vision”. Improvements in technology and software tools are opening doors for interactive artworks, games, and other media.
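To make the point about stored red, green, and blue values concrete, here is a minimal sketch in plain Java, the language Processing is built on. It assumes the packed 0xAARRGGBB integer layout that Processing uses for colors and for the entries of `pixels[]`. The class name `PixelChannels` and the sample pixel value are hypothetical and not part of my recitation code.

```java
// Plain-Java sketch: unpack the color channels of one packed ARGB pixel.
final class PixelChannels {
    // Each channel occupies 8 bits of the int: 0xAARRGGBB.
    static int red(int argb)   { return (argb >> 16) & 0xFF; }
    static int green(int argb) { return (argb >> 8) & 0xFF; }
    static int blue(int argb)  { return argb & 0xFF; }

    public static void main(String[] args) {
        int orange = 0xFFE67E22; // hypothetical sample pixel, fully opaque
        // prints "230, 126, 34"
        System.out.println(red(orange) + ", " + green(orange) + ", " + blue(orange));
    }
}
```

Inside a Processing sketch, the built-in `red()`, `green()`, and `blue()` functions return the same channels as floats, so a helper like this is only needed to see what is happening underneath.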

Recitation exercise:

Arduino Code:

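    void setup() {
      Serial.begin(9600);
    }

    void loop() {
      // analogRead() returns 0-1023; dividing by 4 scales it to 0-255,
      // which fits in the single byte sent by Serial.write().
      int sensorValue = analogRead(A0) / 4;
      Serial.write(sensorValue);

      // too fast communication might cause some latency in Processing
      // this delay resolves the issue.
      delay(10);
    }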
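The division by 4 in the Arduino code can be checked with a small plain-Java sketch. It assumes a 10-bit `analogRead()` range (0 to 1023), as on boards like the Arduino Uno, and relies on `Serial.write()` sending a numeric value as a single byte. The class and method names are hypothetical. The second helper shows what keeping only the low 8 bits of an unscaled reading would give, which is my assumption about how an oversized value would be cut down.

```java
// Plain-Java sketch: why a 10-bit reading is divided by 4 before sending.
final class SerialByteScaling {
    // Scale a 10-bit reading (0..1023) down to the range of one byte (0..255).
    static int toByteRange(int adcReading) {
        return adcReading / 4;
    }

    // What would remain if only the low 8 bits of the raw reading were kept.
    static int lowEightBits(int adcReading) {
        return adcReading & 0xFF;
    }

    public static void main(String[] args) {
        int[] readings = {0, 256, 512, 1023}; // hypothetical sample readings
        for (int r : readings) {
            System.out.println(r + " -> scaled " + toByteRange(r)
                + ", low 8 bits only " + lowEightBits(r));
        }
    }
}
```

With scaling, the values rise steadily from 0 to 255 across the whole turn of the potentiometer. Without it, the low 8 bits wrap around, so a half turn (512) would look the same as 0.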

Processing Code:

    import processing.serial.*;

    Serial myPort;
    int valueFromArduino;
    PImage img;

    void setup() {
      size(800, 1202);
      background(0);
      img = loadImage("tiger.jpeg");

      // this prints out the list of all available serial ports on your computer.
      printArray(Serial.list());

      // The port index (1 here) differs between computers. If this line
      // throws an error, check the printed list, find the port
      // "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
      // and replace the index with the index number of that port.
      myPort = new Serial(this, Serial.list()[1], 9600);
    }

    void draw() {
      noStroke();

      // to read the value from the Arduino
      while (myPort.available() > 0) {
        valueFromArduino = myPort.read();
      }

      // block size in pixels; the lower bound is 1 so the loops always advance
      int rectSize = int(map(valueFromArduino, 0, 255, 1, 100));
      int w = img.width;
      int h = img.height;

      img.loadPixels();
      for (int y = 0; y < h; y = y + rectSize) {
        for (int x = 0; x < w; x = x + rectSize) {
          // color each block with the pixel at its top-left corner
          int i = x + y * w;
          fill(img.pixels[i]);
          rect(x, y, rectSize, rectSize);
        }
      }
      // not strictly needed, since the pixels are only read
      img.updatePixels();

      println(valueFromArduino); // This prints out the values from Arduino
    }
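The pixelation in `draw()` comes down to sampling one pixel per block and repeating it across the block. The plain-Java sketch below shows the same idea on a bare array, under some assumptions: the image is a row-major `int[]` like Processing's `pixels[]`, and the result is written into a new array instead of being drawn with `rect()`. The class name `Pixelate` and the tiny test image are hypothetical.

```java
// Plain-Java sketch: block pixelation on a row-major pixel array.
final class Pixelate {
    // Returns a copy of `pixels` (width w, height h) in which each
    // blockSize x blockSize tile is filled with the tile's top-left pixel.
    static int[] pixelate(int[] pixels, int w, int h, int blockSize) {
        if (blockSize < 1) {
            throw new IllegalArgumentException("blockSize must be at least 1");
        }
        int[] out = new int[pixels.length];
        for (int y = 0; y < h; y += blockSize) {
            for (int x = 0; x < w; x += blockSize) {
                int sample = pixels[x + y * w]; // same index formula as the sketch
                // Clamp the tile at the right and bottom edges of the image.
                int yEnd = Math.min(y + blockSize, h);
                int xEnd = Math.min(x + blockSize, w);
                for (int ty = y; ty < yEnd; ty++) {
                    for (int tx = x; tx < xEnd; tx++) {
                        out[tx + ty * w] = sample;
                    }
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical 4x2 "image" whose pixel values are just 0..7.
        int[] image = {0, 1, 2, 3,
                       4, 5, 6, 7};
        // prints "[0, 0, 2, 2, 0, 0, 2, 2]"
        System.out.println(java.util.Arrays.toString(pixelate(image, 4, 2, 2)));
    }
}
```

A block size of 1 returns the image unchanged, which matches the clearest end of the potentiometer in my sketch.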

The coding for this project was not hard, since I used the code format taught to us in class. The one part I had trouble with was mapping the values: when I remapped them from (0,255) to (0,100), the Processing code did not work. After I changed the target range to (1,100), the code worked.
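One plausible explanation for the failure, assuming the loops step by `rectSize` as in my sketch: with a target range that starts at 0, a reading of 0 (which is also the variable's value before any serial data arrives) maps to a block size of 0, so `x = x + rectSize` never advances and `draw()` never finishes. The plain-Java sketch below illustrates this. Its `map` is a linear remap written out by hand and meant to behave like Processing's `map()`. The class and helper names are hypothetical.

```java
// Plain-Java sketch: why the mapped range must start at 1, not 0.
final class BlockSizeMapping {
    // Hand-written linear remap from [start1, stop1] to [start2, stop2].
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Block size for a serial byte, given the low end of the target range.
    static int blockSize(int serialByte, int lowest) {
        return (int) map(serialByte, 0, 255, lowest, 100);
    }

    // Number of loop steps needed to cross `width` pixels, or -1 if the
    // loop would never advance because the step is not positive.
    static int stepsAcross(int width, int step) {
        if (step < 1) {
            return -1;
        }
        int steps = 0;
        for (int x = 0; x < width; x += step) {
            steps++;
        }
        return steps;
    }

    public static void main(String[] args) {
        int width = 800; // canvas width from the sketch
        System.out.println(stepsAcross(width, blockSize(0, 0)));   // prints -1 (step 0)
        System.out.println(stepsAcross(width, blockSize(0, 1)));   // prints 800 (step 1)
        System.out.println(stepsAcross(width, blockSize(255, 1))); // prints 8 (step 100)
    }
}
```

Starting the range at 1 is the simplest fix. Another option would be to keep (0,100) and clamp the result with `max(1, rectSize)`.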

Conclusion:

Through completing this week’s recitation and reading Golan Levin’s article, I have a clearer understanding of the algorithms that allow us to create interactive works. I have also learned how complicated it can be to incorporate human interaction with technology. Computer vision makes it possible to create interactive works that respond to human movement. Although my project is simple and does not reach the level of interaction of the projects Levin discusses, completing this exercise still gave me a better understanding of how pixels work within Processing. It also made me reflect on my final project and rethink how I can incorporate the kind of interaction he describes. Instead of merely having a button to interact with, I can find a way to include human movement in my own work.