This recitation was relatively simple because we had already done something similar in class. Starting from that example, I replaced a few parts of the code and got the project working. This week’s reading really surprised me with how far computer vision has come. I take for granted all the technology we have accessible, like face detection and Snapchat filters. About a decade ago, this technology was just being created by people at the top of the software field, and now any kid with a phone has access to it. Take, for example, the smile detector from this week’s reading: the technology behind it used to be very complicated, but it has been refined to the point that, with enough time, a high schooler could use Processing to detect a smile.

My level of expertise is not that high, so I went for a simpler alteration in my project. I focused on directing the user’s attention: wherever I moved the photo using the potentiometers is where the user’s attention would be focused. I also included a larger orca at the end to show how you could incorporate different photos on the same background. So you could show someone a beautiful landscape at first, but at any time you could switch to the great seas with a monster orca staring at you. All in all, this week’s reading was interesting, and it makes me happy to live in a time when the complicated applications of the past are now commonplace.

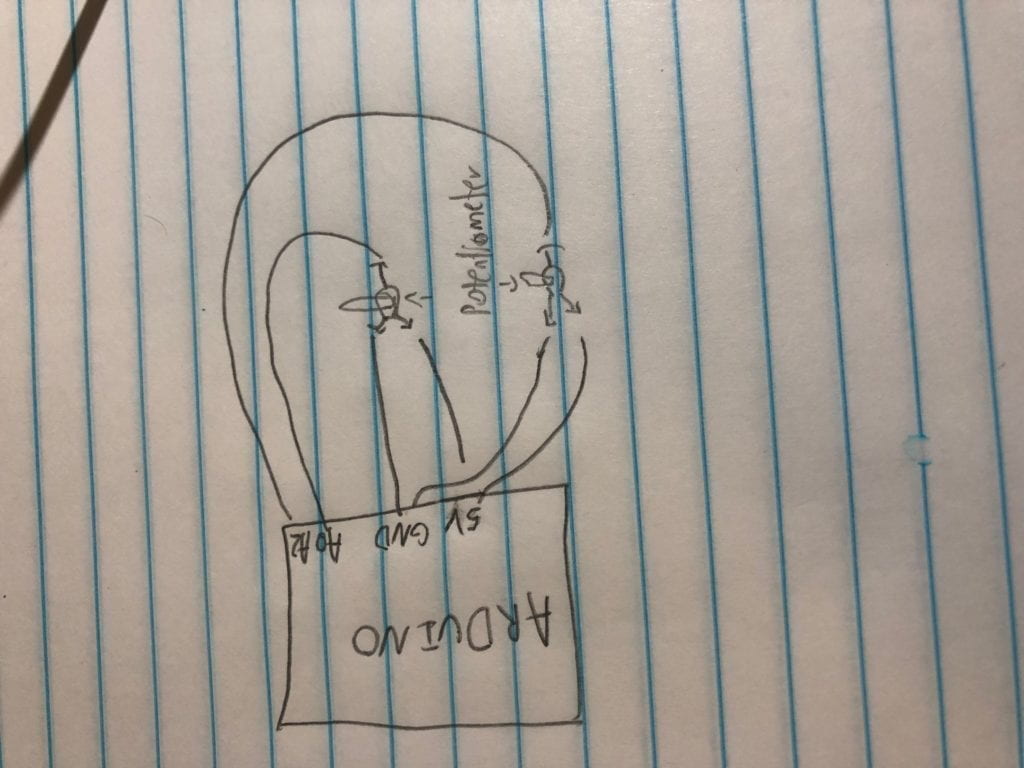

Schematic

CODE: