CIFAR Dataset Training

DATE: March 18th, 2019

Introduction

For this week’s assignment, the goal was to train the model for the highest accuracy. Since the code was already given to us, I just tweaked the three variables batch_size, num_classes, and epochs to give me the best result. From my understanding, batch_size controls the number of training examples used in one forward and backward pass, and one epoch is a full pass through the training set. I’m not sure if I was supposed to change num_classes, but I experimented with it anyway.
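To make the relationship between batch_size and epochs concrete, here is a small sketch that counts how many batches (weight updates) make up one epoch. It assumes a training set of 50,000 images, which is the size of the standard CIFAR training split; the helper name steps_per_epoch is my own and is not part of the assignment code, and the batch size of 128 is only there for comparison.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of batches needed for one full pass over the training set."""
    # The last batch may be smaller than batch_size, so round up.
    return math.ceil(num_examples / batch_size)


# Assumption: 50,000 training images (standard CIFAR training split).
NUM_TRAIN = 50_000

for batch_size in (10, 32, 128):
    print(batch_size, steps_per_epoch(NUM_TRAIN, batch_size))
# prints:
# 10 5000
# 32 1563
# 128 391
```

A smaller batch size means more updates per epoch, which is one reason training time changes when batch_size changes.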

Process

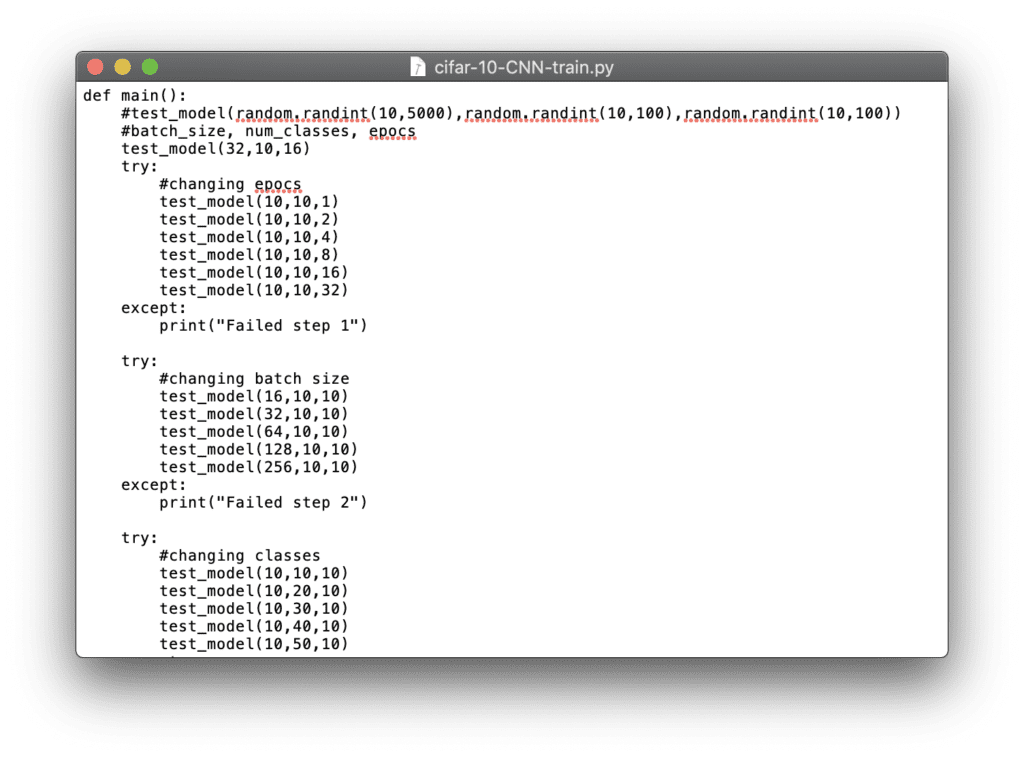

I modified the code so that I could call a single function, test_model, to run the model overnight. I ran it for about 6 hours and was able to train 16 different models with various parameters. I held the other variables constant and modified only one at a time to understand the effect of each individual variable on the accuracy. However, this approach has a limitation: the variables interact with each other, so the effect of one can depend on the values of the others, and the best value of each variable found separately is not guaranteed to be the best combination. I wrote the batch size, epochs, and number of classes, along with the accuracy and loss, to a text file for later analysis. In hindsight, I wish I had logged the amount of time each model took as well.
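The overnight run described above could be organized roughly like the sketch below, which also records how long each model takes. This is not the actual assignment code: one_at_a_time, run_sweep, and fake_train are hypothetical names, fake_train is a placeholder standing in for the real training function, and the candidate values and log file name are only illustrative.

```python
import math
import os
import tempfile
import time
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Config = Dict[str, int]


def one_at_a_time(baseline: Config, candidates: Dict[str, List[int]]) -> Iterator[Config]:
    """Yield configs that differ from the baseline in exactly one variable."""
    for name, values in candidates.items():
        for value in values:
            config = dict(baseline)
            config[name] = value
            yield config


def run_sweep(
    train_fn: Callable[..., Tuple[float, float]],
    configs: Iterable[Config],
    log_path: str,
) -> List[dict]:
    """Train once per config and append one line per run to a text log."""
    results = []
    with open(log_path, "a") as log:
        for config in configs:
            start = time.perf_counter()
            loss, accuracy = train_fn(**config)
            seconds = time.perf_counter() - start  # wall-clock time for this run
            row = dict(config, loss=loss, accuracy=accuracy, seconds=seconds)
            log.write(",".join(f"{k}={v}" for k, v in row.items()) + "\n")
            log.flush()  # keep the log usable if the overnight run is interrupted
            results.append(row)
    return results


def fake_train(batch_size: int, num_classes: int, epochs: int) -> Tuple[float, float]:
    """Hypothetical placeholder for the real training call; returns no real metrics."""
    return math.nan, math.nan


# Illustrative values only; the baseline and candidates are assumptions.
baseline = {"batch_size": 32, "num_classes": 10, "epochs": 10}
candidates = {"batch_size": [16, 64], "epochs": [4, 16]}
log_path = os.path.join(tempfile.gettempdir(), "sweep_log.txt")

rows = run_sweep(fake_train, one_at_a_time(baseline, candidates), log_path)
print(len(rows))  # 4 runs: two batch sizes plus two epoch counts
```

To check for interactions between variables, the configs could instead be generated from every combination with itertools.product, at the cost of many more training runs.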

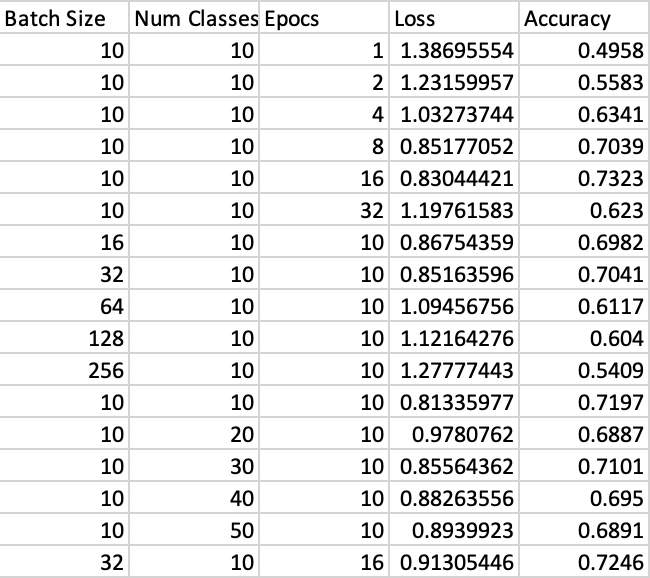

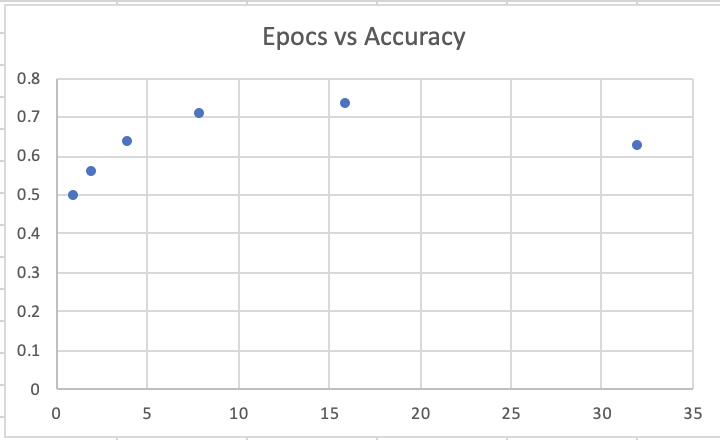

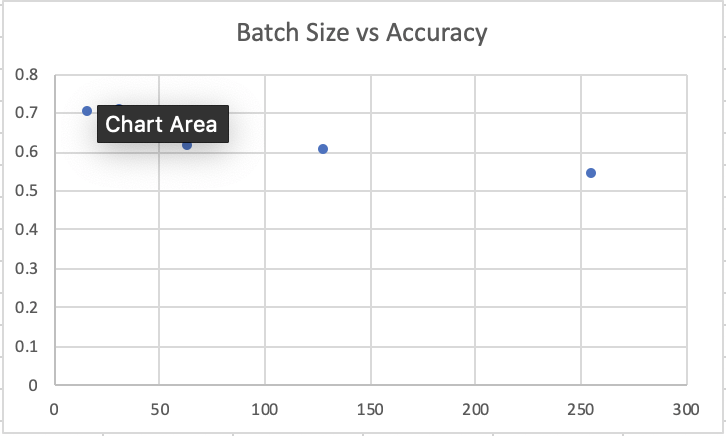

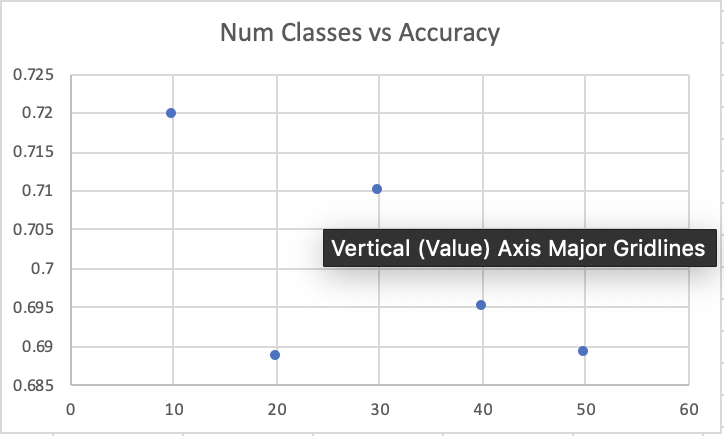

Below are the results I obtained in table form and in a line graph. There are some interesting relationships that seem to occur.

As the number of epochs goes up, the accuracy increases, but only up to a point. Beyond 16 epochs, my guess is that the accuracy will go down or stay relatively constant. As the batch size increases, the accuracy decreases slightly. The accuracy also goes down as the number of classes increases.

Conclusion

The best result I got was with a batch size, number of classes, and number of epochs of 10, 10, and 10, which gave an accuracy of 73%, similar to the example given. I think this is pretty reasonable considering the amount of time it takes to train isn’t too bad. I also tried running the model with the best value from each set (a batch size of 32, 10 classes, and 16 epochs); this worked well with an accuracy of 72%, but it wasn’t all that surprising. I hope that as I learn more machine learning concepts I will be able to understand how this all works. Under-fitting and overfitting are apparent from the results I obtained. I am very excited to learn how to use the Intel AI cloud service to run my code, because the amount of time it takes to train the model is too long. I hope to revisit this in the future and see if I can do a better job of training the model.