Living Image

GitHub Link: https://github.com/DaKoala/living-image

This is an interactive webpage that allows users to interact with images using their faces.

Usage

Several face movements control the image:

- Move face forward/backward: Change the scale of the image.

- Move face left/right: Change the brightness of the image.

- Tilt head: Rotate the image.

- Turn head left/right: Switch image.

- Nod: Switch image style.

Inspiration

Before the midterm, I found Style Transfer really cool, so I decided to build my midterm project around it. However, the project would lack interaction if I used Style Transfer alone. In the end, I combined Style Transfer with PoseNet to build a project with plenty of interaction and cool visual effects.

Typically, people interact with images on websites using a mouse and keyboard. With the help of machine learning, we can make images reactive. In this project, the images seem to “see” our faces and change their state in response.

Development

Code Split

To keep my code organized, I wrote the project in TypeScript and bundled it with Parcel.

TypeScript is a superset of JavaScript, so it has every feature that JavaScript has. In addition, it has static type checking, which catches a large number of errors while editing, before the code ever runs.

Parcel is the tool that bundles my code. I split my code into different modules during development, and in the end everything is bundled into one HTML file, one CSS file, one JS file and some static asset files.

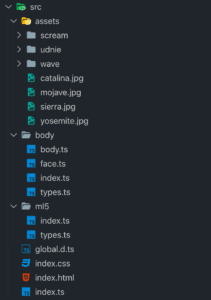

Structure of my source code

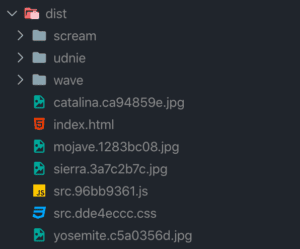

Structure of my distribution code

Face

In the project, I used a singleton class Face to detect user interaction.

Basically, it retrieves data from PoseNet every frame to update the position of different facial parts and compute the state of the face.
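As a rough illustration of what "computing the state of the face" could look like, here is a minimal sketch. It assumes PoseNet-style keypoints with pixel coordinates; the `FaceKeypoints` and `FaceState` types and the `computeFaceState` function are hypothetical names for this example, not the project's actual code.

```typescript
// A 2D point in video-frame pixel coordinates.
interface Point {
  x: number;
  y: number;
}

// Only the keypoints this sketch needs (hypothetical shape).
interface FaceKeypoints {
  nose: Point;
  leftEye: Point;
  rightEye: Point;
}

// Values derived from the keypoints on each frame (hypothetical shape).
interface FaceState {
  eyeDistance: number; // grows as the face moves toward the camera
  centerX: number;     // horizontal position of the face
  tiltDeg: number;     // angle of the line through both eyes, in degrees
}

function computeFaceState(k: FaceKeypoints): FaceState {
  const dx = k.rightEye.x - k.leftEye.x;
  const dy = k.rightEye.y - k.leftEye.y;
  return {
    eyeDistance: Math.hypot(dx, dy),
    centerX: k.nose.x,
    // The sign of the angle depends on whether the video is mirrored.
    tiltDeg: (Math.atan2(dy, dx) * 180) / Math.PI,
  };
}
```

In a setup like this, `eyeDistance` could drive the image scale, `centerX` the brightness, and `tiltDeg` the rotation.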

I used a callback-style approach: other modules register functions with Face, and Face calls them when users nod or turn their heads.
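The callback idea can be sketched as follows. This is a simplified illustration under my own assumptions: the method names (`getInstance`, `onNod`, `onTurn`, `emitNod`, `emitTurn`) are hypothetical and may differ from the real Face class.

```typescript
type TurnDirection = "left" | "right";

class Face {
  private static instance: Face | null = null;
  private nodListeners: Array<() => void> = [];
  private turnListeners: Array<(direction: TurnDirection) => void> = [];

  // A private constructor prevents creating a second instance with `new`.
  private constructor() {}

  static getInstance(): Face {
    if (Face.instance === null) {
      Face.instance = new Face();
    }
    return Face.instance;
  }

  // Register a function to run whenever a nod is detected.
  onNod(listener: () => void): void {
    this.nodListeners.push(listener);
  }

  // Register a function to run whenever a head turn is detected.
  onTurn(listener: (direction: TurnDirection) => void): void {
    this.turnListeners.push(listener);
  }

  // The detection logic would call these once it recognizes a gesture.
  emitNod(): void {
    for (const listener of this.nodListeners) listener();
  }

  emitTurn(direction: TurnDirection): void {
    for (const listener of this.turnListeners) listener(direction);
  }
}
```

With this shape, the image module only needs something like `Face.getInstance().onNod(switchStyle)` and never has to know how a nod is detected.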

To keep the image from shaking, I set some thresholds, so the image updates only if the difference between two consecutive frames exceeds a certain value.
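One way to express such a threshold is a small filter that ignores tiny changes. This sketch is an assumption about the idea, not the project's code: it compares each new value with the last value that was actually applied, and `makeThresholdFilter` is a hypothetical name.

```typescript
// Returns a function that passes a new value through only when it differs
// from the last applied value by more than `threshold`; otherwise it keeps
// returning the last applied value.
function makeThresholdFilter(threshold: number): (next: number) => number {
  let last: number | null = null;
  return (next: number): number => {
    const previous = last;
    if (previous === null || Math.abs(next - previous) > threshold) {
      last = next;
      return next;
    }
    return previous;
  };
}
```

For example, with a filter created by `makeThresholdFilter(0.05)`, feeding in 1.0, then 1.02, then 1.1 applies 1.0, keeps 1.0 (the change is too small), and then applies 1.1.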

Update Image

I used Style Transfer to add special effects to the image. Other properties of the image, such as size and rotation, are all implemented with CSS.
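The CSS side can be sketched as a function that turns the current image state into `transform` and `filter` values. The `ImageState` type and `toCss` function are hypothetical names for this example; the CSS functions `scale()`, `rotate()` and `brightness()` are standard.

```typescript
// Hypothetical description of how the image should currently look.
interface ImageState {
  scale: number;       // 1 means original size
  rotationDeg: number; // clockwise rotation in degrees
  brightness: number;  // 1 means unchanged brightness
}

// Builds the CSS property values for a given state.
function toCss(state: ImageState): { transform: string; filter: string } {
  return {
    transform: `scale(${state.scale}) rotate(${state.rotationDeg}deg)`,
    filter: `brightness(${state.brightness})`,
  };
}
```

In the browser, the result could be applied with something like `Object.assign(img.style, toCss(state))`, where `img` is the image element.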

Difficulties

At the early stage of this project, I spent a lot of time trying to make Style Transfer work. The biggest problem I had was that Style Transfer never got the correct path of my model resources.

I finally solved this problem by reading the source code of ml5:

https://github.com/ml5js/ml5-library/blob/development/src/utils/checkpointLoader.js

When we use Style Transfer, we usually pass a relative path to the model as the first parameter. Reading the source code, I learned that at runtime ml5 sends an HTTP request to fetch the model. That is why we must use a live server to host the webpage in class.

My earlier attempts failed because the development server treated the path of each resource file as a router path instead of a request for a static file hosted by the server. To solve the problem, I added a .model extension to all model files, so the server recognizes them as static files.
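To show why the extension matters, here is a hypothetical heuristic a development server might use to tell static files from router paths. This is only my guess at the kind of check involved, not the server's actual implementation, and the example paths are made up.

```typescript
// Hypothetical heuristic: treat a URL path as a static file request only if
// its last segment ends with a file extension such as ".json" or ".model".
function looksLikeStaticFile(urlPath: string): boolean {
  const lastSegment = urlPath.split("/").pop() ?? "";
  return /\.[A-Za-z0-9]+$/.test(lastSegment);
}
```

Under this heuristic, a made-up path like `/models/wave/Variable_1` would be handled as a route, while `/models/wave/Variable_1.model` would be served as a file.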

Future Improvement

This project proves the feasibility of making websites interactive by equipping them with machine learning libraries.

In the future, I want to build fully functional applications using the same approach I used in this project.