Shenshen Lei (sl6899)

For the midterm project, I intended to create a museum viewer that imitates a 3D effect. After researching current online museums, I found that there are mainly two types of interaction for viewing them: the first is clicking the mouse, which is discontinuous, and the second is using the gravity sensor on a mobile phone, which requires the user to turn around constantly.

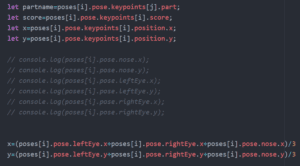

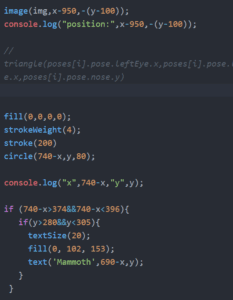

In my project, I want users to have an immersive and continuous experience of viewing an online museum without constantly clicking and dragging the screen. To create this immersive experience, I used PoseNet (which reacts faster than BodyPix) to track the positions of the user's eyes and nose. These three points form a triangle that moves as the user faces different directions, and the coordinates of the background picture follow the position of the triangle. One problem in this process was that the camera captures the user's movement as a mirror image, so I had to flip the x-coordinate by subtracting the detected x from the canvas width. I also calculated the centroid of the face triangle so that I could use one pair of variables rather than six.
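The mirroring and centroid steps can be sketched as small helper functions. This is a minimal sketch, not the project's actual code: it assumes the eye and nose keypoints arrive as `{ x, y }` pixel positions on the canvas, and the function names and the linear `followFactor` mapping from centroid to background offset are hypothetical.

```typescript
// A 2D point in canvas pixel coordinates (assumed format of the detected keypoints).
interface Point {
  x: number;
  y: number;
}

// The webcam image is mirrored, so flip x by subtracting it from the canvas width.
function mirrorX(p: Point, canvasWidth: number): Point {
  return { x: canvasWidth - p.x, y: p.y };
}

// Centroid of the triangle formed by the two eyes and the nose:
// the average of the three vertices, so one (x, y) pair replaces six numbers.
function faceCentroid(leftEye: Point, rightEye: Point, nose: Point): Point {
  return {
    x: (leftEye.x + rightEye.x + nose.x) / 3,
    y: (leftEye.y + rightEye.y + nose.y) / 3,
  };
}

// Hypothetical mapping from the centroid to a background offset:
// the offset is proportional to how far the face is from the canvas center.
function backgroundOffset(c: Point, canvasWidth: number, canvasHeight: number, followFactor: number): Point {
  return {
    x: (c.x - canvasWidth / 2) * followFactor,
    y: (c.y - canvasHeight / 2) * followFactor,
  };
}

// Example with made-up keypoints on a 640x480 canvas.
const W = 640;
const H = 480;
const leftEye = mirrorX({ x: 300, y: 200 }, W); // becomes { x: 340, y: 200 }
const rightEye = mirrorX({ x: 360, y: 200 }, W); // becomes { x: 280, y: 200 }
const nose = mirrorX({ x: 330, y: 260 }, W); // becomes { x: 310, y: 260 }
const centroid = faceCentroid(leftEye, rightEye, nose);
const offset = backgroundOffset(centroid, W, H, 0.5);
console.log(centroid, offset);
```

Whether the picture should move with the face or against it (a parallax effect) is a design choice; in this sketch a negative `followFactor` reverses the direction.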

To show the position of the detected face, I drew a circle with no fill color. I also added a function so that when this gaze circle moves onto an item, the item's name is displayed inside the circle. (I initially believed that the circle would help users follow the viewing process, but it is sometimes confusing.)
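The label behaviour can be sketched as a point-in-rectangle hit test on the center of the gaze circle. This sketch assumes each item is described by a hypothetical bounding box in background-image coordinates and that the background is drawn shifted by some offset; the names `Item` and `itemUnderGaze` are illustrative only.

```typescript
// A 2D point in canvas pixel coordinates.
interface Point {
  x: number;
  y: number;
}

// Hypothetical description of an exhibit: an axis-aligned box, given in
// coordinates of the (unshifted) background image.
interface Item {
  name: string;
  x: number; // left edge
  y: number; // top edge
  w: number; // width
  h: number; // height
}

// Return the name of the first item under the gaze circle's center, or null.
// The background is drawn shifted by bgOffset, so the on-screen gaze point is
// converted back into background-image coordinates before testing.
function itemUnderGaze(gaze: Point, bgOffset: Point, items: Item[]): string | null {
  const bx = gaze.x - bgOffset.x;
  const by = gaze.y - bgOffset.y;
  for (const item of items) {
    const inside = bx >= item.x && bx <= item.x + item.w && by >= item.y && by <= item.y + item.h;
    if (inside) {
      return item.name;
    }
  }
  return null;
}

// Example with made-up items.
const items: Item[] = [
  { name: "Vase", x: 100, y: 100, w: 80, h: 120 },
  { name: "Painting", x: 300, y: 50, w: 200, h: 150 },
];
console.log(itemUnderGaze({ x: 150, y: 160 }, { x: -10, y: 0 }, items)); // Vase
console.log(itemUnderGaze({ x: 50, y: 50 }, { x: 0, y: 0 }, items)); // null
```

If the sketch is drawn with p5.js (an assumption; the write-up does not name the drawing library), the circle could be drawn after `noFill()` with `circle()`, and a non-null result written inside it with `text()`.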

My current project works as shown in the following GIF:

After presenting my project to the class and guests, I received many valuable suggestions, and I will make some changes to improve the project in the future.

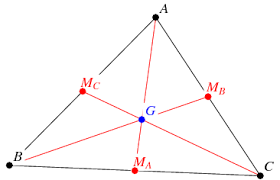

Firstly, following the professor's suggestion, I will use the ratio of the triangle's sides rather than the centroid, because using this ratio as the variable means the user does not have to move as far. It should also make the background picture move more smoothly. As my classmates suggested, it may also make the experience more immersive by changing the viewing angle rather than just shifting the coordinates. Another change is a zoom-in function: when the user moves closer to the camera, the picture will become bigger. This can be done by measuring the distance between the user's two eyes as detected by the camera. Finally, for the instructions, I will add a signal before an item's introduction appears. For example, when the camera detects the user's hand, the introduction will appear after a few seconds. I was also inspired by Brandon's project, which employs voice commands, but the accuracy of voice commands would need to be tested. I will keep thinking about how to improve the user experience, and I am considering continuing to work on the project and showing a more complete version in the final project.
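The three planned changes can be sketched as small helpers. This is a hedged sketch under stated assumptions, not a finished design: the normalized side difference used as a viewing angle, the calibrated `baselineEyeDist`, the clamp limits, and the `DwellTrigger` class are all hypothetical choices, and how the trigger (such as a hand) is actually detected is left open.

```typescript
// A 2D point in canvas pixel coordinates (assumed keypoint format).
interface Point {
  x: number;
  y: number;
}

function dist(a: Point, b: Point): number {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

function clamp(v: number, lo: number, hi: number): number {
  return Math.min(hi, Math.max(lo, v));
}

// Head turn estimated from the two eye-to-nose sides of the face triangle.
// With r = dL / dR, the value (r - 1) / (r + 1) = (dL - dR) / (dL + dR)
// is 0 when the sides are equal (facing the camera), lies in [-1, 1],
// and does not change when the user moves closer or farther away.
function viewingAngleDeg(leftEye: Point, rightEye: Point, nose: Point, maxAngleDeg: number): number {
  const dL = dist(leftEye, nose);
  const dR = dist(rightEye, nose);
  if (dL + dR === 0) return 0;
  return ((dL - dR) / (dL + dR)) * maxAngleDeg;
}

// Zoom factor from the distance between the eyes: the eyes appear farther apart
// as the user approaches the camera. baselineEyeDist is a hypothetical value
// calibrated while the user sits at a normal viewing distance.
function zoomFactor(leftEye: Point, rightEye: Point, baselineEyeDist: number, minZoom: number, maxZoom: number): number {
  return clamp(dist(leftEye, rightEye) / baselineEyeDist, minZoom, maxZoom);
}

// Reports true only after a trigger (e.g. a detected hand) has been present
// continuously for delayMs, so brief false detections do not open an introduction.
class DwellTrigger {
  private since: number | null = null;
  private readonly delayMs: number;

  constructor(delayMs: number) {
    this.delayMs = delayMs;
  }

  update(triggerPresent: boolean, nowMs: number): boolean {
    if (!triggerPresent) {
      this.since = null; // reset as soon as the trigger disappears
      return false;
    }
    if (this.since === null) this.since = nowMs;
    return nowMs - this.since >= this.delayMs;
  }
}

// Example with made-up keypoints.
const eyeL = { x: 280, y: 200 };
const eyeR = { x: 340, y: 200 };
console.log(viewingAngleDeg(eyeL, eyeR, { x: 310, y: 240 }, 30)); // 0 (facing the camera)
console.log(viewingAngleDeg(eyeL, eyeR, { x: 320, y: 240 }, 30)); // positive (head turned)
console.log(zoomFactor({ x: 260, y: 200 }, { x: 350, y: 200 }, 60, 0.5, 2)); // 1.5
```

Using the normalized difference instead of the raw ratio keeps the value bounded and centered on zero, which makes it easy to map onto a viewing angle and to smooth over time.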

Thanks to all the teachers, assistants, and classmates who helped me or gave me advice on my project.