I went through a lot of iterations with my project. At first, I wanted to base something off Avatar: The Last Airbender, but after working with poseNet I realized that wrist detection was spotty and that anything based on it would be difficult no matter what I did.
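One way to see how spotty a body part is: look at the confidence score poseNet attaches to each keypoint and ignore anything below a cutoff. The snippet below is a minimal sketch of that idea, not the project's actual code. It assumes the usual poseNet keypoint shape (a `part` name, a `score` between 0 and 1, and a `position`). The `findPart` helper, the `0.5` threshold, and the sample numbers are all made up for illustration.

```typescript
// Shape of one keypoint as poseNet reports it (assumed for this sketch).
interface Keypoint {
  part: string; // e.g. "leftWrist", "leftShoulder", "leftEar"
  score: number; // confidence between 0 and 1
  position: { x: number; y: number };
}

// Hypothetical cutoff: keypoints scoring below this are treated as not detected.
const MIN_SCORE = 0.5;

// Return the named keypoint only if it was detected with enough confidence.
function findPart(
  keypoints: Keypoint[],
  part: string,
  minScore: number = MIN_SCORE
): Keypoint | null {
  const kp = keypoints.find((k) => k.part === part);
  return kp !== undefined && kp.score >= minScore ? kp : null;
}

// Invented sample frame: a confident shoulder and a shaky wrist.
const sampleFrame: Keypoint[] = [
  { part: "leftShoulder", score: 0.93, position: { x: 210, y: 300 } },
  { part: "leftWrist", score: 0.18, position: { x: 95, y: 420 } },
];

const shoulder = findPart(sampleFrame, "leftShoulder"); // kept
const wrist = findPart(sampleFrame, "leftWrist"); // null: score too low
```

With a check like this, anything driven by the wrist would simply disappear on the frames where the score drops, which is roughly what made a wrist-based idea hard to build on.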

My next step was to try something a bit less ambitious. Inspired by Eszter’s initial flower idea, as well as the #TeamTrees campaign on YouTube, I wanted to try making trees that would sprout from my shoulders. I was able to make the trees, and the shoulder detection was good, but unfortunately I couldn’t find a way to combine the two. The tree code I had been looking at was someone else’s, and it used an HTML canvas. I think with enough effort I would have been able to combine the two, but at that point I didn’t have much time left.

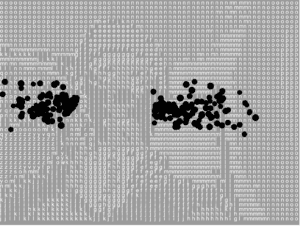

In fact, what I will most likely use as my final product (the picture you see above) was originally meant to be a backup just in case I couldn’t finish the tree idea. I wanted to combine a couple of things that we learned in class so that I wouldn’t have to worry so much about meshing them together as I did with the trees.

At first, I wanted to make letters spill out of my ears, a visualization of me trying to retain information in classes. However, the code we had been using was built around particles, and I wasn’t able to make the shift from particles to characters, since much of the code seemed to rely on the fact that it was working with particles.

With my final idea, I used the letters to make the picture, as we had done in class. Except this time, black particles would be streaming out of my ears. Part of the inspiration was realizing that poseNet was able to detect my ears even when they were covered by big headphones. Although it was serendipitous, I think the visual works very well and fits with how I feel when I listen to music: I’m blocking out all the noise around me.
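The ear particles can be sketched roughly as follows. This is an illustrative outline under my own assumptions rather than the code from class: the `Particle` fields, the `emit` and `step` helpers, the speeds, and the 60-frame lifetime are all hypothetical. The ear position would come from the `leftEar` or `rightEar` keypoint that poseNet reports.

```typescript
interface Point {
  x: number;
  y: number;
}

interface Particle {
  x: number;
  y: number;
  vx: number; // horizontal speed in pixels per frame
  vy: number; // vertical speed in pixels per frame
  life: number; // frames left before the particle disappears
}

// Spawn `count` particles at an ear. `direction` is -1 to stream toward the
// left edge of the image and +1 to stream toward the right edge.
function emit(origin: Point, direction: -1 | 1, count: number): Particle[] {
  const spawned: Particle[] = [];
  for (let i = 0; i < count; i++) {
    spawned.push({
      x: origin.x,
      y: origin.y,
      vx: direction * (1 + Math.random() * 2), // always moves away from the head
      vy: Math.random() * 2 - 1, // slight vertical spread
      life: 60, // hypothetical lifetime in frames
    });
  }
  return spawned;
}

// Advance every particle by one frame and drop the ones that have expired.
function step(particles: Particle[]): Particle[] {
  const alive: Particle[] = [];
  for (const p of particles) {
    const moved: Particle = {
      x: p.x + p.vx,
      y: p.y + p.vy,
      vx: p.vx,
      vy: p.vy,
      life: p.life - 1,
    };
    if (moved.life > 0) {
      alive.push(moved);
    }
  }
  return alive;
}
```

In a p5.js sketch, each frame would call `emit` once per detected ear, run `step` on the combined list, and draw every particle as a small black circle (for example with `fill(0)` and `ellipse`).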