Balance Between Nature and Human

- Project Demo

I want to explore the balance between human society and nature. This concept is inspired by Zhengbo, an artist committed to human and multispecies equality, who recently exhibited his project Goldenrod in our Gallery. I want to use a machine learning model to build a project that raises people’s awareness of protecting the environment.

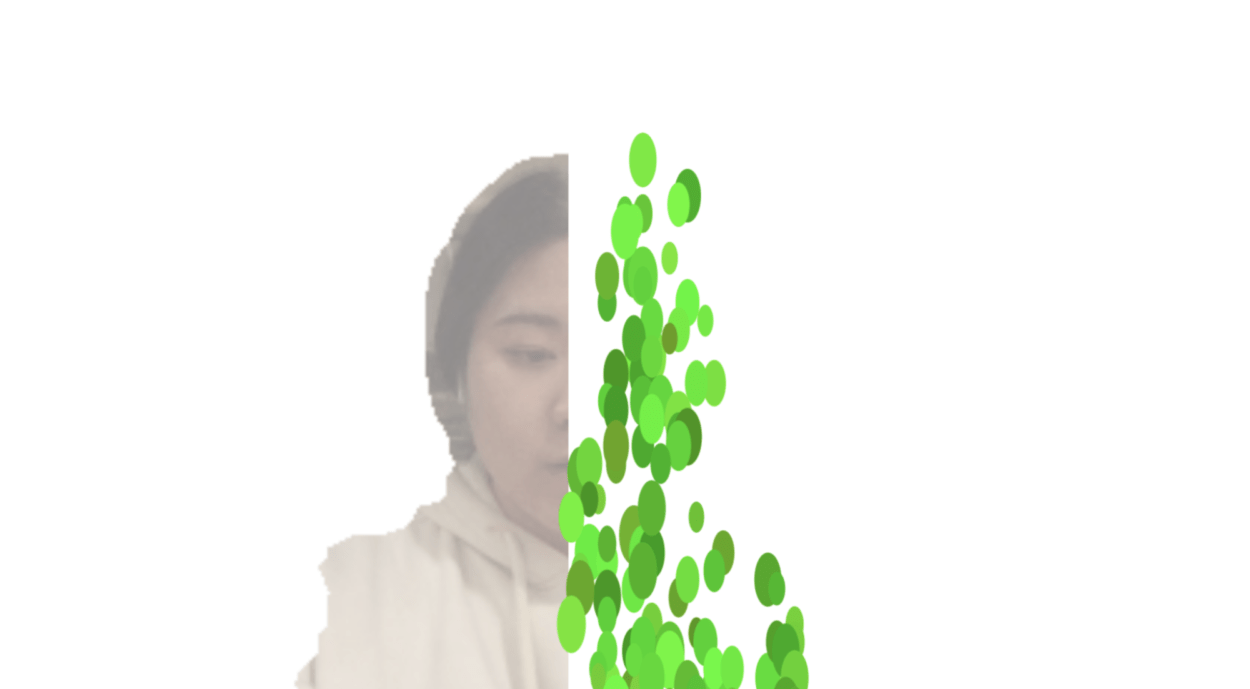

For the interface, I was inspired by the project Transcending Boundaries by Teamlab. By projecting flowers and other natural elements onto the human body, the work is not only beautiful to look at but also interactive.

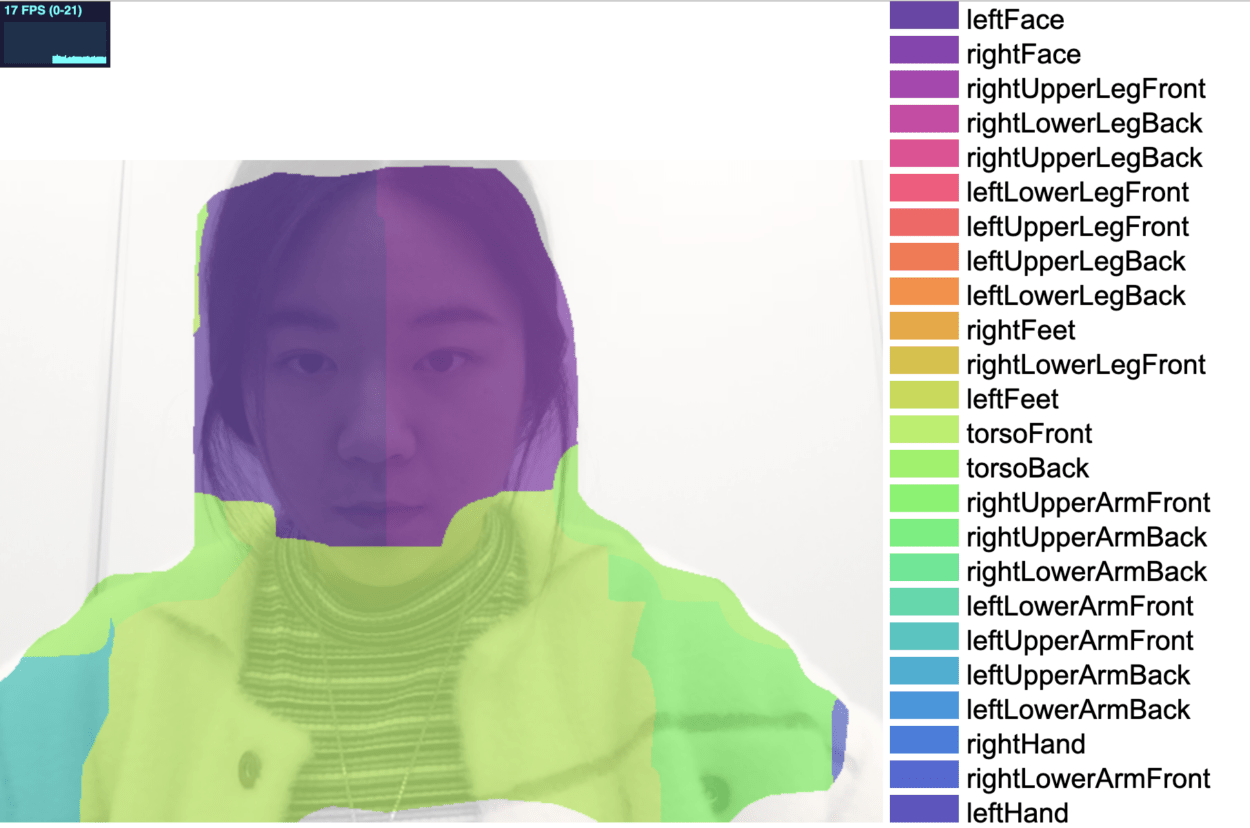

- Machine Learning Model

The machine learning model I used is bodyPix. Because bodyPix performs real-time person segmentation in the browser, it allows me to separate the body into different segments and apply my idea of revealing the importance of keeping a balance between humans and nature. The body segments I used are leftFace (id: 0), rightFace (id: 1), and torsoFront (id: 12).

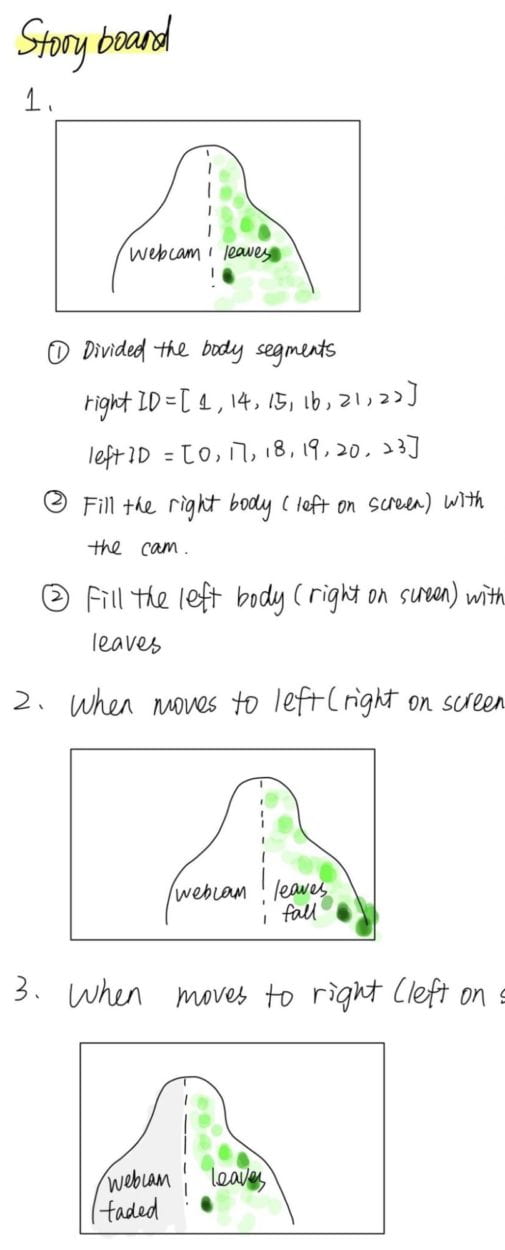

- Storyboard

Before starting this project, I drew a storyboard. To represent the human side, I first decided to use the real-time camera image from the webcam. For the nature side, I decided to use different ellipses to imitate leaves.

The interaction embedded in this project is simple. The interface changes according to the position of the user, and different effects are triggered when the user reaches a certain position.

- Coding

1. Hiding the background

To track the different segments of the human body, I needed to call

    bodypix.segmentWithParts(gotResults, options);

However, I was not able to apply maskBackground with this method. Instead, I added an if statement so that only the needed segments are displayed; all other pixels are blanked out, leaving a white background.

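    // Blank out every pixel that is not leftFace (0), rightFace (1), or torsoFront (12).
    if (data[index] != 1 && data[index] != 0 && data[index] != 12) {
      img.pixels[colorIndex + 0] = 255; // red
      img.pixels[colorIndex + 1] = 255; // green
      img.pixels[colorIndex + 2] = 255; // blue
      img.pixels[colorIndex + 3] = 0;   // alpha
    }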
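A minimal, self-contained sketch of this filtering step follows. It is not the project's actual code: `maskToParts`, `KEEP_PARTS`, and the flat `partIds` array (one part id per pixel, with -1 assumed for background) are hypothetical names chosen for illustration. The four-values-per-pixel RGBA layout is the one p5.js uses for its `pixels` arrays.

```javascript
// Part ids taken from the text: leftFace = 0, rightFace = 1, torsoFront = 12.
const KEEP_PARTS = new Set([0, 1, 12]);

// Hypothetical helper: partIds holds one part id per pixel (assumed -1 for
// background); pixels is a flat RGBA array with four entries per pixel.
function maskToParts(partIds, pixels, keep = KEEP_PARTS) {
  for (let index = 0; index < partIds.length; index++) {
    if (!keep.has(partIds[index])) {
      const colorIndex = index * 4;
      pixels[colorIndex + 0] = 255; // red
      pixels[colorIndex + 1] = 255; // green
      pixels[colorIndex + 2] = 255; // blue
      pixels[colorIndex + 3] = 0;   // alpha 0, same values as the original check
    }
  }
  return pixels;
}

// Three pixels: left face (kept), background (blanked), torso front (kept).
const demoPixels = [10, 20, 30, 255, 40, 50, 60, 255, 70, 80, 90, 255];
maskToParts([0, -1, 12], demoPixels);
```

Using a `Set` keeps the list of visible parts in one place, so adding another segment would only mean adding its id.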

2. Speed

As more and more elements were added to the project, movement detection slowed down. After discussing this with Moon, I made the following changes to my code.

First, I had been calling the machine learning model in the draw function, so the model was run again on every frame while the interface was being redrawn. To call the model only once for each result, I moved the call into the gotResults function, and the project sped up a lot.
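The callback-driven pattern can be sketched as follows. This is an illustration under stated assumptions, not the project's code: `createSegmentationLoop` and `fakeModel` are hypothetical, and the error-first `(error, result)` callback shape is assumed. The fake model stands in for bodyPix so the example runs without a webcam.

```javascript
// Hypothetical wrapper: requests the next segmentation only after the
// previous result has arrived, instead of once per draw() frame.
function createSegmentationLoop(model, options, onResult) {
  let running = false;

  function gotResults(error, result) {
    if (error) {
      running = false; // stop on error rather than retrying forever
      return;
    }
    onResult(result);
    if (running) {
      model.segmentWithParts(gotResults, options);
    }
  }

  return {
    start() {
      if (!running) {
        running = true;
        model.segmentWithParts(gotResults, options);
      }
    },
    stop() {
      running = false;
    },
  };
}

// Stand-in for the real model (assumption): answers immediately.
let calls = 0;
const fakeModel = {
  segmentWithParts(callback) {
    calls += 1;
    callback(null, { frame: calls });
  },
};

const received = [];
const loop = createSegmentationLoop(fakeModel, {}, (result) => {
  received.push(result.frame);
  if (received.length === 3) loop.stop();
});
loop.start();
```

With this structure, draw only has to render the most recent result, and at most one segmentation request is in flight at a time.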

Second, I reduced the resolution of the image by dividing its width and height by a scaling factor.

    video.size(width / scaling, height / scaling);
    img = createImage(width / scaling, height / scaling);
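To see why this helps, note that the number of pixels falls with the square of the scaling factor. The sketch below is only an illustration: `scaledSize` is a hypothetical helper, and the 640 x 480 canvas and the factor of 4 are assumed values, not taken from the project.

```javascript
// Hypothetical helper: size of the downscaled buffer and how many pixels
// each pass over the image has to touch.
function scaledSize(width, height, scaling) {
  const w = Math.floor(width / scaling);
  const h = Math.floor(height / scaling);
  return { w, h, pixelCount: w * h };
}

const full = scaledSize(640, 480, 1);    // assumed canvas size
const reduced = scaledSize(640, 480, 4); // assumed scaling factor
const ratio = full.pixelCount / reduced.pixelCount;
```

Here `reduced` is 160 x 120, so every per-pixel loop does one sixteenth of the work it would do at full resolution.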

After these changes, the project runs much more smoothly, which improves the user experience.

3. Separating the torso

Since the model does not distinguish the left and right sides of the torso, I needed to separate it myself by tracking the minimum x position of the left face and applying it to the torso.

I first created an empty array, posXs = []. After running the machine learning model, I pushed every x position of the left face into this array. I then found the minimum value in the array and applied it to the torso. To limit the array's length, I also wrote an if statement that splices out the first element once the length reaches the limit of 80.

    if (posXs.length < 80) {
      posXs.push(x);
    } else {
      posXs.splice(0, 1);
      posXs.push(x);
    }
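The bounded array and the minimum lookup can be sketched together as below. `pushBounded` and `minX` are hypothetical helper names; the limit of 80 comes from the text, and the input values are made up for the demonstration.

```javascript
const LIMIT = 80; // buffer length from the text

// Keep only the most recent `limit` x positions.
function pushBounded(buffer, x, limit = LIMIT) {
  if (buffer.length >= limit) {
    buffer.splice(0, 1); // drop the oldest value
  }
  buffer.push(x);
  return buffer;
}

// Smallest x currently in the buffer, or null when it is empty.
function minX(buffer) {
  return buffer.length > 0 ? Math.min(...buffer) : null;
}

const posXs = [];
for (let x = 100; x > 0; x--) {
  pushBounded(posXs, x); // feeds 100, 99, ..., 1
}
const boundary = minX(posXs);
```

Because old values fall out of the array, the minimum follows the user as they move instead of staying stuck at the leftmost position ever seen.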

4. Pixelization

After realizing the concept of this project, I reconsidered the interface, since using the raw webcam image seemed a little inconsistent and distracting.

Inspired by my peers and Moon, I decided to capture colors from the webcam and use them for some pixel manipulation. I created another class for the human side and used the colors from the video.

    let face = new Face(mappedX, mappedY);
    r = video.pixels[colorIndex + 0];
    g = video.pixels[colorIndex + 1];
    b = video.pixels[colorIndex + 2];
    face.changeColor(r, g, b);
    faces.push(face);
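The sampling idea can be sketched without p5.js as follows. This is a sketch under assumptions rather than the project's implementation: `sampleCells` is a hypothetical helper, the `step` and `scaling` parameters are illustrative, and plain objects stand in for the Face class, whose internals are not shown in this post.

```javascript
// Hypothetical helper: walk a downscaled RGBA buffer in steps of `step`
// pixels and return one colored cell per sample, with positions mapped
// back to canvas coordinates.
function sampleCells(pixels, w, h, step, scaling) {
  const cells = [];
  for (let y = 0; y < h; y += step) {
    for (let x = 0; x < w; x += step) {
      const colorIndex = (x + y * w) * 4; // four entries (RGBA) per pixel
      cells.push({
        x: x * scaling,
        y: y * scaling,
        r: pixels[colorIndex + 0],
        g: pixels[colorIndex + 1],
        b: pixels[colorIndex + 2],
      });
    }
  }
  return cells;
}

// 2 x 2 test image: red, green on the first row; blue, white on the second.
const tiny = [
  255, 0, 0, 255,   0, 255, 0, 255,
  0, 0, 255, 255,   255, 255, 255, 255,
];
const cells = sampleCells(tiny, 2, 2, 1, 10);
```

A larger `step` gives fewer, coarser cells, which is one way to control how pixelated the human side looks.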

- Reflection

Overall, I think the final deliverable meets my expectations, and the interaction is intuitive and easy to understand. For future improvement, I will probably add more elements to imitate nature and, at the same time, make the human side more realistic. More movements could also be added to the project to trigger more effects.