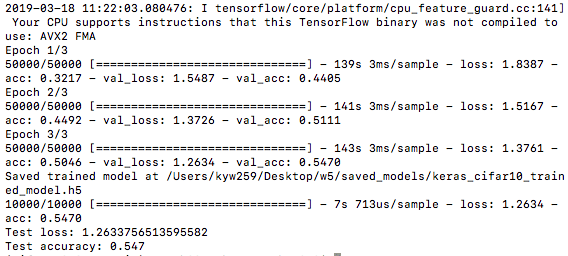

At first, I started with 100 epochs, but training was too slow, so I stopped it and restarted with 3 epochs.

Then I tried different batch sizes, from 2048 down to 32. The runtime did not change much and was around 6-7 minutes in each case. The test loss and accuracy for each batch size are shown below.

-

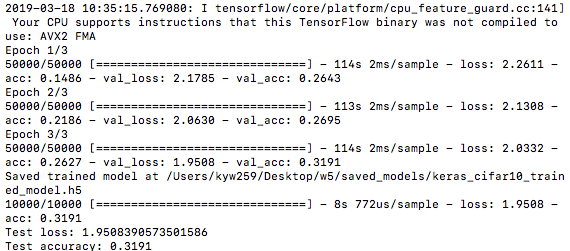

batch_size = 2048

-

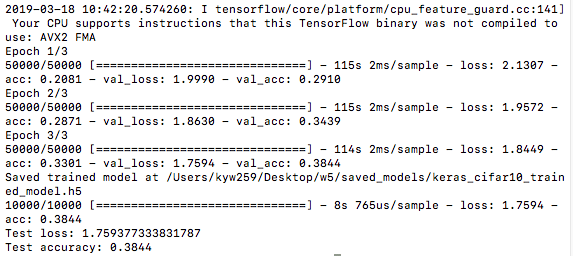

batch_size = 1024

-

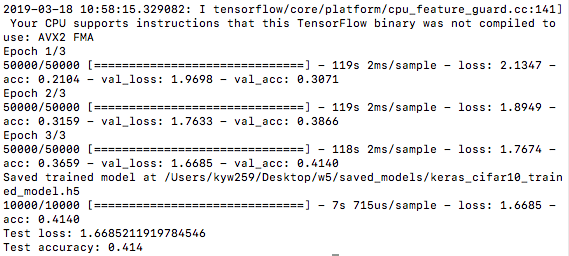

batch_size = 512

-

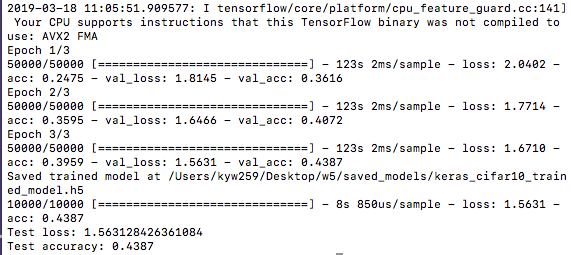

batch_size = 256

-

batch_size = 32
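A sweep like the one above can be scripted so that every batch size is trained and timed the same way. The following is only a minimal sketch: `sweep_batch_sizes` and `dummy_train_and_evaluate` are hypothetical names, and the dummy function is a placeholder that returns fixed numbers and would need to be replaced with the real training and evaluation code.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


def sweep_batch_sizes(
    train_and_evaluate: Callable[[int, int], Tuple[float, float]],
    batch_sizes: Iterable[int],
    epochs: int,
) -> Dict[int, Dict[str, float]]:
    """Run one training job per batch size; record test loss, accuracy and wall time."""
    results: Dict[int, Dict[str, float]] = {}
    for batch_size in batch_sizes:
        start = time.perf_counter()
        test_loss, test_accuracy = train_and_evaluate(batch_size, epochs)
        elapsed_seconds = time.perf_counter() - start
        results[batch_size] = {
            "test_loss": test_loss,
            "test_accuracy": test_accuracy,
            "seconds": elapsed_seconds,
        }
    return results


def dummy_train_and_evaluate(batch_size: int, epochs: int) -> Tuple[float, float]:
    # Placeholder (assumption): returns fixed values so the harness can run.
    # Replace with code that trains the model and returns (test_loss, test_accuracy).
    return 0.0, 0.0


if __name__ == "__main__":
    results = sweep_batch_sizes(
        dummy_train_and_evaluate, [2048, 1024, 512, 256, 32], epochs=3
    )
    for batch_size, metrics in results.items():
        print(batch_size, metrics)
```

Passing the training routine in as a function keeps the timing and bookkeeping identical across runs, so the only thing that changes between runs is the batch size.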

So, in these runs, the smaller the batch size, the higher the test accuracy and the lower the test loss.
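One plausible contributing factor, not verified here, is that with the number of epochs fixed, a smaller batch size gives the model more weight updates, because mini-batch training performs one update per batch. The sketch below counts those updates. The training-set size of 60,000 is an assumed placeholder, not a number from these experiments, and the last partial batch is assumed to be kept.

```python
import math

# Assumption: placeholder training-set size, not taken from this write-up.
NUM_TRAIN_EXAMPLES = 60_000
EPOCHS = 3


def updates_per_epoch(num_examples: int, batch_size: int) -> int:
    """One weight update per mini-batch; the last, smaller batch is counted too."""
    return math.ceil(num_examples / batch_size)


for batch_size in [2048, 1024, 512, 256, 32]:
    steps = updates_per_epoch(NUM_TRAIN_EXAMPLES, batch_size)
    print(
        f"batch_size={batch_size:>4}: {steps:>4} updates per epoch, "
        f"{steps * EPOCHS:>5} updates in {EPOCHS} epochs"
    )
```

Under these assumptions, batch size 2048 gives 30 updates per epoch while batch size 32 gives 1875, so after 3 epochs the small-batch model has been updated far more often.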

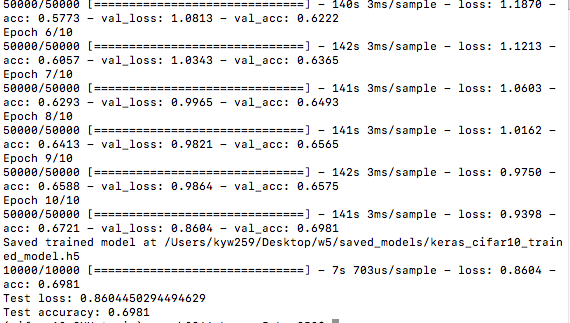

Then I kept the batch size fixed at 32 and tried different numbers of epochs. The test accuracy increased as the number of epochs increased.

As a result, I think the training parameters have a large effect on the result. To get higher accuracy, we may need to decrease the batch size and increase the number of epochs. However, since more epochs also means a longer runtime, the number of epochs needs to be capped to keep the runtime within a reasonable range. I am not yet clear about the principle behind these effects, which I would like to explore more in the future.
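The trade-off between epochs and runtime can be estimated with simple arithmetic. The sketch below assumes that the 6-7 minute runs were the 3-epoch runs and that runtime grows roughly linearly with the number of epochs. The function names and the 30-minute budget are hypothetical and only for illustration.

```python
def minutes_per_epoch(measured_minutes: float, measured_epochs: int) -> float:
    """Average time for one epoch, from a run that was actually measured."""
    return measured_minutes / measured_epochs


def estimated_minutes(epochs: int, per_epoch: float) -> float:
    """Projected runtime, assuming runtime scales linearly with epochs."""
    return epochs * per_epoch


def max_epochs_within(budget_minutes: float, per_epoch: float) -> int:
    """Largest whole number of epochs that fits inside the time budget."""
    return int(budget_minutes // per_epoch)


fast = minutes_per_epoch(6, 3)  # lower end of the observed 6-7 minutes
slow = minutes_per_epoch(7, 3)  # upper end of the observed 6-7 minutes

print(f"100 epochs: about {estimated_minutes(100, fast):.0f} "
      f"to {estimated_minutes(100, slow):.0f} minutes")

# Hypothetical budget of 30 minutes, using the slower estimate to be safe.
print("Epochs that fit in 30 minutes:", max_epochs_within(30, slow))
```

Under these assumptions, 100 epochs would take roughly 200 to 233 minutes, which matches the impression that the first attempt was too slow, and about 12 epochs would fit in a 30-minute budget.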