For this week’s assignment, I chose to take a closer look at the Image Classifier, which uses neural networks to “recognize the content of images” and classify them. It also works in real time, and the example I tried uses webcam input.

You can tell a bit about how it was developed from what you see on the user side. It was fairly good at assessing images that were visually straightforward: when I held up a water bottle against a mostly plain background, it identified it quickly. But when I turned the bottle on its side or upside down, the classifier had a much harder time identifying it.

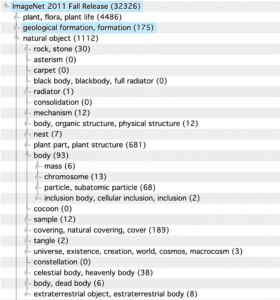

I looked into how this model was trained and learned that it was trained on the ImageNet (image-net.org) database. ImageNet has around 14 million images, organized into “synsets” (sets of synonyms for a single concept, taken from the WordNet hierarchy), and the images are labeled and verified by humans.

ImageNet database

I started to think more about how that training translates into the way the classifier actually works, and what the computer is really ‘seeing’. If it only recognizes one angle of a given object, does that mean it learns patterns in small groups of pixels? And if so, can it still recognize those groups when they are rotated? I’m not sure these questions have obvious answers, but I hope to understand them better over the next few months.
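To get a feel for the “groups of pixels” question, here is a toy sketch. It assumes the classifier is a convolutional network (as most ImageNet classifiers are), where early layers slide small filters over patches of pixels. The 3x3 filter, the tiny 6x6 “image”, and the helper names `rotate90` and `max_response` are all made up for illustration; nothing here comes from the actual model.

```python
# Toy illustration only: a hand-written 3x3 filter, NOT a filter from the real classifier.

def rotate90(img):
    """Rotate a 2-D list of values 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def max_response(img, kernel):
    """Slide a 3x3 kernel over the image (no padding) and return the strongest response."""
    h, w = len(img), len(img[0])
    best = float("-inf")
    for i in range(h - 2):
        for j in range(w - 2):
            s = sum(kernel[a][b] * img[i + a][j + b] for a in range(3) for b in range(3))
            best = max(best, s)
    return best

# A tiny 6x6 "image": dark (0) on the left, bright (1) on the right, i.e. a vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# A filter that responds to dark-to-bright transitions going left to right.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

print(max_response(image, vertical_edge))                      # upright image: 3
print(max_response(rotate90(image), vertical_edge))            # rotated image, same filter: 0
print(max_response(rotate90(image), rotate90(vertical_edge)))  # rotated image, rotated filter: 3
```

The same filter that fires strongly on the upright edge gives no response once the image is turned 90 degrees; only a separately rotated copy of the filter picks it up again. That is one plausible reason a model that mostly saw upright water bottles might struggle with sideways ones, although a real network has many layers and many learned filters, so this is only an intuition, not an explanation of the actual classifier.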