Training Cifer-10

The first thing I did when I started working on the assignment this week is to get my tensorflow-gpu working on my desktop. The following training results are done by a GTX 1080 graphics card.

100 Epochs with different batch sizes

- batch_size = 2048

4s 83us/stepTest loss: 1.0191390594482421

Test accuracy: 0.6451

4s is a promising speed comparing with CPU training. I noticed that the parameters are not pushing the GPU to its full capacity so that I decided to raise the batch size (which turns out to be irrelevant).

- batch_size = 4096

4s 79us/stepTest loss: 1.1939355976104737

Test accuracy: 0.5823

The step time does not change much, and neither does the epoch time. It is interesting becuase as each step it is passing twice the data and the total amount of time remains the same. However, a considerable drop-down in the accuracy is noticeable. That is because bigger batch size will cause overfitting the model to the training data with loss in generality. Therefore, I shrink the batch size to 64 next.

- batch_size = 64

9s 172us/stepTest loss: 0.5949330416202545

Test accuracy: 0.8038

Now it seems that the step time is fixed no matter how large the batch size is. Smaller batch size results in longer steps and thus longer epoch. The most exciting point is the accuracy increse. Smaller batch size also makes the training result more robust to general test data.

The reason behind is the more delicate process of regression. The use of batch size is to feed many data at a time for one regression process to utilize parallel computation power like GPU. Using smaller batch size examines data closer.

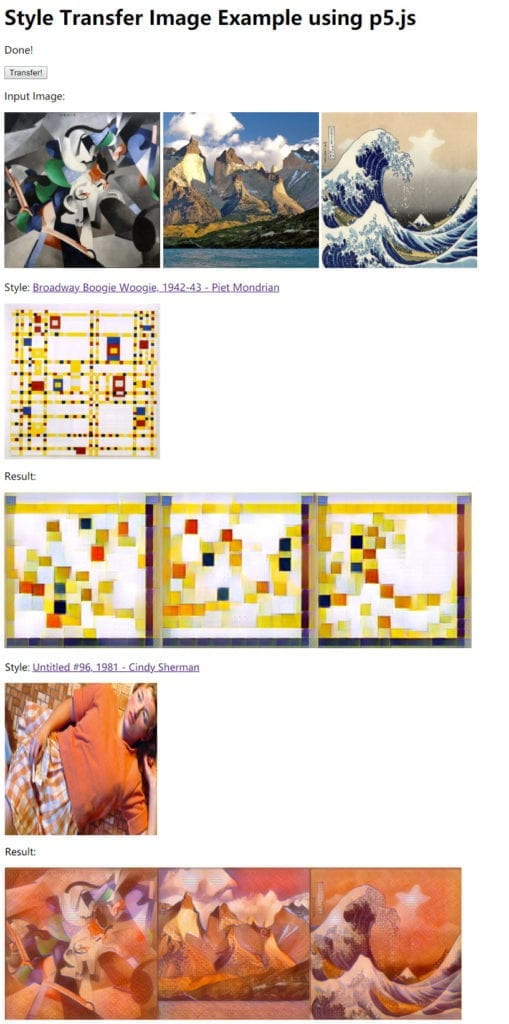

Training Style Transfer

Besides training Cifer-10, I also tried style transfer. I follow the instructions provided by ml5.js and trained the model with 2 different style. The result leaves a lot to be desired most probably due to the inadequate number of epochs. The training duration is several hours as I am not counting and the program doesn’t log it.

Here is a link where you can try it.

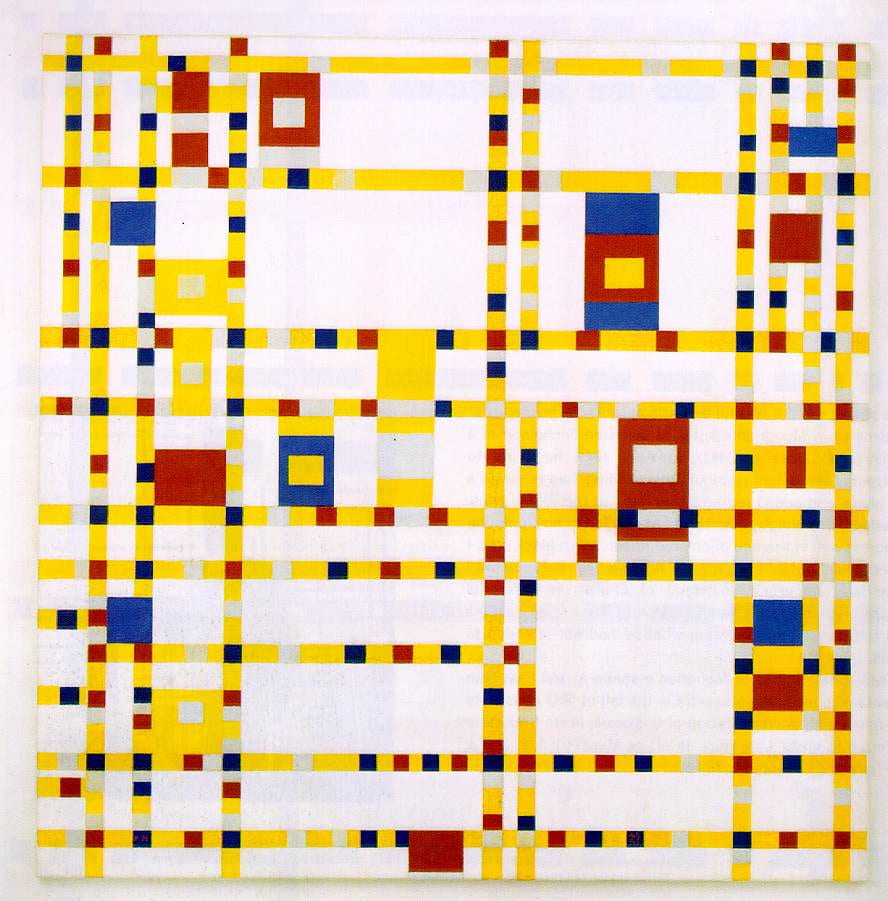

Styles

These two are probably not the best choices for style transfer, one abstract painting and one portrait (sort of) photography.

Configurations

--checkpoint-dir checkpoints/ \--model-dir models/ \--test images/violetaparra.jpg \--test-dir tests/ \--content-weight 1.5e1 \--checkpoint-iterations 100 \--batch-size 16The batch size was tuned down from the out of the box number of 20, because my GPU kept crushing, short of memory perhaps. The checkpoint iteration is also tuned down to keep me informed that it is still working. (There is a long story of my GPU refusing to work for god knows what reason.)

Result