Idea

For this week, I wanted to use deep dream to create that trippy, zooming-in kind of video. I couldn’t figure out a proper way to do what I wanted with the code we used in class, so I found an implementation of deep dream in TensorFlow online and followed this guide to try deep dream with different parameters.

I started off with this picture of a random scenery:

Here’s a sample output with the standard parameters for starry night that I pulled from the tutorial:

Method

The tutorial had a very handy list of the effect each layer has on the output. The process is not too complex: it does the same thing we did in class, then zooms in very slightly on the output and feeds it back into the algorithm. After creating many of these images, we can chain them together to create the trippy-looking video.

layer 1: wavy

layer 2: lines

layer 3: boxes

layer 4: circles?

layer 6: dogs, bears, cute animals.

layer 7: faces, buildings

layer 8: fish begin to appear, frogs/reptilian eyes.

layer 10: monkeys, lizards, snakes, ducks

I used layer 6 to get the dogs, and ran it for about 120 images. Each generated image would be fed back into deep dream to generate the next one.
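To make the feedback loop a bit more concrete, here is a rough sketch of the idea. It is not the tutorial's code: `dream_step` is a hypothetical stand-in for one deep dream pass on a chosen layer, and the 1.05 zoom factor and the nearest-neighbor resize are assumptions picked only for illustration.

```python
import numpy as np

def zoom_center(frame, scale=1.05):
    """Crop the central 1/scale region and stretch it back to full size.

    Uses nearest-neighbor sampling so the sketch needs only NumPy.
    """
    h, w = frame.shape[:2]
    crop_h, crop_w = int(round(h / scale)), int(round(w / scale))
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    # Map every output row/column to a source row/column inside the crop.
    rows = top + (np.arange(h) * crop_h // h)
    cols = left + (np.arange(w) * crop_w // w)
    return frame[rows][:, cols]

def make_frames(start, dream_step, n_frames, scale=1.05):
    """Dream, zoom in slightly, feed the result back in, and repeat."""
    frames = []
    current = start
    for _ in range(n_frames):
        dreamed = dream_step(current)      # hypothetical deep dream pass
        frames.append(dreamed)             # this becomes one video frame
        current = zoom_center(dreamed, scale)  # input for the next pass
    return frames
```

Calling `make_frames(image, dream_step, 120)` with a real deep dream function would give roughly the kind of image sequence described above, which can then be chained into a video.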

Output

Here’s the output after 5 recursions on layer 6:

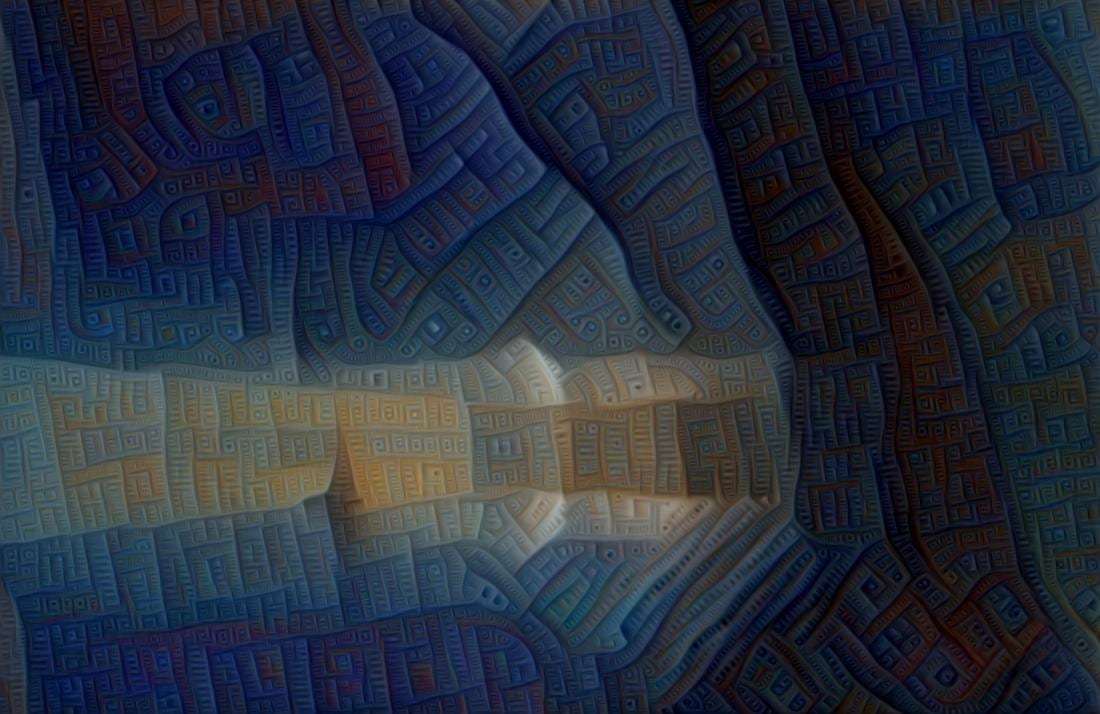

Here’s the output after 5 recursions on layer 3 (boxes):

Here’s the video I got by chaining all the images together on layer 6 (123 images). It’s playing at original speed.

Here’s the video with layer 3 (50 images). Somewhere in the middle it switches to layer 4 to get the circles. It plays at 0.5x the original speed.

Ideally, I would like to run it for longer and generate more images to create a video of around 1 minute, but I fear it would take far too long.
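For a rough sense of scale, here is a small back-of-the-envelope sketch. The frame rate and the time per image are assumptions, not numbers I measured, so plug in real values before trusting the result.

```python
import math

def frames_needed(duration_s, fps, playback_speed=1.0):
    """Images required for a video of duration_s seconds.

    playback_speed=0.5 means each image stays on screen twice as long,
    so only half as many images are needed.
    """
    return math.ceil(duration_s * fps * playback_speed)

def render_time_s(n_frames, seconds_per_frame):
    """Total generation time if every image takes seconds_per_frame."""
    return n_frames * seconds_per_frame

# Assumed values for illustration only.
one_minute = frames_needed(60, fps=30)
slowed_down = frames_needed(60, fps=30, playback_speed=0.5)
```

At an assumed 30 frames per second, one minute needs 1800 images at original speed, or 900 when played at 0.5x, which is far more than the 123 images used for the layer 6 video.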