(This post is 3 days late, my bad! I forgot to publish my saved draft.)

Progress on Midterm Project:

Data Collection

I managed to scrape Pokémon data from pokemondb.net. The data I needed most was the compilation of Pokédex entries from each season. I collected the scraped data into a CSV file, which I processed later.
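
The post doesn’t show how the CSV was processed. As an illustration only, assuming one row per Pokédex entry with hypothetical `name` and `entry` columns, here is a minimal sketch that merges each Pokémon’s entries into a single description document. The rows are made-up placeholders, not scraped data.

```python
import csv
import io
from collections import defaultdict

# Hypothetical CSV layout (one row per Pokédex entry); column names and rows are placeholders.
SAMPLE_CSV = """name,entry
Charmander,Placeholder entry one.
Charmander,Placeholder entry two.
Squirtle,Placeholder entry three.
"""

def build_documents(csv_file):
    """Concatenate every entry for a Pokémon into one description string."""
    entries = defaultdict(list)
    for row in csv.DictReader(csv_file):
        entries[row["name"]].append(row["entry"].strip())
    return {name: " ".join(texts) for name, texts in entries.items()}

docs = build_documents(io.StringIO(SAMPLE_CSV))
print(docs["Charmander"])
```

With a real file, `io.StringIO(SAMPLE_CSV)` would be replaced by `open(path, newline="", encoding="utf-8")`.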

Choosing and Training the model

I planned to use a Doc2Vec model to get a vector representation of each Pokémon’s description. The idea is to have the user type a paragraph or some other text, convert it to a vector with the same model, and compare the cosine similarity between that vector and each Pokémon’s vector to find the Pokémon closest to what they typed. To do this, I used a Python library called gensim, which includes a Doc2Vec implementation that seemed like exactly what I needed.
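
To make the comparison step concrete, here is a dependency-free sketch of ranking Pokémon by cosine similarity, cos(u, v) = (u · v) / (|u| |v|). The names and tiny 2-D vectors are made up; real Doc2Vec vectors have many more dimensions, and gensim’s `KeyedVectors.most_similar` performs this kind of ranking internally.

```python
import math

def cosine_similarity(u, v):
    """cos(u, v) = (u . v) / (|u| |v|); returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def closest(query_vec, doc_vecs, k=10):
    """Return the k (name, similarity) pairs most similar to query_vec, best first."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Made-up 2-D vectors standing in for Doc2Vec document vectors.
doc_vecs = {"pokemon_a": [1.0, 0.0], "pokemon_b": [0.6, 0.8], "pokemon_c": [-1.0, 0.0]}
result = closest([1.0, 0.1], doc_vecs, k=2)
print(result)
```

Cosine similarity ignores vector length and only compares direction, which is why it is a common choice for comparing document embeddings.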

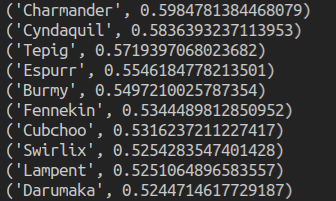

I trained the model on the descriptions and figured out how to use it to find the documents closest to an input document, so now I get the 10 Pokémon closest to whatever text I type. Here’s an example:

With the input document as “super hot fire pokemon. burns everything to ash!”, here’s the output I get:

Next Steps:

Moving forward, I want to build a webcam interface where, after typing some text, users can see their Pokémon buddy (or buddies) on screen with them and take a picture. For this, I’ll use ml5’s PoseNet to get the keypoints of different body parts and place Pokémon around the user. Since data needs to be sent from a server to the interface (frontend), I’ll have to build a web app, most likely in Flask (a Python framework).
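
One possible shape for the server side, assuming Flask: a single JSON endpoint the webcam frontend could call after the user types. The `/match` route, the `find_buddies` helper, and the request/response fields are hypothetical; `find_buddies` is a stub where the Doc2Vec lookup would go.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)

def find_buddies(text, k=10):
    """Hypothetical stub: replace with the Doc2Vec top-k lookup for `text`."""
    placeholder = [{"name": "placeholder_pokemon", "score": 1.0}]
    return placeholder[:k]

@app.route("/match", methods=["POST"])
def match():
    # Accept a JSON body like {"text": "..."}; anything else is treated as empty.
    payload = request.get_json(silent=True)
    if not isinstance(payload, dict):
        payload = {}
    text = str(payload.get("text", "")).strip()
    if not text:
        return jsonify({"error": "missing 'text'"}), 400
    return jsonify({"matches": find_buddies(text)})
```

Saved as `app.py` (a hypothetical filename), this can be started with `flask --app app run`; the browser page running ml5 could then `fetch` the route with a JSON body and position the returned Pokémon using PoseNet’s keypoints.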