Last week I presented SketchRNN(). This model was trained on Quick Draw, the world’s largest doodling dataset, which contains over 15 million drawings collected while users played the game Quick, Draw! Given a model for a chosen category, it can help users complete their drawings. The model is integrated into ml5.js and works together with p5.js. This example shows how to build an interactive project using ml5.js and p5.js: p5.js provides a canvas for the user to sketch on, and SketchRNN() finishes the rest of the drawing.
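
SketchRNN does not output pixels; it emits a sequence of pen movements, and the p5.js side turns those movements into lines on the canvas. In ml5.js each generated step arrives as an object with offsets `dx`, `dy` and a `pen` state (`"down"`, `"up"` or `"end"`). The helper below is a minimal, hypothetical sketch of that replay logic (`strokesToSegments` is my own name, not an ml5.js function). It assumes, as in the ml5.js SketchRNN example, that a stroke's `pen` value decides whether the *next* movement is drawn.

```javascript
// Hypothetical helper, not part of ml5.js: replay SketchRNN-style strokes
// ({ dx, dy, pen }) as absolute line segments [x1, y1, x2, y2].
// Assumption: as in the ml5.js example, the pen state carried over from the
// previous stroke decides whether the current movement is drawn.
function strokesToSegments(strokes, startX = 0, startY = 0) {
  const segments = [];
  let x = startX;
  let y = startY;
  let pen = "down"; // the first movement is drawn
  for (const s of strokes) {
    if (pen === "end") break; // the model signalled that the drawing is finished
    const nx = x + s.dx;
    const ny = y + s.dy;
    if (pen === "down") segments.push([x, y, nx, ny]); // draw only while the pen touches paper
    x = nx;
    y = ny;
    pen = s.pen; // this stroke's pen state applies to the next movement
  }
  return segments;
}

const demo = [
  { dx: 10, dy: 0, pen: "down" },
  { dx: 0, dy: 10, pen: "up" },
  { dx: 5, dy: 5, pen: "down" },
  { dx: 1, dy: 1, pen: "end" },
  { dx: 9, dy: 9, pen: "down" }, // ignored: it comes after "end"
];
// → three segments: [100,100,110,100], [110,100,110,110], [115,115,116,116]
console.log(strokesToSegments(demo, 100, 100));
```

In a p5.js `draw()` loop, each returned segment could be passed straight to `line(...segment)`.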

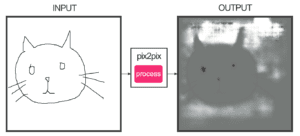

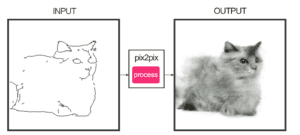

While going through the different models that ml5.js offers, I found another interesting one called pix2pix(). It is an image-to-image translation model. According to its website, it is able to “generate the corresponding output image from any input images you give it”. In other words, if you sketch the outline of a cat, the model will generate a realistic cat image that follows the outline you drew. To the user, it feels as if the model is automatically coloring in the sketch. The website also shows examples of using this model to generate building facades, shoes, and so on. Although those examples look very good, the outcomes were far from perfect when I tried drawing things myself. Most of my drawings were simply filled with yellow; the model did not seem to recognize what I drew. Besides my poor drawing skills, I suspect this is because the model was trained on sketches with fairly clean outlines. The results might be different if it were trained on the Quick Draw dataset, and I want to explore this in the future. The example on the website is also built on p5.js, which made me wonder whether I could combine pix2pix with SketchRNN.

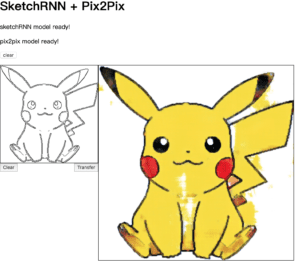

The idea is that SketchRNN helps the user finish the drawing and pix2pix helps the user color it. This video shows what I got. When the user clicks on the sketch board, SketchRNN automatically generates a figure. The figure is rough, so the user can refine it with the drawing functions provided by p5.js. Once the user is satisfied with the work, they can click the transfer button to let pix2pix() color it.
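
The interaction just described (click to complete, refine by hand, then transfer) can be sketched as a small controller. This is a minimal sketch under stated assumptions, not the project's actual code: the method names mirror the ml5.js v0.x callback style (`reset()`, `generate(callback)` on SketchRNN and `transfer(element, callback)` on pix2pix), the two models are passed in so the flow can be exercised without a browser, and `DoodlePipeline` and `maxStrokes` are hypothetical names.

```javascript
// Minimal sketch of the "SketchRNN completes, user refines, pix2pix colors" flow.
// Assumption: `rnn` behaves like an ml5.js SketchRNN object (reset(), generate(cb) with
// cb(err, { dx, dy, pen })) and `p2p` like an ml5.js pix2pix object (transfer(el, cb)
// with cb(err, { src })). DoodlePipeline and maxStrokes are hypothetical, not ml5.js API.
class DoodlePipeline {
  constructor(rnn, p2p, maxStrokes = 250) {
    this.rnn = rnn;
    this.p2p = p2p;
    this.maxStrokes = maxStrokes; // safety cap in case the model never emits "end"
    this.strokes = [];
    this.state = "idle"; // idle -> generating -> refining -> done
  }

  // Sketch-board click: request strokes one at a time until the model says "end".
  complete(onFinished) {
    this.state = "generating";
    this.strokes = [];
    this.rnn.reset();
    const next = () => {
      this.rnn.generate((err, s) => {
        if (err) {
          this.state = "idle";
          return onFinished(err);
        }
        this.strokes.push(s);
        if (s.pen === "end" || this.strokes.length >= this.maxStrokes) {
          this.state = "refining"; // the user can now touch up the drawing with p5.js
          return onFinished(null, this.strokes);
        }
        next();
      });
    };
    next();
  }

  // Transfer button: send the (refined) canvas element to pix2pix for coloring.
  transfer(canvasElement, onImage) {
    if (this.state !== "refining" && this.state !== "done") {
      return onImage(new Error("complete the sketch before transferring"));
    }
    this.p2p.transfer(canvasElement, (err, result) => {
      if (err) return onImage(err);
      this.state = "done";
      onImage(null, result.src); // image source of the colored output
    });
  }
}
```

In a p5.js sketch, one would presumably construct it with `ml5.sketchRNN("cat")` and an `ml5.pix2pix(...)` model, call `complete` from `mousePressed()`, draw the returned strokes, and call `transfer(canvas.elt, ...)` from the button handler, showing the result with `createImg(src)`. Injecting the models keeps the flow logic separate from the browser-only parts.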

Although the results were not as good as I expected, they at least showed that both models were actually doing something. I came up with two ways to improve the outcome:

1. Retrain pix2pix() on a low-quality sketch dataset such as Quick Draw, to improve its ability to recognize rough drawings.
2. Retrain SketchRNN() on high-quality doodles, so that it generates sketches clean enough for pix2pix() to recognize.

Due to the time limit, I only used the cat model for SketchRNN. Given more time, I might add more models for the user to choose from, and find a way for the machine to predict what the user wants to draw and choose the model automatically. Besides retraining the models, future work also includes improving the UI and adding more instructions.