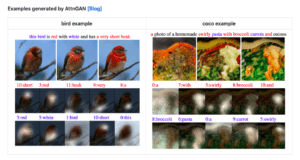

AttnGAN is an example of an attentive generative adversarial network, developed and compiled into a browser-testable version by Cris Valenzuela form NYU ITP. Its primary task is generating images out of text input. The images are based on a dataset of 14,000 photos.

The generative adversarial neural network in use is trying to generate an image by matching it with a set of images marked as original. It is a pair where one component tries to generate a fake image, and another component (neural network) classifies it as real or fake. The generative component, in turn, tries to pass the fake image as real, and the aim of the cycle is to make fake images unrecognisable from the real ones.

In some cases, the model achieves some impressive results, for example when the text includes mentions of certain animals (birds). However, it does not generate photorealistic images most of the time, and fails completely when confronted with more complex sentences. What I find most interesting about it is the visual representations of abstract concepts, such as: “the importance of being yourself” or “y tho”.

In future work, I would like to use the concept of GANs to create my own inferences. Perhaps, I could find better datasets to accomplish this.

My specific idea so far is to make a neural network that recognises corporate logos in public spaces.