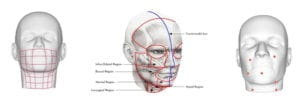

I came across an especially interesting project being developed at the MIT Media Lab called “alterego”. It is a wearable AI headset that detects and interprets the user’s subvocalization (talking in your head). The headset is equipped with electrodes that pick up the faint facial and vocal cord muscle movements that occur during internal vocalization. These movements are invisible to the human eye, but the headset detects them and feeds the signals into a machine learning system that maps specific signal patterns to words.

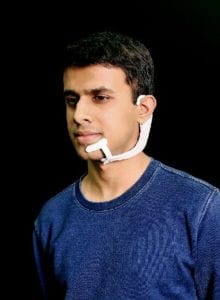

The device is worn on the ear and extends along the user’s jaw and cheek so that it can receive signals from several facial muscles. With alterego, the user can complete tasks such as interacting with software without speaking aloud. The device responds through bone conduction, which transmits sound through the bones of the skull rather than through the open air.

The lead developer, Arnav Kapur, wanted to create an AI device that felt more “internal”, as if it were an extension of the human body. Kapur sees alterego as a “second self” where the human mind and the computer intersect.

Currently, the prototype achieves about 90% accuracy on application-specific vocabularies and requires training for each individual user. However, Kapur envisions alterego being seamlessly integrated into everyday life, offering a new level of privacy and effortless communication, as well as aiding people with speech impairments.

Project Link: https://www.media.mit.edu/projects/alterego/overview/