I wanted to use poseNet to build a simple, interactive project that would show me how well the model works and how accurately it detects parts of the body.

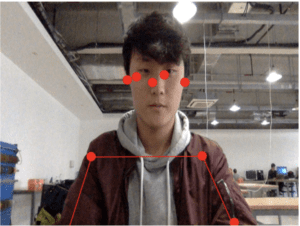

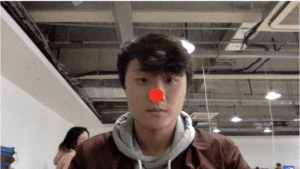

First, I loaded the model into my sketch and drew an ellipse at each detected keypoint on the body, creating a ‘skeleton’. There were some small issues with video noise, as the points kept jumping from one spot to another, but this was probably due to the lighting.
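
Drawing the skeleton comes down to looping over the detected keypoints and drawing an ellipse at each one. The TypeScript sketch below isolates the filtering part of that loop so it can run outside the browser. It assumes keypoints shaped like ml5's poseNet output (`part`, `score`, `position.x`, `position.y`), and the 0.2 cutoff in `MIN_SCORE` is an assumed value, not one taken from the original sketch. Skipping low-confidence points is one way to reduce some of the jumping, although it won't fix poor lighting.

```typescript
// Assumed keypoint shape, modelled on ml5's poseNet results:
// each pose has a `keypoints` array of { part, score, position: { x, y } }.
interface Keypoint {
  part: string;
  score: number; // model confidence in [0, 1]
  position: { x: number; y: number };
}

// Hypothetical confidence cutoff; tune it for your lighting and camera.
const MIN_SCORE = 0.2;

// Keep only the keypoints the model is reasonably confident about,
// so the sketch does not draw ellipses at guessed positions.
function confidentKeypoints(
  keypoints: Keypoint[],
  minScore: number = MIN_SCORE,
): Keypoint[] {
  return keypoints.filter((k) => k.score >= minScore);
}

// Example frame: the low-confidence wrist is dropped.
const frame: Keypoint[] = [
  { part: "nose", score: 0.98, position: { x: 320, y: 180 } },
  { part: "leftWrist", score: 0.05, position: { x: 12, y: 470 } },
];
const visible = confidentKeypoints(frame);
```

In p5.js, each keypoint in `visible` would then be drawn with something like `ellipse(k.position.x, k.position.y, 10, 10)`.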

Next, I had it track only the nose, since I assumed it would be the most stable feature.
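
Tracking a single feature means picking one named keypoint out of the pose. The sketch below does that by part name. As an optional extra that the original project does not mention, it also eases the tracked point toward each new reading (an exponential moving average, the same idea as p5's `lerp`) to soften frame-to-frame jumps. The helper names `findPart` and `smooth`, the 0.2 confidence cutoff, and the 0.3 easing factor are all hypothetical.

```typescript
// Assumed keypoint shape, modelled on ml5's poseNet results.
interface Keypoint {
  part: string;
  score: number;
  position: { x: number; y: number };
}

interface Point {
  x: number;
  y: number;
}

// Hypothetical helper: return the named part (e.g. "nose"), or undefined
// if it is missing or the model's confidence is below `minScore`.
function findPart(
  keypoints: Keypoint[],
  part: string,
  minScore: number = 0.2,
): Point | undefined {
  const k = keypoints.find((kp) => kp.part === part && kp.score >= minScore);
  return k ? { x: k.position.x, y: k.position.y } : undefined;
}

// Hypothetical helper: move `amount` of the way from the previous position
// toward the new reading. Smaller amounts are steadier but lag more.
function smooth(prev: Point | undefined, next: Point, amount: number = 0.3): Point {
  if (prev === undefined) return next; // first frame: nothing to ease from
  return {
    x: prev.x + (next.x - prev.x) * amount,
    y: prev.y + (next.y - prev.y) * amount,
  };
}
```

Per frame, something like `const nose = findPart(pose.keypoints, "nose"); if (nose) tracked = smooth(tracked, nose);` would also keep the last good position whenever the nose briefly drops out.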

For my project, I was inspired in part by Braitenberg Vehicles, the simple robots first described by Valentino Braitenberg, and specifically the versions built at MIT’s Media Lab. The robots use very simple sensors, yet the interactions between them appear quite complex. I especially liked the relationship between ‘Attractive’ and ‘Repulsive’, two robots that act a bit like magnets: ‘Repulsive’ moves in a straight line towards ‘Attractive’, but once it comes within a certain distance, ‘Attractive’ moves away from ‘Repulsive’ until there is enough distance between the two robots.
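
That behaviour can be approximated as a simple per-tick simulation. The TypeScript sketch below is one interpretation of the description above, not the Media Lab robots' actual control logic. `threshold` (how close ‘Repulsive’ may get before ‘Attractive’ flees) and `speed` (units moved per tick) are hypothetical parameters, and both robots are assumed to move at the same speed.

```typescript
interface Vec {
  x: number;
  y: number;
}

function distance(a: Vec, b: Vec): number {
  return Math.hypot(b.x - a.x, b.y - a.y);
}

// Move `from` by `step` units along the straight line toward `to`
// (a negative step moves directly away from `to`).
function moveAlong(from: Vec, to: Vec, step: number): Vec {
  const d = distance(from, to);
  if (d === 0) return { ...from }; // no defined direction
  return {
    x: from.x + ((to.x - from.x) / d) * step,
    y: from.y + ((to.y - from.y) / d) * step,
  };
}

// One tick: 'Repulsive' heads straight for 'Attractive' (without overshooting);
// if that brings it inside `threshold`, 'Attractive' backs away by `speed`.
function stepPair(
  repulsive: Vec,
  attractive: Vec,
  threshold: number,
  speed: number,
): [Vec, Vec] {
  const gap = distance(repulsive, attractive);
  const nextRepulsive = moveAlong(repulsive, attractive, Math.min(speed, gap));
  const nextAttractive =
    distance(nextRepulsive, attractive) < threshold
      ? moveAlong(attractive, nextRepulsive, -speed)
      : { ...attractive };
  return [nextRepulsive, nextAttractive];
}

// Example run: the pair settles into a chase about `threshold` apart.
let r: Vec = { x: 0, y: 0 };
let a: Vec = { x: 100, y: 0 };
for (let i = 0; i < 50; i++) {
  [r, a] = stepPair(r, a, 30, 5);
}
```

Because both robots share one speed here, the gap stays pinned at `threshold`, which gives the magnet-like standoff described above.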

‘Attractive and Repulsive’ from the MIT Media Lab Braitenberg Vehicle Abstract:

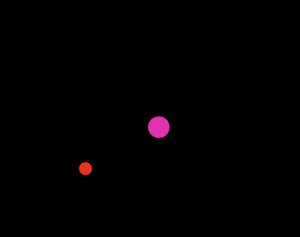

The setup I envisioned was simple: the two robots would be represented by ellipses, one acting as ‘Repulsive’ and controlled by the user, and the other acting as ‘Attractive’ and shying away from the user-controlled ellipse.

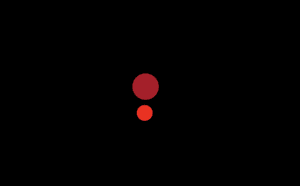

To begin, I took the original poseNet webcam example code and modified it to detect the left wrist (since the video is mirrored, this is actually the user’s right arm), so that the user can move the ellipse with their arm. I drew a black background over the footage and drew a second ellipse in the center of the canvas. To make this ellipse act ‘repulsed’ by the user-controlled ellipse, I measured the distance between the two shapes and had the ‘Attractive’ ellipse move away whenever the user’s ellipse came too close. Finally, I added some small details, like the ‘jitter’ effect, color, and sound, to make it more interactive.
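
The per-frame update described above might look roughly like the TypeScript sketch below, kept free of p5.js so it can run on its own. Several pieces are assumptions: `mirrorX` assumes the video is flipped when drawn while the wrist coordinates are not, the `threshold` and `speed` values are hypothetical, and `jitter` guesses that the ‘jitter’ effect is a small random offset applied each frame.

```typescript
interface Vec {
  x: number;
  y: number;
}

// If the webcam image is drawn mirrored but keypoints are not,
// flip the keypoint's x so it lines up with what the user sees.
function mirrorX(p: Vec, canvasWidth: number): Vec {
  return { x: canvasWidth - p.x, y: p.y };
}

// Push `target` straight away from `threat` while they are closer than
// `threshold`, then clamp it to the canvas so it cannot flee off-screen.
function flee(
  target: Vec,
  threat: Vec,
  threshold: number,
  speed: number,
  width: number,
  height: number,
): Vec {
  const dx = target.x - threat.x;
  const dy = target.y - threat.y;
  const d = Math.hypot(dx, dy);
  if (d >= threshold || d === 0) return { ...target }; // far enough, or no direction
  const clamp = (v: number, max: number) => Math.min(Math.max(v, 0), max);
  return {
    x: clamp(target.x + (dx / d) * speed, width),
    y: clamp(target.y + (dy / d) * speed, height),
  };
}

// Hypothetical 'jitter': offset each axis by up to +/- amount.
// `rand` should return values in [0, 1) like Math.random; it is injectable for testing.
function jitter(p: Vec, amount: number, rand: () => number = Math.random): Vec {
  return {
    x: p.x + (rand() * 2 - 1) * amount,
    y: p.y + (rand() * 2 - 1) * amount,
  };
}
```

Each frame would then run roughly `hand = mirrorX(wrist, width)` and `ball = flee(ball, hand, 150, 4, width, height)`, and draw the ball at `jitter(ball, 2)`; all three numbers are placeholders.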

Overall, I really liked how it turned out, although the detection is somewhat erratic. I found that holding your arm in front of a white background gives the most stable tracking, although the nose is still the most stable feature overall.
