For this week’s project, I experimented with different ml5.js models and made several attempts to implement them in interesting and/or useful ways.

My first idea involved the KNN classifier. I had been playing with two examples on the ml5.js website: KNN Classifier with Feature Extractor and KNN Classifier with PoseNet. Their ability to distinguish between different poses really interested me, and I wondered whether it could be used to classify gestures from sign languages. I then trained the classifier on my own data, using simple hand signs such as “Hello” and “Thank you”. However, I discovered that it was difficult for the algorithm to pick up subtle differences in my hand movements. Only when there were major body movements did the classifier become really accurate.

After some more brainstorming, I decided on the idea of turning webcam images into random text. This combines two ml5.js models: the image classifier and charRNN. It works like this:

1. We capture an image from the webcam, and a pre-trained model classifies it.

2. The result from step 1 is stored. If the result is too long, only the first element is used.

3. We feed the result to charRNN as the first input (the seed) and let the model predict what text might come next.

4. Steps 1 to 3 can be repeated. We can also tune two parameters, length and temperature, to produce different final output.
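The steps above can be sketched roughly as follows. This is only a minimal illustration, not the project's actual code: `pickSeed` and `imageToText` are hypothetical names, the two models are passed in as plain functions so the logic stays independent of any particular ml5.js version, and I am assuming the classifier returns an array of `{ label, confidence }` objects whose label may be a comma-separated list, and that the text generator returns an object with a `sample` string.

```javascript
// Hypothetical helper (step 2): keep only the first element of a
// comma-separated label, e.g. "tabby, tabby cat" -> "tabby".
function pickSeed(label) {
  return String(label).split(',')[0].trim();
}

// Hypothetical pipeline (steps 1 to 3).
// `classify` - assumed: async function resolving to [{ label, confidence }, ...]
// `generate` - assumed: async function taking { seed, length, temperature }
//              and resolving to { sample }
async function imageToText(classify, generate, options = {}) {
  // The default values here are arbitrary placeholders, not tuned settings.
  const { length = 100, temperature = 0.5 } = options;

  // Step 1: classify the current webcam frame.
  const results = await classify();
  if (!results || results.length === 0) {
    throw new Error('The classifier returned no results');
  }

  // Step 2: store the top result, shortened to its first element.
  const seed = pickSeed(results[0].label);

  // Step 3: use it as the seed and let the text model continue from there.
  const output = await generate({ seed, length, temperature });

  return { seed, text: output.sample };
}
```

In a browser sketch, `classify` could wrap the `classify()` method of an `ml5.imageClassifier` attached to the video element, and `generate` could wrap the `generate()` method of an `ml5.charRNN` model. The exact callback and result shapes differ between ml5.js versions, so treat that wiring as an assumption to check against the version you load. Repeating the process (step 4) is then just a matter of calling `imageToText` again with different `length` and `temperature` values.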

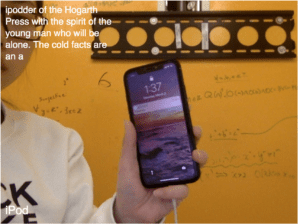

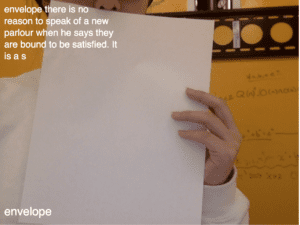

Here are some sample results:

There is a demo